Mirror of https://github.com/qodo-ai/pr-agent.git (synced 2025-07-21 04:50:39 +08:00)

Compare commits: tr/static_ ... hl/jira_se (259 commits)

| Author | SHA1 | Date | |

|---|---|---|---|

| 5cc9dcf72a | |||

| a87dd37f66 | |||

| 20c506d2e0 | |||

| a1921d931c | |||

| 31aa460f5f | |||

| 23678c1d4d | |||

| 8d7825233a | |||

| c9f02e63e1 | |||

| c2f1f2dba0 | |||

| 3ab2cac089 | |||

| 989670b159 | |||

| 7e8361b5fd | |||

| eaaaf6a6a2 | |||

| 07f3933f6d | |||

| 84786495ed | |||

| d09aa1b13e | |||

| e9615c6994 | |||

| f3ee4a75b5 | |||

| 452abe2e18 | |||

| a768969d37 | |||

| 9ef9198468 | |||

| d0ea901bca | |||

| 57089c931b | |||

| 03d2bea50b | |||

| 721d38d4ed | |||

| bbec5d9cc9 | |||

| 0be2750dfa | |||

| 5e5c251cd0 | |||

| 048ae8ee9e | |||

| 1a77e9afaf | |||

| 184a52d325 | |||

| 3d38060dff | |||

| c4dc263f2c | |||

| f67cc0dd18 | |||

| 872b27bfd8 | |||

| cb88489dbe | |||

| da786b8020 | |||

| 6a51b8501d | |||

| d34edb83ff | |||

| c6eb253ed1 | |||

| a61f1889d1 | |||

| 75a120952c | |||

| f9a7b18073 | |||

| 6352e6e3bf | |||

| f49217e058 | |||

| e11cec7d9e | |||

| c45cde93ef | |||

| 9e25667d97 | |||

| 7dc9e73423 | |||

| e3d779c30d | |||

| 88a93bdcd7 | |||

| 3c31048afc | |||

| fc5dda0957 | |||

| 936894e4d1 | |||

| dec2859fc4 | |||

| a4d9a65fc6 | |||

| 683108d3a5 | |||

| e68e100117 | |||

| 158892047b | |||

| 7d5e59cd40 | |||

| e8fc351ce9 | |||

| 43e91b0df7 | |||

| 39a461b3b2 | |||

| 19ade4acf0 | |||

| 10f8b522db | |||

| d26ca4f71c | |||

| 9160f756db | |||

| 84c3a7b969 | |||

| d9f9cc65b3 | |||

| 7d99c0db8e | |||

| fe20a8c5e7 | |||

| 43c95106d4 | |||

| 1dd5f0b848 | |||

| 8610aa27a4 | |||

| 91bf3c0749 | |||

| 159155785e | |||

| eabc296246 | |||

| b44030114e | |||

| 1d6f87be3b | |||

| a7c6fa7bd2 | |||

| a825aec5f3 | |||

| 4df097c228 | |||

| 6871e1b27a | |||

| 4afe05761d | |||

| 7d1b6c2f0a | |||

| 3547cf2057 | |||

| f2043d639c | |||

| 6240de3898 | |||

| f08b20c667 | |||

| e64b468556 | |||

| d48d14dac7 | |||

| eb0c959ca9 | |||

| 741a70ad9d | |||

| 22ee03981e | |||

| b1336e7d08 | |||

| 751caca141 | |||

| 612004727c | |||

| 577ee0241d | |||

| a141ca133c | |||

| a14b6a580d | |||

| cc5005c490 | |||

| 3a5d0f54ce | |||

| cd8ba4f59f | |||

| fe27f96bf1 | |||

| 2c3aa7b2dc | |||

| c934523f2d | |||

| 2f4545dc15 | |||

| cbd490b3d7 | |||

| b07f96d26a | |||

| 065777040f | |||

| 9c82047dc3 | |||

| e0c15409bb | |||

| d956c72cb6 | |||

| dfb3d801cf | |||

| 5c5a3e267c | |||

| f9380c2440 | |||

| e6a1f14c0e | |||

| 6339845eb4 | |||

| 732cc18fd6 | |||

| 84d0f80c81 | |||

| ee26bf35c1 | |||

| 7a5e9102fd | |||

| a8c97bfa73 | |||

| af653a048f | |||

| d2663f959a | |||

| e650fe9ce9 | |||

| daeca42ae8 | |||

| 04496f9b0e | |||

| 0eacb3e35e | |||

| c5ed2f040a | |||

| c394fc2767 | |||

| 157251493a | |||

| 4a982a849d | |||

| 6e3544f523 | |||

| bf3ebbb95f | |||

| eb44ecb1be | |||

| 45bae48701 | |||

| b2181e4c79 | |||

| 5939d3b17b | |||

| c1f4964a55 | |||

| 022e407d84 | |||

| 93ba2d239a | |||

| fa49dd5167 | |||

| 16029e66ad | |||

| 7bd6713335 | |||

| ef3241285d | |||

| d9ef26dc1c | |||

| 02949b2b96 | |||

| d301c76b65 | |||

| dacb45dd8a | |||

| 443d06df06 | |||

| 15e8c988a4 | |||

| 60fab1b301 | |||

| 84c1c1b1ca | |||

| 7419a6d51a | |||

| ee58a92fb3 | |||

| 6b64924355 | |||

| 2f5e8472b9 | |||

| 852bb371af | |||

| 7c90e44656 | |||

| 81dea65856 | |||

| a3d572fb69 | |||

| 7186bf4bb3 | |||

| 115fca58a3 | |||

| cbf60ca636 | |||

| 64ac45d03b | |||

| db062e3e35 | |||

| e85472f367 | |||

| 597f1c6f83 | |||

| 66d4f56777 | |||

| fbfb9e0881 | |||

| 223b5408d7 | |||

| 509135a8d4 | |||

| 8db7151bf0 | |||

| b8cfcdbc12 | |||

| a3cd433184 | |||

| 0f284711e6 | |||

| 67b46e7f30 | |||

| 68f2cec077 | |||

| 8e94c8b2f5 | |||

| a221f8edd0 | |||

| 3b47c75c32 | |||

| 2e34d7a05a | |||

| 204a0a7912 | |||

| 9786499fa6 | |||

| 4f14742233 | |||

| c077c71fdb | |||

| 7b5a3d45bd | |||

| c6c6a9b4f0 | |||

| a5e7c37fcc | |||

| 12a9e13509 | |||

| 0b4b6b1589 | |||

| bf049381bd | |||

| 65c917b84b | |||

| b4700bd7c0 | |||

| a957554262 | |||

| d491a942cc | |||

| 6c55a2720a | |||

| f1d0401f82 | |||

| c5bd09e2c9 | |||

| c70acdc7cd | |||

| 1c6f7f9c06 | |||

| 0b32b253ca | |||

| 3efd2213f2 | |||

| 0705bd03c4 | |||

| 927d005e99 | |||

| 0dccfdbbf0 | |||

| dcb7b66fd7 | |||

| b7437147af | |||

| e82afdd2cb | |||

| 0946da3810 | |||

| d1f4069d3f | |||

| d45a892fd2 | |||

| 4a91b8ed8d | |||

| fb85cb721a | |||

| 3a52122677 | |||

| 13c6ed9098 | |||

| 9dd1995464 | |||

| eb804d0b34 | |||

| e0ee878e84 | |||

| 27abe48a34 | |||

| 8fe504a7ec | |||

| f6ba49819a | |||

| 22bf7af9ba | |||

| 840e8c4d6b | |||

| 49f8d86c77 | |||

| 05827d125b | |||

| 74ee9a333e | |||

| e9769fa602 | |||

| 3adff8cf4c | |||

| d249b47ce9 | |||

| 892d1ad15c | |||

| 76d95bb6d7 | |||

| 7db9a03805 | |||

| 4eef3e9190 | |||

| 014ea884d2 | |||

| c1c5ee7cfb | |||

| 3ac1ddc5d7 | |||

| e6c56c7355 | |||

| 727b08fde3 | |||

| 5d9d48dc82 | |||

| 8e8062fefc | |||

| 23a3e208a5 | |||

| bb84063ef2 | |||

| a476e85fa7 | |||

| 4b05a3e858 | |||

| cd158f24f6 | |||

| ada0a3d10f | |||

| ddf1afb23f | |||

| e2b5489495 | |||

| 6459535e39 | |||

| 5a719c1904 | |||

| 1a2ea2c87d | |||

| ca79bafab3 | |||

| 618224beef | |||

| 481c2a5985 | |||

| e21d9dc9e3 | |||

| 6872a7076b | |||

| c2ae429805 |

2

.github/workflows/build-and-test.yaml

vendored

@ -37,5 +37,3 @@ jobs:

name: Test dev docker
run: |
  docker run --rm codiumai/pr-agent:test pytest -v tests/unittest

4

.github/workflows/code_coverage.yaml

vendored

@ -37,7 +37,7 @@ jobs:

- id: code_cov
  name: Test dev docker
  run: |
    docker run --name test_container codiumai/pr-agent:test pytest tests/unittest --cov=pr_agent --cov-report term --cov-report xml:coverage.xml
    docker run --name test_container codiumai/pr-agent:test pytest tests/unittest --cov=pr_agent --cov-report term --cov-report xml:coverage.xml
    docker cp test_container:/app/coverage.xml coverage.xml
    docker rm test_container

@ -51,4 +51,4 @@ jobs:

- name: Upload coverage to Codecov
  uses: codecov/codecov-action@v4.0.1
  with:
    token: ${{ secrets.CODECOV_TOKEN }}
    token: ${{ secrets.CODECOV_TOKEN }}

6

.github/workflows/docs-ci.yaml

vendored

@ -1,4 +1,4 @@

name: docs-ci
name: docs-ci
on:
  push:
    branches:

@ -20,14 +20,14 @@ jobs:

- uses: actions/setup-python@v5
  with:
    python-version: 3.x
- run: echo "cache_id=$(date --utc '+%V')" >> $GITHUB_ENV
- run: echo "cache_id=$(date --utc '+%V')" >> $GITHUB_ENV
- uses: actions/cache@v4
  with:
    key: mkdocs-material-${{ env.cache_id }}
    path: .cache
    restore-keys: |
      mkdocs-material-
- run: pip install mkdocs-material
- run: pip install mkdocs-material
- run: pip install "mkdocs-material[imaging]"
- run: pip install mkdocs-glightbox
- run: mkdocs gh-deploy -f docs/mkdocs.yml --force

2

.github/workflows/e2e_tests.yaml

vendored

@ -43,4 +43,4 @@ jobs:

- id: test3
  name: E2E bitbucket app
  run: |
    docker run -e BITBUCKET.USERNAME=${{ secrets.BITBUCKET_USERNAME }} -e BITBUCKET.PASSWORD=${{ secrets.BITBUCKET_PASSWORD }} --rm codiumai/pr-agent:test pytest -v tests/e2e_tests/test_bitbucket_app.py
    docker run -e BITBUCKET.USERNAME=${{ secrets.BITBUCKET_USERNAME }} -e BITBUCKET.PASSWORD=${{ secrets.BITBUCKET_PASSWORD }} --rm codiumai/pr-agent:test pytest -v tests/e2e_tests/test_bitbucket_app.py

5

.github/workflows/pr-agent-review.yaml

vendored

@ -1,4 +1,4 @@

# This workflow enables developers to call PR-Agents `/[actions]` in PR's comments and upon PR creation.
# This workflow enables developers to call PR-Agents `/[actions]` in PR's comments and upon PR creation.
# Learn more at https://www.codium.ai/pr-agent/
# This is v0.2 of this workflow file

@ -30,6 +30,3 @@ jobs:

GITHUB_ACTION_CONFIG.AUTO_DESCRIBE: true
GITHUB_ACTION_CONFIG.AUTO_REVIEW: true
GITHUB_ACTION_CONFIG.AUTO_IMPROVE: true

17

.github/workflows/pre-commit.yml

vendored

Normal file

@ -0,0 +1,17 @@

# disabled. We might run it manually if needed.
name: pre-commit

on:
  workflow_dispatch:
  # pull_request:
  # push:
  #   branches: [main]

jobs:
  pre-commit:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - uses: actions/setup-python@v5
      # SEE https://github.com/pre-commit/action
      - uses: pre-commit/action@v3.0.1

2

.gitignore

vendored

@ -8,4 +8,4 @@ dist/

*.egg-info/
build/
.DS_Store
docs/.cache/
docs/.cache/

@ -1,6 +1,3 @@

[pr_reviewer]
enable_review_labels_effort = true
enable_auto_approval = true

[config]
model="claude-3-5-sonnet"

46

.pre-commit-config.yaml

Normal file

@ -0,0 +1,46 @@

# See https://pre-commit.com for more information
# See https://pre-commit.com/hooks.html for more hooks

default_language_version:
  python: python3

repos:
  - repo: https://github.com/pre-commit/pre-commit-hooks
    rev: v5.0.0
    hooks:
      - id: check-added-large-files
      - id: check-toml
      - id: check-yaml
      - id: end-of-file-fixer
      - id: trailing-whitespace
  # - repo: https://github.com/rhysd/actionlint
  #   rev: v1.7.3
  #   hooks:
  #     - id: actionlint
  - repo: https://github.com/pycqa/isort
    # rev must match what's in dev-requirements.txt
    rev: 5.13.2
    hooks:
      - id: isort
  # - repo: https://github.com/PyCQA/bandit
  #   rev: 1.7.10
  #   hooks:
  #     - id: bandit
  #       args: [
  #         "-c", "pyproject.toml",
  #       ]
  # - repo: https://github.com/astral-sh/ruff-pre-commit
  #   rev: v0.7.1
  #   hooks:
  #     - id: ruff
  #       args:
  #         - --fix
  #     - id: ruff-format
  # - repo: https://github.com/PyCQA/autoflake
  #   rev: v2.3.1
  #   hooks:
  #     - id: autoflake
  #       args:
  #         - --in-place
  #         - --remove-all-unused-imports
  #         - --remove-unused-variables

@ -1,10 +1,11 @@

FROM python:3.10 as base
FROM python:3.12 as base

WORKDIR /app
ADD pyproject.toml .
ADD requirements.txt .
RUN pip install . && rm pyproject.toml requirements.txt
ENV PYTHONPATH=/app
ADD docs docs
ADD pr_agent pr_agent
ADD github_action/entrypoint.sh /
RUN chmod +x /entrypoint.sh

2

LICENSE

@ -199,4 +199,4 @@

distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
limitations under the License.

@ -1,2 +1,2 @@

recursive-include pr_agent *.toml
recursive-exclude pr_agent *.secrets.toml
recursive-exclude pr_agent *.secrets.toml

129

README.md

@ -10,24 +10,22 @@

</picture>
<br/>
CodiumAI PR-Agent aims to help efficiently review and handle pull requests, by providing AI feedback and suggestions
Qode Merge PR-Agent aims to help efficiently review and handle pull requests, by providing AI feedback and suggestions
</div>

[](https://github.com/Codium-ai/pr-agent/blob/main/LICENSE)
[](https://chromewebstore.google.com/detail/pr-agent-chrome-extension/ephlnjeghhogofkifjloamocljapahnl)
[](https://pr-agent-docs.codium.ai/finetuning_benchmark/)
[](https://github.com/apps/qodo-merge-pro/)
[](https://github.com/apps/qodo-merge-pro-for-open-source/)
[](https://discord.com/channels/1057273017547378788/1126104260430528613)
[](https://twitter.com/codiumai)
[](https://www.codium.ai/images/pr_agent/cheat_sheet.pdf)
<a href="https://github.com/Codium-ai/pr-agent/commits/main">
<img alt="GitHub" src="https://img.shields.io/github/last-commit/Codium-ai/pr-agent/main?style=for-the-badge" height="20">
</a>
<a href="https://github.com/Codium-ai/pr-agent/commits/main">
<img alt="GitHub" src="https://img.shields.io/github/last-commit/Codium-ai/pr-agent/main?style=for-the-badge" height="20">
</a>
</div>

### [Documentation](https://pr-agent-docs.codium.ai/)
- See the [Installation Guide](https://qodo-merge-docs.qodo.ai/installation/) for instructions on installing PR-Agent on different platforms.
- See the [Installation Guide](https://qodo-merge-docs.qodo.ai/installation/) for instructions on installing Qode Merge PR-Agent on different platforms.

- See the [Usage Guide](https://qodo-merge-docs.qodo.ai/usage-guide/) for instructions on running PR-Agent tools via different interfaces, such as CLI, PR Comments, or by automatically triggering them when a new PR is opened.
- See the [Usage Guide](https://qodo-merge-docs.qodo.ai/usage-guide/) for instructions on running Qode Merge PR-Agent tools via different interfaces, such as CLI, PR Comments, or by automatically triggering them when a new PR is opened.

- See the [Tools Guide](https://qodo-merge-docs.qodo.ai/tools/) for a detailed description of the different tools, and the available configurations for each tool.

@ -40,48 +38,43 @@ CodiumAI PR-Agent aims to help efficiently review and handle pull requests, by p

- [PR-Agent Pro 💎](https://pr-agent-docs.codium.ai/overview/pr_agent_pro/)
- [How it works](#how-it-works)
- [Why use PR-Agent?](#why-use-pr-agent)


## News and Updates

### September 21, 2024
Need help with PR-Agent? New feature - simply comment `/help "your question"` in a pull request, and PR-Agent will provide you with the [relevant documentation](https://github.com/Codium-ai/pr-agent/pull/1241#issuecomment-2365259334).
### December 2, 2024

<kbd><img src="https://www.codium.ai/images/pr_agent/pr_help_chat.png" width="768"></kbd>
Open-source repositories can now freely use Qodo Merge Pro, and enjoy easy one-click installation using a marketplace [app](https://github.com/apps/qodo-merge-pro-for-open-source).

<kbd><img src="https://github.com/user-attachments/assets/b0838724-87b9-43b0-ab62-73739a3a855c" width="512"></kbd>

See [here](https://qodo-merge-docs.qodo.ai/installation/pr_agent_pro/) for more details about installing Qodo Merge Pro for private repositories.


### September 12, 2024
[Dynamic context](https://pr-agent-docs.codium.ai/core-abilities/dynamic_context/) is now the default option for context extension.
This feature enables PR-Agent to dynamically adjusting the relevant context for each code hunk, while avoiding overflowing the model with too much information.
### November 18, 2024

### September 3, 2024
A new mode was enabled by default for code suggestions - `--pr_code_suggestions.focus_only_on_problems=true`:

New version of PR-Agent, v0.24 was released. See the [release notes](https://github.com/Codium-ai/pr-agent/releases/tag/v0.24) for more information.
- This option reduces the number of code suggestions received
- The suggestions will focus more on identifying and fixing code problems, rather than style considerations like best practices, maintainability, or readability.
- The suggestions will be categorized into just two groups: "Possible Issues" and "General".

### August 26, 2024
Still, if you prefer the previous mode, you can set `--pr_code_suggestions.focus_only_on_problems=false` in the [configuration file](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/).

New version of [PR Agent Chrome Extension](https://chromewebstore.google.com/detail/pr-agent-chrome-extension/ephlnjeghhogofkifjloamocljapahnl) was released, with full support of context-aware **PR Chat**. This novel feature is free to use for any open-source repository. See more details in [here](https://pr-agent-docs.codium.ai/chrome-extension/#pr-chat).
**Example results:**

<kbd><img src="https://www.codium.ai/images/pr_agent/pr_chat_1.png" width="768"></kbd>
Original mode

<kbd><img src="https://www.codium.ai/images/pr_agent/pr_chat_2.png" width="768"></kbd>
<kbd><img src="https://qodo.ai/images/pr_agent/code_suggestions_original_mode.png" width="512"></kbd>

Focused mode

<kbd><img src="https://qodo.ai/images/pr_agent/code_suggestions_focused_mode.png" width="512"></kbd>


### August 11, 2024
Increased PR context size for improved results, and enabled [asymmetric context](https://github.com/Codium-ai/pr-agent/pull/1114/files#diff-9290a3ad9a86690b31f0450b77acd37ef1914b41fabc8a08682d4da433a77f90R69-R70)

### August 10, 2024
Added support for [Azure devops pipeline](https://pr-agent-docs.codium.ai/installation/azure/) - you can now easily run PR-Agent as an Azure devops pipeline, without needing to set up your own server.


### August 5, 2024
Added support for [GitLab pipeline](https://pr-agent-docs.codium.ai/installation/gitlab/#run-as-a-gitlab-pipeline) - you can now run easily PR-Agent as a GitLab pipeline, without needing to set up your own server.

### July 28, 2024

(1) improved support for bitbucket server - [auto commands](https://github.com/Codium-ai/pr-agent/pull/1059) and [direct links](https://github.com/Codium-ai/pr-agent/pull/1061)

(2) custom models are now [supported](https://pr-agent-docs.codium.ai/usage-guide/changing_a_model/#custom-models)
### November 4, 2024

Qodo Merge PR Agent will now leverage context from Jira or GitHub tickets to enhance the PR Feedback. Read more about this feature
[here](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/)


## Overview

@ -89,40 +82,41 @@ Added support for [GitLab pipeline](https://pr-agent-docs.codium.ai/installation

Supported commands per platform:

| | | GitHub | Gitlab | Bitbucket | Azure DevOps |
| | | GitHub | GitLab | Bitbucket | Azure DevOps |
|-------|---------------------------------------------------------------------------------------------------------|:--------------------:|:--------------------:|:--------------------:|:------------:|
| TOOLS | Review | ✅ | ✅ | ✅ | ✅ |
| | ⮑ Incremental | ✅ | | | |
| | ⮑ [SOC2 Compliance](https://pr-agent-docs.codium.ai/tools/review/#soc2-ticket-compliance) 💎 | ✅ | ✅ | ✅ | |
| | Describe | ✅ | ✅ | ✅ | ✅ |
| | ⮑ [Inline File Summary](https://pr-agent-docs.codium.ai/tools/describe#inline-file-summary) 💎 | ✅ | | | |
| | Improve | ✅ | ✅ | ✅ | ✅ |
| | ⮑ Extended | ✅ | ✅ | ✅ | ✅ |
| | Ask | ✅ | ✅ | ✅ | ✅ |
| TOOLS | [Review](https://qodo-merge-docs.qodo.ai/tools/review/) | ✅ | ✅ | ✅ | ✅ |
| | [Describe](https://qodo-merge-docs.qodo.ai/tools/describe/) | ✅ | ✅ | ✅ | ✅ |
| | [Improve](https://qodo-merge-docs.qodo.ai/tools/improve/) | ✅ | ✅ | ✅ | ✅ |
| | [Ask](https://qodo-merge-docs.qodo.ai/tools/ask/) | ✅ | ✅ | ✅ | ✅ |
| | ⮑ [Ask on code lines](https://pr-agent-docs.codium.ai/tools/ask#ask-lines) | ✅ | ✅ | | |
| | [Custom Prompt](https://pr-agent-docs.codium.ai/tools/custom_prompt/) 💎 | ✅ | ✅ | ✅ | |
| | [Test](https://pr-agent-docs.codium.ai/tools/test/) 💎 | ✅ | ✅ | | |
| | Reflect and Review | ✅ | ✅ | ✅ | ✅ |
| | Update CHANGELOG.md | ✅ | ✅ | ✅ | ✅ |
| | Find Similar Issue | ✅ | | | |
| | [Add PR Documentation](https://pr-agent-docs.codium.ai/tools/documentation/) 💎 | ✅ | ✅ | | |
| | [Update CHANGELOG](https://qodo-merge-docs.qodo.ai/tools/update_changelog/) | ✅ | ✅ | ✅ | ✅ |
| | [Ticket Context](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/) 💎 | ✅ | ✅ | ✅ | |
| | [Utilizing Best Practices](https://qodo-merge-docs.qodo.ai/tools/improve/#best-practices) 💎 | ✅ | ✅ | ✅ | |
| | [PR Chat](https://qodo-merge-docs.qodo.ai/chrome-extension/features/#pr-chat) 💎 | ✅ | | | |
| | [Suggestion Tracking](https://qodo-merge-docs.qodo.ai/tools/improve/#suggestion-tracking) 💎 | ✅ | ✅ | | |
| | [CI Feedback](https://pr-agent-docs.codium.ai/tools/ci_feedback/) 💎 | ✅ | | | |
| | [PR Documentation](https://pr-agent-docs.codium.ai/tools/documentation/) 💎 | ✅ | ✅ | | |
| | [Custom Labels](https://pr-agent-docs.codium.ai/tools/custom_labels/) 💎 | ✅ | ✅ | | |
| | [Analyze](https://pr-agent-docs.codium.ai/tools/analyze/) 💎 | ✅ | ✅ | | |
| | [CI Feedback](https://pr-agent-docs.codium.ai/tools/ci_feedback/) 💎 | ✅ | | | |
| | [Similar Code](https://pr-agent-docs.codium.ai/tools/similar_code/) 💎 | ✅ | | | |
| | [Custom Prompt](https://pr-agent-docs.codium.ai/tools/custom_prompt/) 💎 | ✅ | ✅ | ✅ | |
| | [Test](https://pr-agent-docs.codium.ai/tools/test/) 💎 | ✅ | ✅ | | |
| | | | | | |
| USAGE | CLI | ✅ | ✅ | ✅ | ✅ |
| | App / webhook | ✅ | ✅ | ✅ | ✅ |
| | Tagging bot | ✅ | | | |
| | Actions | ✅ |✅| ✅ |✅|
| USAGE | [CLI](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#local-repo-cli) | ✅ | ✅ | ✅ | ✅ |
| | [App / webhook](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#github-app) | ✅ | ✅ | ✅ | ✅ |
| | [Tagging bot](https://github.com/Codium-ai/pr-agent#try-it-now) | ✅ | | | |
| | [Actions](https://qodo-merge-docs.qodo.ai/installation/github/#run-as-a-github-action) | ✅ |✅| ✅ |✅|
| | | | | | |
| CORE | PR compression | ✅ | ✅ | ✅ | ✅ |
| | Repo language prioritization | ✅ | ✅ | ✅ | ✅ |
| CORE | [PR compression](https://qodo-merge-docs.qodo.ai/core-abilities/compression_strategy/) | ✅ | ✅ | ✅ | ✅ |
| | Adaptive and token-aware file patch fitting | ✅ | ✅ | ✅ | ✅ |
| | Multiple models support | ✅ | ✅ | ✅ | ✅ |
| | [Static code analysis](https://pr-agent-docs.codium.ai/core-abilities/#static-code-analysis) 💎 | ✅ | ✅ | ✅ | |
| | [Multiple models support](https://qodo-merge-docs.qodo.ai/usage-guide/changing_a_model/) | ✅ | ✅ | ✅ | ✅ |
| | [Local and global metadata](https://qodo-merge-docs.qodo.ai/core-abilities/metadata/) | ✅ | ✅ | ✅ | ✅ |
| | [Dynamic context](https://qodo-merge-docs.qodo.ai/core-abilities/dynamic_context/) | ✅ | ✅ | ✅ | ✅ |
| | [Self reflection](https://qodo-merge-docs.qodo.ai/core-abilities/self_reflection/) | ✅ | ✅ | ✅ | ✅ |
| | [Static code analysis](https://qodo-merge-docs.qodo.ai/core-abilities/static_code_analysis/) 💎 | ✅ | ✅ | ✅ | |
| | [Global and wiki configurations](https://pr-agent-docs.codium.ai/usage-guide/configuration_options/) 💎 | ✅ | ✅ | ✅ | |
| | [PR interactive actions](https://www.codium.ai/images/pr_agent/pr-actions.mp4) 💎 | ✅ | ✅ | | |
| | [Impact Evaluation](https://qodo-merge-docs.qodo.ai/core-abilities/impact_evaluation/) 💎 | ✅ | ✅ | | |
- 💎 means this feature is available only in [PR-Agent Pro](https://www.codium.ai/pricing/)

[//]: # (- Support for additional git providers is described in [here](./docs/Full_environments.md))

@ -183,14 +177,9 @@ ___

</kbd>
</p>
</div>
<hr>

<h4><a href="https://github.com/Codium-ai/pr-agent/pull/530">/generate_labels</a></h4>
<div align="center">
<p float="center">
<kbd><img src="https://www.codium.ai/images/pr_agent/geneare_custom_labels_main_short.png" width="300"></kbd>
</p>
</div>



[//]: # (<h4><a href="https://github.com/Codium-ai/pr-agent/pull/78#issuecomment-1639739496">/reflect_and_review:</a></h4>)

@ -261,7 +250,7 @@ Note that when you set your own PR-Agent or use CodiumAI hosted PR-Agent, there

1. **Fully managed** - We take care of everything for you - hosting, models, regular updates, and more. Installation is as simple as signing up and adding the PR-Agent app to your GitHub\GitLab\BitBucket repo.
2. **Improved privacy** - No data will be stored or used to train models. PR-Agent Pro will employ zero data retention, and will use an OpenAI account with zero data retention.
3. **Improved support** - PR-Agent Pro users will receive priority support, and will be able to request new features and capabilities.
4. **Extra features** -In addition to the benefits listed above, PR-Agent Pro will emphasize more customization, and the usage of static code analysis, in addition to LLM logic, to improve results.
4. **Extra features** -In addition to the benefits listed above, PR-Agent Pro will emphasize more customization, and the usage of static code analysis, in addition to LLM logic, to improve results.
See [here](https://qodo-merge-docs.qodo.ai/overview/pr_agent_pro/) for a list of features available in PR-Agent Pro.

@ -88,7 +88,7 @@ Significant documentation updates (see [Installation Guide](https://github.com/C

- codiumai/pr-agent:0.7-gitlab_webhook
- codiumai/pr-agent:0.7-github_polling
- codiumai/pr-agent:0.7-github_action


### Added::Algo
- New tool /similar_issue - Currently on GitHub app and CLI: indexes the issues in the repo, find the most similar issues to the target issue.
- Describe markers: Empower the /describe tool with a templating capability (see more details in https://github.com/Codium-ai/pr-agent/pull/273).

@ -1,9 +1,9 @@

FROM python:3.12.3 AS base

WORKDIR /app
ADD docs/chroma_db.zip /app/docs/chroma_db.zip
ADD pyproject.toml .
ADD requirements.txt .
ADD docs docs
RUN pip install . && rm pyproject.toml requirements.txt
ENV PYTHONPATH=/app

Binary file not shown.

Before: 15 KiB. After: 4.2 KiB.

@ -1,140 +1 @@

|

||||

<?xml version="1.0" encoding="utf-8"?>

|

||||

<!-- Generator: Adobe Illustrator 28.1.0, SVG Export Plug-In . SVG Version: 6.00 Build 0) -->

|

||||

<svg version="1.1" id="Layer_1" xmlns="http://www.w3.org/2000/svg" xmlns:xlink="http://www.w3.org/1999/xlink" x="0px" y="0px"

|

||||

width="64px" height="64px" viewBox="0 0 64 64" enable-background="new 0 0 64 64" xml:space="preserve">

|

||||

<g>

|

||||

<defs>

|

||||

<rect id="SVGID_1_" x="0.4" y="0.1" width="63.4" height="63.4"/>

|

||||

</defs>

|

||||

<clipPath id="SVGID_00000008836131916906499950000015813697852011234749_">

|

||||

<use xlink:href="#SVGID_1_" overflow="visible"/>

|

||||

</clipPath>

|

||||

<g clip-path="url(#SVGID_00000008836131916906499950000015813697852011234749_)">

|

||||

<path fill="#05E5AD" d="M21.4,9.8c3,0,5.9,0.7,8.5,1.9c-5.7,3.4-9.8,11.1-9.8,20.1c0,9,4,16.7,9.8,20.1c-2.6,1.2-5.5,1.9-8.5,1.9

|

||||

c-11.6,0-21-9.8-21-22S9.8,9.8,21.4,9.8z"/>

|

||||

|

||||

<radialGradient id="SVGID_00000150822754378345238340000008985053211526864828_" cx="-140.0905" cy="350.1757" r="4.8781" gradientTransform="matrix(-4.7708 -6.961580e-02 -0.1061 7.2704 -601.3099 -2523.8489)" gradientUnits="userSpaceOnUse">

|

||||

<stop offset="0" style="stop-color:#6447FF"/>

|

||||

<stop offset="6.666670e-02" style="stop-color:#6348FE"/>

|

||||

<stop offset="0.1333" style="stop-color:#614DFC"/>

|

||||

<stop offset="0.2" style="stop-color:#5C54F8"/>

|

||||

<stop offset="0.2667" style="stop-color:#565EF3"/>

|

||||

<stop offset="0.3333" style="stop-color:#4E6CEC"/>

|

||||

<stop offset="0.4" style="stop-color:#447BE4"/>

|

||||

<stop offset="0.4667" style="stop-color:#3A8DDB"/>

|

||||

<stop offset="0.5333" style="stop-color:#2F9FD1"/>

|

||||

<stop offset="0.6" style="stop-color:#25B1C8"/>

|

||||

<stop offset="0.6667" style="stop-color:#1BC0C0"/>

|

||||

<stop offset="0.7333" style="stop-color:#13CEB9"/>

|

||||

<stop offset="0.8" style="stop-color:#0DD8B4"/>

|

||||

<stop offset="0.8667" style="stop-color:#08DFB0"/>

|

||||

<stop offset="0.9333" style="stop-color:#06E4AE"/>

|

||||

<stop offset="1" style="stop-color:#05E5AD"/>

|

||||

</radialGradient>

|

||||

<path fill="url(#SVGID_00000150822754378345238340000008985053211526864828_)" d="M21.4,9.8c3,0,5.9,0.7,8.5,1.9

|

||||

c-5.7,3.4-9.8,11.1-9.8,20.1c0,9,4,16.7,9.8,20.1c-2.6,1.2-5.5,1.9-8.5,1.9c-11.6,0-21-9.8-21-22S9.8,9.8,21.4,9.8z"/>

|

||||

|

||||

<radialGradient id="SVGID_00000022560571240417802950000012439139323268113305_" cx="-191.7649" cy="385.7387" r="4.8781" gradientTransform="matrix(-2.5514 -0.7616 -0.8125 2.7217 -130.733 -1180.2209)" gradientUnits="userSpaceOnUse">

|

||||

<stop offset="0" style="stop-color:#6447FF"/>

|

||||

<stop offset="6.666670e-02" style="stop-color:#6348FE"/>

|

||||

<stop offset="0.1333" style="stop-color:#614DFC"/>

|

||||

<stop offset="0.2" style="stop-color:#5C54F8"/>

|

||||

<stop offset="0.2667" style="stop-color:#565EF3"/>

|

||||

<stop offset="0.3333" style="stop-color:#4E6CEC"/>

|

||||

<stop offset="0.4" style="stop-color:#447BE4"/>

|

||||

<stop offset="0.4667" style="stop-color:#3A8DDB"/>

|

||||

<stop offset="0.5333" style="stop-color:#2F9FD1"/>

|

||||

<stop offset="0.6" style="stop-color:#25B1C8"/>

|

||||

<stop offset="0.6667" style="stop-color:#1BC0C0"/>

|

||||

<stop offset="0.7333" style="stop-color:#13CEB9"/>

|

||||

<stop offset="0.8" style="stop-color:#0DD8B4"/>

|

||||

<stop offset="0.8667" style="stop-color:#08DFB0"/>

|

||||

<stop offset="0.9333" style="stop-color:#06E4AE"/>

|

||||

<stop offset="1" style="stop-color:#05E5AD"/>

|

||||

</radialGradient>

|

||||

<path fill="url(#SVGID_00000022560571240417802950000012439139323268113305_)" d="M38,18.3c-2.1-2.8-4.9-5.1-8.1-6.6

|

||||

c2-1.2,4.2-1.9,6.6-1.9c2.2,0,4.3,0.6,6.2,1.7C40.8,12.9,39.2,15.3,38,18.3L38,18.3z"/>

|

||||

|

||||

<radialGradient id="SVGID_00000143611122169386473660000017673587931016751800_" cx="-194.7918" cy="395.2442" r="4.8781" gradientTransform="matrix(-2.5514 -0.7616 -0.8125 2.7217 -130.733 -1172.9556)" gradientUnits="userSpaceOnUse">

|

||||

<stop offset="0" style="stop-color:#6447FF"/>

|

||||

<stop offset="6.666670e-02" style="stop-color:#6348FE"/>

|

||||

<stop offset="0.1333" style="stop-color:#614DFC"/>

|

||||

<stop offset="0.2" style="stop-color:#5C54F8"/>

|

||||

<stop offset="0.2667" style="stop-color:#565EF3"/>

|

||||

<stop offset="0.3333" style="stop-color:#4E6CEC"/>

|

||||

<stop offset="0.4" style="stop-color:#447BE4"/>

|

||||

<stop offset="0.4667" style="stop-color:#3A8DDB"/>

|

||||

<stop offset="0.5333" style="stop-color:#2F9FD1"/>

|

||||

<stop offset="0.6" style="stop-color:#25B1C8"/>

|

||||

<stop offset="0.6667" style="stop-color:#1BC0C0"/>

|

||||

<stop offset="0.7333" style="stop-color:#13CEB9"/>

|

||||

<stop offset="0.8" style="stop-color:#0DD8B4"/>

|

||||

<stop offset="0.8667" style="stop-color:#08DFB0"/>

|

||||

<stop offset="0.9333" style="stop-color:#06E4AE"/>

|

||||

<stop offset="1" style="stop-color:#05E5AD"/>

|

||||

</radialGradient>

|

||||

<path fill="url(#SVGID_00000143611122169386473660000017673587931016751800_)" d="M38,45.2c1.2,3,2.9,5.3,4.7,6.8

|

||||

c-1.9,1.1-4,1.7-6.2,1.7c-2.3,0-4.6-0.7-6.6-1.9C33.1,50.4,35.8,48.1,38,45.2L38,45.2z"/>

|

||||

<path fill="#684BFE" d="M20.1,31.8c0-9,4-16.7,9.8-20.1c3.2,1.5,6,3.8,8.1,6.6c-1.5,3.7-2.5,8.4-2.5,13.5s0.9,9.8,2.5,13.5

|

||||

c-2.1,2.8-4.9,5.1-8.1,6.6C24.1,48.4,20.1,40.7,20.1,31.8z"/>

|

||||

|

||||

<radialGradient id="SVGID_00000147942998054305738810000004710078864578628519_" cx="-212.7358" cy="363.2475" r="4.8781" gradientTransform="matrix(-2.3342 -1.063 -1.623 3.5638 149.3813 -1470.1027)" gradientUnits="userSpaceOnUse">

|

||||

<stop offset="0" style="stop-color:#6447FF"/>

|

||||

<stop offset="6.666670e-02" style="stop-color:#6348FE"/>

|

||||

<stop offset="0.1333" style="stop-color:#614DFC"/>

|

||||

<stop offset="0.2" style="stop-color:#5C54F8"/>

|

||||

<stop offset="0.2667" style="stop-color:#565EF3"/>

|

||||

<stop offset="0.3333" style="stop-color:#4E6CEC"/>

|

||||

<stop offset="0.4" style="stop-color:#447BE4"/>

|

||||

<stop offset="0.4667" style="stop-color:#3A8DDB"/>

|

||||

<stop offset="0.5333" style="stop-color:#2F9FD1"/>

|

||||

<stop offset="0.6" style="stop-color:#25B1C8"/>

|

||||

<stop offset="0.6667" style="stop-color:#1BC0C0"/>

|

||||

<stop offset="0.7333" style="stop-color:#13CEB9"/>

|

||||

<stop offset="0.8" style="stop-color:#0DD8B4"/>

|

||||

<stop offset="0.8667" style="stop-color:#08DFB0"/>

|

||||

<stop offset="0.9333" style="stop-color:#06E4AE"/>

|

||||

<stop offset="1" style="stop-color:#05E5AD"/>

|

||||

</radialGradient>

|

||||

<path fill="url(#SVGID_00000147942998054305738810000004710078864578628519_)" d="M50.7,42.5c0.6,3.3,1.5,6.1,2.5,8

|

||||

c-1.8,2-3.8,3.1-6,3.1c-1.6,0-3.1-0.6-4.5-1.7C46.1,50.2,48.9,46.8,50.7,42.5L50.7,42.5z"/>

|

||||

|

||||

<radialGradient id="SVGID_00000083770737908230256670000016126156495859285174_" cx="-208.5327" cy="357.2025" r="4.8781" gradientTransform="matrix(-2.3342 -1.063 -1.623 3.5638 149.3813 -1476.8097)" gradientUnits="userSpaceOnUse">

|

||||

<stop offset="0" style="stop-color:#6447FF"/>

|

||||

<stop offset="6.666670e-02" style="stop-color:#6348FE"/>

|

||||

<stop offset="0.1333" style="stop-color:#614DFC"/>

|

||||

<stop offset="0.2" style="stop-color:#5C54F8"/>

|

||||

<stop offset="0.2667" style="stop-color:#565EF3"/>

|

||||

<stop offset="0.3333" style="stop-color:#4E6CEC"/>

|

||||

<stop offset="0.4" style="stop-color:#447BE4"/>

|

||||

<stop offset="0.4667" style="stop-color:#3A8DDB"/>

|

||||

<stop offset="0.5333" style="stop-color:#2F9FD1"/>

|

||||

<stop offset="0.6" style="stop-color:#25B1C8"/>

|

||||

<stop offset="0.6667" style="stop-color:#1BC0C0"/>

|

||||

<stop offset="0.7333" style="stop-color:#13CEB9"/>

|

||||

<stop offset="0.8" style="stop-color:#0DD8B4"/>

|

||||

<stop offset="0.8667" style="stop-color:#08DFB0"/>

|

||||

<stop offset="0.9333" style="stop-color:#06E4AE"/>

|

||||

<stop offset="1" style="stop-color:#05E5AD"/>

|

||||

</radialGradient>

|

||||

<path fill="url(#SVGID_00000083770737908230256670000016126156495859285174_)" d="M42.7,11.5c1.4-1.1,2.9-1.7,4.5-1.7

|

||||

c2.2,0,4.3,1.1,6,3.1c-1,2-1.9,4.7-2.5,8C48.9,16.7,46.1,13.4,42.7,11.5L42.7,11.5z"/>

|

||||

<path fill="#684BFE" d="M38,45.2c2.8-3.7,4.4-8.4,4.4-13.5c0-5.1-1.7-9.8-4.4-13.5c1.2-3,2.9-5.3,4.7-6.8c3.4,1.9,6.2,5.3,8,9.5

|

||||

c-0.6,3.2-0.9,6.9-0.9,10.8s0.3,7.6,0.9,10.8c-1.8,4.3-4.6,7.6-8,9.5C40.8,50.6,39.2,48.2,38,45.2L38,45.2z"/>

|

||||

<path fill="#321BB2" d="M38,45.2c-1.5-3.7-2.5-8.4-2.5-13.5S36.4,22,38,18.3c2.8,3.7,4.4,8.4,4.4,13.5S40.8,41.5,38,45.2z"/>

|

||||

<path fill="#05E6AD" d="M53.2,12.9c1.1-2,2.3-3.1,3.6-3.1c3.9,0,7,9.8,7,22s-3.1,22-7,22c-1.3,0-2.6-1.1-3.6-3.1

|

||||

c3.4-3.8,5.7-10.8,5.7-18.8C58.8,23.8,56.6,16.8,53.2,12.9z"/>

|

||||

|

||||

<radialGradient id="SVGID_00000009565123575973598080000009335550354766300606_" cx="-7.8671" cy="278.2442" r="4.8781" gradientTransform="matrix(1.5187 0 0 -7.8271 69.237 2209.3281)" gradientUnits="userSpaceOnUse">

|

||||

<stop offset="0" style="stop-color:#05E5AD"/>

|

||||

<stop offset="0.32" style="stop-color:#05E5AD;stop-opacity:0"/>

|

||||

<stop offset="0.9028" style="stop-color:#6447FF"/>

|

||||

</radialGradient>

|

||||

<path fill="url(#SVGID_00000009565123575973598080000009335550354766300606_)" d="M53.2,12.9c1.1-2,2.3-3.1,3.6-3.1

|

||||

c3.9,0,7,9.8,7,22s-3.1,22-7,22c-1.3,0-2.6-1.1-3.6-3.1c3.4-3.8,5.7-10.8,5.7-18.8C58.8,23.8,56.6,16.8,53.2,12.9z"/>

|

||||

<path fill="#684BFE" d="M52.8,31.8c0-3.9-0.8-7.6-2.1-10.8c0.6-3.3,1.5-6.1,2.5-8c3.4,3.8,5.7,10.8,5.7,18.8c0,8-2.3,15-5.7,18.8

|

||||

c-1-2-1.9-4.7-2.5-8C52,39.3,52.8,35.7,52.8,31.8z"/>

|

||||

<path fill="#321BB2" d="M50.7,42.5c-0.6-3.2-0.9-6.9-0.9-10.8s0.3-7.6,0.9-10.8c1.3,3.2,2.1,6.9,2.1,10.8S52,39.3,50.7,42.5z"/>

|

||||

</g>

|

||||

</g>

|

||||

</svg>

|

||||

<?xml version="1.0" encoding="UTF-8"?><svg id="Layer_1" xmlns="http://www.w3.org/2000/svg" viewBox="0 0 109.77 81.94"><defs><style>.cls-1{fill:#7968fa;}.cls-1,.cls-2{stroke-width:0px;}.cls-2{fill:#5ae3ae;}</style></defs><path class="cls-2" d="m109.77,40.98c0,22.62-7.11,40.96-15.89,40.96-3.6,0-6.89-3.09-9.58-8.31,6.82-7.46,11.22-19.3,11.22-32.64s-4.4-25.21-11.22-32.67C86.99,3.09,90.29,0,93.89,0c8.78,0,15.89,18.33,15.89,40.97"/><path class="cls-1" d="m95.53,40.99c0,13.35-4.4,25.19-11.23,32.64-3.81-7.46-6.28-19.3-6.28-32.64s2.47-25.21,6.28-32.67c6.83,7.46,11.23,19.32,11.23,32.67"/><path class="cls-2" d="m55.38,78.15c-4.99,2.42-10.52,3.79-16.38,3.79C17.46,81.93,0,63.6,0,40.98S17.46,0,39,0C44.86,0,50.39,1.37,55.38,3.79c-9.69,6.47-16.43,20.69-16.43,37.19s6.73,30.7,16.43,37.17"/><path class="cls-1" d="m78.02,40.99c0,16.48-9.27,30.7-22.65,37.17-9.69-6.47-16.43-20.69-16.43-37.17S45.68,10.28,55.38,3.81c13.37,6.49,22.65,20.69,22.65,37.19"/><path class="cls-2" d="m84.31,73.63c-4.73,5.22-10.64,8.31-17.06,8.31-4.24,0-8.27-1.35-11.87-3.79,13.37-6.48,22.65-20.7,22.65-37.17,0,13.35,2.47,25.19,6.28,32.64"/><path class="cls-2" d="m84.31,8.31c-3.81,7.46-6.28,19.32-6.28,32.67,0-16.5-9.27-30.7-22.65-37.19,3.6-2.45,7.63-3.8,11.87-3.8,6.43,0,12.33,3.09,17.06,8.31"/></svg>

|

||||

|

||||

|

Before Width: | Height: | Size: 9.0 KiB After Width: | Height: | Size: 1.2 KiB |

BIN

docs/docs/assets/logo_.png

Normal file

Binary file not shown.

|

After Width: | Height: | Size: 8.7 KiB |

@ -2,4 +2,3 @@ We take your code's security and privacy seriously:

|

||||

|

||||

- The Chrome extension will not send your code to any external servers.

|

||||

- For private repositories, we will first validate the user's identity and permissions. After authentication, we generate responses using the existing Qodo Merge Pro integration.

|

||||

|

||||

|

||||

@ -2,9 +2,9 @@

|

||||

|

||||

With a single-click installation you will gain access to a context-aware chat on your pull request code, a toolbar extension with multiple AI feedback options, Qodo Merge filters, and additional abilities.

|

||||

|

||||

The extension is powered by top code models like Claude 3.5 Sonnet and GPT4. All the extension's features are free to use on public repositories.

|

||||

The extension is powered by top code models like Claude 3.5 Sonnet and GPT4. All the extension's features are free to use on public repositories.

|

||||

|

||||

For private repositories, you will need to install [Qodo Merge Pro](https://github.com/apps/codiumai-pr-agent-pro) in addition to the extension (Quick GitHub app setup with a 14-day free trial. No credit card needed).

|

||||

For private repositories, you will need to install [Qodo Merge Pro](https://github.com/apps/qodo-merge-pro) in addition to the extension (Quick GitHub app setup with a 14-day free trial. No credit card needed).

|

||||

For a demonstration of how to install Qodo Merge Pro and use it with the Chrome extension, please refer to the tutorial video at the provided [link](https://codium.ai/images/pr_agent/private_repos.mp4).

|

||||

|

||||

<img src="https://codium.ai/images/pr_agent/PR-AgentChat.gif" width="768">

|

||||

|

||||

@ -1,2 +1,2 @@

|

||||

## Overview

|

||||

TBD

|

||||

TBD

|

||||

|

||||

@ -12,9 +12,9 @@ We prioritize the languages of the repo based on the following criteria:

|

||||

|

||||

1. Exclude binary files and non-code files (e.g., images, PDFs)

|

||||

2. Given the main languages used in the repo

|

||||

3. We sort the PR files by the most common languages in the repo (in descending order):

|

||||

3. We sort the PR files by the most common languages in the repo (in descending order):

|

||||

* ```[[file.py, file2.py],[file3.js, file4.jsx],[readme.md]]```
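The ordering described above can be sketched in Python. This is a minimal illustration, not the PR-Agent implementation: the `EXT_TO_LANG` map, the `sort_files_by_main_languages` helper, and the sample language shares are all hypothetical.

```python
from collections import defaultdict
from pathlib import PurePosixPath

# Hypothetical extension-to-language map, for illustration only.
EXT_TO_LANG = {
    ".py": "Python",
    ".js": "JavaScript",
    ".jsx": "JavaScript",
    ".md": "Markdown",
}


def sort_files_by_main_languages(repo_languages, pr_files):
    """Group PR files by language, most common repo language first.

    repo_languages maps a language name to its share of the repo.
    Files whose extension is not in EXT_TO_LANG (e.g. images) are dropped.
    """
    groups = defaultdict(list)
    for name in pr_files:
        lang = EXT_TO_LANG.get(PurePosixPath(name).suffix.lower())
        if lang is not None:
            groups[lang].append(name)
    # Languages missing from the repo statistics sort last.
    ordered = sorted(groups, key=lambda lang: repo_languages.get(lang, 0), reverse=True)
    return [groups[lang] for lang in ordered]


repo_languages = {"Python": 70, "JavaScript": 25, "Markdown": 5}
pr_files = ["readme.md", "file3.js", "file.py", "file4.jsx", "file2.py", "logo.png"]
print(sort_files_by_main_languages(repo_languages, pr_files))
```

Grouping first and sorting the groups afterwards keeps the original file order within each language, which makes the result easy to compare with the PR's file list.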

|

||||

|

||||

|

||||

|

||||

### Small PR

|

||||

In this case, we can fit the entire PR in a single prompt:

|

||||

|

||||

@ -1,7 +1,7 @@

|

||||

## TL;DR

|

||||

|

||||

Qodo Merge uses an **asymmetric and dynamic context strategy** to improve AI analysis of code changes in pull requests.

|

||||

It provides more context before changes than after, and dynamically adjusts the context based on code structure (e.g., enclosing functions or classes).

|

||||

Qodo Merge uses an **asymmetric and dynamic context strategy** to improve AI analysis of code changes in pull requests.

|

||||

It provides more context before changes than after, and dynamically adjusts the context based on code structure (e.g., enclosing functions or classes).

|

||||

This approach balances providing sufficient context for accurate analysis, while avoiding needle-in-the-haystack information overload that could degrade AI performance or exceed token limits.

|

||||

|

||||

## Introduction

|

||||

@ -17,12 +17,12 @@ Pull request code changes are retrieved in a unified diff format, showing three

|

||||

code line that already existed in the file...

|

||||

code line that already existed in the file...

|

||||

code line that already existed in the file...

|

||||

|

||||

|

||||

@@ -26,2 +26,4 @@ def func2():

|

||||

...

|

||||

```

|

||||

|

||||

This unified diff format can be challenging for AI models to interpret accurately, as it provides limited context for understanding the full scope of code changes.

|

||||

This unified diff format can be challenging for AI models to interpret accurately, as it provides limited context for understanding the full scope of code changes.

|

||||

The presentation of code using '+', '-', and ' ' symbols to indicate additions, deletions, and unchanged lines respectively also differs from the standard code formatting typically used to train AI models.

|

||||

|

||||

|

||||

@ -37,7 +37,7 @@ Pros:

|

||||

Cons:

|

||||

|

||||

- Excessive context may overwhelm the model with extraneous information, creating a "needle in a haystack" scenario where focusing on the relevant details (the code that actually changed) becomes challenging.

|

||||

LLM quality is known to degrade when the context gets larger.

|

||||

LLM quality is known to degrade when the context gets larger.

|

||||

Pull requests often encompass multiple changes across many files, potentially spanning hundreds of lines of modified code. This complexity presents a genuine risk of overwhelming the model with excessive context.

|

||||

|

||||

- Increased context expands the token count, increasing processing time and cost, and may prevent the model from processing the entire pull request in a single pass.

|

||||

@ -47,18 +47,18 @@ To address these challenges, Qodo Merge employs an **asymmetric** and **dynamic*

|

||||

|

||||

**Asymmetric:**

|

||||

|

||||

We start by recognizing that the context preceding a code change is typically more crucial for understanding the modification than the context following it.

|

||||

We start by recognizing that the context preceding a code change is typically more crucial for understanding the modification than the context following it.

|

||||

Consequently, Qodo Merge implements an asymmetric context policy, decoupling the context window into two distinct segments: one for the code before the change and another for the code after.

|

||||

|

||||

By independently adjusting each context window, Qodo Merge can supply the model with a more tailored and pertinent context for individual code changes.

|

||||

By independently adjusting each context window, Qodo Merge can supply the model with a more tailored and pertinent context for individual code changes.

|

||||

|

||||

**Dynamic:**

|

||||

|

||||

We also employ a "dynamic" context strategy.

|

||||

We start by recognizing that the optimal context for a code change often corresponds to its enclosing code component (e.g., function, class), rather than a fixed number of lines.

|

||||

We start by recognizing that the optimal context for a code change often corresponds to its enclosing code component (e.g., function, class), rather than a fixed number of lines.

|

||||

Consequently, we dynamically adjust the context window based on the code's structure, ensuring the model receives the most pertinent information for each modification.

|

||||

|

||||

To prevent overwhelming the model with excessive context, we impose a limit on the number of lines searched when identifying the enclosing component.

|

||||

To prevent overwhelming the model with excessive context, we impose a limit on the number of lines searched when identifying the enclosing component.

|

||||

This balance allows for comprehensive understanding while maintaining efficiency and limiting context token usage.
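As a rough illustration of the asymmetric and dynamic policies, the sketch below computes a context window around a hunk. It assumes Python source and a simple `def`/`class` regex to detect the enclosing component. The `context_window` function and its parameter names are hypothetical and are not taken from the Qodo Merge code base.

```python
import re

# Hypothetical detector for the start of an enclosing component (Python only).
COMPONENT_START = re.compile(r"^\s*(def|class)\s+\w+")


def context_window(file_lines, hunk_start, hunk_end,
                   extra_before=3, extra_after=1, max_dynamic_before=8):
    """Return (start, end), 0-based inclusive indices of the context around a hunk.

    Asymmetric: extra_before and extra_after are chosen independently.
    Dynamic: if a def/class line is found within max_dynamic_before lines
    above the hunk, the window is extended upward to that line.
    """
    start = max(0, hunk_start - extra_before)
    end = min(len(file_lines) - 1, hunk_end + extra_after)
    # Bounded upward search, so the context cannot grow without limit.
    lowest = max(0, hunk_start - max_dynamic_before)
    for i in range(hunk_start - 1, lowest - 1, -1):
        if COMPONENT_START.match(file_lines[i]):
            start = min(start, i)
            break
    return start, end


lines = [
    "import os",
    "",
    "def func2():",
    "    a = 1",
    "    b = 2",
    "    c = 3",
    "    d = 4",
    "    e = a + b",  # the changed line (index 7)
    "    return e",
    "",
    "print(func2())",
]
print(context_window(lines, 7, 7))                        # window reaches the def line
print(context_window(lines, 7, 7, max_dynamic_before=3))  # search limit too small
```

With the default search limit the window grows upward to the `def func2():` line. With a limit of 3 the enclosing function is out of reach and the fixed asymmetric window is used instead.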

|

||||

|

||||

## Appendix - relevant configuration options

|

||||

@ -69,4 +69,4 @@ allow_dynamic_context=true # Allow dynamic context extension

|

||||

max_extra_lines_before_dynamic_context = 8 # will try to include up to X extra lines before the hunk in the patch, until we reach an enclosing function or class

|

||||

patch_extra_lines_before = 3 # Number of extra lines (+3 default ones) to include before each hunk in the patch

|

||||

patch_extra_lines_after = 1 # Number of extra lines (+3 default ones) to include after each hunk in the patch

|

||||

```

|

||||

```

|

||||

|

||||

169

docs/docs/core-abilities/fetching_ticket_context.md

Normal file

@ -0,0 +1,169 @@

|

||||

# Fetching Ticket Context for PRs

|

||||

`Supported Git Platforms : GitHub, GitLab, Bitbucket`

|

||||

|

||||

## Overview

|

||||

Qodo Merge PR Agent streamlines code review workflows by seamlessly connecting with multiple ticket management systems.

|

||||

This integration enriches the review process by automatically surfacing relevant ticket information and context alongside code changes.

|

||||

|

||||

## Ticket systems supported

|

||||

- GitHub

|

||||

- Jira (💎)

|

||||

|

||||

Ticket data fetched:

|

||||

|

||||

1. Ticket Title

|

||||

2. Ticket Description

|

||||

3. Custom Fields (Acceptance criteria)

|

||||

4. Subtasks (linked tasks)

|

||||

5. Labels

|

||||

6. Attached Images/Screenshots

|

||||

|

||||

## Affected Tools

|

||||

|

||||

Ticket Recognition Requirements:

|

||||

|

||||

- The PR description should contain a link to the ticket, or the branch name should start with the ticket ID/number.

|

||||

- For Jira tickets, you should follow the instructions in [Jira Integration](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/#jira-integration) in order to authenticate with Jira.

|

||||

|

||||

### Describe tool

|

||||

Qodo Merge PR Agent will recognize the ticket and use the ticket content (title, description, labels) to provide additional context for the code changes.

|

||||

By understanding the reasoning and intent behind modifications, the LLM can offer more insightful and relevant code analysis.

|

||||

|

||||

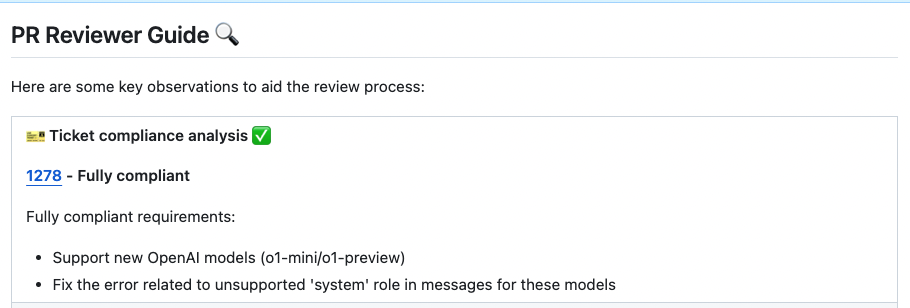

### Review tool

|

||||

Similarly to the `describe` tool, the `review` tool will use the ticket content to provide additional context for the code changes.

|

||||

|

||||

In addition, this feature will evaluate how well a Pull Request (PR) adheres to its original purpose/intent as defined by the associated ticket or issue mentioned in the PR description.

|

||||

Each ticket will be assigned a label (Compliance/Alignment level) that indicates the degree to which the PR fulfills its original purpose. Options: Fully compliant, Partially compliant, or Not compliant.

|

||||

|

||||

|

||||


|

||||

|

||||

By default, the tool will automatically validate if the PR complies with the referenced ticket.

|

||||

If you want to disable this feedback, add the following line to your configuration file:

|

||||

|

||||

```toml

|

||||

[pr_reviewer]

|

||||

require_ticket_analysis_review=false

|

||||

```

|

||||

|

||||

## Providers

|

||||

|

||||

### GitHub Issues Integration

|

||||

|

||||

Qodo Merge PR Agent will automatically recognize GitHub issues mentioned in the PR description and fetch the issue content.

|

||||

Examples of valid GitHub issue references:

|

||||

|

||||

- `https://github.com/<ORG_NAME>/<REPO_NAME>/issues/<ISSUE_NUMBER>`

|

||||

- `#<ISSUE_NUMBER>`

|

||||

- `<ORG_NAME>/<REPO_NAME>#<ISSUE_NUMBER>`

|

||||

|

||||

Since Qodo Merge PR Agent is integrated with GitHub, it doesn't require any additional configuration to fetch GitHub issues.
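To make the three reference styles concrete, here is a minimal Python sketch that extracts them from a PR description. The regular expressions and the `find_issue_refs` helper are illustrative assumptions and may differ from what Qodo Merge actually uses.

```python
import re

# Hypothetical patterns for the three reference styles listed above.
ISSUE_URL = re.compile(r"https://github\.com/([\w.-]+)/([\w.-]+)/issues/(\d+)")
ISSUE_QUALIFIED = re.compile(r"(?<![\w/.-])([\w.-]+)/([\w.-]+)#(\d+)")
ISSUE_SHORT = re.compile(r"(?<![\w/])#(\d+)")


def find_issue_refs(description, default_org, default_repo):
    """Return unique (org, repo, issue_number) tuples found in the description.

    A bare `#123` is resolved against the repository the PR belongs to.
    """
    refs = []
    for pattern in (ISSUE_URL, ISSUE_QUALIFIED):
        for org, repo, number in pattern.findall(description):
            refs.append((org, repo, int(number)))
    for number in ISSUE_SHORT.findall(description):
        refs.append((default_org, default_repo, int(number)))
    # dict.fromkeys removes duplicates while keeping first-seen order.
    return list(dict.fromkeys(refs))


text = "Fixes #34, relates to acme/tools#7 and https://github.com/acme/widgets/issues/12"
print(find_issue_refs(text, "acme", "widgets"))
```

The negative lookbehinds stop the short `#<ISSUE_NUMBER>` pattern from matching the tail of an `<ORG_NAME>/<REPO_NAME>#<ISSUE_NUMBER>` reference a second time.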

|

||||

|

||||

### Jira Integration 💎

|

||||

|

||||

We support both Jira Cloud and Jira Server/Data Center.

|

||||

To integrate with Jira, you can link your PR to a ticket using either of these methods:

|

||||

|

||||

**Method 1: Description Reference:**

|

||||

|

||||

Include a ticket reference in your PR description using either the complete URL format `https://<JIRA_ORG>.atlassian.net/browse/ISSUE-123` or the shortened ticket ID `ISSUE-123`.

|

||||

|

||||

**Method 2: Branch Name Detection:**

|

||||

|

||||

Name your branch with the ticket ID as a prefix (e.g., `ISSUE-123-feature-description` or `ISSUE-123/feature-description`).
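Both linking methods can be sketched with a couple of regular expressions. The snippet below is a minimal illustration that assumes conventional Jira keys (an uppercase project key, a dash, and a number). The helper names and patterns are hypothetical, not the ones used by Qodo Merge.

```python
import re

# Assumed shape of a Jira issue key, e.g. ISSUE-123.
JIRA_KEY = r"[A-Z][A-Z0-9]+-\d+"
JIRA_BROWSE_URL = re.compile(r"https://[\w.-]+/browse/(" + JIRA_KEY + r")")
# Bare keys only: the lookbehind skips keys that are part of a URL path or a longer token.
JIRA_BARE_KEY = re.compile(r"(?<![\w/-])" + JIRA_KEY + r"\b")


def ticket_from_branch(branch):
    """Method 2: return the ticket ID only if the branch name starts with it."""
    match = re.match(JIRA_KEY, branch)
    return match.group(0) if match else None


def tickets_from_description(description):
    """Method 1: collect ticket IDs from full URLs and from bare keys."""
    found = JIRA_BROWSE_URL.findall(description)
    found += JIRA_BARE_KEY.findall(description)
    return list(dict.fromkeys(found))  # unique, in first-seen order


print(ticket_from_branch("ISSUE-123-feature-description"))
print(ticket_from_branch("ISSUE-123/feature-description"))
print(ticket_from_branch("feature/ISSUE-123"))
print(tickets_from_description(
    "See https://acme.atlassian.net/browse/ISSUE-123 and also ISSUE-456"
))
```

A bare key carries no host name, which is why shortened IDs and branch detection need a separately configured Jira base URL.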

|

||||

|

||||

!!! note "Jira Base URL"

|

||||

For shortened ticket IDs or branch detection (method 2), you must configure the Jira base URL in your configuration file under the `[jira]` section:

|

||||

|

||||

```toml

|

||||

[jira]

|

||||

jira_base_url = "https://<JIRA_ORG>.atlassian.net"

|

||||

```

|

||||

|

||||

#### Jira Cloud 💎

|

||||

There are two ways to authenticate with Jira Cloud:

|

||||

|

||||

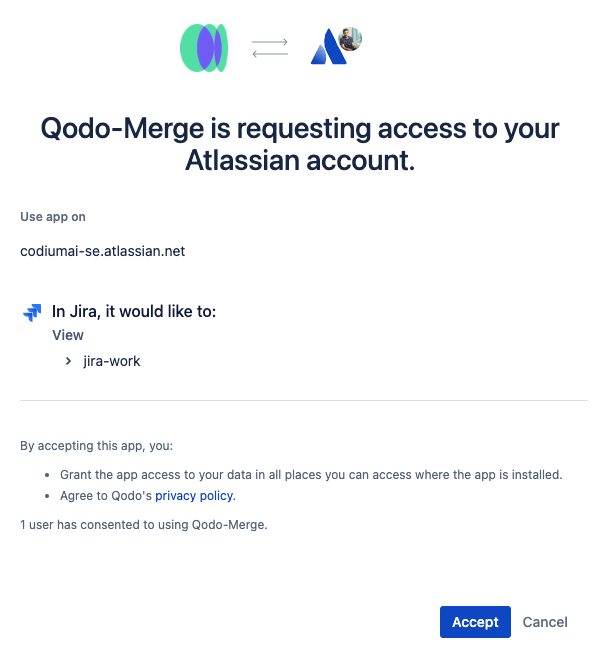

**1) Jira App Authentication**

|

||||

|

||||

The recommended way to authenticate with Jira Cloud is to install the Qodo Merge app in your Jira Cloud instance. This will allow Qodo Merge to access Jira data on your behalf.

|

||||

|

||||

Installation steps:

|

||||

|

||||

1. Click [here](https://auth.atlassian.com/authorize?audience=api.atlassian.com&client_id=8krKmA4gMD8mM8z24aRCgPCSepZNP1xf&scope=read%3Ajira-work%20offline_access&redirect_uri=https%3A%2F%2Fregister.jira.pr-agent.codium.ai&state=qodomerge&response_type=code&prompt=consent) to install the Qodo Merge app in your Jira Cloud instance, click the `accept` button.<br>

|

||||


|

||||

|

||||

2. After installing the app, you will be redirected to the Qodo Merge registration page, where you will see a success message.<br>

|

||||


|

||||

|

||||

3. Now you can use the Jira integration in Qodo Merge PR Agent.

|

||||

|

||||

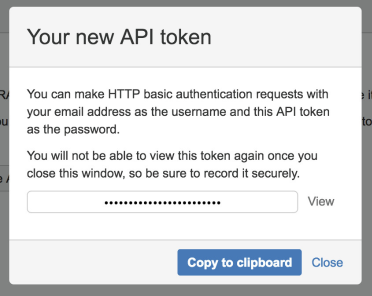

**2) Email/Token Authentication**

|

||||

|

||||

You can create an API token from your Atlassian account:

|

||||

|

||||

1. Log in to https://id.atlassian.com/manage-profile/security/api-tokens.

|

||||

|

||||

2. Click Create API token.

|

||||

|

||||

3. From the dialog that appears, enter a name for your new token and click Create.

|

||||

|

||||

4. Click Copy to clipboard.

|

||||

|

||||


|

||||

|

||||

5. In your [configuration file](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/) add the following lines:

|

||||

|

||||

```toml

|

||||

[jira]

|

||||

jira_api_token = "YOUR_API_TOKEN"

|

||||

jira_api_email = "YOUR_EMAIL"

|

||||

```

|

||||

|

||||

|

||||

#### Jira Data Center/Server 💎

|

||||

|

||||

##### Local App Authentication (For Qodo Merge On-Premise Customers)

|

||||

|

||||

##### Step 1: Set up an application link in Jira Data Center/Server

|

||||

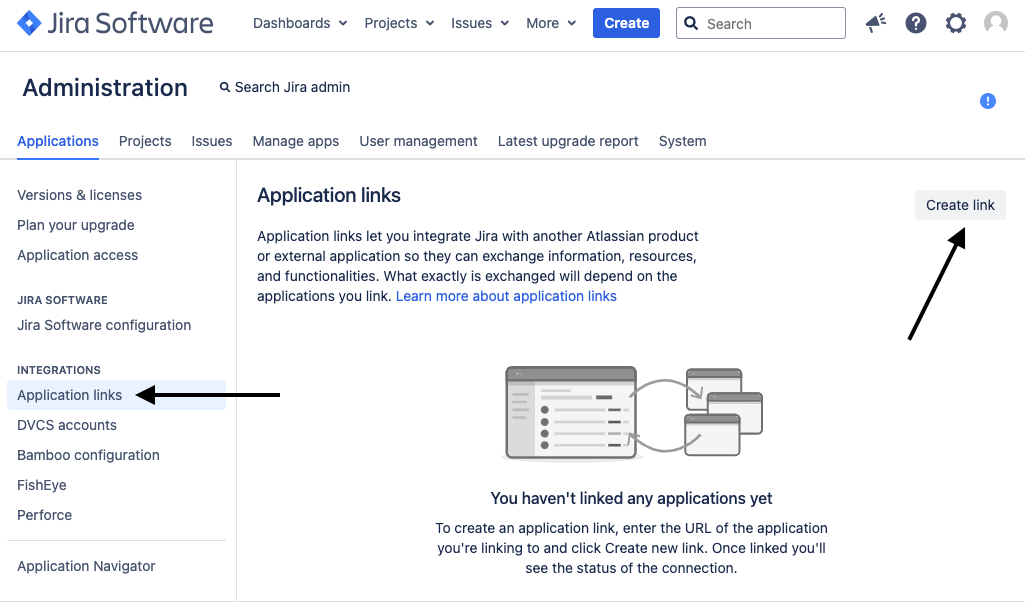

* Go to Jira Administration > Applications > Application Links > Click on `Create link`

|

||||


|

||||

* Choose `External application` and set the direction to `Incoming` and then click `Continue`

|

||||

|

||||


|

||||

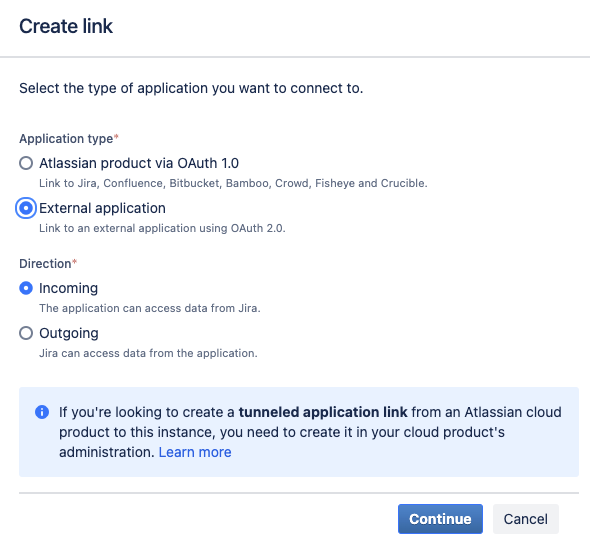

* In the following screen, enter the following details:

|

||||

* Name: `Qodo Merge`

|

||||

* Redirect URL: Enter your Qodo Merge URL followed by `/register_ticket_provider`, i.e. `https://{QODO_MERGE_ENDPOINT}/register_ticket_provider`

|

||||

* Permission: Select `Read`

|

||||

* Click `Save`

|

||||

|

||||


|

||||

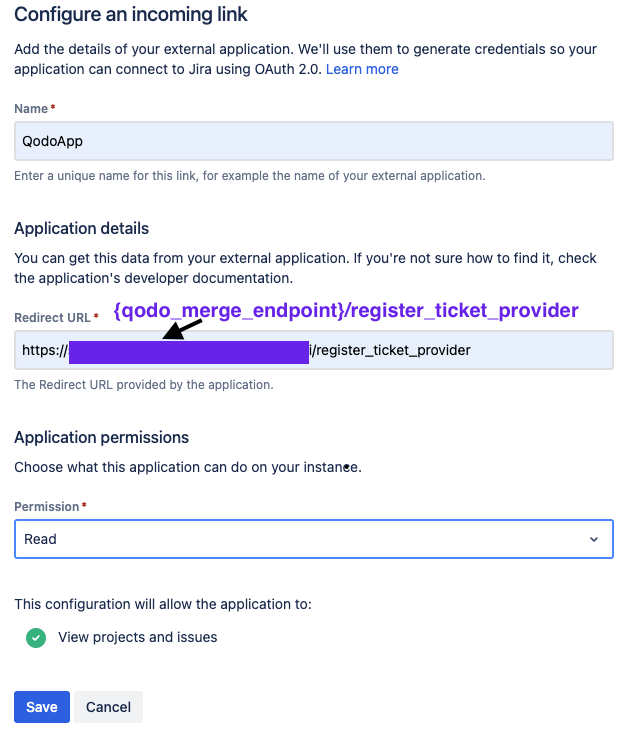

* Copy the `Client ID` and `Client secret` and set them in your `.secrets` file:

|

||||

|

||||


|

||||

```toml

|

||||

[jira]

|

||||

jira_app_secret = "..."

|

||||

jira_client_id = "..."

|

||||

```

|

||||

|

||||

##### Step 2: Authenticate with Jira Data Center/Server

|

||||

* Open this URL in your browser: `https://{QODO_MERGE_ENDPOINT}/jira_auth`

|

||||

* Click on the link

|

||||

|

||||


|

||||

|

||||

* You will be redirected to Jira Data Center/Server, click `Allow`

|

||||

* You will be redirected back to Qodo Merge PR Agent and you will see a success message.

|

||||

|

||||

|

||||

##### Personal Access Token (PAT) Authentication

|

||||

We also support the Personal Access Token (PAT) authentication method.

|

||||

|

||||

1. Create a [Personal Access Token (PAT)](https://confluence.atlassian.com/enterprise/using-personal-access-tokens-1026032365.html) in your Jira account

|

||||

2. In your configuration file, environment variables, or secrets file, add the following lines:

|

||||

|

||||

```toml

|

||||

[jira]

|

||||

jira_base_url = "YOUR_JIRA_BASE_URL" # e.g. https://jira.example.com

|

||||

jira_api_token = "YOUR_API_TOKEN"

|

||||

```
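For orientation, the sketch below shows how the two token styles usually appear in an HTTP request to Jira's REST API: an email plus API token is sent as HTTP Basic credentials (Jira Cloud), while a Personal Access Token is sent as a Bearer token (Jira Data Center/Server). The `build_issue_request` helper is hypothetical and only builds the request object without sending it. It does not describe how Qodo Merge calls Jira internally.

```python
import base64
import urllib.request


def build_issue_request(base_url, issue_key, api_token, email=None):
    """Build (but do not send) a GET request for a single Jira issue.

    With an email, the token is sent as Basic credentials (email:token).
    Without one, the token is sent as a Bearer token (PAT style).
    """
    url = f"{base_url.rstrip('/')}/rest/api/2/issue/{issue_key}"
    if email is not None:
        raw = f"{email}:{api_token}".encode()
        auth = "Basic " + base64.b64encode(raw).decode()
    else:
        auth = "Bearer " + api_token
    headers = {"Authorization": auth, "Accept": "application/json"}
    return urllib.request.Request(url, headers=headers)


# Placeholder values only; replace them with your own base URL and token.
request = build_issue_request("https://jira.example.com", "ISSUE-123", "YOUR_API_TOKEN")
print(request.full_url)
```

Passing the resulting object to `urllib.request.urlopen` would perform the call. It is left out here so the example runs without network access or real credentials.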

|

||||

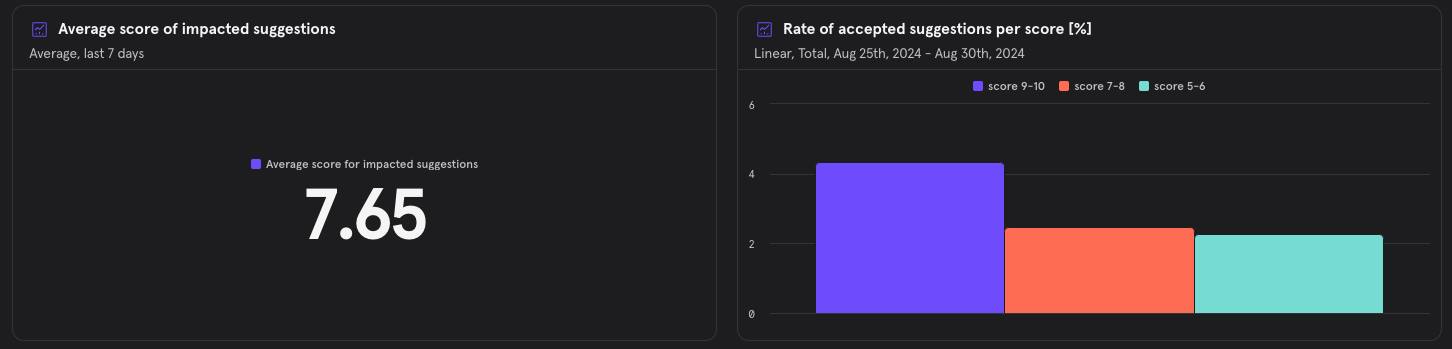

@ -41,4 +41,4 @@ Here are key metrics that the dashboard tracks:

|

||||

|

||||

#### Suggestion Score Distribution

|

||||


|

||||

> Explanation: The distribution of the suggestion score for the implemented suggestions, ensuring that higher-scored suggestions truly represent more significant improvements.

|

||||

> Explanation: The distribution of the suggestion score for the implemented suggestions, ensuring that higher-scored suggestions truly represent more significant improvements.

|

||||

|

||||

@ -1,6 +1,7 @@

|

||||

# Core Abilities

|

||||

Qodo Merge utilizes a variety of core abilities to provide a comprehensive and efficient code review experience. These abilities include:

|

||||

|

||||

- [Fetching ticket context](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/)

|

||||

- [Local and global metadata](https://qodo-merge-docs.qodo.ai/core-abilities/metadata/)

|

||||

- [Dynamic context](https://qodo-merge-docs.qodo.ai/core-abilities/dynamic_context/)

|

||||

- [Self-reflection](https://qodo-merge-docs.qodo.ai/core-abilities/self_reflection/)

|

||||

@ -9,4 +10,20 @@ Qodo Merge utilizes a variety of core abilities to provide a comprehensive and e

|

||||

- [Compression strategy](https://qodo-merge-docs.qodo.ai/core-abilities/compression_strategy/)

|

||||

- [Code-oriented YAML](https://qodo-merge-docs.qodo.ai/core-abilities/code_oriented_yaml/)

|

||||

- [Static code analysis](https://qodo-merge-docs.qodo.ai/core-abilities/static_code_analysis/)

|

||||

- [Code fine-tuning benchmark](https://qodo-merge-docs.qodo.ai/finetuning_benchmark/)

|

||||

- [Code fine-tuning benchmark](https://qodo-merge-docs.qodo.ai/finetuning_benchmark/)

|

||||

|

||||

## Blogs

|

||||

|

||||

Here are some additional technical blogs from Qodo that delve deeper into the core capabilities and features of Large Language Models (LLMs) when applied to coding tasks.

|

||||

These resources provide more comprehensive insights into leveraging LLMs for software development.

|

||||

|

||||

### Code Generation and LLMs

|

||||

- [State-of-the-art Code Generation with AlphaCodium – From Prompt Engineering to Flow Engineering](https://www.qodo.ai/blog/qodoflow-state-of-the-art-code-generation-for-code-contests/)

|

||||

- [RAG for a Codebase with 10k Repos](https://www.qodo.ai/blog/rag-for-large-scale-code-repos/)

|

||||

|

||||

### Development Processes

|

||||

- [Understanding the Challenges and Pain Points of the Pull Request Cycle](https://www.qodo.ai/blog/understanding-the-challenges-and-pain-points-of-the-pull-request-cycle/)

|

||||

- [Introduction to Code Coverage Testing](https://www.qodo.ai/blog/introduction-to-code-coverage-testing/)

|

||||

|

||||

### Cost Optimization

|

||||

- [Reduce Your Costs by 30% When Using GPT for Python Code](https://www.qodo.ai/blog/reduce-your-costs-by-30-when-using-gpt-3-for-python-code/)

|

||||

|

||||

@ -1,2 +1,2 @@

|

||||

## Interactive invocation 💎

|

||||

TBD

|

||||

TBD

|

||||

|

||||

@ -49,8 +49,8 @@ __old hunk__

|

||||

...

|

||||

```

|

||||

|

||||

(3) The entire PR files that were retrieved are also used to expand and enhance the PR context (see [Dynamic Context](https://qodo-merge-docs.qodo.ai/core-abilities/dynamic-context/)).

|

||||

(3) The entire PR files that were retrieved are also used to expand and enhance the PR context (see [Dynamic Context](https://qodo-merge-docs.qodo.ai/core-abilities/dynamic_context/)).

|

||||

|

||||

|

||||

(4) All the metadata described above represents several levels of cumulative analysis - ranging from hunk level, to file level, to PR level, to organization level.

|

||||

This comprehensive approach enables Qodo Merge AI models to generate more precise and contextually relevant suggestions and feedback.

|

||||

This comprehensive approach enables Qodo Merge AI models to generate more precise and contextually relevant suggestions and feedback.

|

||||

|

||||

@ -1,7 +1,7 @@

|

||||

## TL;DR

|

||||

|

||||

Qodo Merge implements a **self-reflection** process where the AI model reflects, scores, and re-ranks its own suggestions, eliminating irrelevant or incorrect ones.

|

||||

This approach improves the quality and relevance of suggestions, saving users time and enhancing their experience.

|

||||

Qodo Merge implements a **self-reflection** process where the AI model reflects, scores, and re-ranks its own suggestions, eliminating irrelevant or incorrect ones.

|

||||

This approach improves the quality and relevance of suggestions, saving users time and enhancing their experience.

|

||||

Configuration options allow users to set a score threshold for further filtering out suggestions.

|

||||

|

||||

## Introduction - Efficient Review with Hierarchical Presentation

|

||||

@ -24,7 +24,7 @@ The AI model is initially tasked with generating suggestions, and outputting the

|

||||

However, in practice we observe that models often struggle to simultaneously generate high-quality code suggestions and rank them well in a single pass.

|

||||

Furthermore, the initial set of generated suggestions sometimes contains easily identifiable errors.

|

||||

|

||||

To address these issues, we implemented a "self-reflection" process that refines suggestion ranking and eliminates irrelevant or incorrect proposals.

|

||||

To address these issues, we implemented a "self-reflection" process that refines suggestion ranking and eliminates irrelevant or incorrect proposals.

|

||||

This process consists of the following steps:

|

||||

|

||||

1. Presenting the generated suggestions to the model in a follow-up call.

|

||||

@ -46,6 +46,5 @@ This results in a more refined and valuable set of suggestions for the user, sav

|

||||

## Appendix - Relevant Configuration Options

|

||||

```

|

||||

[pr_code_suggestions]

|

||||

self_reflect_on_suggestions = true # Enable self-reflection on code suggestions

|

||||

suggestions_score_threshold = 0 # Filter out suggestions with a score below this threshold (0-10)

|

||||

```

|

||||

```
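The effect of `suggestions_score_threshold` can be illustrated with a short Python sketch. The suggestion dictionaries and the `filter_and_rank` helper are hypothetical. The only behavior taken from the text is that suggestions scoring below the threshold are dropped and the rest are re-ranked by score.

```python
def filter_and_rank(suggestions, score_threshold=0):
    """Drop suggestions scoring below the threshold, then rank the rest by score.

    Each suggestion is a dict with at least a numeric "score" key (0-10),
    as assigned by the self-reflection call.
    """
    kept = [s for s in suggestions if s["score"] >= score_threshold]
    # sorted() is stable, so equally scored suggestions keep their original order.
    return sorted(kept, key=lambda s: s["score"], reverse=True)


suggestions = [
    {"summary": "Rename variable", "score": 3},
    {"summary": "Fix possible None dereference", "score": 9},
    {"summary": "Add missing await", "score": 7},
]
for item in filter_and_rank(suggestions, score_threshold=5):
    print(item["score"], item["summary"])
```

With the default threshold of 0 nothing is filtered and only the ordering changes. Raising the threshold trades recall for a shorter, higher-confidence list.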

|

||||

|

||||

@ -61,7 +61,7 @@ Or be triggered interactively by using the `analyze` tool.

|

||||

|

||||

### Find Similar Code

|

||||

|

||||

The [`similar code`](https://qodo-merge-docs.qodo.ai/tools/similar_code/) tool retrieves the most similar code components from inside the organization's codebase, or from open-source code.

|

||||

The [`similar code`](https://qodo-merge-docs.qodo.ai/tools/similar_code/) tool retrieves the most similar code components from inside the organization's codebase or from open-source code, including details about the license associated with each repository.

|

||||

|

||||

For example:

|

||||

|

||||

|

||||

@ -31,11 +31,11 @@ ___

|

||||

|

||||

|

||||

- The hierarchical structure of the suggestions is designed to help the user to _quickly_ understand them, and to decide which ones are relevant and which are not:

|

||||

|

||||

|

||||

- Only if the `Category` header is relevant, the user should move to the summarized suggestion description.

|

||||

- Only if the summarized suggestion description is relevant, the user should click on the collapsible, to read the full suggestion description with a code preview example.

|

||||

|

||||

- In addition, we recommend using the [`extra_instructions`](https://qodo-merge-docs.qodo.ai/tools/improve/#extra-instructions-and-best-practices) field to guide the model to suggestions that are more relevant to the specific needs of the project.

|

||||

- In addition, we recommend using the [`extra_instructions`](https://qodo-merge-docs.qodo.ai/tools/improve/#extra-instructions-and-best-practices) field to guide the model to suggestions that are more relevant to the specific needs of the project.

|

||||