mirror of

https://github.com/qodo-ai/pr-agent.git

synced 2025-07-07 14:20:37 +08:00

Compare commits

285 Commits

test-PR-ne

...

v0.23

| Author | SHA1 | Date | |

|---|---|---|---|

| 0bf8c1e647 | |||

| be18152446 | |||

| 7fc41409d4 | |||

| 78bcb72205 | |||

| e35f83bdb6 | |||

| 20d9d8ad07 | |||

| f3c80891f8 | |||

| 12973c2c99 | |||

| 1f5c3a4c0f | |||

| 422b4082b5 | |||

| 2235a19345 | |||

| e30c70d2ca | |||

| f7a6e93b6c | |||

| 23e6abcdce | |||

| 0bac03496a | |||

| a228ea8109 | |||

| 0c3940b6a7 | |||

| b05e15e9ec | |||

| bea68084b3 | |||

| 57abf4ac62 | |||

| f0efe4a707 | |||

| 040503039e | |||

| 3e265682a7 | |||

| d7c0f87ea5 | |||

| 92d040c80f | |||

| 96ededd12a | |||

| 8d87b41cf2 | |||

| f058c09a68 | |||

| f2cb70ea67 | |||

| 3e6263e1cc | |||

| 3373fb404a | |||

| df02cc1437 | |||

| 6a5f43f8ce | |||

| ebbf9c25b3 | |||

| 0dc7bdabd2 | |||

| defe200817 | |||

| bf5673912d | |||

| 089a76c897 | |||

| 4c444f5c9a | |||

| e5aae0d14f | |||

| 15f854336a | |||

| 056eb3a954 | |||

| 11abce3ede | |||

| 556dc68add | |||

| b1f728e6b0 | |||

| ca18f85294 | |||

| 382da3a5b6 | |||

| 406dcd7b7b | |||

| b20f364b15 | |||

| 692904bb71 | |||

| ba963149ac | |||

| 7348d4144b | |||

| d0315164be | |||

| 41607b10ef | |||

| 2d21df61c7 | |||

| c185b7c610 | |||

| 3d60954167 | |||

| a57896aa94 | |||

| 73f0eebb69 | |||

| b1d07be728 | |||

| 0f920bcc5b | |||

| 55a82382ef | |||

| 6c2a14d557 | |||

| 4ab747dbfd | |||

| b814e4a26d | |||

| 609fa266cf | |||

| 69f6997739 | |||

| 8cc436cbd6 | |||

| 384dfc2292 | |||

| 40737c3932 | |||

| c46434ac5e | |||

| 255c2d8e94 | |||

| 74bb07e9c4 | |||

| a4db59fadc | |||

| 2990aac955 | |||

| afe037e976 | |||

| 666fcb6264 | |||

| 3f3e9909fe | |||

| 685c443d87 | |||

| c4361ccb01 | |||

| a3d4d6d86f | |||

| b12554ee84 | |||

| 29bc0890ab | |||

| 5fd7ca7d02 | |||

| 41ffa8df51 | |||

| 47b12d8bbc | |||

| ded8dc3689 | |||

| 9034e18772 | |||

| 833bb29808 | |||

| bdf1be921d | |||

| 0c1331f77e | |||

| 164999d83d | |||

| a710f3ff43 | |||

| 025a14014a | |||

| 5968db67b9 | |||

| 3affe011fe | |||

| c4a653f70a | |||

| 663604daa5 | |||

| deda06866d | |||

| e33f2e4c67 | |||

| 00b6a67e1e | |||

| 024ef7eea3 | |||

| 3fee687a34 | |||

| b2c0c4d654 | |||

| 6b56ea4289 | |||

| 2a68a90474 | |||

| de9b21d7bd | |||

| 612c6ed135 | |||

| 6ed65eb82b | |||

| bc09330a44 | |||

| 7bd1e5211c | |||

| 8d44804f84 | |||

| a4320b6b0d | |||

| 73ec67b14e | |||

| 790dcc552e | |||

| 8463aaac0a | |||

| 195f8a03ab | |||

| 5268a84bcc | |||

| e53badbac4 | |||

| a9a27b5a8f | |||

| 4db428456d | |||

| 925fab474c | |||

| a1fb9aac29 | |||

| 774bba4ed2 | |||

| dd8a7200f7 | |||

| 33d8b51abd | |||

| e083841d96 | |||

| 1070f9583f | |||

| bedcc2433c | |||

| 8ff85a9daf | |||

| 58bc54b193 | |||

| aa56c0097d | |||

| 20f6af803c | |||

| 2076454798 | |||

| e367df352b | |||

| a32a12a851 | |||

| 3a897935ae | |||

| 55b52ad6b2 | |||

| b0f9b96c75 | |||

| aac7aeabd1 | |||

| 306fd3d064 | |||

| f1d5587220 | |||

| 07f21a5511 | |||

| 1106dccc4f | |||

| e5f269040e | |||

| 9c8bc6c86a | |||

| f4c9d23084 | |||

| 25fdf16894 | |||

| 12b0df4608 | |||

| 529346b8e0 | |||

| b28f66aaa0 | |||

| 2e535e42ee | |||

| 9c6a363a51 | |||

| 75a27d64b4 | |||

| 4549cb3948 | |||

| d046c2a939 | |||

| aed4ed41cc | |||

| 4d96d11ba5 | |||

| faf4576f03 | |||

| 0b7dcf03a5 | |||

| 8e12787fc8 | |||

| 213ced7e18 | |||

| 6d6fb67306 | |||

| fac8a80c24 | |||

| c53c6aee7f | |||

| b980168e75 | |||

| 86d901d5a6 | |||

| b1444eb180 | |||

| d3a7041f0d | |||

| b4f0ad948f | |||

| ab31d2f1f8 | |||

| 2b0dfc6298 | |||

| 76ff49d446 | |||

| 413547f404 | |||

| f8feaa0be7 | |||

| 09190efb65 | |||

| 2746bd4754 | |||

| 4f13007267 | |||

| 962bb1c23d | |||

| e9804c9f0d | |||

| f3aa9c02cc | |||

| 416b150d66 | |||

| 83f3cc5033 | |||

| 1e1636911f | |||

| 40658cfb7c | |||

| 85f6353d15 | |||

| b9aeb8e443 | |||

| ea7a84901d | |||

| 37f6e18953 | |||

| 62c6211998 | |||

| dc6ae9fa7e | |||

| c6e6cbb50e | |||

| 731c8de4ea | |||

| 4971071b1f | |||

| c341446015 | |||

| ea9d410c84 | |||

| d9a7dae6c4 | |||

| c9c14c10b0 | |||

| bd2f2b3a87 | |||

| c11ee8643e | |||

| 04d55a6309 | |||

| e6c5236156 | |||

| ee90f38501 | |||

| 6e6f54933e | |||

| 911c1268fc | |||

| 17f46bb53b | |||

| 806ba3f9d8 | |||

| 2a69116767 | |||

| b7225c1d10 | |||

| ca5efbc52f | |||

| da44bd7d5e | |||

| 83ff9a0b9b | |||

| 4cd9626217 | |||

| ca9f96a1e3 | |||

| 811965d841 | |||

| 39fe6f69d0 | |||

| 66dc9349bd | |||

| 63340eb75e | |||

| fab5b6f871 | |||

| 71770f3c04 | |||

| a13cb14e9f | |||

| e5bbb701d3 | |||

| 7779038e2a | |||

| c3dca2ef5a | |||

| 985b4f05cf | |||

| 8921d9eb0e | |||

| 2880e48860 | |||

| 9b56c83c1d | |||

| 2369b8da69 | |||

| dcd188193b | |||

| 89819b302b | |||

| 3432d377c7 | |||

| ea4ee1adbc | |||

| f9af9e4a91 | |||

| 3b3e885b76 | |||

| 46e934772c | |||

| cc08394e51 | |||

| 2b4eac2123 | |||

| 570f7d6dcf | |||

| 188d092524 | |||

| 8599c0fed4 | |||

| 0ab19b84b2 | |||

| fec583e45e | |||

| 589b865db5 | |||

| be701aa868 | |||

| 4231a84e7a | |||

| e56320540b | |||

| e4565f7106 | |||

| b4458ffede | |||

| 36ad8935ad | |||

| 9dd2520dbd | |||

| e6708fcb7b | |||

| 05876afc02 | |||

| f3eb74d718 | |||

| b0aac4ec5d | |||

| 95c7b3f55c | |||

| efd906ccf1 | |||

| 5fed21ce37 | |||

| 853cfb3fc9 | |||

| 6c0837491c | |||

| fbacc7c765 | |||

| e69b798aa1 | |||

| 61ba015a55 | |||

| 4f6490b17c | |||

| 9dfc263e2e | |||

| d348cffbae | |||

| c04ab933cd | |||

| a55fa753b9 | |||

| 8e0435d9a0 | |||

| 39c0733d6f | |||

| a588e9f2bb | |||

| 7627e651ea | |||

| 1ebc20b761 | |||

| 38058ea714 | |||

| c92c26448f | |||

| 38051f79b7 | |||

| 738eb055ff | |||

| 5d8d178a60 | |||

| e8f4a45774 | |||

| aa60c7d701 | |||

| 4645cd7cf9 | |||

| edb230c993 | |||

| 7bb1917be7 | |||

| d360fb72cb | |||

| 253f77f4d9 |

2

.gitignore

vendored

2

.gitignore

vendored

@ -1,4 +1,6 @@

|

||||

.idea/

|

||||

.lsp/

|

||||

.vscode/

|

||||

venv/

|

||||

pr_agent/settings/.secrets.toml

|

||||

__pycache__

|

||||

|

||||

@ -1,7 +1,3 @@

|

||||

[pr_reviewer]

|

||||

enable_review_labels_effort = true

|

||||

enable_auto_approval = true

|

||||

|

||||

|

||||

[pr_code_suggestions]

|

||||

commitable_code_suggestions=false

|

||||

|

||||

109

README.md

109

README.md

@ -14,6 +14,8 @@ CodiumAI PR-Agent aims to help efficiently review and handle pull requests, by p

|

||||

</div>

|

||||

|

||||

[](https://github.com/Codium-ai/pr-agent/blob/main/LICENSE)

|

||||

[](https://chromewebstore.google.com/detail/pr-agent-chrome-extension/ephlnjeghhogofkifjloamocljapahnl)

|

||||

[](https://pr-agent-docs.codium.ai/finetuning_benchmark/)

|

||||

[](https://discord.com/channels/1057273017547378788/1126104260430528613)

|

||||

[](https://twitter.com/codiumai)

|

||||

<a href="https://github.com/Codium-ai/pr-agent/commits/main">

|

||||

@ -40,48 +42,26 @@ CodiumAI PR-Agent aims to help efficiently review and handle pull requests, by p

|

||||

|

||||

## News and Updates

|

||||

|

||||

### May 2, 2024

|

||||

Check out the new [PR-Agent Chrome Extension](https://chromewebstore.google.com/detail/pr-agent-chrome-extension/ephlnjeghhogofkifjloamocljapahnl) 🚀🚀🚀

|

||||

### July 4, 2024

|

||||

|

||||

This toolbar integrates seamlessly with your GitHub environment, allowing you to access PR-Agent tools [directly from the GitHub interface](https://www.youtube.com/watch?v=gT5tli7X4H4).

|

||||

You can also easily export your chosen configuration, and use it for the automatic commands.

|

||||

Added improved support for claude-sonnet-3.5 model (anthropic, vertex, bedrock), including dedicated prompts.

|

||||

|

||||

<kbd><img src="https://codium.ai/images/pr_agent/toolbar1.png" width="512"></kbd>

|

||||

### June 17, 2024

|

||||

|

||||

<kbd><img src="https://codium.ai/images/pr_agent/toolbar2.png" width="512"></kbd>

|

||||

New option for a self-review checkbox is now available for the `/improve` tool, along with the ability(💎) to enable auto-approve, or demand self-review in addition to human reviewer. See more [here](https://pr-agent-docs.codium.ai/tools/improve/#self-review).

|

||||

|

||||

<kbd><img src="https://www.codium.ai/images/pr_agent/self_review_1.png" width="512"></kbd>

|

||||

|

||||

### April 14, 2024

|

||||

You can now ask questions about images that appear in the comment, where the entire PR is considered as the context.

|

||||

see [here](https://pr-agent-docs.codium.ai/tools/ask/#ask-on-images) for more details.

|

||||

### June 6, 2024

|

||||

|

||||

<kbd><img src="https://codium.ai/images/pr_agent/ask_images5.png" width="512"></kbd>

|

||||

New option now available (💎) - **apply suggestions**:

|

||||

|

||||

### March 24, 2024

|

||||

PR-Agent is now available for easy installation via [pip](https://pr-agent-docs.codium.ai/installation/locally/#using-pip-package).

|

||||

<kbd><img src="https://www.codium.ai/images/pr_agent/apply_suggestion_1.png" width="512"></kbd>

|

||||

|

||||

### March 17, 2024

|

||||

- A new feature is now available for the review tool: [`require_can_be_split_review`](https://pr-agent-docs.codium.ai/tools/review/#enabledisable-features).

|

||||

If set to true, the tool will add a section that checks if the PR contains several themes, and can be split into smaller PRs.

|

||||

→

|

||||

|

||||

<kbd><img src="https://codium.ai/images/pr_agent/multiple_pr_themes.png" width="512"></kbd>

|

||||

<kbd><img src="https://www.codium.ai/images/pr_agent/apply_suggestion_2.png" width="512"></kbd>

|

||||

|

||||

### March 10, 2024

|

||||

- A new [knowledge-base website](https://pr-agent-docs.codium.ai/) for PR-Agent is now available. It includes detailed information about the different tools, usage guides and more, in an accessible and organized format.

|

||||

|

||||

### March 8, 2024

|

||||

|

||||

- A new tool, [Find Similar Code](https://pr-agent-docs.codium.ai/tools/similar_code/) 💎 is now available.

|

||||

<br>This tool retrieves the most similar code components from inside the organization's codebase, or from open-source code:

|

||||

|

||||

<kbd><a href="https://codium.ai/images/pr_agent/similar_code.mp4"><img src="https://codium.ai/images/pr_agent/similar_code_global2.png" width="512"></a></kbd>

|

||||

|

||||

(click on the image to see an instructional video)

|

||||

|

||||

### Feb 29, 2024

|

||||

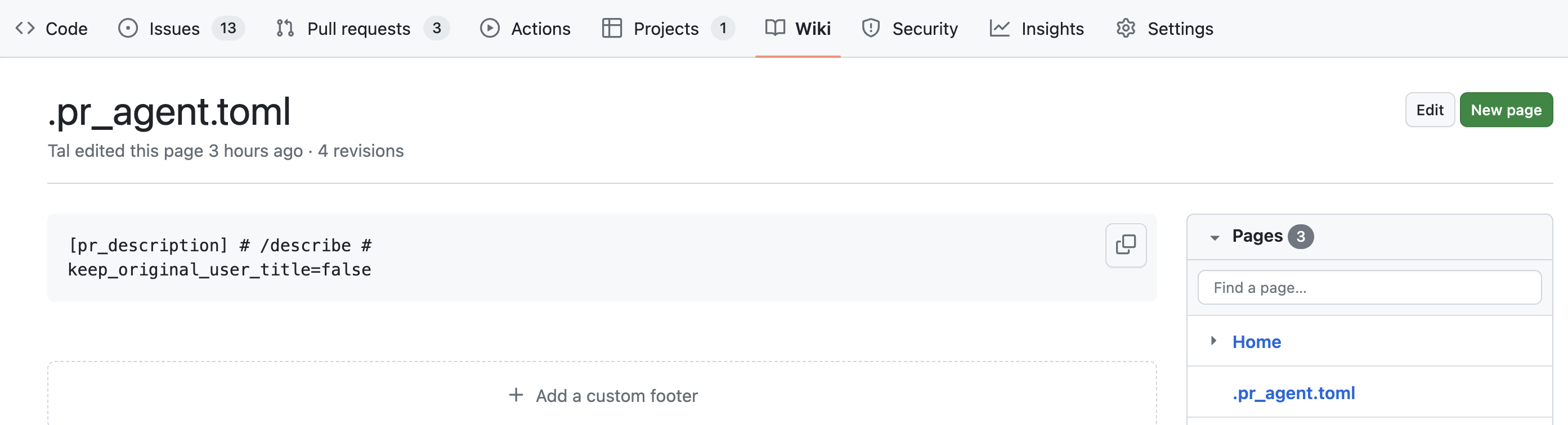

- You can now use the repo's [wiki page](https://pr-agent-docs.codium.ai/usage-guide/configuration_options/) to set configurations for PR-Agent 💎

|

||||

|

||||

<kbd><img src="https://codium.ai/images/pr_agent/wiki_configuration.png" width="512"></kbd>

|

||||

|

||||

|

||||

## Overview

|

||||

@ -89,40 +69,40 @@ If set to true, the tool will add a section that checks if the PR contains sever

|

||||

|

||||

Supported commands per platform:

|

||||

|

||||

| | | GitHub | Gitlab | Bitbucket | Azure DevOps |

|

||||

|-------|-------------------------------------------------------------------------------------------------------------------|:--------------------:|:--------------------:|:--------------------:|:--------------------:|

|

||||

| TOOLS | Review | ✅ | ✅ | ✅ | ✅ |

|

||||

| | ⮑ Incremental | ✅ | | | |

|

||||

| | | GitHub | Gitlab | Bitbucket | Azure DevOps |

|

||||

|-------|---------------------------------------------------------------------------------------------------------|:--------------------:|:--------------------:|:--------------------:|:--------------------:|

|

||||

| TOOLS | Review | ✅ | ✅ | ✅ | ✅ |

|

||||

| | ⮑ Incremental | ✅ | | | |

|

||||

| | ⮑ [SOC2 Compliance](https://pr-agent-docs.codium.ai/tools/review/#soc2-ticket-compliance) 💎 | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Describe | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Describe | ✅ | ✅ | ✅ | ✅ |

|

||||

| | ⮑ [Inline File Summary](https://pr-agent-docs.codium.ai/tools/describe#inline-file-summary) 💎 | ✅ | | | |

|

||||

| | Improve | ✅ | ✅ | ✅ | ✅ |

|

||||

| | ⮑ Extended | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Ask | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Improve | ✅ | ✅ | ✅ | ✅ |

|

||||

| | ⮑ Extended | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Ask | ✅ | ✅ | ✅ | ✅ |

|

||||

| | ⮑ [Ask on code lines](https://pr-agent-docs.codium.ai/tools/ask#ask-lines) | ✅ | ✅ | | |

|

||||

| | [Custom Suggestions](https://pr-agent-docs.codium.ai/tools/custom_suggestions/) 💎 | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Custom Prompt](https://pr-agent-docs.codium.ai/tools/custom_prompt/) 💎 | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Test](https://pr-agent-docs.codium.ai/tools/test/) 💎 | ✅ | ✅ | | ✅ |

|

||||

| | Reflect and Review | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Update CHANGELOG.md | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Find Similar Issue | ✅ | | | |

|

||||

| | Reflect and Review | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Update CHANGELOG.md | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Find Similar Issue | ✅ | | | |

|

||||

| | [Add PR Documentation](https://pr-agent-docs.codium.ai/tools/documentation/) 💎 | ✅ | ✅ | | ✅ |

|

||||

| | [Custom Labels](https://pr-agent-docs.codium.ai/tools/custom_labels/) 💎 | ✅ | ✅ | | ✅ |

|

||||

| | [Analyze](https://pr-agent-docs.codium.ai/tools/analyze/) 💎 | ✅ | ✅ | | ✅ |

|

||||

| | [CI Feedback](https://pr-agent-docs.codium.ai/tools/ci_feedback/) 💎 | ✅ | | | |

|

||||

| | [Similar Code](https://pr-agent-docs.codium.ai/tools/similar_code/) 💎 | ✅ | | | |

|

||||

| | | | | | |

|

||||

| USAGE | CLI | ✅ | ✅ | ✅ | ✅ |

|

||||

| | App / webhook | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Tagging bot | ✅ | | | |

|

||||

| | Actions | ✅ | | ✅ | |

|

||||

| | | | | | |

|

||||

| CORE | PR compression | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Repo language prioritization | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Adaptive and token-aware file patch fitting | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Multiple models support | ✅ | ✅ | ✅ | ✅ |

|

||||

| | | | | | |

|

||||

| USAGE | CLI | ✅ | ✅ | ✅ | ✅ |

|

||||

| | App / webhook | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Tagging bot | ✅ | | | |

|

||||

| | Actions | ✅ | | ✅ | |

|

||||

| | | | | | |

|

||||

| CORE | PR compression | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Repo language prioritization | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Adaptive and token-aware file patch fitting | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Multiple models support | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Static code analysis](https://pr-agent-docs.codium.ai/core-abilities/#static-code-analysis) 💎 | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Global and wiki configurations](https://pr-agent-docs.codium.ai/usage-guide/configuration_options/) 💎 | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [PR interactive actions](https://www.codium.ai/images/pr_agent/pr-actions.mp4) 💎 | ✅ | | | |

|

||||

| | [PR interactive actions](https://www.codium.ai/images/pr_agent/pr-actions.mp4) 💎 | ✅ | | | |

|

||||

- 💎 means this feature is available only in [PR-Agent Pro](https://www.codium.ai/pricing/)

|

||||

|

||||

[//]: # (- Support for additional git providers is described in [here](./docs/Full_environments.md))

|

||||

@ -146,7 +126,7 @@ ___

|

||||

\

|

||||

‣ **Analyze 💎 ([`/analyze`](https://pr-agent-docs.codium.ai/tools/analyze/))**: Identify code components that changed in the PR, and enables to interactively generate tests, docs, and code suggestions for each component.

|

||||

\

|

||||

‣ **Custom Suggestions 💎 ([`/custom_suggestions`](https://pr-agent-docs.codium.ai/tools/custom_suggestions/))**: Automatically generates custom suggestions for improving the PR code, based on specific guidelines defined by the user.

|

||||

‣ **Custom Prompt 💎 ([`/custom_prompt`](https://pr-agent-docs.codium.ai/tools/custom_prompt/))**: Automatically generates custom suggestions for improving the PR code, based on specific guidelines defined by the user.

|

||||

\

|

||||

‣ **Generate Tests 💎 ([`/test component_name`](https://pr-agent-docs.codium.ai/tools/test/))**: Generates unit tests for a selected component, based on the PR code changes.

|

||||

\

|

||||

@ -321,11 +301,22 @@ Here are some advantages of PR-Agent:

|

||||

|

||||

## Data privacy

|

||||

|

||||

If you host PR-Agent with your OpenAI API key, it is between you and OpenAI. You can read their API data privacy policy here:

|

||||

### Self-hosted PR-Agent

|

||||

|

||||

- If you host PR-Agent with your OpenAI API key, it is between you and OpenAI. You can read their API data privacy policy here:

|

||||

https://openai.com/enterprise-privacy

|

||||

|

||||

When using PR-Agent Pro 💎, hosted by CodiumAI, we will not store any of your data, nor will we use it for training.

|

||||

You will also benefit from an OpenAI account with zero data retention.

|

||||

### CodiumAI-hosted PR-Agent Pro 💎

|

||||

|

||||

- When using PR-Agent Pro 💎, hosted by CodiumAI, we will not store any of your data, nor will we use it for training. You will also benefit from an OpenAI account with zero data retention.

|

||||

|

||||

- For certain clients, CodiumAI-hosted PR-Agent Pro will use CodiumAI’s proprietary models — if this is the case, you will be notified.

|

||||

|

||||

- No passive collection of Code and Pull Requests’ data — PR-Agent will be active only when you invoke it, and it will then extract and analyze only data relevant to the executed command and queried pull request.

|

||||

|

||||

### PR-Agent Chrome extension

|

||||

|

||||

- The [PR-Agent Chrome extension](https://chromewebstore.google.com/detail/pr-agent-chrome-extension/ephlnjeghhogofkifjloamocljapahnl) serves solely to modify the visual appearance of a GitHub PR screen. It does not transmit any user's repo or pull request code. Code is only sent for processing when a user submits a GitHub comment that activates a PR-Agent tool, in accordance with the standard privacy policy of PR-Agent.

|

||||

|

||||

## Links

|

||||

|

||||

|

||||

@ -8,7 +8,7 @@ ENV PYTHONPATH=/app

|

||||

|

||||

FROM base as github_app

|

||||

ADD pr_agent pr_agent

|

||||

CMD ["python", "pr_agent/servers/github_app.py"]

|

||||

CMD ["python", "-m", "gunicorn", "-k", "uvicorn.workers.UvicornWorker", "-c", "pr_agent/servers/gunicorn_config.py", "--forwarded-allow-ips", "*", "pr_agent.servers.github_app:app"]

|

||||

|

||||

FROM base as bitbucket_app

|

||||

ADD pr_agent pr_agent

|

||||

|

||||

49

docs/docs/chrome-extension/index.md

Normal file

49

docs/docs/chrome-extension/index.md

Normal file

@ -0,0 +1,49 @@

|

||||

## PR-Agent chrome extension

|

||||

PR-Agent Chrome extension is a collection of tools that integrates seamlessly with your GitHub environment, aiming to enhance your PR-Agent usage experience, and providing additional features.

|

||||

|

||||

## Features

|

||||

|

||||

### Toolbar extension

|

||||

With PR-Agent Chrome extension, it's [easier than ever](https://www.youtube.com/watch?v=gT5tli7X4H4) to interactively configure and experiment with the different tools and configuration options.

|

||||

|

||||

After you found the setup that works for you, you can also easily export it as a persistent configuration file, and use it for automatic commands.

|

||||

|

||||

<img src="https://codium.ai/images/pr_agent/toolbar1.png" width="512">

|

||||

|

||||

<img src="https://codium.ai/images/pr_agent/toolbar2.png" width="512">

|

||||

|

||||

### PR-Agent filters

|

||||

|

||||

PR-Agent filters is a sidepanel option. that allows you to filter different message in the conversation tab.

|

||||

|

||||

For example, you can choose to present only message from PR-Agent, or filter those messages, focusing only on user's comments.

|

||||

|

||||

<img src="https://codium.ai/images/pr_agent/pr_agent_filters1.png" width="256">

|

||||

|

||||

<img src="https://codium.ai/images/pr_agent/pr_agent_filters2.png" width="256">

|

||||

|

||||

|

||||

### Enhanced code suggestions

|

||||

|

||||

PR-Agent Chrome extension adds the following capabilities to code suggestions tool's comments:

|

||||

|

||||

- Auto-expand the table when you are viewing a code block, to avoid clipping.

|

||||

- Adding a "quote-and-reply" button, that enables to address and comment on a specific suggestion (for example, asking the author to fix the issue)

|

||||

|

||||

|

||||

<img src="https://codium.ai/images/pr_agent/chrome_extension_code_suggestion1.png" width="512">

|

||||

|

||||

<img src="https://codium.ai/images/pr_agent/chrome_extension_code_suggestion2.png" width="512">

|

||||

|

||||

## Installation

|

||||

|

||||

Go to the marketplace and install the extension:

|

||||

[PR-Agent Chrome Extension](https://chromewebstore.google.com/detail/pr-agent-chrome-extension/ephlnjeghhogofkifjloamocljapahnl)

|

||||

|

||||

## Pre-requisites

|

||||

|

||||

The PR-Agent Chrome extension will work on any repo where you have previously [installed PR-Agent](https://pr-agent-docs.codium.ai/installation/).

|

||||

|

||||

## Data privacy and security

|

||||

|

||||

The PR-Agent Chrome extension only modifies the visual appearance of a GitHub PR screen. It does not transmit any user's repo or pull request code. Code is only sent for processing when a user submits a GitHub comment that activates a PR-Agent tool, in accordance with the standard privacy policy of PR-Agent.

|

||||

@ -4,12 +4,21 @@

|

||||

--md-primary-fg-color: #765bfa;

|

||||

--md-accent-fg-color: #AEA1F1;

|

||||

}

|

||||

.md-nav__title, .md-nav__link {

|

||||

font-size: 16px;

|

||||

|

||||

.md-nav--primary {

|

||||

.md-nav__link {

|

||||

font-size: 18px; /* Change the font size as per your preference */

|

||||

}

|

||||

}

|

||||

|

||||

/*.md-nav__title, .md-nav__link {*/

|

||||

/* font-size: 18px;*/

|

||||

/* margin-top: 14px; !* Adjust the space as needed *!*/

|

||||

/* margin-bottom: 14px; !* Adjust the space as needed *!*/

|

||||

/*}*/

|

||||

|

||||

.md-tabs__link {

|

||||

font-size: 16px;

|

||||

font-size: 18px;

|

||||

}

|

||||

|

||||

.md-header__title {

|

||||

|

||||

92

docs/docs/finetuning_benchmark/index.md

Normal file

92

docs/docs/finetuning_benchmark/index.md

Normal file

@ -0,0 +1,92 @@

|

||||

# PR-Agent Code Fine-tuning Benchmark

|

||||

|

||||

On coding tasks, the gap between open-source models and top closed-source models such as GPT4 is significant.

|

||||

<br>

|

||||

In practice, open-source models are unsuitable for most real-world code tasks, and require further fine-tuning to produce acceptable results.

|

||||

|

||||

_PR-Agent fine-tuning benchmark_ aims to benchmark open-source models on their ability to be fine-tuned for a coding task.

|

||||

Specifically, we chose to fine-tune open-source models on the task of analyzing a pull request, and providing useful feedback and code suggestions.

|

||||

|

||||

Here are the results:

|

||||

<br>

|

||||

<br>

|

||||

|

||||

**Model performance:**

|

||||

|

||||

| Model name | Model size [B] | Better than gpt-4 rate, after fine-tuning [%] |

|

||||

|-----------------------------|----------------|----------------------------------------------|

|

||||

| **DeepSeek 34B-instruct** | **34** | **40.7** |

|

||||

| DeepSeek 34B-base | 34 | 38.2 |

|

||||

| Phind-34b | 34 | 38 |

|

||||

| Granite-34B | 34 | 37.6 |

|

||||

| Codestral-22B-v0.1 | 22 | 32.7 |

|

||||

| QWEN-1.5-32B | 32 | 29 |

|

||||

| | | |

|

||||

| **CodeQwen1.5-7B** | **7** | **35.4** |

|

||||

| Granite-8b-code-instruct | 8 | 34.2 |

|

||||

| CodeLlama-7b-hf | 7 | 31.8 |

|

||||

| Gemma-7B | 7 | 27.2 |

|

||||

| DeepSeek coder-7b-instruct | 7 | 26.8 |

|

||||

| Llama-3-8B-Instruct | 8 | 26.8 |

|

||||

| Mistral-7B-v0.1 | 7 | 16.1 |

|

||||

|

||||

<br>

|

||||

|

||||

**Fine-tuning impact:**

|

||||

|

||||

| Model name | Model size [B] | Fine-tuned | Better than gpt-4 rate [%] |

|

||||

|---------------------------|----------------|------------|----------------------------|

|

||||

| DeepSeek 34B-instruct | 34 | yes | 40.7 |

|

||||

| DeepSeek 34B-instruct | 34 | no | 3.6 |

|

||||

|

||||

## Results analysis

|

||||

|

||||

- **Fine-tuning is a must** - without fine-tuning, open-source models provide poor results on most real-world code tasks, which include complicated prompt and lengthy context. We clearly see that without fine-tuning, deepseek model was 96.4% of the time inferior to GPT-4, while after fine-tuning, it is better 40.7% of the time.

|

||||

- **Always start from a code-dedicated model** — When fine-tuning, always start from a code-dedicated model, and not from a general-usage model. The gaps in downstream results are very big.

|

||||

- **Don't believe the hype** —newer models, or models from big-tech companies (Llama3, Gemma, Mistral), are not always better for fine-tuning.

|

||||

- **The best large model** - For large 34B code-dedicated models, the gaps when doing proper fine-tuning are small. The current top model is **DeepSeek 34B-instruct**

|

||||

- **The best small model** - For small 7B code-dedicated models, the gaps when fine-tuning are much larger. **CodeQWEN 1.5-7B** is by far the best model for fine-tuning.

|

||||

- **Base vs. instruct** - For the top model (deepseek), we saw small advantage when starting from the instruct version. However, we recommend testing both versions on each specific task, as the base model is generally considered more suitable for fine-tuning.

|

||||

|

||||

## The dataset

|

||||

|

||||

### Training dataset

|

||||

|

||||

Our training dataset comprises 25,000 pull requests, aggregated from permissive license repos. For each pull request, we generated responses for the three main tools of PR-Agent:

|

||||

[Describe](https://pr-agent-docs.codium.ai/tools/describe/), [Review](https://pr-agent-docs.codium.ai/tools/improve/) and [Improve](https://pr-agent-docs.codium.ai/tools/improve/).

|

||||

|

||||

On the raw data collected, we employed various automatic and manual cleaning techniques to ensure the outputs were of the highest quality, and suitable for instruct-tuning.

|

||||

|

||||

Here are the prompts, and example outputs, used as input-output pairs to fine-tune the models:

|

||||

|

||||

| Tool | Prompt | Example output |

|

||||

|----------|------------------------------------------------------------------------------------------------------------|----------------|

|

||||

| Describe | [link](https://github.com/Codium-ai/pr-agent/blob/main/pr_agent/settings/pr_description_prompts.toml) | [link](https://github.com/Codium-ai/pr-agent/pull/910#issue-2303989601) |

|

||||

| Review | [link](https://github.com/Codium-ai/pr-agent/blob/main/pr_agent/settings/pr_reviewer_prompts.toml) | [link](https://github.com/Codium-ai/pr-agent/pull/910#issuecomment-2118761219) |

|

||||

| Improve | [link](https://github.com/Codium-ai/pr-agent/blob/main/pr_agent/settings/pr_code_suggestions_prompts.toml) | [link](https://github.com/Codium-ai/pr-agent/pull/910#issuecomment-2118761309) |

|

||||

|

||||

### Evaluation dataset

|

||||

|

||||

- For each tool, we aggregated 100 additional examples to be used for evaluation. These examples were not used in the training dataset, and were manually selected to represent diverse real-world use-cases.

|

||||

- For each test example, we generated two responses: one from the fine-tuned model, and one from the best code model in the world, `gpt-4-turbo-2024-04-09`.

|

||||

|

||||

- We used a third LLM to judge which response better answers the prompt, and will likely be perceived by a human as better response.

|

||||

<br>

|

||||

|

||||

We experimented with three model as judges: `gpt-4-turbo-2024-04-09`, `gpt-4o`, and `claude-3-opus-20240229`. All three produced similar results, with the same ranking order. This strengthens the validity of our testing protocol.

|

||||

The evaluation prompt can be found [here](https://github.com/Codium-ai/pr-agent/blob/main/pr_agent/settings/pr_evaluate_prompt_response.toml)

|

||||

|

||||

Here is an example of a judge model feedback:

|

||||

|

||||

```

|

||||

command: improve

|

||||

model1_score: 9,

|

||||

model2_score: 6,

|

||||

why: |

|

||||

Response 1 is better because it provides more actionable and specific suggestions that directly

|

||||

enhance the code's maintainability, performance, and best practices. For example, it suggests

|

||||

using a variable for reusable widget instances and using named routes for navigation, which

|

||||

are practical improvements. In contrast, Response 2 focuses more on general advice and less

|

||||

actionable suggestions, such as changing variable names and adding comments, which are less

|

||||

critical for immediate code improvement."

|

||||

```

|

||||

@ -12,35 +12,35 @@ CodiumAI PR-Agent is an open-source tool to help efficiently review and handle p

|

||||

## PR-Agent Features

|

||||

PR-Agent offers extensive pull request functionalities across various git providers.

|

||||

|

||||

| | | GitHub | Gitlab | Bitbucket | Azure DevOps |

|

||||

|-------|---------------------------------------------------------------------------------------------------------------------|:------:|:------:|:---------:|:------------:|

|

||||

| TOOLS | Review | ✅ | ✅ | ✅ | ✅ |

|

||||

| | ⮑ Incremental | ✅ | | | |

|

||||

| | ⮑ [SOC2 Compliance](https://pr-agent-docs.codium.ai/tools/review/#soc2-ticket-compliance){:target="_blank"} 💎 | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Ask | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Describe | ✅ | ✅ | ✅ | ✅ |

|

||||

| | ⮑ [Inline file summary](https://pr-agent-docs.codium.ai/tools/describe/#inline-file-summary){:target="_blank"} 💎 | ✅ | ✅ | | ✅ |

|

||||

| | Improve | ✅ | ✅ | ✅ | ✅ |

|

||||

| | ⮑ Extended | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Custom Suggestions](./tools/custom_suggestions.md){:target="_blank"} 💎 | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Reflect and Review | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Update CHANGELOG.md | ✅ | ✅ | ✅ | ️ |

|

||||

| | Find Similar Issue | ✅ | | | ️ |

|

||||

| | [Add PR Documentation](./tools/documentation.md){:target="_blank"} 💎 | ✅ | ✅ | | ✅ |

|

||||

| | | GitHub | Gitlab | Bitbucket | Azure DevOps |

|

||||

|-------|-----------------------------------------------------------------------------------------------------------------------|:------:|:------:|:---------:|:------------:|

|

||||

| TOOLS | Review | ✅ | ✅ | ✅ | ✅ |

|

||||

| | ⮑ Incremental | ✅ | | | |

|

||||

| | ⮑ [SOC2 Compliance](https://pr-agent-docs.codium.ai/tools/review/#soc2-ticket-compliance){:target="_blank"} 💎 | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Ask | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Describe | ✅ | ✅ | ✅ | ✅ |

|

||||

| | ⮑ [Inline file summary](https://pr-agent-docs.codium.ai/tools/describe/#inline-file-summary){:target="_blank"} 💎 | ✅ | ✅ | | ✅ |

|

||||

| | Improve | ✅ | ✅ | ✅ | ✅ |

|

||||

| | ⮑ Extended | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Custom Prompt](./tools/custom_prompt.md){:target="_blank"} 💎 | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Reflect and Review | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Update CHANGELOG.md | ✅ | ✅ | ✅ | ️ |

|

||||

| | Find Similar Issue | ✅ | | | ️ |

|

||||

| | [Add PR Documentation](./tools/documentation.md){:target="_blank"} 💎 | ✅ | ✅ | | ✅ |

|

||||

| | [Generate Custom Labels](./tools/describe.md#handle-custom-labels-from-the-repos-labels-page-💎){:target="_blank"} 💎 | ✅ | ✅ | | ✅ |

|

||||

| | [Analyze PR Components](./tools/analyze.md){:target="_blank"} 💎 | ✅ | ✅ | | ✅ |

|

||||

| | | | | | ️ |

|

||||

| USAGE | CLI | ✅ | ✅ | ✅ | ✅ |

|

||||

| | App / webhook | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Actions | ✅ | | | ️ |

|

||||

| | | | | |

|

||||

| CORE | PR compression | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Repo language prioritization | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Adaptive and token-aware file patch fitting | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Multiple models support | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Incremental PR review | ✅ | | | |

|

||||

| | [Static code analysis](./tools/analyze.md/){:target="_blank"} 💎 | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Multiple configuration options](./usage-guide/configuration_options.md){:target="_blank"} 💎 | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Analyze PR Components](./tools/analyze.md){:target="_blank"} 💎 | ✅ | ✅ | | ✅ |

|

||||

| | | | | | ️ |

|

||||

| USAGE | CLI | ✅ | ✅ | ✅ | ✅ |

|

||||

| | App / webhook | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Actions | ✅ | | | ️ |

|

||||

| | | | | |

|

||||

| CORE | PR compression | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Repo language prioritization | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Adaptive and token-aware file patch fitting | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Multiple models support | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Incremental PR review | ✅ | | | |

|

||||

| | [Static code analysis](./tools/analyze.md/){:target="_blank"} 💎 | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Multiple configuration options](./usage-guide/configuration_options.md){:target="_blank"} 💎 | ✅ | ✅ | ✅ | ✅ |

|

||||

|

||||

💎 marks a feature available only in [PR-Agent Pro](https://www.codium.ai/pricing/){:target="_blank"}

|

||||

|

||||

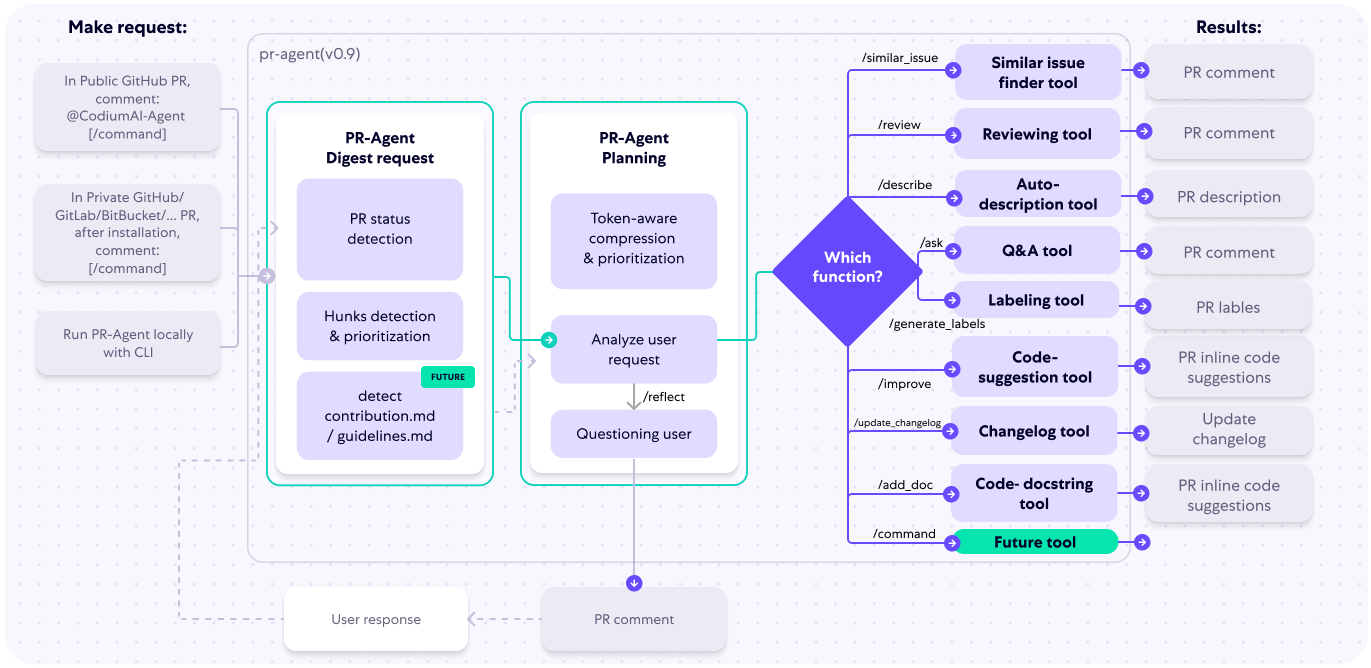

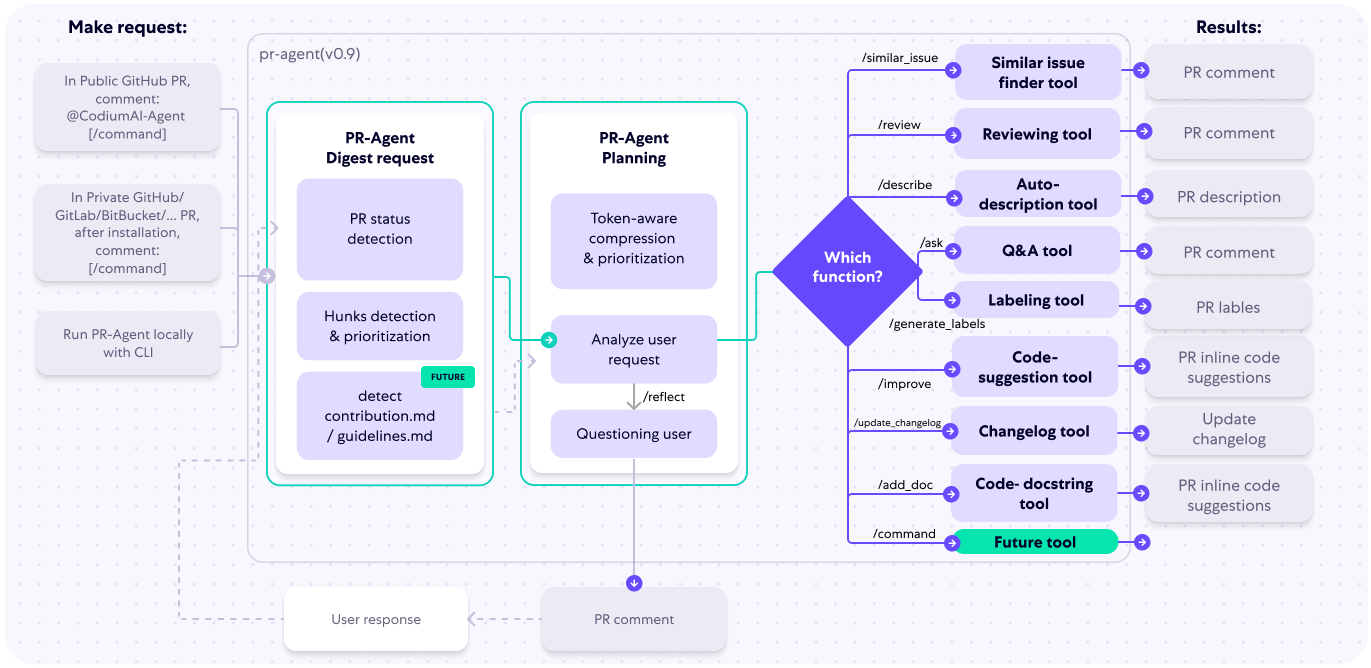

@ -79,35 +79,3 @@ The following diagram illustrates PR-Agent tools and their flow:

|

||||

|

||||

|

||||

Check out the [PR Compression strategy](core-abilities/index.md) page for more details on how we convert a code diff to a manageable LLM prompt

|

||||

|

||||

|

||||

|

||||

## PR-Agent Pro 💎

|

||||

|

||||

[PR-Agent Pro](https://www.codium.ai/pricing/) is a hosted version of PR-Agent, provided by CodiumAI. It is available for a monthly fee, and provides the following benefits:

|

||||

|

||||

1. **Fully managed** - We take care of everything for you - hosting, models, regular updates, and more. Installation is as simple as signing up and adding the PR-Agent app to your GitHub\GitLab\BitBucket repo.

|

||||

2. **Improved privacy** - No data will be stored or used to train models. PR-Agent Pro will employ zero data retention, and will use an OpenAI account with zero data retention.

|

||||

3. **Improved support** - PR-Agent Pro users will receive priority support, and will be able to request new features and capabilities.

|

||||

4. **Extra features** -In addition to the benefits listed above, PR-Agent Pro will emphasize more customization, and the usage of static code analysis, in addition to LLM logic, to improve results. It has the following additional tools and features:

|

||||

- (Tool): [**Analyze PR components**](./tools/analyze.md/)

|

||||

- (Tool): [**Custom Code Suggestions**](./tools/custom_suggestions.md/)

|

||||

- (Tool): [**Tests**](./tools/test.md/)

|

||||

- (Tool): [**PR documentation**](./tools/documentation.md/)

|

||||

- (Tool): [**Improve Component**](https://pr-agent-docs.codium.ai/tools/improve_component/)

|

||||

- (Tool): [**Similar code search**](https://pr-agent-docs.codium.ai/tools/similar_code/)

|

||||

- (Tool): [**CI feedback**](./tools/ci_feedback.md/)

|

||||

- (Feature): [**Interactive triggering**](./usage-guide/automations_and_usage.md/#interactive-triggering)

|

||||

- (Feature): [**SOC2 compliance check**](./tools/review.md/#soc2-ticket-compliance)

|

||||

- (Feature): [**Custom labels**](./tools/describe.md/#handle-custom-labels-from-the-repos-labels-page)

|

||||

- (Feature): [**Global and wiki configuration**](./usage-guide/configuration_options.md/#wiki-configuration-file)

|

||||

- (Feature): [**Inline file summary**](https://pr-agent-docs.codium.ai/tools/describe/#inline-file-summary)

|

||||

|

||||

|

||||

## Data Privacy

|

||||

|

||||

If you host PR-Agent with your OpenAI API key, it is between you and OpenAI. You can read their API data privacy policy here:

|

||||

https://openai.com/enterprise-privacy

|

||||

|

||||

When using PR-Agent Pro 💎, hosted by CodiumAI, we will not store any of your data, nor will we use it for training.

|

||||

You will also benefit from an OpenAI account with zero data retention.

|

||||

|

||||

17

docs/docs/overview/data_privacy.md

Normal file

17

docs/docs/overview/data_privacy.md

Normal file

@ -0,0 +1,17 @@

|

||||

## Self-hosted PR-Agent

|

||||

|

||||

- If you host PR-Agent with your OpenAI API key, it is between you and OpenAI. You can read their API data privacy policy here:

|

||||

https://openai.com/enterprise-privacy

|

||||

|

||||

## PR-Agent Pro 💎

|

||||

|

||||

- When using PR-Agent Pro 💎, hosted by CodiumAI, we will not store any of your data, nor will we use it for training. You will also benefit from an OpenAI account with zero data retention.

|

||||

|

||||

- For certain clients, CodiumAI-hosted PR-Agent Pro will use CodiumAI’s proprietary models. If this is the case, you will be notified.

|

||||

|

||||

- No passive collection of Code and Pull Requests’ data — PR-Agent will be active only when you invoke it, and it will then extract and analyze only data relevant to the executed command and queried pull request.

|

||||

|

||||

|

||||

## PR-Agent Chrome extension

|

||||

|

||||

- The [PR-Agent Chrome extension](https://chromewebstore.google.com/detail/pr-agent-chrome-extension/ephlnjeghhogofkifjloamocljapahnl) serves solely to modify the visual appearance of a GitHub PR screen. It does not transmit any user's repo or pull request code. Code is only sent for processing when a user submits a GitHub comment that activates a PR-Agent tool, in accordance with the standard privacy policy of PR-Agent.

|

||||

81

docs/docs/overview/index.md

Normal file

81

docs/docs/overview/index.md

Normal file

@ -0,0 +1,81 @@

|

||||

# Overview

|

||||

|

||||

CodiumAI PR-Agent is an open-source tool to help efficiently review and handle pull requests.

|

||||

|

||||

- See the [Installation Guide](./installation/index.md) for instructions on installing and running the tool on different git platforms.

|

||||

|

||||

- See the [Usage Guide](./usage-guide/index.md) for instructions on running the PR-Agent commands via different interfaces, including _CLI_, _online usage_, or by _automatically triggering_ them when a new PR is opened.

|

||||

|

||||

- See the [Tools Guide](./tools/index.md) for a detailed description of the different tools.

|

||||

|

||||

|

||||

## PR-Agent Features

|

||||

PR-Agent offers extensive pull request functionalities across various git providers.

|

||||

|

||||

| | | GitHub | Gitlab | Bitbucket | Azure DevOps |

|

||||

|-------|-----------------------------------------------------------------------------------------------------------------------|:------:|:------:|:---------:|:------------:|

|

||||

| TOOLS | Review | ✅ | ✅ | ✅ | ✅ |

|

||||

| | ⮑ Incremental | ✅ | | | |

|

||||

| | ⮑ [SOC2 Compliance](https://pr-agent-docs.codium.ai/tools/review/#soc2-ticket-compliance){:target="_blank"} 💎 | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Ask | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Describe | ✅ | ✅ | ✅ | ✅ |

|

||||

| | ⮑ [Inline file summary](https://pr-agent-docs.codium.ai/tools/describe/#inline-file-summary){:target="_blank"} 💎 | ✅ | ✅ | | ✅ |

|

||||

| | Improve | ✅ | ✅ | ✅ | ✅ |

|

||||

| | ⮑ Extended | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Custom Prompt](./tools/custom_prompt.md){:target="_blank"} 💎 | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Reflect and Review | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Update CHANGELOG.md | ✅ | ✅ | ✅ | ️ |

|

||||

| | Find Similar Issue | ✅ | | | ️ |

|

||||

| | [Add PR Documentation](./tools/documentation.md){:target="_blank"} 💎 | ✅ | ✅ | | ✅ |

|

||||

| | [Generate Custom Labels](./tools/describe.md#handle-custom-labels-from-the-repos-labels-page-💎){:target="_blank"} 💎 | ✅ | ✅ | | ✅ |

|

||||

| | [Analyze PR Components](./tools/analyze.md){:target="_blank"} 💎 | ✅ | ✅ | | ✅ |

|

||||

| | | | | | ️ |

|

||||

| USAGE | CLI | ✅ | ✅ | ✅ | ✅ |

|

||||

| | App / webhook | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Actions | ✅ | | | ️ |

|

||||

| | | | | |

|

||||

| CORE | PR compression | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Repo language prioritization | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Adaptive and token-aware file patch fitting | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Multiple models support | ✅ | ✅ | ✅ | ✅ |

|

||||

| | Incremental PR review | ✅ | | | |

|

||||

| | [Static code analysis](./tools/analyze.md/){:target="_blank"} 💎 | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Multiple configuration options](./usage-guide/configuration_options.md){:target="_blank"} 💎 | ✅ | ✅ | ✅ | ✅ |

|

||||

|

||||

💎 marks a feature available only in [PR-Agent Pro](https://www.codium.ai/pricing/){:target="_blank"}

|

||||

|

||||

|

||||

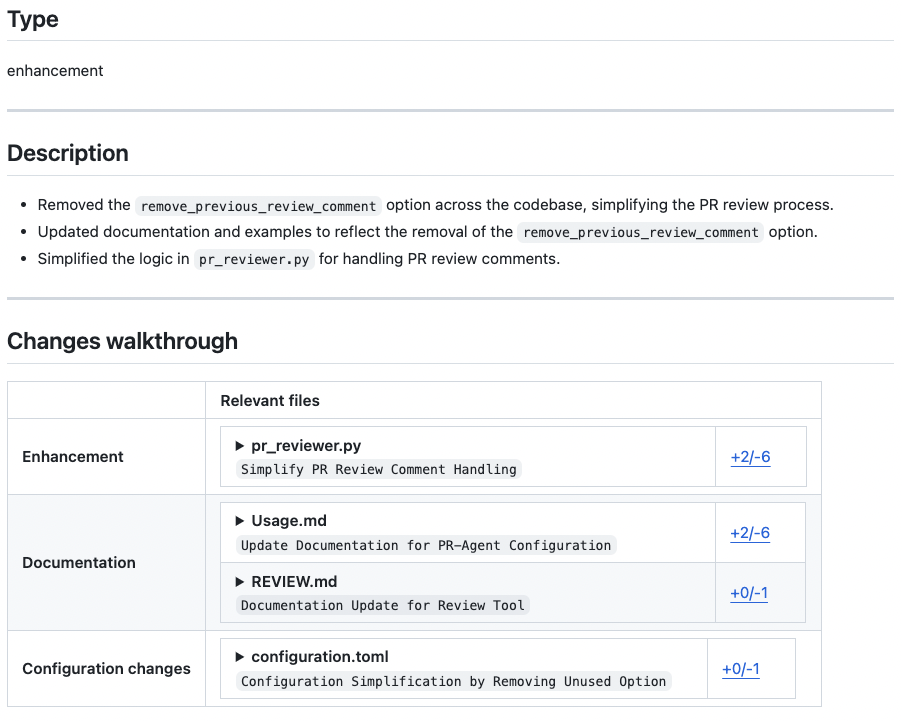

## Example Results

|

||||

<hr>

|

||||

|

||||

#### [/describe](https://github.com/Codium-ai/pr-agent/pull/530)

|

||||

<figure markdown="1">

|

||||

{width=512}

|

||||

</figure>

|

||||

<hr>

|

||||

|

||||

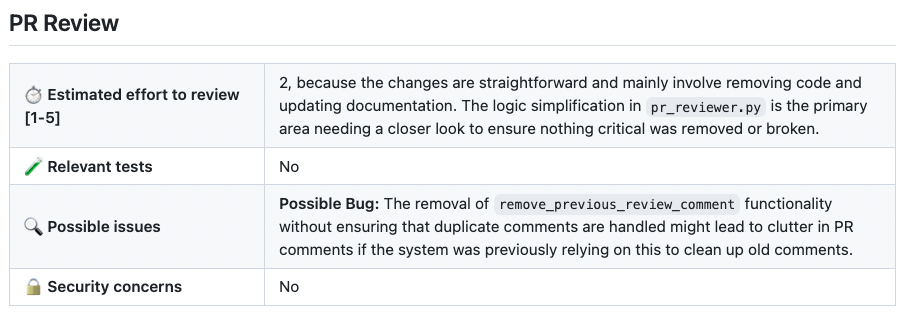

#### [/review](https://github.com/Codium-ai/pr-agent/pull/732#issuecomment-1975099151)

|

||||

<figure markdown="1">

|

||||

{width=512}

|

||||

</figure>

|

||||

<hr>

|

||||

|

||||

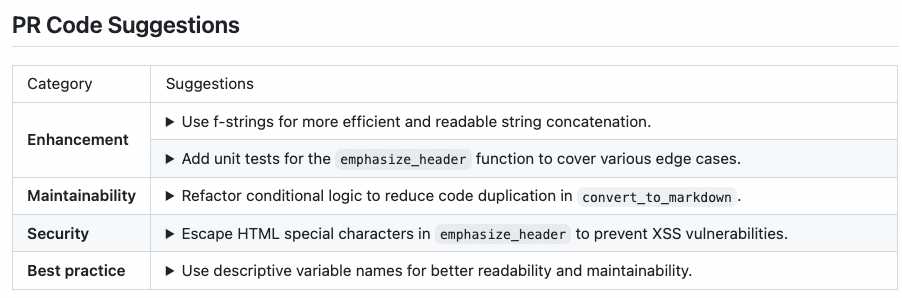

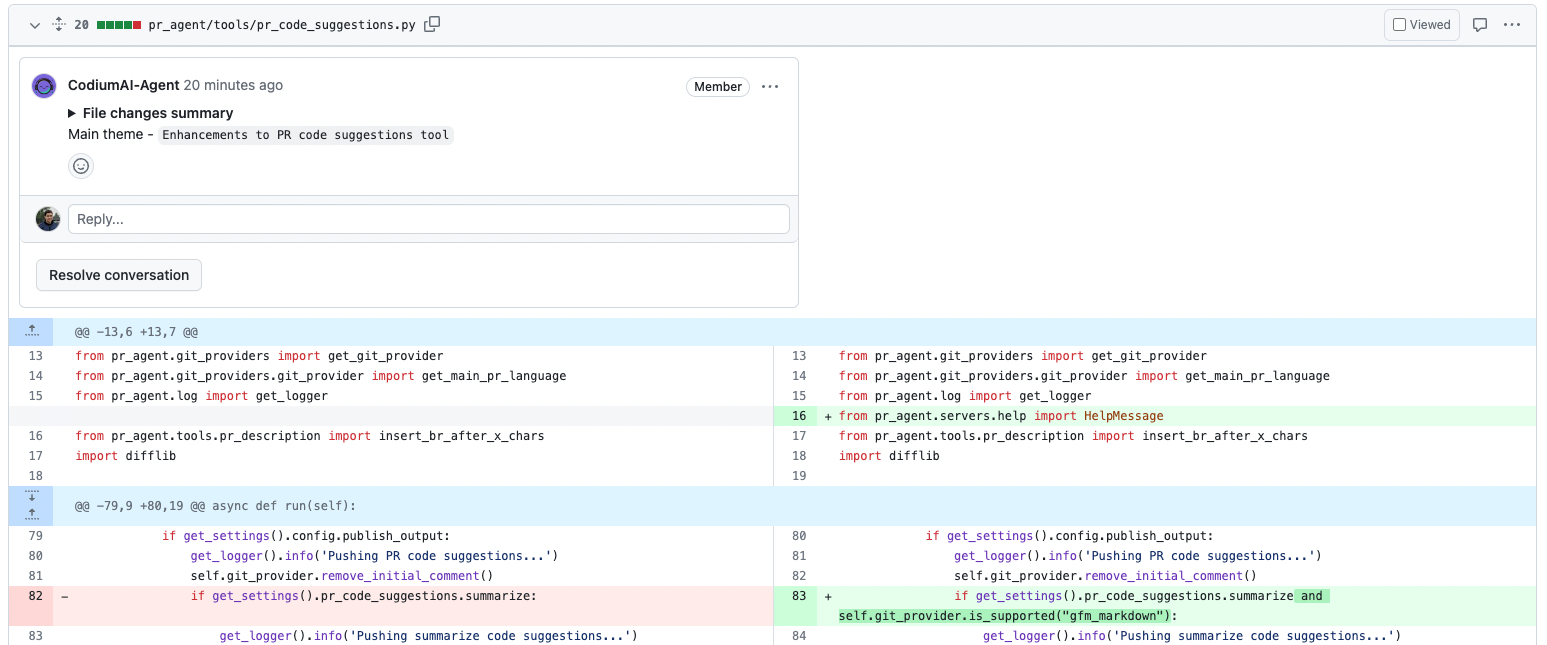

#### [/improve](https://github.com/Codium-ai/pr-agent/pull/732#issuecomment-1975099159)

|

||||

<figure markdown="1">

|

||||

{width=512}

|

||||

</figure>

|

||||

<hr>

|

||||

|

||||

#### [/generate_labels](https://github.com/Codium-ai/pr-agent/pull/530)

|

||||

<figure markdown="1">

|

||||

{width=300}

|

||||

</figure>

|

||||

<hr>

|

||||

|

||||

## How it Works

|

||||

|

||||

The following diagram illustrates PR-Agent tools and their flow:

|

||||

|

||||

|

||||

|

||||

Check out the [PR Compression strategy](core-abilities/index.md) page for more details on how we convert a code diff to a manageable LLM prompt

|

||||

18

docs/docs/overview/pr_agent_pro.md

Normal file

18

docs/docs/overview/pr_agent_pro.md

Normal file

@ -0,0 +1,18 @@

|

||||

[PR-Agent Pro](https://www.codium.ai/pricing/) is a hosted version of PR-Agent, provided by CodiumAI. It is available for a monthly fee, and provides the following benefits:

|

||||

|

||||

1. **Fully managed** - We take care of everything for you - hosting, models, regular updates, and more. Installation is as simple as signing up and adding the PR-Agent app to your GitHub\GitLab\BitBucket repo.

|

||||

2. **Improved privacy** - No data will be stored or used to train models. PR-Agent Pro will employ zero data retention, and will use an OpenAI account with zero data retention.

|

||||

3. **Improved support** - PR-Agent Pro users will receive priority support, and will be able to request new features and capabilities.

|

||||

4. **Extra features** -In addition to the benefits listed above, PR-Agent Pro will emphasize more customization, and the usage of static code analysis, in addition to LLM logic, to improve results. It has the following additional tools and features:

|

||||

- (Tool): [**Analyze PR components**](./tools/analyze.md/)

|

||||

- (Tool): [**Custom Prompt Suggestions**](./tools/custom_prompt.md/)

|

||||

- (Tool): [**Tests**](./tools/test.md/)

|

||||

- (Tool): [**PR documentation**](./tools/documentation.md/)

|

||||

- (Tool): [**Improve Component**](https://pr-agent-docs.codium.ai/tools/improve_component/)

|

||||

- (Tool): [**Similar code search**](https://pr-agent-docs.codium.ai/tools/similar_code/)

|

||||

- (Tool): [**CI feedback**](./tools/ci_feedback.md/)

|

||||

- (Feature): [**Interactive triggering**](./usage-guide/automations_and_usage.md/#interactive-triggering)

|

||||

- (Feature): [**SOC2 compliance check**](./tools/review.md/#soc2-ticket-compliance)

|

||||

- (Feature): [**Custom labels**](./tools/describe.md/#handle-custom-labels-from-the-repos-labels-page)

|

||||

- (Feature): [**Global and wiki configuration**](./usage-guide/configuration_options.md/#wiki-configuration-file)

|

||||

- (Feature): [**Inline file summary**](https://pr-agent-docs.codium.ai/tools/describe/#inline-file-summary)

|

||||

@ -1,14 +1,14 @@

|

||||

## Overview

|

||||

The `custom_suggestions` tool scans the PR code changes, and automatically generates suggestions for improving the PR code.

|

||||

It shares similarities with the `improve` tool, but with one main difference: the `custom_suggestions` tool will **only propose suggestions that follow specific guidelines defined by the prompt** in: `pr_custom_suggestions.prompt` configuration.

|

||||

The `custom_prompt` tool scans the PR code changes, and automatically generates suggestions for improving the PR code.

|

||||

It shares similarities with the `improve` tool, but with one main difference: the `custom_prompt` tool will **only propose suggestions that follow specific guidelines defined by the prompt** in: `pr_custom_prompt.prompt` configuration.

|

||||

|

||||

The tool can be triggered [automatically](../usage-guide/automations_and_usage.md#github-app-automatic-tools-when-a-new-pr-is-opened) every time a new PR is opened, or can be invoked manually by commenting on a PR.

|

||||

|

||||

When commenting, use the following template:

|

||||

|

||||

```

|

||||

/custom_suggestions --pr_custom_suggestions.prompt="

|

||||

The suggestions should focus only on the following:

|

||||

/custom_prompt --pr_custom_prompt.prompt="

|

||||

The code suggestions should focus only on the following:

|

||||

- ...

|

||||

- ...

|

||||

|

||||

@ -18,7 +18,7 @@ The suggestions should focus only on the following:

|

||||

With a [configuration file](../usage-guide/automations_and_usage.md#github-app), use the following template:

|

||||

|

||||

```

|

||||

[pr_custom_suggestions]

|

||||

[pr_custom_prompt]

|

||||

prompt="""\

|

||||

The suggestions should focus only on the following:

|

||||

-...

|

||||

@ -34,9 +34,9 @@ You might benefit from several trial-and-error iterations, until you get the cor

|

||||

|

||||

Here is an example of a possible prompt, defined in the configuration file:

|

||||

```

|

||||

[pr_custom_suggestions]

|

||||

[pr_custom_prompt]

|

||||

prompt="""\

|

||||

The suggestions should focus only on the following:

|

||||

The code suggestions should focus only on the following:

|

||||

- look for edge cases when implementing a new function

|

||||

- make sure every variable has a meaningful name

|

||||

- make sure the code is efficient

|

||||

@ -47,15 +47,12 @@ The suggestions should focus only on the following:

|

||||

|

||||

Results obtained with the prompt above:

|

||||

|

||||

[//]: # ({width=512})

|

||||

|

||||

[//]: # (→)

|

||||

{width=768}

|

||||

{width=768}

|

||||

|

||||

## Configuration options

|

||||

|

||||

`prompt`: the prompt for the tool. It should be a multi-line string.

|

||||

|

||||

`num_code_suggestions`: number of code suggestions provided by the 'custom_suggestions' tool. Default is 4.

|

||||

`num_code_suggestions`: number of code suggestions provided by the 'custom_prompt' tool. Default is 4.

|

||||

|

||||

`enable_help_text`: if set to true, the tool will display a help text in the comment. Default is true.

|

||||

@ -44,33 +44,61 @@ publish_labels = ...

|

||||

|

||||

## Configuration options

|

||||

|

||||

### General configurations

|

||||

|

||||

!!! example "Possible configurations"

|

||||

|

||||

- `publish_labels`: if set to true, the tool will publish the labels to the PR. Default is true.

|

||||

<table>

|

||||

<tr>

|

||||

<td><b>publish_labels</b></td>

|

||||

<td>If set to true, the tool will publish the labels to the PR. Default is true.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>publish_description_as_comment</b></td>

|

||||

<td>If set to true, the tool will publish the description as a comment to the PR. If false, it will overwrite the original description. Default is false.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>publish_description_as_comment_persistent</b></td>

|

||||

<td>If set to true and `publish_description_as_comment` is true, the tool will publish the description as a persistent comment to the PR. Default is true.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>add_original_user_description</b></td>

|

||||

<td>If set to true, the tool will add the original user description to the generated description. Default is true.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>generate_ai_title</b></td>

|

||||

<td>If set to true, the tool will also generate an AI title for the PR. Default is false.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>extra_instructions</b></td>

|

||||

<td>Optional extra instructions to the tool. For example: "focus on the changes in the file X. Ignore change in ..."</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>enable_pr_type</b></td>

|

||||

<td>If set to false, it will not show the `PR type` as a text value in the description content. Default is true.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>final_update_message</b></td>

|

||||

<td>If set to true, it will add a comment message [`PR Description updated to latest commit...`](https://github.com/Codium-ai/pr-agent/pull/499#issuecomment-1837412176) after finishing calling `/describe`. Default is false.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>enable_semantic_files_types</b></td>

|

||||

<td>If set to true, "Changes walkthrough" section will be generated. Default is true.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>collapsible_file_list</b></td>

|

||||

<td>If set to true, the file list in the "Changes walkthrough" section will be collapsible. If set to "adaptive", the file list will be collapsible only if there are more than 8 files. Default is "adaptive".</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>enable_large_pr_handling</b></td>

|

||||

<td>Pro feature. If set to true, in case of a large PR the tool will make several calls to the AI and combine them to be able to cover more files. Default is true.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>enable_help_text</b></td>

|

||||

<td>If set to true, the tool will display a help text in the comment. Default is false.</td>

|

||||

</tr>

|

||||

</table>

|

||||

|

||||

- `publish_description_as_comment`: if set to true, the tool will publish the description as a comment to the PR. If false, it will overwrite the original description. Default is false.

|

||||

|

||||

- `publish_description_as_comment_persistent`: if set to true and `publish_description_as_comment` is true, the tool will publish the description as a persistent comment to the PR. Default is true.

|

||||

|

||||

- `add_original_user_description`: if set to true, the tool will add the original user description to the generated description. Default is true.

|

||||

|

||||

- `generate_ai_title`: if set to true, the tool will also generate an AI title for the PR. Default is false.

|

||||

|

||||

- `extra_instructions`: Optional extra instructions to the tool. For example: "focus on the changes in the file X. Ignore change in ...".

|

||||

|

||||

- To enable `custom labels`, apply the configuration changes described [here](./custom_labels.md#configuration-options)

|

||||

|

||||

- `enable_pr_type`: if set to false, it will not show the `PR type` as a text value in the description content. Default is true.

|

||||

|

||||

- `final_update_message`: if set to true, it will add a comment message [`PR Description updated to latest commit...`](https://github.com/Codium-ai/pr-agent/pull/499#issuecomment-1837412176) after finishing calling `/describe`. Default is true.

|

||||

|

||||

- `enable_semantic_files_types`: if set to true, "Changes walkthrough" section will be generated. Default is true.

|

||||

- `collapsible_file_list`: if set to true, the file list in the "Changes walkthrough" section will be collapsible. If set to "adaptive", the file list will be collapsible only if there are more than 8 files. Default is "adaptive".

|

||||

- `enable_help_text`: if set to true, the tool will display a help text in the comment. Default is false.

|

||||

|

||||

### Inline file summary 💎

|

||||

## Inline file summary 💎

|

||||

|

||||

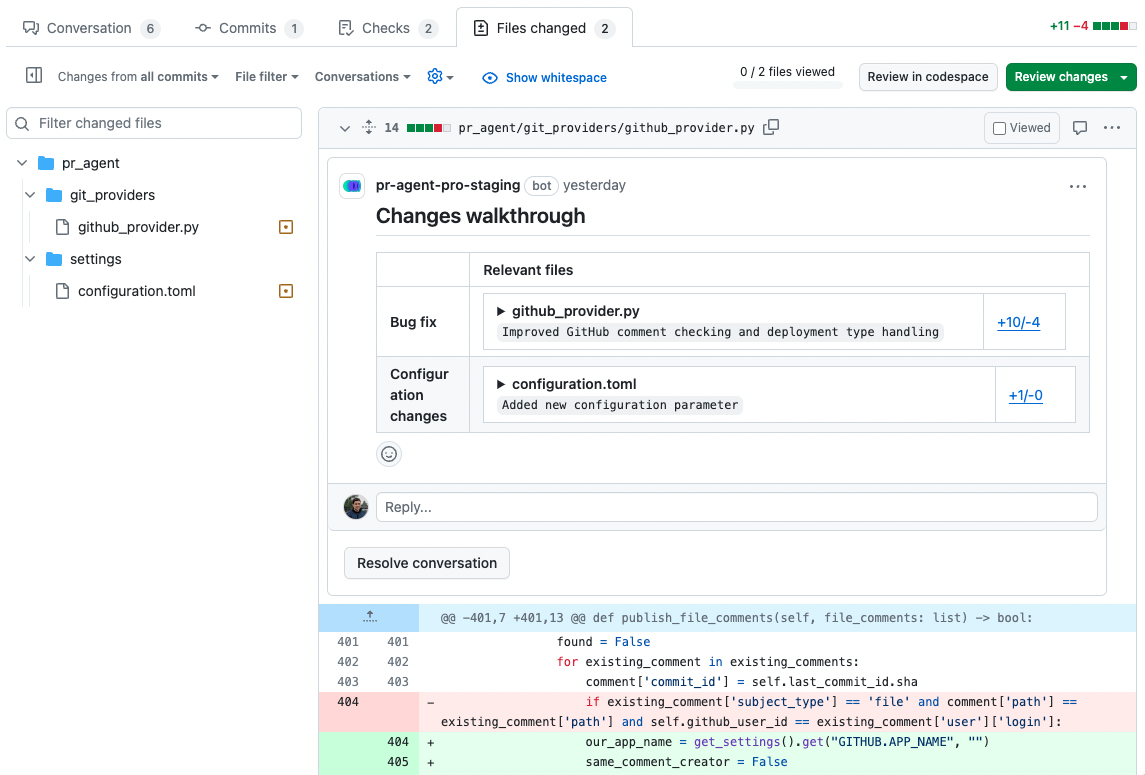

This feature enables you to copy the `changes walkthrough` table to the "Files changed" tab, so you can quickly understand the changes in each file while reviewing the code changes (diff view).

|

||||

|

||||

@ -84,7 +112,7 @@ If you prefer to have the file summaries appear in the "Files changed" tab on ev

|

||||

|

||||

{width=512}

|

||||

|

||||

- `true`: A collapsable file comment with changes title and a changes summary for each file in the PR.

|

||||

- `true`: A collapsible file comment with changes title and a changes summary for each file in the PR.

|

||||

|

||||

{width=512}

|

||||

|

||||

@ -93,7 +121,7 @@ If you prefer to have the file summaries appear in the "Files changed" tab on ev

|

||||

**Note**: that this feature is currently available only for GitHub.

|

||||

|

||||

|

||||

### Markers template

|

||||

## Markers template

|

||||

|

||||

To enable markers, set `pr_description.use_description_markers=true`.

|

||||

Markers enable to easily integrate user's content and auto-generated content, with a template-like mechanism.

|

||||

@ -126,30 +154,33 @@ The marker `pr_agent:type` will be replaced with the PR type, `pr_agent:summary`

|

||||

- `include_generated_by_header`: if set to true, the tool will add a dedicated header: 'Generated by PR Agent at ...' to any automatic content. Default is true.

|

||||

|

||||

## Custom labels

|

||||

|

||||

The default labels of the describe tool are quite generic, since they are meant to be used in any repo: [`Bug fix`, `Tests`, `Enhancement`, `Documentation`, `Other`].

|

||||

|

||||

You can define custom labels that are relevant for your repo and use cases.

|

||||

Custom labels can be defined in a [configuration file](https://pr-agent-docs.codium.ai/tools/custom_labels/#configuration-options), or directly in the repo's [labels page](#handle-custom-labels-from-the-repos-labels-page).

|

||||

|

||||

Examples for custom labels:

|

||||

|

||||

- `Main topic:performance` - pr_agent:The main topic of this PR is performance

|

||||

- `New endpoint` - pr_agent:A new endpoint was added in this PR

|

||||

- `SQL query` - pr_agent:A new SQL query was added in this PR

|

||||

- `Dockerfile changes` - pr_agent:The PR contains changes in the Dockerfile

|

||||

- ...

|

||||

|

||||

The list above is eclectic, and aims to give an idea of different possibilities. Define custom labels that are relevant for your repo and use cases.

|

||||

Note that Labels are not mutually exclusive, so you can add multiple label categories.

|

||||

<br>

|

||||

Make sure to provide proper title, and a detailed and well-phrased description for each label, so the tool will know when to suggest it.

|

||||

Each label description should be a **conditional statement**, that indicates if to add the label to the PR or not, according to the PR content.

|

||||

|

||||

### Handle custom labels from a configuration file

|

||||

Example for a custom labels configuration setup in a configuration file:

|

||||

```

|

||||

[config]

|

||||

enable_custom_labels=true

|

||||

|

||||

|

||||

[custom_labels."sql_changes"]

|

||||

description = "Use when a PR contains changes to SQL queries"

|

||||

|

||||

[custom_labels."test"]

|

||||

description = "use when a PR primarily contains new tests"

|

||||

|

||||

...

|

||||

```

|

||||

|

||||

### Handle custom labels from the Repo's labels page 💎

|

||||

|

||||

You can control the custom labels that will be suggested by the `describe` tool, from the repo's labels page:

|

||||

You can also control the custom labels that will be suggested by the `describe` tool from the repo's labels page:

|

||||

|

||||

* GitHub : go to `https://github.com/{owner}/{repo}/labels` (or click on the "Labels" tab in the issues or PRs page)

|

||||

* GitLab : go to `https://gitlab.com/{owner}/{repo}/-/labels` (or click on "Manage" -> "Labels" on the left menu)

|

||||

@ -159,6 +190,14 @@ Now add/edit the custom labels. they should be formatted as follows:

|

||||

* Label name: The name of the custom label.

|

||||

* Description: Start the description of with prefix `pr_agent:`, for example: `pr_agent: Description of when AI should suggest this label`.<br>

|

||||

|

||||

Examples for custom labels:

|

||||

|

||||

- `Main topic:performance` - pr_agent:The main topic of this PR is performance

|

||||

- `New endpoint` - pr_agent:A new endpoint was added in this PR

|

||||

- `SQL query` - pr_agent:A new SQL query was added in this PR

|

||||

- `Dockerfile changes` - pr_agent:The PR contains changes in the Dockerfile

|

||||

- ...

|

||||

|

||||

The description should be comprehensive and detailed, indicating when to add the desired label. For example:

|

||||

{width=768}

|

||||

|

||||

|

||||

@ -40,58 +40,124 @@ pr_commands = [

|

||||

]

|

||||

|

||||

[pr_code_suggestions]

|

||||

num_code_suggestions = ...

|

||||

num_code_suggestions_per_chunk = ...

|

||||

...

|

||||

```

|

||||

|

||||

- The `pr_commands` lists commands that will be executed automatically when a PR is opened.

|

||||

- The `[pr_code_suggestions]` section contains the configurations for the `improve` tool you want to edit (if any)

|

||||

|

||||

### Extended mode

|

||||

|

||||

An extended mode, which does not involve PR Compression and provides more comprehensive suggestions, can be invoked by commenting on any PR by setting:

|

||||

```

|

||||

[pr_code_suggestions]

|

||||

auto_extended_mode=true

|

||||

```

|

||||

(This mode is true by default).

|

||||

|

||||

Note that the extended mode divides the PR code changes into chunks, up to the token limits, where each chunk is handled separately (might use multiple calls to GPT-4 for large PRs).

|

||||

Hence, the total number of suggestions is proportional to the number of chunks, i.e., the size of the PR.

|

||||

|

||||

### Self-review

|

||||

If you set in a configuration file:

|

||||

```

|

||||

[pr_code_suggestions]

|

||||

demand_code_suggestions_self_review = true

|

||||

```

|

||||

The `improve` tool will add a checkbox below the suggestions, prompting user to acknowledge that they have reviewed the suggestions.

|

||||

You can set the content of the checkbox text via:

|

||||

```

|

||||

[pr_code_suggestions]

|

||||

code_suggestions_self_review_text = "... (your text here) ..."

|

||||

```

|

||||

{width=512}

|

||||

|

||||

💎 In addition, by setting:

|

||||

```

|

||||

[pr_code_suggestions]

|

||||

approve_pr_on_self_review = true

|

||||

```

|

||||

the tool can automatically approve the PR when the user checks the self-review checkbox.

|

||||

|

||||

!!! tip "Demanding self-review from the PR author"

|

||||

If you set the number of required reviewers for a PR to 2, this effectively means that the PR author must click the self-review checkbox before the PR can be merged (in addition to a human reviewer).

|

||||

{width=512}

|

||||

|

||||

|

||||

## Configuration options

|

||||

|

||||

!!! example "General options"

|

||||

|

||||

- `num_code_suggestions`: number of code suggestions provided by the 'improve' tool. Default is 4 for CLI, 0 for auto tools.

|

||||

- `extra_instructions`: Optional extra instructions to the tool. For example: "focus on the changes in the file X. Ignore change in ...".

|

||||

- `rank_suggestions`: if set to true, the tool will rank the suggestions, based on importance. Default is false.

|

||||

- `commitable_code_suggestions`: if set to true, the tool will display the suggestions as commitable code comments. Default is false.

|

||||

- `persistent_comment`: if set to true, the improve comment will be persistent, meaning that every new improve request will edit the previous one. Default is false.

|

||||

- `enable_help_text`: if set to true, the tool will display a help text in the comment. Default is true.

|

||||

|

||||

!!! example "params for '/improve --extended' mode"

|

||||

|

||||

- `auto_extended_mode`: enable extended mode automatically (no need for the `--extended` option). Default is true.

|

||||

- `num_code_suggestions_per_chunk`: number of code suggestions provided by the 'improve' tool, per chunk. Default is 5.

|

||||

- `rank_extended_suggestions`: if set to true, the tool will rank the suggestions, based on importance. Default is true.

|

||||

- `max_number_of_calls`: maximum number of chunks. Default is 5.

|

||||

- `final_clip_factor`: factor to remove suggestions with low confidence. Default is 0.9.;

|

||||

|

||||

## Extended mode

|

||||

|

||||

An extended mode, which does not involve PR Compression and provides more comprehensive suggestions, can be invoked by commenting on any PR:

|

||||

```

|

||||

/improve --extended

|

||||

```

|

||||

|

||||

or by setting:

|

||||

```

|

||||

[pr_code_suggestions]

|

||||

auto_extended_mode=true

|

||||

```

|

||||

(True by default).

|

||||

|

||||

Note that the extended mode divides the PR code changes into chunks, up to the token limits, where each chunk is handled separately (might use multiple calls to GPT-4 for large PRs).

|

||||

Hence, the total number of suggestions is proportional to the number of chunks, i.e., the size of the PR.

|

||||

<table>

|

||||

<tr>

|

||||

<td><b>num_code_suggestions</b></td>

|

||||

<td>Number of code suggestions provided by the 'improve' tool. Default is 4 for CLI, 0 for auto tools.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>extra_instructions</b></td>

|

||||

<td>Optional extra instructions to the tool. For example: "focus on the changes in the file X. Ignore change in ...".</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>rank_suggestions</b></td>

|

||||

<td>If set to true, the tool will rank the suggestions, based on importance. Default is false.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>commitable_code_suggestions</b></td>

|

||||

<td>If set to true, the tool will display the suggestions as commitable code comments. Default is false.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>persistent_comment</b></td>

|

||||

<td>If set to true, the improve comment will be persistent, meaning that every new improve request will edit the previous one. Default is false.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>self_reflect_on_suggestions</b></td>

|

||||

<td>If set to true, the improve tool will calculate an importance score for each suggestion [1-10], and sort the suggestion labels group based on this score. Default is true.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>suggestions_score_threshold</b></td>

|

||||

<td> Any suggestion with importance score less than this threshold will be removed. Default is 0. Highly recommend not to set this value above 7-8, since above it may clip relevant suggestions that can be useful. </td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>apply_suggestions_checkbox</b></td>

|

||||

<td> Enable the checkbox to create a committable suggestion. Default is true.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>enable_help_text</b></td>

|

||||