mirror of https://github.com/qodo-ai/pr-agent.git
synced 2025-07-21 04:50:39 +08:00

Compare commits: ok/inferen ... ok/gitlab_

3 Commits

| Author | SHA1 | Date |
|---|---|---|
| | e41247c473 | |
| | 5704070834 | |
| | 98fe376add | |

@ -1,5 +1,3 @@
venv/
pr_agent/settings/.secrets.toml
pics/
pr_agent.egg-info/
build/
pics/

6 .gitignore vendored

@ -1,8 +1,4 @@
.idea/
venv/
pr_agent/settings/.secrets.toml
__pycache__
dist/
*.egg-info/
build/
review.md
__pycache__

11 .gitlab-ci.yml Normal file

@ -0,0 +1,11 @@
bot-review:
  stage: test
  variables:
    MR_URL: ${CI_MERGE_REQUEST_PROJECT_URL}/-/merge_requests/${CI_MERGE_REQUEST_IID}
  image: docker:latest
  services:
    - docker:19-dind
  script:
    - docker run --rm -e OPENAI.KEY=${OPEN_API_KEY} -e OPENAI.ORG=${OPEN_API_ORG} -e GITLAB.PERSONAL_ACCESS_TOKEN=${GITLAB_PAT} -e CONFIG.GIT_PROVIDER=gitlab codiumai/pr-agent --pr_url ${MR_URL} describe
  rules:
    - if: $CI_COMMIT_BRANCH != $CI_DEFAULT_BRANCH
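The `variables:` block above builds `MR_URL` from GitLab's predefined `CI_MERGE_REQUEST_PROJECT_URL` and `CI_MERGE_REQUEST_IID` variables. The Python sketch below mirrors that expression so it can be checked outside CI; `build_mr_url` is a hypothetical helper (not part of pr-agent) and a plain dict stands in for the job environment. GitLab documents the `CI_MERGE_REQUEST_*` variables as available only in merge request pipelines, so the helper returns `None` when they are absent instead of producing an incomplete URL.

```python
from typing import Mapping, Optional


def build_mr_url(env: Mapping[str, str]) -> Optional[str]:
    """Mirror the MR_URL expression from the job's `variables:` block.

    Hypothetical helper for illustration; `env` stands in for os.environ.
    """
    project_url = env.get("CI_MERGE_REQUEST_PROJECT_URL")
    mr_iid = env.get("CI_MERGE_REQUEST_IID")
    if not project_url or not mr_iid:
        # Not a merge request pipeline: there is no MR to point pr-agent at.
        return None
    return f"{project_url}/-/merge_requests/{mr_iid}"


if __name__ == "__main__":
    example_env = {
        "CI_MERGE_REQUEST_PROJECT_URL": "https://gitlab.example.com/group/project",
        "CI_MERGE_REQUEST_IID": "42",
    }
    print(build_mr_url(example_env))
    # https://gitlab.example.com/group/project/-/merge_requests/42
```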

45 CHANGELOG.md

@ -1,45 +0,0 @@
## 2023-08-03

### Optimized
- Optimized PR diff processing by introducing caching for diff files, reducing the number of API calls.
- Refactored `load_large_diff` function to generate a patch only when necessary.
- Fixed a bug in the GitLab provider where the new file was not retrieved correctly.

## 2023-08-02

### Enhanced
- Updated several tools in the `pr_agent` package to use commit messages in their functionality.
- Commit messages are now retrieved and stored in the `vars` dictionary for each tool.
- Added a section to display the commit messages in the prompts of various tools.

## 2023-08-01

### Enhanced
- Introduced the ability to retrieve commit messages from pull requests across different git providers.
- Implemented commit messages retrieval for GitHub and GitLab providers.
- Updated the PR description template to include a section for commit messages if they exist.
- Added support for repository-specific configuration files (.pr_agent.yaml) for the PR Agent.
- Implemented this feature for both GitHub and GitLab providers.
- Added a new configuration option 'use_repo_settings_file' to enable or disable the use of a repo-specific settings file.

## 2023-07-30

### Enhanced
- Added the ability to modify any configuration parameter from 'configuration.toml' on-the-fly.
- Updated the command line interface and bot commands to accept configuration changes as arguments.
- Improved the PR agent to handle additional arguments for each action.

## 2023-07-28

### Improved
- Enhanced error handling and logging in the GitLab provider.
- Improved handling of inline comments and code suggestions in GitLab.
- Fixed a bug where an additional unneeded line was added to code suggestions in GitLab.

## 2023-07-26

### Added
- New feature for updating the CHANGELOG.md based on the contents of a PR.
- Added support for this feature for the Github provider.
- New configuration settings and prompts for the changelog update feature.

@ -1,57 +1,19 @@
## Configuration

The different tools and sub-tools used by CodiumAI PR-Agent are adjustable via the **[configuration file](pr_agent/settings/configuration.toml)**
The different tools and sub-tools used by CodiumAI pr-agent are easily configurable via the configuration file: `/pr-agent/settings/configuration.toml`.
##### Git Provider:
You can select your git_provider with the flag `git_provider` in the `config` section

### Working from CLI
When running from source (CLI), your local configuration file will be initially used.

Example for invoking the 'review' tools via the CLI:
##### PR Reviewer:

You can enable/disable the different PR Reviewer abilities with the following flags (`pr_reviewer` section):
```
python cli.py --pr-url=<pr_url> review
require_focused_review=true
require_score_review=true
require_tests_review=true
require_security_review=true
```
In addition to general configurations, the 'review' tool will use parameters from the `[pr_reviewer]` section (every tool has a dedicated section in the configuration file).

Note that you can print results locally, without publishing them, by setting in `configuration.toml`:

```
[config]
publish_output=true
verbosity_level=2
```
This is useful for debugging or experimenting with the different tools.

### Working from pre-built repo (GitHub Action/GitHub App/Docker)
When running PR-Agent from a pre-built repo, the default configuration file will be loaded.

To edit the configuration, you have two options:
1. Place a local configuration file in the root of your local repo. The local file will be used instead of the default one.
2. For online usage, just add `--config_path=<value>` to you command, to edit a specific configuration value.
For example if you want to edit `pr_reviewer` configurations, you can run:
```
/review --pr_reviewer.extra_instructions="..." --pr_reviewer.require_score_review=false ...
```

Any configuration value in `configuration.toml` file can be similarly edited.

### General configuration parameters

#### Changing a model
See [here](pr_agent/algo/__init__.py) for the list of available models.

To use Llama2 model, for example, set:
```
[config]
model = "replicate/llama-2-70b-chat:2c1608e18606fad2812020dc541930f2d0495ce32eee50074220b87300bc16e1"
[replicate]
key = ...
```
(you can obtain a Llama2 key from [here](https://replicate.com/replicate/llama-2-70b-chat/api))

Also review the [AiHandler](pr_agent/algo/ai_handler.py) file for instruction how to set keys for other models.

#### Extra instructions
All PR-Agent tools have a parameter called `extra_instructions`, that enables to add free-text extra instructions. Example usage:
```
/update_changelog --pr_update_changelog.extra_instructions="Make sure to update also the version ..."
```
You can contol the number of suggestions returned by the PR Reviewer with the following flag:
```inline_code_comments=3```
And enable/disable the inline code suggestions with the following flag:
```inline_code_comments=true```
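The configuration text above says any value in `configuration.toml` can be overridden per command with arguments of the form `--<section>.<key>=<value>`, for example `--pr_reviewer.require_score_review=false`. Below is a minimal sketch of folding such arguments into a nested settings dict. It assumes settings are plain dicts and that `true`/`false` and integer strings should be converted; `apply_overrides` is a hypothetical helper, not pr-agent's `update_settings_from_args`, whose exact parsing rules are not shown in this diff.

```python
from typing import Any, Dict, List, Tuple


def _coerce(raw: str) -> Any:
    """Turn 'true'/'false' and integer strings into Python values; keep the rest as str."""
    lowered = raw.lower()
    if lowered in ("true", "false"):
        return lowered == "true"
    try:
        return int(raw)
    except ValueError:
        return raw


def apply_overrides(settings: Dict[str, Dict[str, Any]],
                    args: List[str]) -> Tuple[Dict[str, Dict[str, Any]], List[str]]:
    """Apply '--section.key=value' arguments to a nested settings dict.

    Returns the updated settings and the arguments that were not overrides
    (for example the free-text question passed to 'ask').
    Hypothetical helper; not pr-agent's own implementation.
    """
    remaining: List[str] = []
    for arg in args:
        if arg.startswith("--") and "=" in arg and "." in arg.split("=", 1)[0]:
            key_path, raw_value = arg[2:].split("=", 1)
            section, key = key_path.split(".", 1)
            settings.setdefault(section, {})[key] = _coerce(raw_value)
        else:
            remaining.append(arg)
    return settings, remaining


if __name__ == "__main__":
    settings = {"pr_reviewer": {"require_score_review": True, "extra_instructions": ""}}
    args = ["--pr_reviewer.require_score_review=false",
            "--pr_reviewer.extra_instructions=Focus on error handling"]
    settings, rest = apply_overrides(settings, args)
    print(settings["pr_reviewer"])
    # {'require_score_review': False, 'extra_instructions': 'Focus on error handling'}
    print(rest)  # []
```

Unrecognized arguments are passed through rather than rejected, so the same parser can sit in front of every tool.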

@ -1,8 +1,8 @@
FROM python:3.10 as base

WORKDIR /app
ADD pyproject.toml .
RUN pip install . && rm pyproject.toml
ADD requirements.txt .
RUN pip install -r requirements.txt && rm requirements.txt
ENV PYTHONPATH=/app
ADD pr_agent pr_agent
ADD github_action/entrypoint.sh /

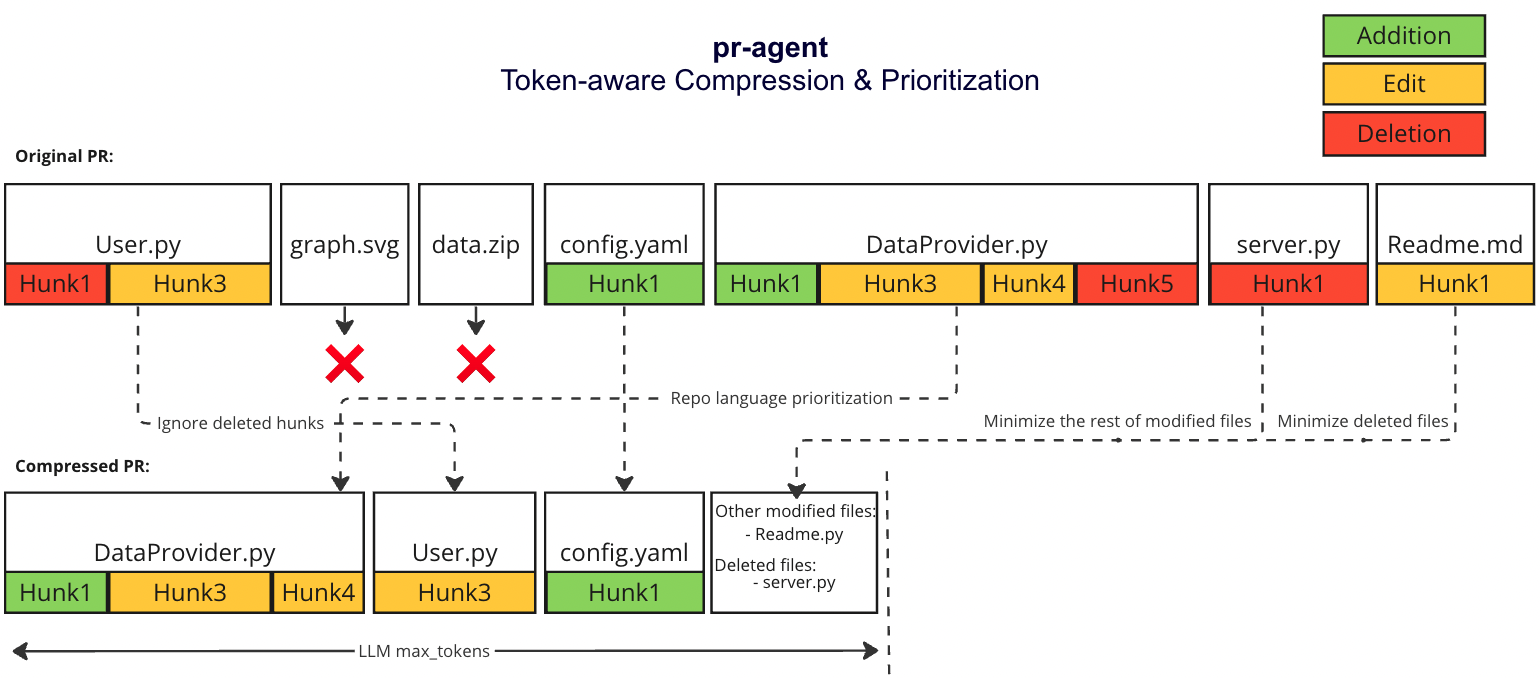

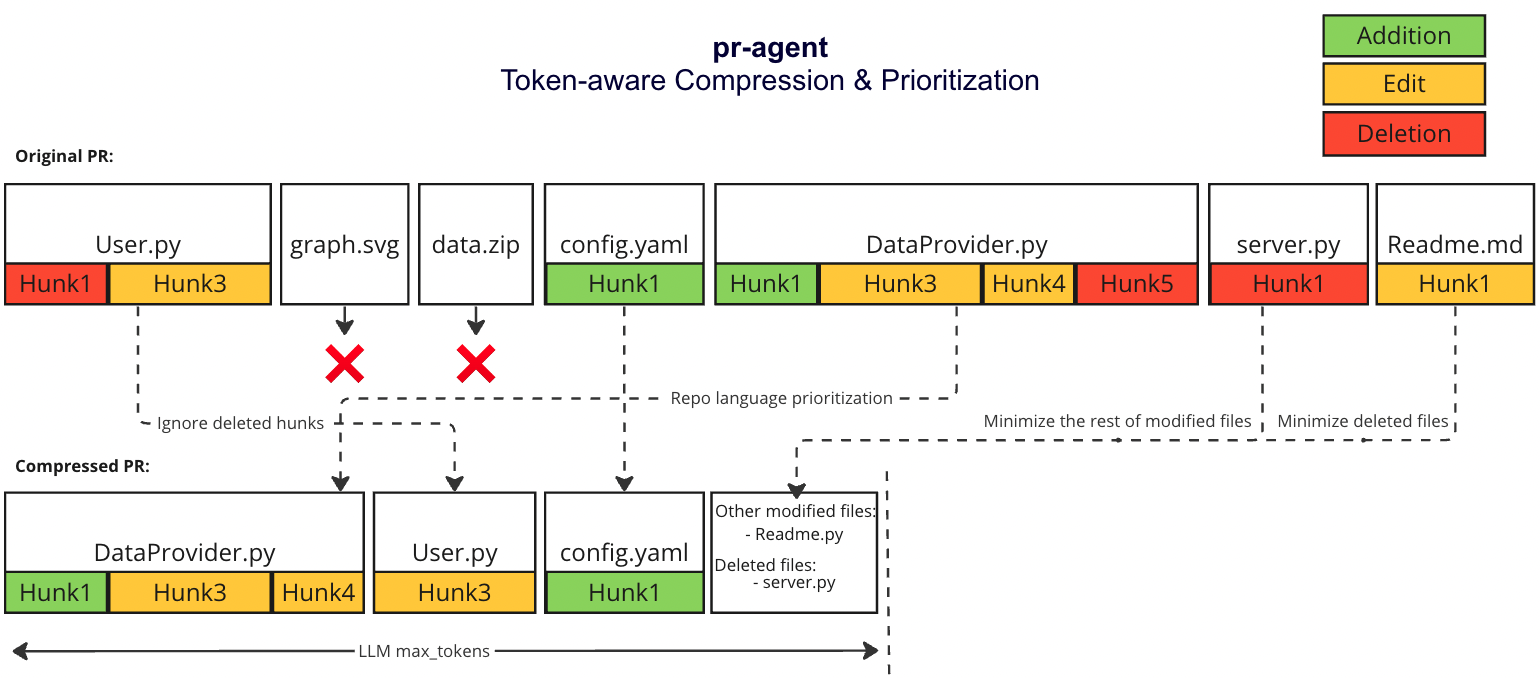

@ -31,7 +31,7 @@ We prioritize additions over deletions:
- File patches are a list of hunks, remove all hunks of type deletion-only from the hunks in the file patch
#### Adaptive and token-aware file patch fitting
We use [tiktoken](https://github.com/openai/tiktoken) to tokenize the patches after the modifications described above, and we use the following strategy to fit the patches into the prompt:
1. Within each language we sort the files by the number of tokens in the file (in descending order):
1. Withing each language we sort the files by the number of tokens in the file (in descending order):
* ```[[file2.py, file.py],[file4.jsx, file3.js],[readme.md]]```
2. Iterate through the patches in the order described above
2. Add the patches to the prompt until the prompt reaches a certain buffer from the max token length
@ -39,4 +39,4 @@ We use [tiktoken](https://github.com/openai/tiktoken) to tokenize the patches af
4. If we haven't reached the max token length, add the `deleted files` to the prompt until the prompt reaches the max token length (hard stop), skip the rest of the patches.

### Example
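The fitting strategy in PR_COMPRESSION.md (sort each language's files by token count in descending order, add patches until a buffer below the max token length is reached, then list deleted files up to a hard stop) can be sketched as a greedy loop. Assumptions: tokens are approximated by whitespace-separated words instead of tiktoken, patches arrive already grouped by language in priority order, and patch addition simply stops at the first patch that would cross the buffer. `fit_patches` and `count_tokens` are illustrative names only.

```python
from typing import Callable, List, Tuple


def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer such as tiktoken: one token per whitespace-separated chunk."""
    return len(text.split())


def fit_patches(files_by_language: List[List[Tuple[str, str]]],
                deleted_files: List[str],
                max_tokens: int,
                buffer_tokens: int,
                tokens: Callable[[str], int] = count_tokens) -> Tuple[List[str], List[str]]:
    """Greedy, token-aware patch selection.

    files_by_language: groups of (filename, patch), most important language first.
    Returns (filenames whose patches were included, deleted filenames that were listed).
    """
    # 1. Within each language group, sort files by token count, largest first,
    #    while keeping the language groups in their priority order.
    ordered = [item
               for group in files_by_language
               for item in sorted(group, key=lambda fp: tokens(fp[1]), reverse=True)]

    used = 0
    included: List[str] = []
    # 2. Add patches in that order until the next one would cross the soft buffer.
    for filename, patch in ordered:
        cost = tokens(patch)
        if used + cost > max_tokens - buffer_tokens:
            break  # skip the rest of the patches
        included.append(filename)
        used += cost

    # 3. With any room left, list deleted files up to the hard max_tokens limit.
    listed: List[str] = []
    for name in deleted_files:
        cost = tokens(name)
        if used + cost > max_tokens:
            break  # hard stop
        listed.append(name)
        used += cost
    return included, listed


if __name__ == "__main__":
    groups = [
        [("file.py", "a b"), ("file2.py", "a b c d e")],
        [("file3.js", "x"), ("file4.jsx", "x y z")],
        [("readme.md", "r " * 20)],
    ]
    print(fit_patches(groups, ["old.py", "legacy.js"], max_tokens=16, buffer_tokens=4))
    # (['file2.py', 'file.py', 'file4.jsx', 'file3.js'], ['old.py', 'legacy.js'])
```

The sample groups reproduce the ordering example from the document: `file2.py` comes before `file.py` and `file4.jsx` before `file3.js`, while `readme.md` does not fit under the soft buffer.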

41 README.md

@ -23,9 +23,7 @@ CodiumAI `PR-Agent` is an open-source tool aiming to help developers review pull
\
**Question Answering**: Answering free-text questions about the PR.
\
**Code Suggestions**: Committable code suggestions for improving the PR.
\
**Update Changelog**: Automatically updating the CHANGELOG.md file with the PR changes.
**Code Suggestion**: Committable code suggestions for improving the PR.

<h3>Example results:</h2>
</div>

@ -65,9 +63,9 @@ CodiumAI `PR-Agent` is an open-source tool aiming to help developers review pull
- [Overview](#overview)
- [Try it now](#try-it-now)
- [Installation](#installation)
- [Usage and tools](#usage-and-tools)
- [Configuration](./CONFIGURATION.md)
- [How it works](#how-it-works)
- [Why use PR-Agent](#why-use-pr-agent)
- [Roadmap](#roadmap)
- [Similar projects](#similar-projects)
</div>

@ -83,17 +81,14 @@ CodiumAI `PR-Agent` is an open-source tool aiming to help developers review pull
| | Auto-Description | :white_check_mark: | :white_check_mark: | |
| | Improve Code | :white_check_mark: | :white_check_mark: | |
| | Reflect and Review | :white_check_mark: | | |
| | Update CHANGELOG.md | :white_check_mark: | | |
| | | | | |
| USAGE | CLI | :white_check_mark: | :white_check_mark: | :white_check_mark: |
| | App / webhook | :white_check_mark: | :white_check_mark: | |
| | Tagging bot | :white_check_mark: | | |
| | Actions | :white_check_mark: | | |
| | | | | |
| CORE | PR compression | :white_check_mark: | :white_check_mark: | :white_check_mark: |
| | Repo language prioritization | :white_check_mark: | :white_check_mark: | :white_check_mark: |
| | Adaptive and token-aware<br />file patch fitting | :white_check_mark: | :white_check_mark: | :white_check_mark: |
| | Multiple models support | :white_check_mark: | :white_check_mark: | :white_check_mark: |
| | Incremental PR Review | :white_check_mark: | | |

Examples for invoking the different tools via the CLI:

@ -102,7 +97,6 @@ Examples for invoking the different tools via the CLI:
- **Improve**: python cli.py --pr-url=<pr_url> improve
- **Ask**: python cli.py --pr-url=<pr_url> ask "Write me a poem about this PR"
- **Reflect**: python cli.py --pr-url=<pr_url> reflect
- **Update Changelog**: python cli.py --pr-url=<pr_url> update_changelog

"<pr_url>" is the url of the relevant PR (for example: https://github.com/Codium-ai/pr-agent/pull/50).

@ -135,41 +129,36 @@ There are several ways to use PR-Agent:
- [Method 5: Run as a GitHub App](INSTALL.md#method-5-run-as-a-github-app)
- Allowing you to automate the review process on your private or public repositories

## Usage and Tools

**PR-Agent** provides five types of interactions ("tools"): `"PR Reviewer"`, `"PR Q&A"`, `"PR Description"`, `"PR Code Sueggestions"` and `"PR Reflect and Review"`.

- The "PR Reviewer" tool automatically analyzes PRs, and provides various types of feedback.
- The "PR Q&A" tool answers free-text questions about the PR.
- The "PR Description" tool automatically sets the PR Title and body.
- The "PR Code Suggestion" tool provide inline code suggestions for the PR that can be applied and committed.
- The "PR Reflect and Review" tool initiates a dialog with the user, asks them to reflect on the PR, and then provides a more focused review.

## How it works

The following diagram illustrates PR-Agent tools and their flow:

Check out the [PR Compression strategy](./PR_COMPRESSION.md) page for more details on how we convert a code diff to a manageable LLM prompt

## Why use PR-Agent?

A reasonable question that can be asked is: `"Why use PR-Agent? What make it stand out from existing tools?"`

Here are some advantages of PR-Agent:

- We emphasize **real-life practical usage**. Each tool (review, improve, ask, ...) has a single GPT-4 call, no more. We feel that this is critical for realistic team usage - obtaining an answer quickly (~30 seconds) and affordably.
- Our [PR Compression strategy](./PR_COMPRESSION.md) is a core ability that enables to effectively tackle both short and long PRs.
- Our JSON prompting strategy enables to have **modular, customizable tools**. For example, the '/review' tool categories can be controlled via the [configuration](./CONFIGURATION.md) file. Adding additional categories is easy and accessible.
- We support **multiple git providers** (GitHub, Gitlab, Bitbucket), **multiple ways** to use the tool (CLI, GitHub Action, GitHub App, Docker, ...), and **multiple models** (GPT-4, GPT-3.5, Anthropic, Cohere, Llama2).
- We are open-source, and welcome contributions from the community.

## Roadmap

- [x] Support additional models, as a replacement for OpenAI (see [here](https://github.com/Codium-ai/pr-agent/pull/172))
- [ ] Develop additional logic for handling large PRs
- [ ] Support open-source models, as a replacement for OpenAI models. (Note - a minimal requirement for each open-source model is to have 8k+ context, and good support for generating JSON as an output)
- [x] Support other Git providers, such as Gitlab and Bitbucket.
- [ ] Develop additional logic for handling large PRs, and compressing git patches
- [ ] Add additional context to the prompt. For example, repo (or relevant files) summarization, with tools such a [ctags](https://github.com/universal-ctags/ctags)
- [ ] Adding more tools. Possible directions:
- [x] PR description
- [x] Inline code suggestions
- [x] Reflect and review
- [x] Rank the PR (see [here](https://github.com/Codium-ai/pr-agent/pull/89))
- [ ] Enforcing CONTRIBUTING.md guidelines
- [ ] Performance (are there any performance issues)
- [ ] Documentation (is the PR properly documented)
- [ ] Rank the PR importance
- [ ] ...

## Similar Projects

@ -1,8 +1,8 @@
FROM python:3.10 as base

WORKDIR /app
ADD pyproject.toml .
RUN pip install . && rm pyproject.toml
ADD requirements.txt .
RUN pip install -r requirements.txt && rm requirements.txt
ENV PYTHONPATH=/app
ADD pr_agent pr_agent

@ -4,9 +4,9 @@ RUN yum update -y && \
yum install -y gcc python3-devel && \
yum clean all

ADD pyproject.toml .
RUN pip install . && rm pyproject.toml
RUN pip install mangum==0.17.0
ADD requirements.txt .
RUN pip install -r requirements.txt && rm requirements.txt
RUN pip install mangum==16.0.0
COPY pr_agent/ ${LAMBDA_TASK_ROOT}/pr_agent/

CMD ["pr_agent.servers.serverless.serverless"]

@ -1,75 +1,33 @@
import logging
import os
import shlex
import tempfile
import re

from pr_agent.algo.utils import update_settings_from_args
from pr_agent.config_loader import get_settings
from pr_agent.git_providers import get_git_provider
from pr_agent.config_loader import settings
from pr_agent.tools.pr_code_suggestions import PRCodeSuggestions
from pr_agent.tools.pr_description import PRDescription
from pr_agent.tools.pr_information_from_user import PRInformationFromUser
from pr_agent.tools.pr_questions import PRQuestions
from pr_agent.tools.pr_reviewer import PRReviewer
from pr_agent.tools.pr_update_changelog import PRUpdateChangelog
from pr_agent.tools.pr_config import PRConfig

command2class = {
"answer": PRReviewer,
"review": PRReviewer,
"review_pr": PRReviewer,
"reflect": PRInformationFromUser,
"reflect_and_review": PRInformationFromUser,
"describe": PRDescription,
"describe_pr": PRDescription,
"improve": PRCodeSuggestions,
"improve_code": PRCodeSuggestions,
"ask": PRQuestions,
"ask_question": PRQuestions,
"update_changelog": PRUpdateChangelog,
"config": PRConfig,
"settings": PRConfig,
}

commands = list(command2class.keys())

class PRAgent:
def __init__(self):
pass

async def handle_request(self, pr_url, request) -> bool:
# First, apply repo specific settings if exists
if get_settings().config.use_repo_settings_file:
repo_settings_file = None
try:
git_provider = get_git_provider()(pr_url)
repo_settings = git_provider.get_repo_settings()
if repo_settings:
repo_settings_file = None
fd, repo_settings_file = tempfile.mkstemp(suffix='.toml')
os.write(fd, repo_settings)
get_settings().load_file(repo_settings_file)
finally:
if repo_settings_file:
try:
os.remove(repo_settings_file)
except Exception as e:
logging.error(f"Failed to remove temporary settings file {repo_settings_file}", e)

# Then, apply user specific settings if exists
request = request.replace("'", "\\'")
lexer = shlex.shlex(request, posix=True)
lexer.whitespace_split = True
action, *args = list(lexer)
args = update_settings_from_args(args)

action = action.lstrip("/").lower()
if action == "reflect_and_review" and not get_settings().pr_reviewer.ask_and_reflect:
action = "review"
if action == "answer":
await PRReviewer(pr_url, is_answer=True, args=args).run()
elif action in command2class:
await command2class[action](pr_url, args=args).run()
action, *args = request.strip().split()
if any(cmd == action for cmd in ["/answer"]):
await PRReviewer(pr_url, is_answer=True).review()
elif any(cmd == action for cmd in ["/review", "/review_pr", "/reflect_and_review"]):
if settings.pr_reviewer.ask_and_reflect or "/reflect_and_review" in request:
await PRInformationFromUser(pr_url).generate_questions()
else:
await PRReviewer(pr_url, args=args).review()
elif any(cmd == action for cmd in ["/describe", "/describe_pr"]):
await PRDescription(pr_url).describe()
elif any(cmd == action for cmd in ["/improve", "/improve_code"]):
await PRCodeSuggestions(pr_url).suggest()
elif any(cmd == action for cmd in ["/ask", "/ask_question"]):
await PRQuestions(pr_url, args).answer()
else:
return False

return True
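The rewritten `handle_request` escapes apostrophes, tokenizes the request with `shlex.shlex(request, posix=True)` and `whitespace_split = True`, strips the leading `/`, lower-cases the action, and looks it up in `command2class`. A self-contained sketch of that parsing and dispatch, with hypothetical stub handlers (`review`, `ask`) standing in for `PRReviewer`, `PRQuestions`, and the other tool classes:

```python
import shlex
from typing import Callable, Dict, List, Optional, Tuple


# Hypothetical stubs standing in for the real tool classes.
def review(args: List[str]) -> str:
    return f"review {args}"


def ask(args: List[str]) -> str:
    return "ask: " + " ".join(args)


COMMANDS: Dict[str, Callable[[List[str]], str]] = {
    "review": review,
    "review_pr": review,
    "ask": ask,
    "ask_question": ask,
}


def parse_request(request: str) -> Tuple[str, List[str]]:
    """Split a comment such as '/ask "what changed?"' into (action, args)."""
    # Escape apostrophes so free text like "don't" does not open a quoted string.
    escaped = request.replace("'", "\\'")
    lexer = shlex.shlex(escaped, posix=True)
    lexer.whitespace_split = True  # split on whitespace only, like a shell
    # Note: shlex.shlex treats '#' as a comment start by default,
    # so anything after a '#' in the request is dropped.
    action, *args = list(lexer)
    return action.lstrip("/").lower(), args


def dispatch(request: str) -> Optional[str]:
    """Run the matching handler, or return None for unknown commands."""
    action, args = parse_request(request)
    handler = COMMANDS.get(action)
    if handler is None:
        return None
    return handler(args)


if __name__ == "__main__":
    print(dispatch('/Ask "what does this PR change?"'))  # ask: what does this PR change?
    print(dispatch("/unknown"))  # None
```

Keeping aliases as extra dictionary keys (as `command2class` does) means a new alias needs no new branch in the dispatcher.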

@ -7,8 +7,4 @@ MAX_TOKENS = {
'gpt-4': 8000,
'gpt-4-0613': 8000,
'gpt-4-32k': 32000,
'claude-instant-1': 100000,
'claude-2': 100000,
'command-nightly': 4096,
'replicate/llama-2-70b-chat:2c1608e18606fad2812020dc541930f2d0495ce32eee50074220b87300bc16e1': 4096,
}

@ -1,15 +1,12 @@
import logging

import litellm
import openai
from litellm import acompletion
from openai.error import APIError, RateLimitError, Timeout, TryAgain
from openai.error import APIError, Timeout, TryAgain, RateLimitError
from retry import retry

from pr_agent.config_loader import get_settings

OPENAI_RETRIES = 5
from pr_agent.config_loader import settings

OPENAI_RETRIES=5

class AiHandler:
"""

@ -24,26 +21,16 @@ class AiHandler:
Raises a ValueError if the OpenAI key is missing.
"""
try:
openai.api_key = get_settings().openai.key
litellm.openai_key = get_settings().openai.key
self.azure = False
if get_settings().get("OPENAI.ORG", None):
litellm.organization = get_settings().openai.org
self.deployment_id = get_settings().get("OPENAI.DEPLOYMENT_ID", None)
if get_settings().get("OPENAI.API_TYPE", None):
if get_settings().openai.api_type == "azure":
self.azure = True
litellm.azure_key = get_settings().openai.key
if get_settings().get("OPENAI.API_VERSION", None):
litellm.api_version = get_settings().openai.api_version
if get_settings().get("OPENAI.API_BASE", None):
litellm.api_base = get_settings().openai.api_base
if get_settings().get("ANTHROPIC.KEY", None):
litellm.anthropic_key = get_settings().anthropic.key
if get_settings().get("COHERE.KEY", None):
litellm.cohere_key = get_settings().cohere.key
if get_settings().get("REPLICATE.KEY", None):
litellm.replicate_key = get_settings().replicate.key
openai.api_key = settings.openai.key
if settings.get("OPENAI.ORG", None):
openai.organization = settings.openai.org
self.deployment_id = settings.get("OPENAI.DEPLOYMENT_ID", None)
if settings.get("OPENAI.API_TYPE", None):
openai.api_type = settings.openai.api_type
if settings.get("OPENAI.API_VERSION", None):
openai.api_version = settings.openai.api_version
if settings.get("OPENAI.API_BASE", None):
openai.api_base = settings.openai.api_base
except AttributeError as e:
raise ValueError("OpenAI key is required") from e

@ -70,17 +57,15 @@ class AiHandler:
TryAgain: If there is an attribute error during OpenAI inference.
"""
try:
response = await acompletion(
model=model,
deployment_id=self.deployment_id,
messages=[
{"role": "system", "content": system},
{"role": "user", "content": user}
],
temperature=temperature,
azure=self.azure,
force_timeout=get_settings().config.ai_timeout
)
response = await openai.ChatCompletion.acreate(
model=model,
deployment_id=self.deployment_id,
messages=[
{"role": "system", "content": system},
{"role": "user", "content": user}
],
temperature=temperature,
)
except (APIError, Timeout, TryAgain) as e:
logging.error("Error during OpenAI inference: ", e)
raise

@ -90,9 +75,8 @@ class AiHandler:
except (Exception) as e:
logging.error("Unknown error during OpenAI inference: ", e)
raise TryAgain from e
if response is None or len(response["choices"]) == 0:
if response is None or len(response.choices) == 0:
raise TryAgain
resp = response["choices"][0]['message']['content']
finish_reason = response["choices"][0]["finish_reason"]
print(resp, finish_reason)
return resp, finish_reason
resp = response.choices[0]['message']['content']
finish_reason = response.choices[0].finish_reason
return resp, finish_reason
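The last `AiHandler` hunk raises `TryAgain` when a response has no choices and otherwise returns the first choice's `message.content` and `finish_reason`; the module also imports `retry` and defines `OPENAI_RETRIES = 5`. A minimal asyncio sketch of the same extract-or-retry pattern, assuming a dict-shaped chat completion response. `TryAgain` here is a local stand-in (the diff imports it from `openai.error`), and `call_with_retries` with its backoff delays is an assumption, not the project's retry configuration.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict, Optional, Tuple

OPENAI_RETRIES = 5


class TryAgain(Exception):
    """Local stand-in for the retryable error type used in the diff."""


def extract_choice(response: Optional[Dict[str, Any]]) -> Tuple[str, str]:
    """Return (content, finish_reason) of the first choice, or raise TryAgain."""
    if response is None or len(response.get("choices", [])) == 0:
        raise TryAgain("empty response")
    choice = response["choices"][0]
    return choice["message"]["content"], choice["finish_reason"]


async def call_with_retries(make_request: Callable[[], Awaitable[Optional[Dict[str, Any]]]],
                            retries: int = OPENAI_RETRIES,
                            base_delay: float = 0.5) -> Tuple[str, str]:
    """Await make_request() and extract the answer, retrying on TryAgain with exponential backoff."""
    for attempt in range(retries):
        try:
            return extract_choice(await make_request())
        except TryAgain:
            if attempt == retries - 1:
                raise  # out of attempts: surface the last error
            await asyncio.sleep(base_delay * 2 ** attempt)
    raise TryAgain("retries must be positive")


if __name__ == "__main__":
    async def fake_completion() -> Dict[str, Any]:
        return {"choices": [{"message": {"content": "LGTM"}, "finish_reason": "stop"}]}

    print(asyncio.run(call_with_retries(fake_completion)))  # ('LGTM', 'stop')
```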

@ -3,7 +3,7 @@ from __future__ import annotations
import logging
import re

from pr_agent.config_loader import get_settings
from pr_agent.config_loader import settings

def extend_patch(original_file_str, patch_str, num_lines) -> str:

@ -41,11 +41,7 @@ def extend_patch(original_file_str, patch_str, num_lines) -> str:
extended_patch_lines.extend(
original_lines[start1 + size1 - 1:start1 + size1 - 1 + num_lines])

try:
start1, size1, start2, size2 = map(int, match.groups()[:4])
except: # '@@ -0,0 +1 @@' case
start1, size1, size2 = map(int, match.groups()[:3])
start2 = 0
start1, size1, start2, size2 = map(int, match.groups()[:4])
section_header = match.groups()[4]
extended_start1 = max(1, start1 - num_lines)
extended_size1 = size1 + (start1 - extended_start1) + num_lines
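Both versions of `extend_patch` unpack a hunk header into `start1, size1, start2, size2`, and one of them adds a special case for headers like `@@ -0,0 +1 @@` where a line count is missing. A regex-based sketch that handles missing counts directly, following the unified diff convention that an omitted count means a single line (the diff's own fallback sets `start2 = 0` instead). It also reproduces the `extended_start1`/`extended_size1` arithmetic shown above. `HUNK_HEADER`, `HunkHeader`, `parse_hunk_header`, and `extended_old_range` are hypothetical names, not the project's code.

```python
import re
from typing import NamedTuple, Tuple

# '@@ -start1[,size1] +start2[,size2] @@ optional section header'
HUNK_HEADER = re.compile(r"^@@ -(\d+)(?:,(\d+))? \+(\d+)(?:,(\d+))? @@[ ]?(.*)")


class HunkHeader(NamedTuple):
    start1: int
    size1: int
    start2: int
    size2: int
    section_header: str


def parse_hunk_header(line: str) -> HunkHeader:
    """Parse a unified-diff hunk header; an omitted line count defaults to 1."""
    match = HUNK_HEADER.match(line)
    if match is None:
        raise ValueError(f"not a hunk header: {line!r}")
    start1, size1, start2, size2, section = match.groups()
    return HunkHeader(
        start1=int(start1),
        size1=int(size1) if size1 is not None else 1,
        start2=int(start2),
        size2=int(size2) if size2 is not None else 1,
        section_header=section,
    )


def extended_old_range(header: HunkHeader, num_lines: int) -> Tuple[int, int]:
    """Old-file (start, size) after adding num_lines of context on each side, clamped at line 1."""
    start = max(1, header.start1 - num_lines)
    size = header.size1 + (header.start1 - start) + num_lines
    return start, size


if __name__ == "__main__":
    print(parse_hunk_header("@@ -0,0 +1 @@"))
    # HunkHeader(start1=0, size1=0, start2=1, size2=1, section_header='')
```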

@ -59,7 +55,7 @@ def extend_patch(original_file_str, patch_str, num_lines) -> str:
continue
extended_patch_lines.append(line)
except Exception as e:
if get_settings().config.verbosity_level >= 2:
if settings.config.verbosity_level >= 2:
logging.error(f"Failed to extend patch: {e}")
return patch_str

@ -130,14 +126,14 @@ def handle_patch_deletions(patch: str, original_file_content_str: str,
"""
if not new_file_content_str:
# logic for handling deleted files - don't show patch, just show that the file was deleted
if get_settings().config.verbosity_level > 0:
if settings.config.verbosity_level > 0:
logging.info(f"Processing file: {file_name}, minimizing deletion file")
patch = None # file was deleted
else:
patch_lines = patch.splitlines()
patch_new = omit_deletion_hunks(patch_lines)
if patch != patch_new:
if get_settings().config.verbosity_level > 0:
if settings.config.verbosity_level > 0:
logging.info(f"Processing file: {file_name}, hunks were deleted")
patch = patch_new
return patch
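`handle_patch_deletions` relies on `omit_deletion_hunks`, whose body is not part of this diff, to drop hunks that only remove lines (the PR_COMPRESSION.md rule "remove all hunks of type deletion-only"). A sketch of that rule, assuming a hunk is kept when it contains at least one `+` line; `drop_deletion_only_hunks` is a hypothetical name, and the project's version may treat headers or edge cases differently.

```python
from typing import List


def drop_deletion_only_hunks(patch_lines: List[str]) -> str:
    """Remove hunks whose body contains no added ('+') lines; return the remaining patch text."""
    kept: List[str] = []
    hunk: List[str] = []
    has_addition = False
    for line in patch_lines:
        if line.startswith("@@"):
            # A new hunk starts: keep the previous one only if it added something.
            if hunk and has_addition:
                kept.extend(hunk)
            hunk, has_addition = [line], False
        elif hunk:
            hunk.append(line)
            has_addition = has_addition or line.startswith("+")
        else:
            kept.append(line)  # anything before the first hunk header
    if hunk and has_addition:
        kept.extend(hunk)
    return "\n".join(kept)


if __name__ == "__main__":
    patch = ["@@ -1,2 +1,2 @@", " ctx", "-old", "+new",
             "@@ -10,2 +10,0 @@", "-gone1", "-gone2"]
    print(drop_deletion_only_hunks(patch))
```

Returning a string keeps the `patch != patch_new` comparison in `handle_patch_deletions` meaningful.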

@ -145,8 +141,7 @@ def handle_patch_deletions(patch: str, original_file_content_str: str,

def convert_to_hunks_with_lines_numbers(patch: str, file) -> str:
"""
Convert a given patch string into a string with line numbers for each hunk, indicating the new and old content of
the file.
Convert a given patch string into a string with line numbers for each hunk, indicating the new and old content of the file.

Args:
patch (str): The patch string to be converted.

@ -202,12 +197,7 @@ def convert_to_hunks_with_lines_numbers(patch: str, file) -> str:
patch_with_lines_str += f"{line_old}\n"
new_content_lines = []
old_content_lines = []
try:
start1, size1, start2, size2 = map(int, match.groups()[:4])
except: # '@@ -0,0 +1 @@' case
start1, size1, size2 = map(int, match.groups()[:3])
start2 = 0

start1, size1, start2, size2 = map(int, match.groups()[:4])
elif line.startswith('+'):
new_content_lines.append(line)
elif line.startswith('-'):
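`convert_to_hunks_with_lines_numbers` annotates hunks with line numbers so the model can refer to specific lines. A simplified sketch that numbers added and context lines by their position in the new file, starting from the `+start` value of each hunk header. The output layout is an assumption: the real function also builds separate old and new sections, as its `new_content_lines` and `old_content_lines` variables suggest. `NEW_START` and `number_new_lines` are hypothetical names.

```python
import re
from typing import List

# Captures the new-file start line from '@@ -a[,b] +c[,d] @@'.
NEW_START = re.compile(r"^@@ -\d+(?:,\d+)? \+(\d+)(?:,\d+)? @@")


def number_new_lines(patch: str) -> str:
    """Prefix added and context lines of each hunk with their new-file line number.

    Expects hunks only (no '---'/'+++' file headers). Deleted lines do not
    exist in the new file, so they are indented but left unnumbered.
    """
    out: List[str] = []
    new_line_no = 0
    for line in patch.splitlines():
        header = NEW_START.match(line)
        if header:
            new_line_no = int(header.group(1))  # first new-file line of this hunk
            out.append(line)
        elif line.startswith("-"):
            out.append("    " + line)
        elif line.startswith("\\"):
            out.append(line)  # e.g. '\ No newline at end of file'
        else:
            out.append(f"{new_line_no:<4}{line}")
            new_line_no += 1
    return "\n".join(out)


if __name__ == "__main__":
    print(number_new_lines("@@ -1,2 +10,2 @@\n ctx\n-old\n+new"))
```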

@ -1,19 +1,19 @@
# Language Selection, source: https://github.com/bigcode-project/bigcode-dataset/blob/main/language_selection/programming-languages-to-file-extensions.json # noqa E501
from typing import Dict

from pr_agent.config_loader import get_settings
from pr_agent.config_loader import settings

language_extension_map_org = get_settings().language_extension_map_org
language_extension_map_org = settings.language_extension_map_org
language_extension_map = {k.lower(): v for k, v in language_extension_map_org.items()}

# Bad Extensions, source: https://github.com/EleutherAI/github-downloader/blob/345e7c4cbb9e0dc8a0615fd995a08bf9d73b3fe6/download_repo_text.py # noqa: E501
bad_extensions = get_settings().bad_extensions.default
if get_settings().config.use_extra_bad_extensions:
bad_extensions += get_settings().bad_extensions.extra
bad_extensions = settings.bad_extensions.default
if settings.config.use_extra_bad_extensions:
bad_extensions += settings.bad_extensions.extra

def filter_bad_extensions(files):
return [f for f in files if f.filename is not None and is_valid_file(f.filename)]
return [f for f in files if is_valid_file(f.filename)]

def is_valid_file(filename):
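One version of `filter_bad_extensions` adds an `f.filename is not None` guard before calling `is_valid_file`, whose body is cut off in this diff. A sketch of both functions under stated assumptions: `is_valid_file` is taken to reject a file whose final extension appears in the bad-extensions list, `DiffFile` is a hypothetical stand-in for a provider's file object, and `BAD_EXTENSIONS` is a short illustrative tuple rather than the `bad_extensions.default` values from the settings.

```python
import os
from dataclasses import dataclass
from typing import List, Optional, Sequence

# Illustrative values only; the real list comes from settings.bad_extensions.
BAD_EXTENSIONS: Sequence[str] = ("png", "jpg", "pdf", "lock")


@dataclass
class DiffFile:
    """Minimal stand-in for a git provider's changed-file object."""
    filename: Optional[str]


def is_valid_file(filename: str, bad_extensions: Sequence[str] = BAD_EXTENSIONS) -> bool:
    """Reject files whose final extension is in bad_extensions (case-insensitive)."""
    extension = os.path.splitext(filename)[1].lstrip(".").lower()
    return extension not in bad_extensions


def filter_bad_extensions(files: List[DiffFile]) -> List[DiffFile]:
    # Skip entries without a filename before inspecting the extension.
    return [f for f in files if f.filename is not None and is_valid_file(f.filename)]


if __name__ == "__main__":
    files = [DiffFile("a.py"), DiffFile("logo.PNG"), DiffFile(None), DiffFile("Makefile")]
    print([f.filename for f in filter_bad_extensions(files)])  # ['a.py', 'Makefile']
```

Without the `None` guard, `os.path.splitext(None)` would raise a `TypeError`, which is the kind of failure the added check in the diff avoids.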

@ -1,19 +1,15 @@
from __future__ import annotations

import difflib
import logging
import re
import traceback
from typing import Any, Callable, List, Tuple

from github import RateLimitExceededException
from typing import Tuple, Union, Callable, List

from pr_agent.algo import MAX_TOKENS
from pr_agent.algo.git_patch_processing import convert_to_hunks_with_lines_numbers, extend_patch, handle_patch_deletions
from pr_agent.algo.language_handler import sort_files_by_main_languages
from pr_agent.algo.token_handler import TokenHandler
from pr_agent.config_loader import get_settings
from pr_agent.git_providers.git_provider import FilePatchInfo, GitProvider
from pr_agent.algo.utils import load_large_diff
from pr_agent.config_loader import settings
from pr_agent.git_providers.git_provider import GitProvider

DELETED_FILES_ = "Deleted files:\n"

@ -23,21 +19,18 @@ OUTPUT_BUFFER_TOKENS_SOFT_THRESHOLD = 1000

|

||||

OUTPUT_BUFFER_TOKENS_HARD_THRESHOLD = 600

|

||||

PATCH_EXTRA_LINES = 3

|

||||

|

||||

|

||||

def get_pr_diff(git_provider: GitProvider, token_handler: TokenHandler, model: str,

|

||||

add_line_numbers_to_hunks: bool = False, disable_extra_lines: bool = False) -> str:

|

||||

"""

|

||||

Returns a string with the diff of the pull request, applying diff minimization techniques if needed.

|

||||

|

||||

Args:

|

||||

git_provider (GitProvider): An object of the GitProvider class representing the Git provider used for the pull

|

||||

request.

|

||||

token_handler (TokenHandler): An object of the TokenHandler class used for handling tokens in the context of the

|

||||

pull request.

|

||||

git_provider (GitProvider): An object of the GitProvider class representing the Git provider used for the pull request.

|

||||

token_handler (TokenHandler): An object of the TokenHandler class used for handling tokens in the context of the pull request.

|

||||

model (str): The name of the model used for tokenization.

|

||||

add_line_numbers_to_hunks (bool, optional): A boolean indicating whether to add line numbers to the hunks in the

|

||||

diff. Defaults to False.

|

||||

disable_extra_lines (bool, optional): A boolean indicating whether to disable the extension of each patch with

|

||||

extra lines of context. Defaults to False.

|

||||

add_line_numbers_to_hunks (bool, optional): A boolean indicating whether to add line numbers to the hunks in the diff. Defaults to False.

|

||||

disable_extra_lines (bool, optional): A boolean indicating whether to disable the extension of each patch with extra lines of context. Defaults to False.

|

||||

|

||||

Returns:

|

||||

str: A string with the diff of the pull request, applying diff minimization techniques if needed.

|

@ -47,11 +40,7 @@ def get_pr_diff(git_provider: GitProvider, token_handler: TokenHandler, model: s
        global PATCH_EXTRA_LINES
        PATCH_EXTRA_LINES = 0

    try:
        diff_files = git_provider.get_diff_files()
    except RateLimitExceededException as e:
        logging.error(f"Rate limit exceeded for git provider API. original message {e}")
        raise
    diff_files = list(git_provider.get_diff_files())

    # get pr languages
    pr_languages = sort_files_by_main_languages(git_provider.get_languages(), diff_files)

@ -66,7 +55,7 @@ def get_pr_diff(git_provider: GitProvider, token_handler: TokenHandler, model: s

    # if we are over the limit, start pruning
    patches_compressed, modified_file_names, deleted_file_names = \
        pr_generate_compressed_diff(pr_languages, token_handler, model, add_line_numbers_to_hunks)
        pr_generate_compressed_diff(pr_languages, token_handler, add_line_numbers_to_hunks)

    final_diff = "\n".join(patches_compressed)
    if modified_file_names:

@ -82,12 +71,10 @@ def pr_generate_extended_diff(pr_languages: list, token_handler: TokenHandler,
                              add_line_numbers_to_hunks: bool) -> \
        Tuple[list, int]:
    """
    Generate a standard diff string with patch extension, while counting the number of tokens used and applying diff
    minimization techniques if needed.
    Generate a standard diff string with patch extension, while counting the number of tokens used and applying diff minimization techniques if needed.

    Args:
    - pr_languages: A list of dictionaries representing the languages used in the pull request and their corresponding
    files.
    - pr_languages: A list of dictionaries representing the languages used in the pull request and their corresponding files.
    - token_handler: An object of the TokenHandler class used for handling tokens in the context of the pull request.
    - add_line_numbers_to_hunks: A boolean indicating whether to add line numbers to the hunks in the diff.

@ -100,7 +87,12 @@ def pr_generate_extended_diff(pr_languages: list, token_handler: TokenHandler,
    for lang in pr_languages:
        for file in lang['files']:
            original_file_content_str = file.base_file
            new_file_content_str = file.head_file
            patch = file.patch

            # handle the case of large patch, that initially was not loaded
            patch = load_large_diff(file, new_file_content_str, original_file_content_str, patch)

            if not patch:
                continue

@ -122,13 +114,10 @@ def pr_generate_extended_diff(pr_languages: list, token_handler: TokenHandler,
def pr_generate_compressed_diff(top_langs: list, token_handler: TokenHandler, model: str,
                                convert_hunks_to_line_numbers: bool) -> Tuple[list, list, list]:
    """
    Generate a compressed diff string for a pull request, using diff minimization techniques to reduce the number of
    tokens used.
    Generate a compressed diff string for a pull request, using diff minimization techniques to reduce the number of tokens used.
    Args:
        top_langs (list): A list of dictionaries representing the languages used in the pull request and their
        corresponding files.
        token_handler (TokenHandler): An object of the TokenHandler class used for handling tokens in the context of the
        pull request.
        top_langs (list): A list of dictionaries representing the languages used in the pull request and their corresponding files.
        token_handler (TokenHandler): An object of the TokenHandler class used for handling tokens in the context of the pull request.
        model (str): The model used for tokenization.
        convert_hunks_to_line_numbers (bool): A boolean indicating whether to convert hunks to line numbers in the diff.
    Returns:

@ -158,6 +147,7 @@ def pr_generate_compressed_diff(top_langs: list, token_handler: TokenHandler, mo
            original_file_content_str = file.base_file
            new_file_content_str = file.head_file
            patch = file.patch
            patch = load_large_diff(file, new_file_content_str, original_file_content_str, patch)
            if not patch:
                continue

@ -186,7 +176,7 @@ def pr_generate_compressed_diff(top_langs: list, token_handler: TokenHandler, mo
                # Current logic is to skip the patch if it's too large
                # TODO: Option for alternative logic to remove hunks from the patch to reduce the number of tokens
                # until we meet the requirements
                if get_settings().config.verbosity_level >= 2:
                if settings.config.verbosity_level >= 2:
                    logging.warning(f"Patch too large, minimizing it, {file.filename}")
                if not modified_files_list:
                    total_tokens += token_handler.count_tokens(MORE_MODIFIED_FILES_)

@ -201,15 +191,15 @@ def pr_generate_compressed_diff(top_langs: list, token_handler: TokenHandler, mo
                patch_final = patch
            patches.append(patch_final)
            total_tokens += token_handler.count_tokens(patch_final)
            if get_settings().config.verbosity_level >= 2:
            if settings.config.verbosity_level >= 2:
                logging.info(f"Tokens: {total_tokens}, last filename: {file.filename}")

    return patches, modified_files_list, deleted_files_list
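pr_generate_compressed_diff skips a patch that is too large and records its file in modified_files_list. For every patch it keeps, it adds the patch's tokens to total_tokens. Below is a minimal sketch of that greedy budget strategy, not the actual implementation. `pack_patches` and `whitespace_tokens` are hypothetical names. Whitespace splitting stands in for TokenHandler.count_tokens so that the sketch runs without tiktoken.

```python
from typing import Callable, List, Tuple


def whitespace_tokens(text: str) -> int:
    """Hypothetical stand-in for TokenHandler.count_tokens (no tokenizer needed)."""
    return len(text.split())


def pack_patches(patches: List[Tuple[str, str]], budget: int,
                 count_tokens: Callable[[str], int]) -> Tuple[List[str], List[str]]:
    """Greedily keep whole patches while the running total stays within `budget`.

    Returns the kept patches and the names of files whose patch was skipped.
    """
    kept: List[str] = []
    skipped_files: List[str] = []
    total_tokens = 0
    for filename, patch in patches:
        patch_tokens = count_tokens(patch)
        if total_tokens + patch_tokens > budget:
            # Too large for the remaining budget: report only the file name
            skipped_files.append(filename)
            continue
        kept.append(patch)
        total_tokens += patch_tokens
    return kept, skipped_files


kept, skipped = pack_patches(
    [("a.py", "one two three"), ("b.py", "x " * 10), ("c.py", "four five")],
    budget=6, count_tokens=whitespace_tokens)
print(kept, skipped)  # ['one two three', 'four five'] ['b.py']
```

With a budget of 6, the 3-token and 2-token patches are kept and the 10-token one is reported by file name only. Unlike the real function, this sketch does not charge the skipped file names against the budget.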

async def retry_with_fallback_models(f: Callable):
    model = get_settings().config.model
    fallback_models = get_settings().config.fallback_models
    model = settings.config.model
    fallback_models = settings.config.fallback_models
    if not isinstance(fallback_models, list):
        fallback_models = [fallback_models]
    all_models = [model] + fallback_models

@ -217,70 +207,6 @@ async def retry_with_fallback_models(f: Callable):
        try:
            return await f(model)
        except Exception as e:
            logging.warning(f"Failed to generate prediction with {model}: {traceback.format_exc()}")
            logging.warning(f"Failed to generate prediction with {model}: {e}")
            if i == len(all_models) - 1: # If it's the last iteration
                raise # Re-raise the last exception
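retry_with_fallback_models tries the configured model first, then each entry of config.fallback_models. It re-raises the exception only when the last model also fails. Below is a standalone sketch of that loop. It assumes the model names are passed in directly instead of being read from get_settings(). `retry_with_fallback` and `flaky` are hypothetical names.

```python
import asyncio
import logging
from typing import Awaitable, Callable, List, TypeVar, Union

T = TypeVar("T")


async def retry_with_fallback(f: Callable[[str], Awaitable[T]], model: str,
                              fallback_models: Union[str, List[str]]) -> T:
    """Await f(model); on failure, try each fallback model in order."""
    if not isinstance(fallback_models, list):
        fallback_models = [fallback_models]  # a single model name is allowed too
    all_models = [model] + fallback_models
    for i, current in enumerate(all_models):
        try:
            return await f(current)
        except Exception as e:
            logging.warning(f"Failed to generate prediction with {current}: {e}")
            if i == len(all_models) - 1:  # last model: give up
                raise  # re-raise the last exception
    raise RuntimeError("unreachable: all_models always contains `model`")


async def flaky(model: str) -> str:
    # Hypothetical call that only succeeds on the backup model
    if model == "primary-model":
        raise RuntimeError("service unavailable")
    return f"answer from {model}"


print(asyncio.run(retry_with_fallback(flaky, "primary-model", "backup-model")))
```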

def find_line_number_of_relevant_line_in_file(diff_files: List[FilePatchInfo],
                                              relevant_file: str,
                                              relevant_line_in_file: str) -> Tuple[int, int]:
    """
    Find the line number and absolute position of a relevant line in a file.

    Args:
        diff_files (List[FilePatchInfo]): A list of FilePatchInfo objects representing the patches of files.
        relevant_file (str): The name of the file where the relevant line is located.
        relevant_line_in_file (str): The content of the relevant line.

    Returns:
        Tuple[int, int]: A tuple containing the line number and absolute position of the relevant line in the file.
    """
    position = -1
    absolute_position = -1
    re_hunk_header = re.compile(
        r"^@@ -(\d+)(?:,(\d+))? \+(\d+)(?:,(\d+))? @@[ ]?(.*)")

    for file in diff_files:
        if file.filename.strip() == relevant_file:
            patch = file.patch
            patch_lines = patch.splitlines()

            # try to find the line in the patch using difflib, with some margin of error
            matches_difflib: list[str | Any] = difflib.get_close_matches(relevant_line_in_file,
                                                                         patch_lines, n=3, cutoff=0.93)
            if len(matches_difflib) == 1 and matches_difflib[0].startswith('+'):
                relevant_line_in_file = matches_difflib[0]

            delta = 0
            start1, size1, start2, size2 = 0, 0, 0, 0
            for i, line in enumerate(patch_lines):
                if line.startswith('@@'):
                    delta = 0
                    match = re_hunk_header.match(line)
                    start1, size1, start2, size2 = map(int, match.groups()[:4])
                elif not line.startswith('-'):
                    delta += 1

                if relevant_line_in_file in line and line[0] != '-':
                    position = i
                    absolute_position = start2 + delta - 1
                    break

            if position == -1 and relevant_line_in_file[0] == '+':
                no_plus_line = relevant_line_in_file[1:].lstrip()
                for i, line in enumerate(patch_lines):
                    if line.startswith('@@'):
                        delta = 0
                        match = re_hunk_header.match(line)
                        start1, size1, start2, size2 = map(int, match.groups()[:4])
                    elif not line.startswith('-'):
                        delta += 1

                    if no_plus_line in line and line[0] != '-':
                        # The model might add a '+' to the beginning of the relevant_line_in_file even if originally
                        # it's a context line
                        position = i
                        absolute_position = start2 + delta - 1
                        break
    return position, absolute_position
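find_line_number_of_relevant_line_in_file works in two steps. First, it snaps the line quoted by the model to a near-identical '+' line using difflib.get_close_matches with cutoff 0.93. Second, it walks the patch: each @@ header resets a counter to that hunk's new-file start line, and every line that is not a deletion advances the counter. The sketch below isolates those two steps under simplified assumptions: a single patch string, no second pass without the leading '+', and no matching against header lines. `snap_to_patch_line` and `locate_line` are hypothetical names.

```python
import difflib
import re
from typing import Tuple

# Hunk header: @@ -start1[,size1] +start2[,size2] @@ optional section text
RE_HUNK_HEADER = re.compile(r"^@@ -(\d+)(?:,(\d+))? \+(\d+)(?:,(\d+))? @@[ ]?(.*)")


def snap_to_patch_line(patch: str, candidate: str) -> str:
    """Replace `candidate` with a near-identical added line, if exactly one close match exists."""
    matches = difflib.get_close_matches(candidate, patch.splitlines(), n=3, cutoff=0.93)
    if len(matches) == 1 and matches[0].startswith('+'):
        return matches[0]
    return candidate


def locate_line(patch: str, needle: str) -> Tuple[int, int]:
    """Return (index in patch, new-file line number) of the first non-deleted line containing needle."""
    start2, delta = 0, 0
    for i, line in enumerate(patch.splitlines()):
        if line.startswith('@@'):
            match = RE_HUNK_HEADER.match(line)  # assumes a well-formed header
            start2, delta = int(match.group(3)), 0
        elif not line.startswith('-'):
            delta += 1  # context and added lines exist in the new file
            if needle in line:
                return i, start2 + delta - 1
    return -1, -1


patch = "@@ -1,2 +1,2 @@\n def f():\n-    return 0\n+    return total"
line = snap_to_patch_line(patch, "+   return total")  # the model dropped one space
print(line, locate_line(patch, line))  # +    return total (3, 2)
```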

@ -1,7 +1,8 @@
from jinja2 import Environment, StrictUndefined
from tiktoken import encoding_for_model, get_encoding
from tiktoken import encoding_for_model

from pr_agent.config_loader import get_settings
from pr_agent.algo import MAX_TOKENS
from pr_agent.config_loader import settings


class TokenHandler:

@ -9,12 +10,9 @@ class TokenHandler:
    A class for handling tokens in the context of a pull request.

    Attributes:
    - encoder: An object of the encoding_for_model class from the tiktoken module. Used to encode strings and count the
    number of tokens in them.
    - limit: The maximum number of tokens allowed for the given model, as defined in the MAX_TOKENS dictionary in the
    pr_agent.algo module.
    - prompt_tokens: The number of tokens in the system and user strings, as calculated by the _get_system_user_tokens
    method.
    - encoder: An object of the encoding_for_model class from the tiktoken module. Used to encode strings and count the number of tokens in them.
    - limit: The maximum number of tokens allowed for the given model, as defined in the MAX_TOKENS dictionary in the pr_agent.algo module.
    - prompt_tokens: The number of tokens in the system and user strings, as calculated by the _get_system_user_tokens method.
    """

    def __init__(self, pr, vars: dict, system, user):

@ -27,7 +25,7 @@ class TokenHandler:
        - system: The system string.
        - user: The user string.
        """
        self.encoder = encoding_for_model(get_settings().config.model) if "gpt" in get_settings().config.model else get_encoding("cl100k_base")
        self.encoder = encoding_for_model(settings.config.model)
        self.prompt_tokens = self._get_system_user_tokens(pr, self.encoder, vars, system, user)

    def _get_system_user_tokens(self, pr, encoder, vars: dict, system, user):

@ -47,6 +45,7 @@ class TokenHandler:
        environment = Environment(undefined=StrictUndefined)
        system_prompt = environment.from_string(system).render(vars)
        user_prompt = environment.from_string(user).render(vars)

        system_prompt_tokens = len(encoder.encode(system_prompt))
        user_prompt_tokens = len(encoder.encode(user_prompt))
        return system_prompt_tokens + user_prompt_tokens

@ -1,24 +1,14 @@
from __future__ import annotations

import difflib
from datetime import datetime
import json
import logging
import re
import textwrap
from datetime import datetime
from typing import Any, List

from starlette_context import context
from pr_agent.config_loader import settings

from pr_agent.config_loader import get_settings, global_settings


def get_setting(key: str) -> Any:
    try:
        key = key.upper()
        return context.get("settings", global_settings).get(key, global_settings.get(key, None))
    except Exception:
        return global_settings.get(key, None)

def convert_to_markdown(output_data: dict) -> str:
    """

@ -40,7 +30,7 @@ def convert_to_markdown(output_data: dict) -> str:
        "Security concerns": "🔒",
        "General PR suggestions": "💡",
        "Insights from user's answers": "📝",
        "Code feedback": "🤖",
        "Code suggestions": "🤖",
    }

    for key, value in output_data.items():

@ -50,12 +40,12 @@ def convert_to_markdown(output_data: dict) -> str:
            markdown_text += f"## {key}\n\n"
            markdown_text += convert_to_markdown(value)
        elif isinstance(value, list):
            if key.lower() == 'code feedback':
            if key.lower() == 'code suggestions':
                markdown_text += "\n" # just looks nicer with additional line breaks
            emoji = emojis.get(key, "")
            markdown_text += f"- {emoji} **{key}:**\n\n"
            for item in value:
                if isinstance(item, dict) and key.lower() == 'code feedback':
                if isinstance(item, dict) and key.lower() == 'code suggestions':
                    markdown_text += parse_code_suggestion(item)
                elif item:
                    markdown_text += f" - {item}\n"
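convert_to_markdown renders nested review output recursively. Dictionaries become "##" sections, and lists become bulleted items under an emoji-prefixed key. Below is a reduced sketch of that recursion.

* `EMOJIS` is a hypothetical subset of the emoji table in the diff.
* The special handling of code-suggestion dictionaries (parse_code_suggestion) is omitted.
* The empty-value skip and the scalar branch are assumptions, because those lines are not visible in this diff.

```python
from typing import Any, Dict

# Hypothetical subset of the emoji table shown in the diff
EMOJIS = {"Security concerns": "🔒", "Code suggestions": "🤖"}


def to_markdown(data: Dict[str, Any]) -> str:
    """Render nested review output as Markdown, recursing into sub-dictionaries."""
    text = ""
    for key, value in data.items():
        if not value:
            continue  # skip empty sections
        emoji = EMOJIS.get(key, "")
        if isinstance(value, dict):
            text += f"## {key}\n\n" + to_markdown(value)
        elif isinstance(value, list):
            text += f"- {emoji} **{key}:**\n\n"
            for item in value:
                if item:  # skip empty list entries
                    text += f"  - {item}\n"
        else:
            text += f"- {emoji} **{key}:** {value}\n"
    return text


print(to_markdown({"PR Analysis": {"Security concerns": "No",
                                   "Code suggestions": ["Use a context manager", ""]}}))
```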

@ -100,22 +90,18 @@ def try_fix_json(review, max_iter=10, code_suggestions=False):
    Args:
    - review: A string containing the JSON message to be fixed.
    - max_iter: An integer representing the maximum number of iterations to try and fix the JSON message.
    - code_suggestions: A boolean indicating whether to try and fix JSON messages with code feedback.
    - code_suggestions: A boolean indicating whether to try and fix JSON messages with code suggestions.

    Returns:
    - data: A dictionary containing the parsed JSON data.

    The function attempts to fix broken or incomplete JSON messages by parsing until the last valid code suggestion.
    If the JSON message ends with a closing bracket, the function calls the fix_json_escape_char function to fix the
    message.
    If code_suggestions is True and the JSON message contains code feedback, the function tries to fix the JSON
    message by parsing until the last valid code suggestion.
    The function uses regular expressions to find the last occurrence of "}," with any number of whitespaces or
    newlines.
    If the JSON message ends with a closing bracket, the function calls the fix_json_escape_char function to fix the message.
    If code_suggestions is True and the JSON message contains code suggestions, the function tries to fix the JSON message by parsing until the last valid code suggestion.
    The function uses regular expressions to find the last occurrence of "}," with any number of whitespaces or newlines.
    It tries to parse the JSON message with the closing bracket and checks if it is valid.
    If the JSON message is valid, the parsed JSON data is returned.
    If the JSON message is not valid, the last code suggestion is removed and the process is repeated until a valid JSON
    message is obtained or the maximum number of iterations is reached.
    If the JSON message is not valid, the last code suggestion is removed and the process is repeated until a valid JSON message is obtained or the maximum number of iterations is reached.
    If a valid JSON message is not obtained, an error is logged and an empty dictionary is returned.
    """

@ -128,8 +114,7 @@ def try_fix_json(review, max_iter=10, code_suggestions=False):
    else:
        closing_bracket = "]}}"

    if (review.rfind("'Code feedback': [") > 0 or review.rfind('"Code feedback": [') > 0) or \
            (review.rfind("'Code suggestions': [") > 0 or review.rfind('"Code suggestions": [') > 0) :
    if review.rfind("'Code suggestions': [") > 0 or review.rfind('"Code suggestions": [') > 0:
        last_code_suggestion_ind = [m.end() for m in re.finditer(r"\}\s*,", review)][-1] - 1
        valid_json = False
        iter_count = 0
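The try_fix_json docstring describes a repair loop. It cuts the text back to the last "}," (located with re.finditer), appends closing brackets and retries json.loads, dropping one more list element after each failure. Below is a sketch of that loop under two assumptions. First, the closing string is passed in as a parameter; the real function chooses it itself, and this diff shows its "]}}" branch. Second, the function name `truncate_to_valid_json` is hypothetical.

```python
import json
import re
from typing import Any, Dict


def truncate_to_valid_json(review: str, closing_bracket: str = "]}", max_iter: int = 10) -> Dict[str, Any]:
    """Recover a truncated JSON object whose last field is a list of objects.

    Cut the text at the last "}," (a complete list element followed by a comma),
    append `closing_bracket`, and retry with one fewer element on each failure.
    """
    # End offsets of every "}" that is followed (after optional whitespace) by ","
    cut_points = [m.end() for m in re.finditer(r"\}\s*,", review)]
    for _ in range(max_iter):
        if not cut_points:
            break
        cut = cut_points.pop() - 1  # index of the "," itself
        try:
            return json.loads(review[:cut].strip() + closing_bracket)
        except json.JSONDecodeError:
            continue  # still invalid: drop one more element
    return {}


truncated = '{"Code suggestions": [{"file": "a.py"}, {"file": "b.py"}, {"file": "c'
print(truncate_to_valid_json(truncated))
# {'Code suggestions': [{'file': 'a.py'}, {'file': 'b.py'}]}
```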

@ -196,65 +181,33 @@ def convert_str_to_datetime(date_str):
    return datetime.strptime(date_str, datetime_format)


def load_large_diff(filename, new_file_content_str: str, original_file_content_str: str) -> str:
def load_large_diff(file, new_file_content_str: str, original_file_content_str: str, patch: str) -> str:
    """
    Generate a patch for a modified file by comparing the original content of the file with the new content provided as
    input.
    Generate a patch for a modified file by comparing the original content of the file with the new content provided as input.

    Args:
        file: The file object for which the patch needs to be generated.
        new_file_content_str: The new content of the file as a string.
        original_file_content_str: The original content of the file as a string.
        patch: An optional patch string that can be provided as input.

    Returns:
        The generated or provided patch string.

    Raises:
        None.

    Additional Information:
        - If 'patch' is not provided as input, the function generates a patch using the 'difflib' library and returns it as output.
        - If the 'settings.config.verbosity_level' is greater than or equal to 2, a warning message is logged indicating that the file was modified but no patch was found, and a patch is manually created.
    """
    patch = ""
    try:
        diff = difflib.unified_diff(original_file_content_str.splitlines(keepends=True),
                                    new_file_content_str.splitlines(keepends=True))
        if get_settings().config.verbosity_level >= 2:
            logging.warning(f"File was modified, but no patch was found. Manually creating patch: {filename}.")
        patch = ''.join(diff)
    except Exception:
        pass
    if not patch: # to Do - also add condition for file extension
        try:
            diff = difflib.unified_diff(original_file_content_str.splitlines(keepends=True),
                                        new_file_content_str.splitlines(keepends=True))
            if settings.config.verbosity_level >= 2:
                logging.warning(f"File was modified, but no patch was found. Manually creating patch: {file.filename}.")
            patch = ''.join(diff)
        except Exception:
            pass
    return patch
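In the version of load_large_diff that takes a `patch` argument, a patch is built with difflib.unified_diff only when the provider did not supply one. Below is a minimal sketch of that fallback. `make_patch` and `ensure_patch` are hypothetical helpers, and the verbosity-gated warning is left out.

```python
import difflib


def make_patch(original: str, new: str) -> str:
    """Build a unified diff between two versions of a file's content."""
    diff = difflib.unified_diff(original.splitlines(keepends=True),
                                new.splitlines(keepends=True))
    return ''.join(diff)


def ensure_patch(patch: str, original: str, new: str) -> str:
    """Keep the provider's patch when present; otherwise build one locally."""
    return patch or make_patch(original, new)


print(ensure_patch("", "a\nb\n", "a\nc\n"))
```

Passing `keepends=True` preserves the original line endings, so the joined output is a well-formed patch that can be handed to the same processing as a provider-supplied one.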

def update_settings_from_args(args: List[str]) -> List[str]:
    """
    Update the settings of the Dynaconf object based on the arguments passed to the function.

    Args:
        args: A list of arguments passed to the function.
        Example args: ['--pr_code_suggestions.extra_instructions="be funny',
                      '--pr_code_suggestions.num_code_suggestions=3']

    Returns:
        None

    Raises:
        ValueError: If the argument is not in the correct format.

    """
    other_args = []
    if args:
        for arg in args:
            arg = arg.strip()
            if arg.startswith('--'):
                arg = arg.strip('-').strip()
                vals = arg.split('=')
                if len(vals) != 2:
                    logging.error(f'Invalid argument format: {arg}')
                    other_args.append(arg)
                    continue
                key, value = vals
                key = key.strip().upper()
                value = value.strip()
                get_settings().set(key, value)
                logging.info(f'Updated setting {key} to: "{value}"')
            else:
                other_args.append(arg)
    return other_args
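update_settings_from_args treats every "--section.key=value" argument as a settings override with an upper-cased key, and passes any other argument through. The sketch below reproduces that parsing, with a plain dict standing in for the Dynaconf object returned by get_settings(). `split_setting_args` is a hypothetical name.

```python
import logging
from typing import Dict, List, Optional, Tuple


def split_setting_args(args: Optional[List[str]]) -> Tuple[Dict[str, str], List[str]]:
    """Separate '--section.key=value' overrides from the remaining arguments."""
    overrides: Dict[str, str] = {}  # stand-in for get_settings().set(key, value)
    other_args: List[str] = []
    for arg in args or []:
        arg = arg.strip()
        if not arg.startswith('--'):
            other_args.append(arg)
            continue
        arg = arg.strip('-').strip()
        vals = arg.split('=')
        if len(vals) != 2:
            logging.error(f'Invalid argument format: {arg}')
            other_args.append(arg)  # passed through with its dashes stripped
            continue
        key, value = vals
        overrides[key.strip().upper()] = value.strip()
    return overrides, other_args


overrides, rest = split_setting_args(
    ['--pr_code_suggestions.num_code_suggestions=3', 'what changed?'])
print(overrides, rest)
# {'PR_CODE_SUGGESTIONS.NUM_CODE_SUGGESTIONS': '3'} ['what changed?']
```

The argument is split on every "=", as in the original. A value that itself contains "=" therefore yields more than two parts and is returned as an ordinary argument instead of being applied.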

@ -3,11 +3,14 @@ import asyncio
import logging
import os

from pr_agent.agent.pr_agent import PRAgent, commands
from pr_agent.config_loader import get_settings
from pr_agent.tools.pr_code_suggestions import PRCodeSuggestions
from pr_agent.tools.pr_description import PRDescription
from pr_agent.tools.pr_information_from_user import PRInformationFromUser
from pr_agent.tools.pr_questions import PRQuestions
from pr_agent.tools.pr_reviewer import PRReviewer


def run(inargs=None):
def run(args=None):
    parser = argparse.ArgumentParser(description='AI based pull request analyzer', usage=
"""\
Usage: cli.py --pr-url <URL on supported git hosting service> <command> [<args>].

@ -24,22 +27,75 @@ ask / ask_question [question] - Ask a question about the PR.
describe / describe_pr - Modify the PR title and description based on the PR's contents.
improve / improve_code - Suggest improvements to the code in the PR as pull request comments ready to commit.
reflect - Ask the PR author questions about the PR.
update_changelog - Update the changelog based on the PR's contents.

To edit any configuration parameter from 'configuration.toml', just add -config_path=<value>.
For example: '- cli.py --pr-url=... review --pr_reviewer.extra_instructions="focus on the file: ..."'
""")
    parser.add_argument('--pr_url', type=str, help='The URL of the PR to review', required=True)
    parser.add_argument('command', type=str, help='The', choices=commands, default='review')
    parser.add_argument('command', type=str, help='The', choices=['review', 'review_pr',
                                                                  'ask', 'ask_question',
                                                                  'describe', 'describe_pr',
                                                                  'improve', 'improve_code',
                                                                  'reflect', 'review_after_reflect'],
                        default='review')
    parser.add_argument('rest', nargs=argparse.REMAINDER, default=[])
    args = parser.parse_args(inargs)
    args = parser.parse_args(args)
    logging.basicConfig(level=os.environ.get("LOGLEVEL", "INFO"))
    command = args.command.lower()
    get_settings().set("CONFIG.CLI_MODE", True)
    result = asyncio.run(PRAgent().handle_request(args.pr_url, command + " " + " ".join(args.rest)))
    if not result:
    commands = {
        'ask': _handle_ask_command,
        'ask_question': _handle_ask_command,
        'describe': _handle_describe_command,
        'describe_pr': _handle_describe_command,
        'improve': _handle_improve_command,
        'improve_code': _handle_improve_command,
        'review': _handle_review_command,
        'review_pr': _handle_review_command,
        'reflect': _handle_reflect_command,
        'review_after_reflect': _handle_review_after_reflect_command
    }
    if command in commands:
        commands[command](args.pr_url, args.rest)
    else:
        print(f"Unknown command: {command}")
        parser.print_help()


def _handle_ask_command(pr_url: str, rest: list):
    if len(rest) == 0:
        print("Please specify a question")
        return
    print(f"Question: {' '.join(rest)} about PR {pr_url}")
    reviewer = PRQuestions(pr_url, rest)
    asyncio.run(reviewer.answer())


def _handle_describe_command(pr_url: str, rest: list):
    print(f"PR description: {pr_url}")
    reviewer = PRDescription(pr_url)
    asyncio.run(reviewer.describe())


def _handle_improve_command(pr_url: str, rest: list):
    print(f"PR code suggestions: {pr_url}")
    reviewer = PRCodeSuggestions(pr_url)
    asyncio.run(reviewer.suggest())


def _handle_review_command(pr_url: str, rest: list):
    print(f"Reviewing PR: {pr_url}")
    reviewer = PRReviewer(pr_url, cli_mode=True, args=rest)
    asyncio.run(reviewer.review())


def _handle_reflect_command(pr_url: str, rest: list):
    print(f"Asking the PR author questions: {pr_url}")
    reviewer = PRInformationFromUser(pr_url)
    asyncio.run(reviewer.generate_questions())


def _handle_review_after_reflect_command(pr_url: str, rest: list):
    print(f"Processing author's answers and sending review: {pr_url}")
    reviewer = PRReviewer(pr_url, cli_mode=True, is_answer=True)
    asyncio.run(reviewer.review())


if __name__ == '__main__':
    run()
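The two sides of this hunk dispatch commands differently. One sends the whole command line to PRAgent().handle_request. The other keeps a dictionary that maps each command name and its alias to a handler function. Below is a minimal sketch of the dictionary-dispatch pattern with argparse. `COMMANDS`, `_review`, `_ask` and `run` are hypothetical stand-ins that return strings instead of running PR tools.

```python
import argparse
from typing import Callable, Dict, List


def _review(pr_url: str, rest: List[str]) -> str:
    return f"review {pr_url}"  # hypothetical handler


def _ask(pr_url: str, rest: List[str]) -> str:
    return f"ask {pr_url}: {' '.join(rest)}"  # hypothetical handler


# Each command and its alias point at the same handler
COMMANDS: Dict[str, Callable[[str, List[str]], str]] = {
    'review': _review, 'review_pr': _review,
    'ask': _ask, 'ask_question': _ask,
}


def run(argv: List[str]) -> str:
    parser = argparse.ArgumentParser(description='AI based pull request analyzer')
    parser.add_argument('--pr_url', type=str, required=True)
    parser.add_argument('command', type=str, choices=list(COMMANDS))
    parser.add_argument('rest', nargs=argparse.REMAINDER, default=[])  # everything after the command
    args = parser.parse_args(argv)
    return COMMANDS[args.command.lower()](args.pr_url, args.rest)


print(run(["--pr_url", "https://example.com/pr/1", "ask", "why", "this?"]))
# ask https://example.com/pr/1: why this?
```

Because `choices` comes from the dictionary keys, the parser and the dispatch table cannot drift apart when a command is added.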

@ -1,64 +1,20 @@
from os.path import abspath, dirname, join
from pathlib import Path
from typing import Optional

from dynaconf import Dynaconf
from starlette_context import context

PR_AGENT_TOML_KEY = 'pr-agent'

current_dir = dirname(abspath(__file__))
global_settings = Dynaconf(
settings = Dynaconf(
    envvar_prefix=False,
    merge_enabled=True,
    settings_files=[join(current_dir, f) for f in [
        "settings/.secrets.toml",
        "settings/configuration.toml",
        "settings/language_extensions.toml",
        "settings/pr_reviewer_prompts.toml",
        "settings/pr_questions_prompts.toml",
        "settings/pr_description_prompts.toml",
        "settings/pr_code_suggestions_prompts.toml",
        "settings/pr_information_from_user_prompts.toml",
        "settings/pr_update_changelog_prompts.toml",
        "settings_prod/.secrets.toml"
    ]]
        "settings/.secrets.toml",
        "settings/configuration.toml",
        "settings/language_extensions.toml",
        "settings/pr_reviewer_prompts.toml",
        "settings/pr_questions_prompts.toml",
        "settings/pr_description_prompts.toml",
        "settings/pr_code_suggestions_prompts.toml",
        "settings/pr_information_from_user_prompts.toml",
        "settings_prod/.secrets.toml"
    ]]
)


def get_settings():
    try:
        return context["settings"]
    except Exception:
        return global_settings

# Add local configuration from pyproject.toml of the project being reviewed
def _find_repository_root() -> Path:
    """
    Identify project root directory by recursively searching for the .git directory in the parent directories.
    """
    cwd = Path.cwd().resolve()
    no_way_up = False
    while not no_way_up: