mirror of https://github.com/qodo-ai/pr-agent.git

synced 2025-07-10 15:50:37 +08:00

Compare commits

79 Commits

mrT23-patc ... v0.25

| SHA1 |
|---|
| 91bf3c0749 |
| 159155785e |
| eabc296246 |
| b44030114e |
| 1d6f87be3b |
| a7c6fa7bd2 |
| a825aec5f3 |
| 4df097c228 |
| 6871e1b27a |
| 4afe05761d |
| 7d1b6c2f0a |
| 3547cf2057 |
| f2043d639c |
| 6240de3898 |
| f08b20c667 |
| e64b468556 |
| d48d14dac7 |
| eb0c959ca9 |
| 741a70ad9d |
| 22ee03981e |
| b1336e7d08 |
| 751caca141 |
| 612004727c |
| 577ee0241d |
| a141ca133c |
| a14b6a580d |
| cc5005c490 |
| 3a5d0f54ce |
| cd8ba4f59f |
| fe27f96bf1 |
| 2c3aa7b2dc |
| c934523f2d |
| 2f4545dc15 |
| cbd490b3d7 |
| b07f96d26a |
| 065777040f |
| 9c82047dc3 |
| e0c15409bb |
| d956c72cb6 |
| dfb3d801cf |
| 5c5a3e267c |
| f9380c2440 |
| e6a1f14c0e |
| 6339845eb4 |
| 732cc18fd6 |
| 84d0f80c81 |
| ee26bf35c1 |
| 7a5e9102fd |
| a8c97bfa73 |
| af653a048f |
| d2663f959a |
| e650fe9ce9 |
| daeca42ae8 |
| 04496f9b0e |
| 0eacb3e35e |
| c5ed2f040a |
| c394fc2767 |
| 157251493a |
| 4a982a849d |
| 6e3544f523 |
| bf3ebbb95f |
| eb44ecb1be |
| 45bae48701 |
| b2181e4c79 |
| 5939d3b17b |
| c1f4964a55 |
| 022e407d84 |
| 93ba2d239a |
| fa49dd5167 |
| 16029e66ad |
| 7bd6713335 |
| ef3241285d |
| d9ef26dc1c |
| 02949b2b96 |
| 443d06df06 |
| 852bb371af |
| 7c90e44656 |
| 81dea65856 |
| a3d572fb69 |

.github/workflows/build-and-test.yaml (vendored, 2 changes)

@@ -37,5 +37,3 @@ jobs:
name: Test dev docker
run: |
docker run --rm codiumai/pr-agent:test pytest -v tests/unittest

.github/workflows/pr-agent-review.yaml (vendored, 3 changes)

@@ -30,6 +30,3 @@ jobs:
GITHUB_ACTION_CONFIG.AUTO_DESCRIBE: true
GITHUB_ACTION_CONFIG.AUTO_REVIEW: true
GITHUB_ACTION_CONFIG.AUTO_IMPROVE: true

.github/workflows/pre-commit.yml (vendored, Normal file, 17 changes)

@@ -0,0 +1,17 @@
# disabled. We might run it manually if needed.
name: pre-commit

on:
workflow_dispatch:
# pull_request:
# push:
# branches: [main]

jobs:
pre-commit:
runs-on: ubuntu-latest
steps:
- uses: actions/checkout@v3
- uses: actions/setup-python@v5
# SEE https://github.com/pre-commit/action
- uses: pre-commit/action@v3.0.1

.pre-commit-config.yaml (Normal file, 46 changes)

@@ -0,0 +1,46 @@
# See https://pre-commit.com for more information
# See https://pre-commit.com/hooks.html for more hooks

default_language_version:
python: python3

repos:
- repo: https://github.com/pre-commit/pre-commit-hooks
rev: v5.0.0
hooks:
- id: check-added-large-files
- id: check-toml
- id: check-yaml
- id: end-of-file-fixer
- id: trailing-whitespace
# - repo: https://github.com/rhysd/actionlint
# rev: v1.7.3
# hooks:
# - id: actionlint
- repo: https://github.com/pycqa/isort
# rev must match what's in dev-requirements.txt
rev: 5.13.2
hooks:
- id: isort
# - repo: https://github.com/PyCQA/bandit
# rev: 1.7.10
# hooks:
# - id: bandit
# args: [
# "-c", "pyproject.toml",
# ]
# - repo: https://github.com/astral-sh/ruff-pre-commit
# rev: v0.7.1
# hooks:
# - id: ruff
# args:
# - --fix
# - id: ruff-format
# - repo: https://github.com/PyCQA/autoflake
# rev: v2.3.1
# hooks:
# - id: autoflake
# args:
# - --in-place
# - --remove-all-unused-imports
# - --remove-unused-variables

41

README.md

41

README.md

@ -43,43 +43,38 @@ Qode Merge PR-Agent aims to help efficiently review and handle pull requests, by

|

||||

|

||||

## News and Updates

|

||||

|

||||

### October 27, 2024

|

||||

### December 2, 2024

|

||||

|

||||

Qodo Merge PR Agent will now automatically document accepted code suggestions in a dedicated wiki page (`.pr_agent_accepted_suggestions`), enabling users to track historical changes, assess the tool's effectiveness, and learn from previously implemented recommendations in the repository.

|

||||

Open-source repositories can now freely use Qodo Merge Pro, and enjoy easy one-click installation using our dedicated [app](https://github.com/apps/qodo-merge-pro-for-open-source).

|

||||

|

||||

This dedicated wiki page will also serve as a foundation for future AI model improvements, allowing it to learn from historically implemented suggestions and generate more targeted, contextually relevant recommendations.

|

||||

Read more about this novel feature [here](https://qodo-merge-docs.qodo.ai/tools/improve/#suggestion-tracking).

|

||||

|

||||

<kbd><img href="https://qodo.ai/images/pr_agent/pr_agent_accepted_suggestions1.png" src="https://qodo.ai/images/pr_agent/pr_agent_accepted_suggestions1.png" width="768"></kbd>

|

||||

<kbd><img src="https://github.com/user-attachments/assets/b0838724-87b9-43b0-ab62-73739a3a855c" width="512"></kbd>

|

||||

|

||||

|

||||

### November 18, 2024

|

||||

|

||||

### October 21, 2024

|

||||

**Disable publishing labels by default:**

|

||||

A new mode was enabled by default for code suggestions - `--pr_code_suggestions.focus_only_on_problems=true`:

|

||||

|

||||

The default setting for `pr_description.publish_labels` has been updated to `false`. This means that labels generated by the `/describe` tool will no longer be published, unless this configuration is explicitly set to `true`.

|

||||

- This option reduces the number of code suggestions received

|

||||

- The suggestions will focus more on identifying and fixing code problems, rather than style considerations like best practices, maintainability, or readability.

|

||||

- The suggestions will be categorized into just two groups: "Possible Issues" and "General".

|

||||

|

||||

We constantly strive to balance informative AI analysis with reducing unnecessary noise. User feedback indicated that in many cases, the original PR title alone provides sufficient information, making the generated labels (`enhancement`, `documentation`, `bug fix`, ...) redundant.

|

||||

The [`review_effort`](https://qodo-merge-docs.qodo.ai/tools/review/#configuration-options) label, generated by the `review` tool, will still be published by default, as it provides valuable information enabling reviewers to prioritize small PRs first.

|

||||

Still, if you prefer the previous mode, you can set `--pr_code_suggestions.focus_only_on_problems=false` in the [configuration file](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/).

|

||||

|

||||

However, every user has different preferences. To still publish the `describe` labels, set `pr_description.publish_labels=true` in the [configuration file](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/).

|

||||

For more tailored and relevant labeling, we recommend using the [`custom_labels 💎`](https://qodo-merge-docs.qodo.ai/tools/custom_labels/) tool, that allows generating labels specific to your project's needs.

|

||||

**Example results:**

|

||||

|

||||

<kbd></kbd>

|

||||

Original mode

|

||||

|

||||

→

|

||||

<kbd><img src="https://qodo.ai/images/pr_agent/code_suggestions_original_mode.png" width="512"></kbd>

|

||||

|

||||

<kbd></kbd>

|

||||

Focused mode

|

||||

|

||||

<kbd><img src="https://qodo.ai/images/pr_agent/code_suggestions_focused_mode.png" width="512"></kbd>

|

||||

|

||||

|

||||

### November 4, 2024

|

||||

|

||||

### October 14, 2024

|

||||

Improved support for GitHub enterprise server with [GitHub Actions](https://qodo-merge-docs.qodo.ai/installation/github/#action-for-github-enterprise-server)

|

||||

|

||||

### October 10, 2024

|

||||

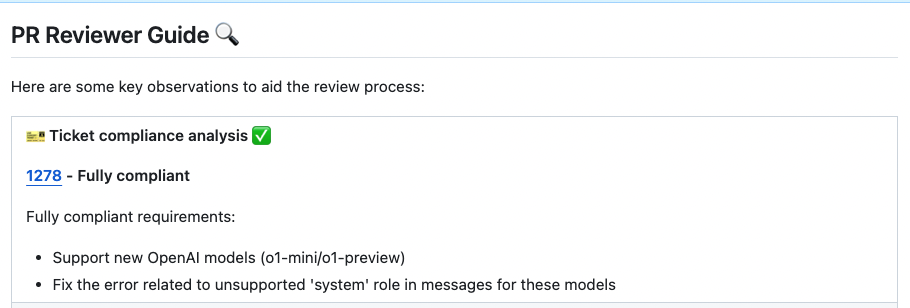

New ability for the `review` tool - **ticket compliance feedback**. If the PR contains a ticket number, PR-Agent will check if the PR code actually [complies](https://github.com/Codium-ai/pr-agent/pull/1279#issuecomment-2404042130) with the ticket requirements.

|

||||

|

||||

<kbd><img src="https://github.com/user-attachments/assets/4a2a728b-5f47-40fa-80cc-16efd296938c" width="768"></kbd>

|

||||

Qodo Merge PR Agent will now leverage context from Jira or GitHub tickets to enhance the PR Feedback. Read more about this feature

|

||||

[here](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/)

|

||||

|

||||

|

||||

## Overview

|

||||

|

||||

@@ -2,4 +2,3 @@ We take your code's security and privacy seriously:

- The Chrome extension will not send your code to any external servers.
- For private repositories, we will first validate the user's identity and permissions. After authentication, we generate responses using the existing Qodo Merge Pro integration.

docs/docs/core-abilities/fetching_ticket_context.md (Normal file, 115 changes)

@@ -0,0 +1,115 @@
# Fetching Ticket Context for PRs
## Overview
Qodo Merge PR Agent streamlines code review workflows by seamlessly connecting with multiple ticket management systems.
This integration enriches the review process by automatically surfacing relevant ticket information and context alongside code changes.


## Affected Tools

Ticket Recognition Requirements:

1. The PR description should contain a link to the ticket.
2. For Jira tickets, you should follow the instructions in [Jira Integration](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/#jira-integration) in order to authenticate with Jira.


### Describe tool
Qodo Merge PR Agent will recognize the ticket and use the ticket content (title, description, labels) to provide additional context for the code changes.
By understanding the reasoning and intent behind modifications, the LLM can offer more insightful and relevant code analysis.

### Review tool
Similarly to the `describe` tool, the `review` tool will use the ticket content to provide additional context for the code changes.

In addition, this feature will evaluate how well a Pull Request (PR) adheres to its original purpose/intent as defined by the associated ticket or issue mentioned in the PR description.
Each ticket will be assigned a label (Compliance/Alignment level), Indicates the degree to which the PR fulfills its original purpose, Options: Fully compliant, Partially compliant or Not compliant.


{width=768}

By default, the tool will automatically validate if the PR complies with the referenced ticket.
If you want to disable this feedback, add the following line to your configuration file:

```toml
[pr_reviewer]
require_ticket_analysis_review=false
```

## Providers

### Github Issues Integration

Qodo Merge PR Agent will automatically recognize Github issues mentioned in the PR description and fetch the issue content.
Examples of valid GitHub issue references:

- `https://github.com/<ORG_NAME>/<REPO_NAME>/issues/<ISSUE_NUMBER>`
- `#<ISSUE_NUMBER>`
- `<ORG_NAME>/<REPO_NAME>#<ISSUE_NUMBER>`

Since Qodo Merge PR Agent is integrated with GitHub, it doesn't require any additional configuration to fetch GitHub issues.
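For illustration only, the three GitHub reference styles listed above could be detected with regular expressions along these lines. This is a hypothetical Python sketch, not code from PR-Agent or from this compare. The function name `find_github_issue_refs` and the patterns are assumptions, and a bare `#<ISSUE_NUMBER>` is assumed to point at the PR's own repository.

```python
import re
from typing import List, Tuple

# Hypothetical patterns for the three reference styles listed above.
FULL_URL = re.compile(r"https://github\.com/([\w.-]+)/([\w.-]+)/issues/(\d+)")
SHORT_REPO = re.compile(r"(?<![\w./-])([\w.-]+)/([\w.-]+)#(\d+)")
BARE_NUMBER = re.compile(r"(?<![\w/#])#(\d+)\b")


def find_github_issue_refs(description: str, owner: str, repo: str) -> List[Tuple[str, str, int]]:
    """Return unique (owner, repo, issue_number) tuples found in a PR description."""
    refs: List[Tuple[str, str, int]] = []

    def add(ref: Tuple[str, str, int]) -> None:
        if ref not in refs:
            refs.append(ref)

    for m in FULL_URL.finditer(description):
        add((m.group(1), m.group(2), int(m.group(3))))
    # Blank out full URLs so their path segments are not matched again below.
    rest = FULL_URL.sub(" ", description)
    for m in SHORT_REPO.finditer(rest):
        add((m.group(1), m.group(2), int(m.group(3))))
    rest = SHORT_REPO.sub(" ", rest)
    for m in BARE_NUMBER.finditer(rest):
        # Assumption: a bare "#123" refers to the PR's own repository.
        add((owner, repo, int(m.group(1))))
    return refs
```

Full URLs are removed before the shorter patterns run, so the `owner/repo` part of a URL is not counted twice.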

### Jira Integration 💎

We support both Jira Cloud and Jira Server/Data Center.
To integrate with Jira, The PR Description should contain a link to the Jira ticket.

For Jira integration, include a ticket reference in your PR description using either the complete URL format `https://<JIRA_ORG>.atlassian.net/browse/ISSUE-123` or the shortened ticket ID `ISSUE-123`.

!!! note "Jira Base URL"
If using the shortened format, ensure your configuration file contains the Jira base URL under the [jira] section like this:

```toml
[jira]
jira_base_url = "https://<JIRA_ORG>.atlassian.net"
```
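The following hypothetical Python sketch covers the two Jira reference formats described above. Full `atlassian.net/browse/` URLs are taken as-is. Short IDs such as `ISSUE-123` are resolved only when a `jira_base_url` is configured. The helper name and the key pattern (uppercase project key, dash, number) are assumptions, not PR-Agent's implementation.

```python
import re
from typing import List

# Assumed key shape: uppercase project key, a dash, and a number (e.g. ISSUE-123).
JIRA_KEY = r"[A-Z][A-Z0-9]+-\d+"
JIRA_URL = re.compile(r"https://[\w.-]+\.atlassian\.net/browse/(" + JIRA_KEY + r")")
JIRA_SHORT = re.compile(r"(?<![\w/-])(" + JIRA_KEY + r")\b")


def jira_ticket_urls(description: str, jira_base_url: str = "") -> List[str]:
    """Collect Jira ticket URLs referenced in a PR description (hypothetical helper)."""
    urls: List[str] = []
    for m in JIRA_URL.finditer(description):
        if m.group(0) not in urls:
            urls.append(m.group(0))
    # Short IDs can only be turned into links when a base URL is configured.
    if jira_base_url:
        rest = JIRA_URL.sub(" ", description)
        for m in JIRA_SHORT.finditer(rest):
            url = f"{jira_base_url.rstrip('/')}/browse/{m.group(1)}"
            if url not in urls:
                urls.append(url)
    return urls
```

A bare key pattern like this also matches strings such as `UTF-8`, so a real implementation would probably need extra filtering.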

|

||||

|

||||

#### Jira Cloud 💎

|

||||

There are two ways to authenticate with Jira Cloud:

|

||||

|

||||

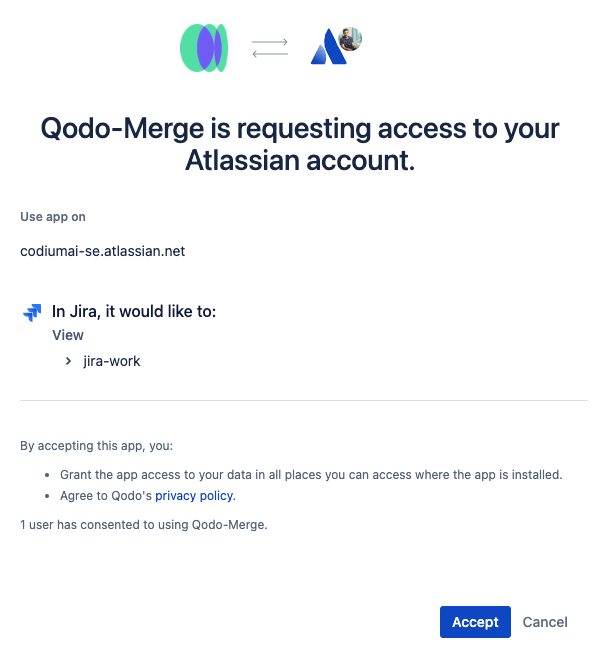

**1) Jira App Authentication**

|

||||

|

||||

The recommended way to authenticate with Jira Cloud is to install the Qodo Merge app in your Jira Cloud instance. This will allow Qodo Merge to access Jira data on your behalf.

|

||||

|

||||

Installation steps:

|

||||

|

||||

1. Click [here](https://auth.atlassian.com/authorize?audience=api.atlassian.com&client_id=8krKmA4gMD8mM8z24aRCgPCSepZNP1xf&scope=read%3Ajira-work%20offline_access&redirect_uri=https%3A%2F%2Fregister.jira.pr-agent.codium.ai&state=qodomerge&response_type=code&prompt=consent) to install the Qodo Merge app in your Jira Cloud instance, click the `accept` button.<br>

|

||||

{width=384}

|

||||

|

||||

2. After installing the app, you will be redirected to the Qodo Merge registration page. and you will see a success message.<br>

|

||||

{width=384}

|

||||

|

||||

3. Now you can use the Jira integration in Qodo Merge PR Agent.

|

||||

|

||||

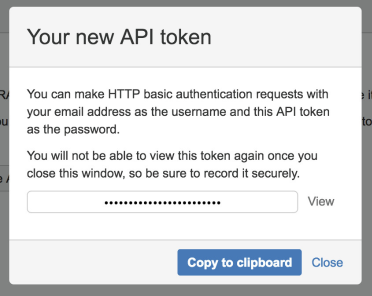

**2) Email/Token Authentication**

|

||||

|

||||

You can create an API token from your Atlassian account:

|

||||

|

||||

1. Log in to https://id.atlassian.com/manage-profile/security/api-tokens.

|

||||

|

||||

2. Click Create API token.

|

||||

|

||||

3. From the dialog that appears, enter a name for your new token and click Create.

|

||||

|

||||

4. Click Copy to clipboard.

|

||||

|

||||

{width=384}

|

||||

|

||||

5. In your [configuration file](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/) add the following lines:

|

||||

|

||||

```toml

|

||||

[jira]

|

||||

jira_api_token = "YOUR_API_TOKEN"

|

||||

jira_api_email = "YOUR_EMAIL"

|

||||

```

|

||||

|

||||

|

||||

#### Jira Server/Data Center 💎

|

||||

|

||||

Currently, we only support the Personal Access Token (PAT) Authentication method.

|

||||

|

||||

1. Create a [Personal Access Token (PAT)](https://confluence.atlassian.com/enterprise/using-personal-access-tokens-1026032365.html) in your Jira account

|

||||

2. In your Configuration file/Environment variables/Secrets file, add the following lines:

|

||||

|

||||

```toml

|

||||

[jira]

|

||||

jira_base_url = "YOUR_JIRA_BASE_URL" # e.g. https://jira.example.com

|

||||

jira_api_token = "YOUR_API_TOKEN"

|

||||

```
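The token-based options above map onto different HTTP `Authorization` headers. The helper below is a hypothetical Python sketch, not PR-Agent's code, and it sends no request. It assumes Atlassian's documented schemes: basic auth with `email:api_token` for Jira Cloud API tokens, and a bearer token for Server/Data Center PATs. It builds the header from the `[jira]` values.

```python
import base64
from typing import Dict, Optional


def jira_auth_headers(api_token: str, api_email: Optional[str] = None) -> Dict[str, str]:
    """Build an Authorization header from the [jira] settings (hypothetical helper).

    With an email (Jira Cloud API token): HTTP basic auth over "email:token".
    Without one (Server/Data Center PAT): a bearer token.
    """
    if api_email:
        raw = f"{api_email}:{api_token}".encode("utf-8")
        return {"Authorization": "Basic " + base64.b64encode(raw).decode("ascii")}
    return {"Authorization": f"Bearer {api_token}"}
```

Such a header could then accompany a request to an issue endpoint such as `<jira_base_url>/rest/api/2/issue/ISSUE-123`. That URL shape comes from the Jira REST API, not from this document.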

@@ -1,6 +1,7 @@
# Core Abilities
Qodo Merge utilizes a variety of core abilities to provide a comprehensive and efficient code review experience. These abilities include:

- [Fetching ticket context](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/)
- [Local and global metadata](https://qodo-merge-docs.qodo.ai/core-abilities/metadata/)
- [Dynamic context](https://qodo-merge-docs.qodo.ai/core-abilities/dynamic_context/)
- [Self-reflection](https://qodo-merge-docs.qodo.ai/core-abilities/self_reflection/)

@@ -46,6 +46,5 @@ This results in a more refined and valuable set of suggestions for the user, sav
## Appendix - Relevant Configuration Options
```
[pr_code_suggestions]
self_reflect_on_suggestions = true # Enable self-reflection on code suggestions
suggestions_score_threshold = 0 # Filter out suggestions with a score below this threshold (0-10)
```
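The two options in the appendix above work together. Self-reflection assigns each suggestion a score, and `suggestions_score_threshold` drops the suggestions scored below it. The minimal sketch below assumes suggestions are dicts with an integer `score` key, which is a hypothetical shape rather than PR-Agent's data model.

```python
from typing import Dict, List


def filter_and_sort_suggestions(suggestions: List[Dict], threshold: int = 0) -> List[Dict]:
    """Drop suggestions scored below `threshold`, then sort the rest by score, highest first."""
    if not 0 <= threshold <= 10:
        raise ValueError("suggestions_score_threshold is expected to be in the range 0-10")
    kept = [s for s in suggestions if s["score"] >= threshold]
    return sorted(kept, key=lambda s: s["score"], reverse=True)
```

With the default threshold of 0 nothing is filtered out, so only the ordering changes.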

@@ -51,10 +51,12 @@ stages:
```
This script will run Qodo Merge on every new merge request, with the `improve`, `review`, and `describe` commands.
Note that you need to export the `azure_devops__pat` and `OPENAI_KEY` variables in the Azure DevOps pipeline settings (Pipelines -> Library -> + Variable group):

{width=468}

Make sure to give pipeline permissions to the `pr_agent` variable group.

> Note that Azure Pipelines lacks support for triggering workflows from PR comments. If you find a viable solution, please contribute it to our [issue tracker](https://github.com/Codium-ai/pr-agent/issues)

## Azure DevOps from CLI

@@ -38,6 +38,7 @@ You can also modify the `script` section to run different Qodo Merge commands, o

Note that if your base branches are not protected, don't set the variables as `protected`, since the pipeline will not have access to them.

> **Note**: The `$CI_SERVER_FQDN` variable is available starting from GitLab version 16.10. If you're using an earlier version, this variable will not be available. However, you can combine `$CI_SERVER_HOST` and `$CI_SERVER_PORT` to achieve the same result. Please ensure you're using a compatible version or adjust your configuration.


## Run a GitLab webhook server

@@ -245,6 +245,32 @@ enable_global_best_practices = true

Then, create a `best_practices.md` wiki file in the root of [global](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/#global-configuration-file) configuration repository, `pr-agent-settings`.

##### Best practices for multiple languages
For a git organization working with multiple programming languages, you can maintain a centralized global `best_practices.md` file containing language-specific guidelines.
When reviewing pull requests, Qodo Merge automatically identifies the programming language and applies the relevant best practices from this file.
Structure your `best_practices.md` file using the following format:

```
# [Python]
...
# [Java]
...
# [JavaScript]
...
```
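One way to read the `# [Language]` layout above is to split the file on those header lines and pick the section that matches the PR's language. The sketch below is hypothetical because PR-Agent's own parsing is not shown in this diff. The regex and the function names are assumptions.

```python
import re
from typing import Dict

# Hypothetical pattern for section headers such as "# [Python]".
SECTION_HEADER = re.compile(r"^#[ \t]*\[(?P<lang>[^\]\n]+)\][ \t]*$", re.MULTILINE)


def split_best_practices(text: str) -> Dict[str, str]:
    """Map each language name to the body of its section."""
    sections: Dict[str, str] = {}
    headers = list(SECTION_HEADER.finditer(text))
    for i, match in enumerate(headers):
        # A section runs until the next header, or to the end of the file.
        end = headers[i + 1].start() if i + 1 < len(headers) else len(text)
        sections[match.group("lang").strip()] = text[match.end():end].strip()
    return sections


def best_practices_for(text: str, language: str) -> str:
    """Return the section for `language` (case-insensitive), or "" if there is none."""
    for lang, body in split_best_practices(text).items():
        if lang.lower() == language.lower():
            return body
    return ""
```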

##### Dedicated label for best practices suggestions
Best practice suggestions are labeled as `Organization best practice` by default.
To customize this label, modify it in your configuration file:

```toml
[best_practices]
organization_name = ""
```

And the label will be: `{organization_name} best practice`.


##### Example results

{width=512}

@@ -276,12 +302,12 @@ Using a combination of both can help the AI model to provide relevant and tailor
<td>Minimum score threshold for suggestions to be presented as commitable PR comments in addition to the table. Default is -1 (disabled).</td>
</tr>
<tr>
<td><b>persistent_comment</b></td>
<td>If set to true, the improve comment will be persistent, meaning that every new improve request will edit the previous one. Default is false.</td>
<td><b>focus_only_on_problems</b></td>
<td>If set to true, suggestions will focus primarily on identifying and fixing code problems, and less on style considerations like best practices, maintainability, or readability. Default is true.</td>
</tr>
<tr>
<td><b>self_reflect_on_suggestions</b></td>
<td>If set to true, the improve tool will calculate an importance score for each suggestion [1-10], and sort the suggestion labels group based on this score. Default is true.</td>
<td><b>persistent_comment</b></td>
<td>If set to true, the improve comment will be persistent, meaning that every new improve request will edit the previous one. Default is false.</td>
</tr>
<tr>
<td><b>suggestions_score_threshold</b></td>

@@ -140,7 +140,7 @@ num_code_suggestions = ...
</tr>
<tr>
<td><b>require_ticket_analysis_review</b></td>
<td>If set to true, and the PR contains a GitHub ticket number, the tool will add a section that checks if the PR in fact fulfilled the ticket requirements. Default is true.</td>
<td>If set to true, and the PR contains a GitHub or Jira ticket link, the tool will add a section that checks if the PR in fact fulfilled the ticket requirements. Default is true.</td>
</tr>
</table>

@@ -258,4 +258,3 @@ If enabled, the `review` tool can approve a PR when a specific comment, `/review
[//]: # ( Notice If you are interested **only** in the code suggestions, it is recommended to use the [`improve`](./improve.md) feature instead, since it is a dedicated only to code suggestions, and usually gives better results.)

[//]: # ( Use the `review` tool if you want to get more comprehensive feedback, which includes code suggestions as well.)

@@ -160,3 +160,13 @@ ignore_pr_target_branches = ["qa"]

Where the `ignore_pr_source_branches` and `ignore_pr_target_branches` are lists of regex patterns to match the source and target branches you want to ignore.
They are not mutually exclusive, you can use them together or separately.
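As a hedged sketch of the behaviour described above, a PR could be skipped when either of its branches matches one of the configured patterns. The helper name is hypothetical. Using `re.search` (unanchored) rather than `re.match` or `re.fullmatch` is also an assumption, so add `^...$` to a pattern to require a full match.

```python
import re
from typing import Iterable


def should_ignore_pr(source_branch: str, target_branch: str,
                     ignore_source: Iterable[str] = (),
                     ignore_target: Iterable[str] = ()) -> bool:
    """True if the source or target branch matches any configured regex pattern."""
    if any(re.search(pattern, source_branch) for pattern in ignore_source):
        return True
    return any(re.search(pattern, target_branch) for pattern in ignore_target)
```

Because the two checks are independent, either list can be left empty, which matches the note that the options can be used together or separately.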

To allow only specific folders (often needed in large monorepos), set:

```
[config]
allow_only_specific_folders=['folder1','folder2']
```

For the configuration above, automatic feedback will only be triggered when the PR changes include files from 'folder1' or 'folder2'
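The folder check described above can be sketched as follows. The sketch assumes changed files are given as repository-relative paths. The helper name, and treating an empty list as "no restriction", are assumptions rather than documented behaviour.

```python
from typing import Iterable, List


def touches_allowed_folders(changed_files: Iterable[str], allowed_folders: List[str]) -> bool:
    """True if any changed file lives under one of the allowed folders (hypothetical helper)."""
    if not allowed_folders:
        return True  # assumption: an empty list means no folder restriction
    # Appending "/" keeps "folder1" from also matching "folder10/...".
    prefixes = [folder.rstrip("/") + "/" for folder in allowed_folders]
    return any(path.startswith(prefix) for path in changed_files for prefix in prefixes)
```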

@@ -72,13 +72,14 @@ The configuration parameter `pr_commands` defines the list of tools that will be
```
[github_app]
pr_commands = [
"/describe --pr_description.final_update_message=false",
"/review --pr_reviewer.num_code_suggestions=0",
"/improve",
"/describe",
"/review",
"/improve --pr_code_suggestions.suggestions_score_threshold=5",
]
```

This means that when a new PR is opened/reopened or marked as ready for review, Qodo Merge will run the `describe`, `review` and `improve` tools.
For the `review` tool, for example, the `num_code_suggestions` parameter will be set to 0.
For the `improve` tool, for example, the `suggestions_score_threshold` parameter will be set to 5 (suggestions below a score of 5 won't be presented)
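Each `pr_commands` entry above combines a tool name with `--section.key=value` overrides. The hypothetical parser below, which is not PR-Agent's own, shows how such a string breaks down. Values are kept as strings, and converting them to numbers or booleans is left out.

```python
import shlex
from typing import Dict, Tuple


def parse_pr_command(command: str) -> Tuple[str, Dict[str, str]]:
    """Split "/tool --section.key=value ..." into the tool name and its overrides."""
    parts = shlex.split(command)
    if not parts:
        raise ValueError("empty command")
    tool = parts[0].lstrip("/")
    overrides: Dict[str, str] = {}
    for arg in parts[1:]:
        if not arg.startswith("--") or "=" not in arg:
            raise ValueError(f"unsupported argument: {arg!r}")
        key, value = arg[2:].split("=", 1)
        overrides[key] = value
    return tool, overrides
```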

You can override the default tool parameters by using one the three options for a [configuration file](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/): **wiki**, **local**, or **global**.
For example, if your local `.pr_agent.toml` file contains:

@@ -105,7 +106,7 @@ The configuration parameter `push_commands` defines the list of tools that will
handle_push_trigger = true
push_commands = [
"/describe",
"/review --pr_reviewer.num_code_suggestions=0 --pr_reviewer.final_update_message=false",
"/review",
]
```
This means that when new code is pushed to the PR, the Qodo Merge will run the `describe` and `review` tools, with the specified parameters.

@@ -148,7 +149,7 @@ After setting up a GitLab webhook, to control which commands will run automatica
[gitlab]
pr_commands = [
"/describe",
"/review --pr_reviewer.num_code_suggestions=0",
"/review",
"/improve",
]
```

@@ -161,7 +162,7 @@ The configuration parameter `push_commands` defines the list of tools that will
handle_push_trigger = true
push_commands = [
"/describe",
"/review --pr_reviewer.num_code_suggestions=0 --pr_reviewer.final_update_message=false",
"/review",
]
```

@@ -182,7 +183,7 @@ Each time you invoke a `/review` tool, it will use the extra instructions you se


Note that among other limitations, BitBucket provides relatively low rate-limits for applications (up to 1000 requests per hour), and does not provide an API to track the actual rate-limit usage.
If you experience lack of responses from Qodo Merge, you might want to set: `bitbucket_app.avoid_full_files=true` in your configuration file.
If you experience a lack of responses from Qodo Merge, you might want to set: `bitbucket_app.avoid_full_files=true` in your configuration file.
This will prevent Qodo Merge from acquiring the full file content, and will only use the diff content. This will reduce the number of requests made to BitBucket, at the cost of small decrease in accuracy, as dynamic context will not be applicable.

@@ -194,13 +195,23 @@ Specifically, set the following values:
```
[bitbucket_app]
pr_commands = [
"/review --pr_reviewer.num_code_suggestions=0",
"/review",
"/improve --pr_code_suggestions.commitable_code_suggestions=true --pr_code_suggestions.suggestions_score_threshold=7",
]
```
Note that we set specifically for bitbucket, we recommend using: `--pr_code_suggestions.suggestions_score_threshold=7` and that is the default value we set for bitbucket.
Since this platform only supports inline code suggestions, we want to limit the number of suggestions, and only present a limited number.

To enable BitBucket app to respond to each **push** to the PR, set (for example):
```
[bitbucket_app]
handle_push_trigger = true
push_commands = [
"/describe",
"/review",
]
```

## Azure DevOps provider

To use Azure DevOps provider use the following settings in configuration.toml:

@@ -10,4 +10,3 @@ Specifically, CLI commands can be issued by invoking a pre-built [docker image](

For online usage, you will need to setup either a [GitHub App](https://qodo-merge-docs.qodo.ai/installation/github/#run-as-a-github-app) or a [GitHub Action](https://qodo-merge-docs.qodo.ai/installation/github/#run-as-a-github-action) (GitHub), a [GitLab webhook](https://qodo-merge-docs.qodo.ai/installation/gitlab/#run-a-gitlab-webhook-server) (GitLab), or a [BitBucket App](https://qodo-merge-docs.qodo.ai/installation/bitbucket/#run-using-codiumai-hosted-bitbucket-app) (BitBucket).
These platforms also enable to run Qodo Merge specific tools automatically when a new PR is opened, or on each push to a branch.

@@ -43,6 +43,7 @@ nav:
- 💎 Similar Code: 'tools/similar_code.md'
- Core Abilities:
- 'core-abilities/index.md'
- Fetching ticket context: 'core-abilities/fetching_ticket_context.md'
- Local and global metadata: 'core-abilities/metadata.md'
- Dynamic context: 'core-abilities/dynamic_context.md'
- Self-reflection: 'core-abilities/self_reflection.md'

@@ -3,5 +3,5 @@
new Date().getTime(),event:'gtm.js'});var f=d.getElementsByTagName(s)[0],
j=d.createElement(s),dl=l!='dataLayer'?'&l='+l:'';j.async=true;j.src=
'https://www.googletagmanager.com/gtm.js?id='+i+dl;f.parentNode.insertBefore(j,f);
})(window,document,'script','dataLayer','GTM-5C9KZBM3');</script>
})(window,document,'script','dataLayer','GTM-M6PJSFV');</script>
<!-- End Google Tag Manager -->

@@ -1 +0,0 @@

@@ -3,7 +3,6 @@ from functools import partial

from pr_agent.algo.ai_handlers.base_ai_handler import BaseAiHandler
from pr_agent.algo.ai_handlers.litellm_ai_handler import LiteLLMAIHandler

from pr_agent.algo.utils import update_settings_from_args
from pr_agent.config_loader import get_settings
from pr_agent.git_providers.utils import apply_repo_settings

@@ -19,6 +19,7 @@ MAX_TOKENS = {
'gpt-4o-mini': 128000, # 128K, but may be limited by config.max_model_tokens
'gpt-4o-mini-2024-07-18': 128000, # 128K, but may be limited by config.max_model_tokens
'gpt-4o-2024-08-06': 128000, # 128K, but may be limited by config.max_model_tokens
'gpt-4o-2024-11-20': 128000, # 128K, but may be limited by config.max_model_tokens
'o1-mini': 128000, # 128K, but may be limited by config.max_model_tokens
'o1-mini-2024-09-12': 128000, # 128K, but may be limited by config.max_model_tokens
'o1-preview': 128000, # 128K, but may be limited by config.max_model_tokens

@@ -31,6 +32,7 @@ MAX_TOKENS = {
'vertex_ai/codechat-bison': 6144,
'vertex_ai/codechat-bison-32k': 32000,
'vertex_ai/claude-3-haiku@20240307': 100000,
'vertex_ai/claude-3-5-haiku@20241022': 100000,
'vertex_ai/claude-3-sonnet@20240229': 100000,
'vertex_ai/claude-3-opus@20240229': 100000,
'vertex_ai/claude-3-5-sonnet@20240620': 100000,

@@ -48,11 +50,13 @@ MAX_TOKENS = {
'anthropic/claude-3-opus-20240229': 100000,
'anthropic/claude-3-5-sonnet-20240620': 100000,
'anthropic/claude-3-5-sonnet-20241022': 100000,
'anthropic/claude-3-5-haiku-20241022': 100000,
'bedrock/anthropic.claude-instant-v1': 100000,
'bedrock/anthropic.claude-v2': 100000,
'bedrock/anthropic.claude-v2:1': 100000,
'bedrock/anthropic.claude-3-sonnet-20240229-v1:0': 100000,
'bedrock/anthropic.claude-3-haiku-20240307-v1:0': 100000,
'bedrock/anthropic.claude-3-5-haiku-20241022-v1:0': 100000,
'bedrock/anthropic.claude-3-5-sonnet-20240620-v1:0': 100000,
'bedrock/anthropic.claude-3-5-sonnet-20241022-v2:0': 100000,
'claude-3-5-sonnet': 100000,
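The repeated comment `128K, but may be limited by config.max_model_tokens` implies that the effective context size is the smaller of two values: the table entry and the configured cap. Below is a minimal sketch of that rule using a small excerpt of the table. The function name and signature are hypothetical, not PR-Agent's own helper.

```python
from typing import Dict, Optional

# Small excerpt of the MAX_TOKENS table above, for illustration only.
MAX_TOKENS: Dict[str, int] = {
    "gpt-4o-mini": 128000,
    "vertex_ai/codechat-bison": 6144,
    "anthropic/claude-3-5-sonnet-20241022": 100000,
}


def effective_max_tokens(model: str, max_model_tokens: Optional[int] = None) -> int:
    """Model context size, capped by a configured max_model_tokens when it is positive."""
    if model not in MAX_TOKENS:
        raise KeyError(f"no context size known for model {model!r}")
    limit = MAX_TOKENS[model]
    if max_model_tokens and max_model_tokens > 0:
        limit = min(limit, max_model_tokens)
    return limit
```

A cap larger than the model's own size has no effect, which is why `vertex_ai/codechat-bison` stays at 6144 even with a 32000-token cap.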

|

||||

|

||||

@@ -1,17 +1,18 @@
try:
from langchain_openai import ChatOpenAI, AzureChatOpenAI
from langchain_core.messages import SystemMessage, HumanMessage
from langchain_core.messages import HumanMessage, SystemMessage
from langchain_openai import AzureChatOpenAI, ChatOpenAI
except: # we don't enforce langchain as a dependency, so if it's not installed, just move on
pass

import functools

from openai import APIError, RateLimitError, Timeout
from retry import retry

from pr_agent.algo.ai_handlers.base_ai_handler import BaseAiHandler
from pr_agent.config_loader import get_settings
from pr_agent.log import get_logger

from openai import APIError, RateLimitError, Timeout
from retry import retry
import functools

OPENAI_RETRIES = 5

@@ -73,4 +74,3 @@ class LangChainOpenAIHandler(BaseAiHandler):
raise ValueError(f"OpenAI {e.name} is required") from e
else:
raise e

@@ -1,7 +1,8 @@
import os
import requests

import litellm
import openai
import requests
from litellm import acompletion
from tenacity import retry, retry_if_exception_type, stop_after_attempt

@@ -4,6 +4,7 @@ import openai
from openai import APIError, AsyncOpenAI, RateLimitError, Timeout
from retry import retry

from pr_agent.algo.ai_handlers.base_ai_handler import BaseAiHandler
from pr_agent.config_loader import get_settings
from pr_agent.log import get_logger

@@ -41,7 +42,6 @@ class OpenAIHandler(BaseAiHandler):
tries=OPENAI_RETRIES, delay=2, backoff=2, jitter=(1, 3))
async def chat_completion(self, model: str, system: str, user: str, temperature: float = 0.2):
try:
deployment_id = self.deployment_id
get_logger().info("System: ", system)
get_logger().info("User: ", user)
messages = [{"role": "system", "content": system}, {"role": "user", "content": user}]

@@ -3,8 +3,8 @@ from __future__ import annotations
import re
import traceback

from pr_agent.config_loader import get_settings
from pr_agent.algo.types import EDIT_TYPE, FilePatchInfo
from pr_agent.config_loader import get_settings
from pr_agent.log import get_logger

@@ -31,7 +31,7 @@ def extend_patch(original_file_str, patch_str, patch_extra_lines_before=0,


def decode_if_bytes(original_file_str):
if isinstance(original_file_str, bytes):
if isinstance(original_file_str, (bytes, bytearray)):
try:
return original_file_str.decode('utf-8')
except UnicodeDecodeError:

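The `decode_if_bytes` change widens the type check from `bytes` to `(bytes, bytearray)`. That matters because `bytearray` is not a subclass of `bytes`, so the old check let `bytearray` content through undecoded. A minimal sketch of the widened check; the body of the original `except UnicodeDecodeError:` branch is not shown, so the `errors='replace'` fallback here is an assumption, and the function name is hypothetical:

```python
from typing import Union


def decode_if_bytes_sketch(content: Union[str, bytes, bytearray]) -> str:
    """Return `content` as text; both bytes and bytearray are decoded as UTF-8."""
    if isinstance(content, (bytes, bytearray)):
        try:
            return content.decode('utf-8')
        except UnicodeDecodeError:
            # Assumption: substitute U+FFFD for undecodable bytes instead of failing.
            return content.decode('utf-8', errors='replace')
    # Already text: pass it through unchanged.
    return content
```
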
@@ -61,23 +61,26 @@ def process_patch_lines(patch_str, original_file_str, patch_extra_lines_before,
patch_lines = patch_str.splitlines()
extended_patch_lines = []

is_valid_hunk = True
start1, size1, start2, size2 = -1, -1, -1, -1
RE_HUNK_HEADER = re.compile(
r"^@@ -(\d+)(?:,(\d+))? \+(\d+)(?:,(\d+))? @@[ ]?(.*)")
try:
for line in patch_lines:
for i,line in enumerate(patch_lines):
if line.startswith('@@'):
match = RE_HUNK_HEADER.match(line)
# identify hunk header
if match:
# finish processing previous hunk
if start1 != -1 and patch_extra_lines_after > 0:
if is_valid_hunk and (start1 != -1 and patch_extra_lines_after > 0):
delta_lines = [f' {line}' for line in original_lines[start1 + size1 - 1:start1 + size1 - 1 + patch_extra_lines_after]]
extended_patch_lines.extend(delta_lines)

section_header, size1, size2, start1, start2 = extract_hunk_headers(match)

if patch_extra_lines_before > 0 or patch_extra_lines_after > 0:
is_valid_hunk = check_if_hunk_lines_matches_to_file(i, original_lines, patch_lines, start1)

if is_valid_hunk and (patch_extra_lines_before > 0 or patch_extra_lines_after > 0):
def _calc_context_limits(patch_lines_before):
extended_start1 = max(1, start1 - patch_lines_before)
extended_size1 = size1 + (start1 - extended_start1) + patch_extra_lines_after

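`_calc_context_limits` widens a hunk's range in the original file: the start moves back by up to `patch_lines_before` lines but never below line 1, and the size grows by the lines actually prepended plus `patch_extra_lines_after`. A standalone sketch of the two formulas shown above, with the enclosing function's variables turned into parameters (the function name is hypothetical):

```python
from typing import Tuple


def calc_context_limits(start1: int, size1: int,
                        lines_before: int, lines_after: int) -> Tuple[int, int]:
    """Widen a hunk's 1-based range in the original file by extra context lines."""
    # Never start before line 1 of the file.
    extended_start1 = max(1, start1 - lines_before)
    # Grow by the lines actually added in front, plus the requested trailing lines.
    extended_size1 = size1 + (start1 - extended_start1) + lines_after
    return extended_start1, extended_size1
```

Near the top of a file the start is clamped, so `calc_context_limits(2, 4, 3, 1)` yields `(1, 6)`: only one extra line fits before the hunk.
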
@@ -138,7 +141,7 @@ def process_patch_lines(patch_str, original_file_str, patch_extra_lines_before,
return patch_str

# finish processing last hunk
if start1 != -1 and patch_extra_lines_after > 0:
if start1 != -1 and patch_extra_lines_after > 0 and is_valid_hunk:
delta_lines = original_lines[start1 + size1 - 1:start1 + size1 - 1 + patch_extra_lines_after]
# add space at the beginning of each extra line
delta_lines = [f' {line}' for line in delta_lines]

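The trailing-context slice `original_lines[start1 + size1 - 1:start1 + size1 - 1 + patch_extra_lines_after]` relies on `start1` being 1-based: the hunk covers 0-based indices `start1 - 1` through `start1 + size1 - 2`, so index `start1 + size1 - 1` is the first line after it. A small sketch of that slice with the leading-space context prefix (the helper name is hypothetical):

```python
from typing import List


def trailing_context(original_lines: List[str], start1: int, size1: int,
                     lines_after: int) -> List[str]:
    """Return up to `lines_after` original-file lines after a hunk, as diff context."""
    first = start1 + size1 - 1  # 0-based index of the first line after the hunk
    # Prefix each line with a space, the unified-diff marker for unchanged context.
    return [f' {line}' for line in original_lines[first:first + lines_after]]
```

Because Python slices clamp at the end of the list, a hunk that already reaches the last line of the file simply gets no extra context.
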
@@ -148,6 +151,23 @@ def process_patch_lines(patch_str, original_file_str, patch_extra_lines_before,
return extended_patch_str


def check_if_hunk_lines_matches_to_file(i, original_lines, patch_lines, start1):
"""
Check if the hunk lines match the original file content. We saw cases where the hunk header line doesn't match the original file content, and then
extending the hunk with extra lines before the hunk header can cause the hunk to be invalid.
"""
is_valid_hunk = True
try:
if i + 1 < len(patch_lines) and patch_lines[i + 1][0] == ' ': # an existing line in the file
if patch_lines[i + 1].strip() != original_lines[start1 - 1].strip():
is_valid_hunk = False
get_logger().error(
f"Invalid hunk in PR, line {start1} in hunk header doesn't match the original file content")
except:
pass
return is_valid_hunk


def extract_hunk_headers(match):
res = list(match.groups())
for i in range(len(res)):

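`RE_HUNK_HEADER` captures the old and new start lines, the optional line counts, and the trailing section header. In the unified diff format a count is omitted when the range is a single line, so a parser has to default a missing count to 1. The body of `extract_hunk_headers` is cut off above, so the following is a standalone sketch under that assumption, not its actual implementation (`parse_hunk_header` is a hypothetical name):

```python
import re
from typing import Tuple

# Same pattern as RE_HUNK_HEADER in process_patch_lines.
RE_HUNK_HEADER = re.compile(
    r"^@@ -(\d+)(?:,(\d+))? \+(\d+)(?:,(\d+))? @@[ ]?(.*)")


def parse_hunk_header(line: str) -> Tuple[int, int, int, int, str]:
    """Return (start1, size1, start2, size2, section_header) for a hunk header line."""
    match = RE_HUNK_HEADER.match(line)
    if match is None:
        raise ValueError(f"Not a hunk header: {line!r}")
    start1, size1, start2, size2, section_header = match.groups()
    # An omitted count (group is None) means a one-line range.
    return (int(start1), int(size1 or 1), int(start2), int(size2 or 1),
            section_header)
```

An explicit zero, as in `@@ -0,0 +1,3 @@` for a newly added file, is kept as 0 because only a missing group is replaced.
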
@@ -4,8 +4,6 @@ from typing import Dict
from pr_agent.config_loader import get_settings




def filter_bad_extensions(files):
# Bad Extensions, source: https://github.com/EleutherAI/github-downloader/blob/345e7c4cbb9e0dc8a0615fd995a08bf9d73b3fe6/download_repo_text.py # noqa: E501
bad_extensions = get_settings().bad_extensions.default

@@ -5,14 +5,15 @@ from typing import Callable, List, Tuple

from github import RateLimitExceededException

from pr_agent.algo.git_patch_processing import convert_to_hunks_with_lines_numbers, extend_patch, handle_patch_deletions
from pr_agent.algo.language_handler import sort_files_by_main_languages
from pr_agent.algo.file_filter import filter_ignored
from pr_agent.algo.git_patch_processing import (
convert_to_hunks_with_lines_numbers, extend_patch, handle_patch_deletions)
from pr_agent.algo.language_handler import sort_files_by_main_languages
from pr_agent.algo.token_handler import TokenHandler
from pr_agent.algo.utils import get_max_tokens, clip_tokens, ModelType
from pr_agent.algo.types import EDIT_TYPE, FilePatchInfo
from pr_agent.algo.utils import ModelType, clip_tokens, get_max_tokens
from pr_agent.config_loader import get_settings
from pr_agent.git_providers.git_provider import GitProvider
from pr_agent.algo.types import EDIT_TYPE, FilePatchInfo
from pr_agent.log import get_logger

DELETED_FILES_ = "Deleted files:\n"

@@ -1,8 +1,9 @@
from jinja2 import Environment, StrictUndefined
from tiktoken import encoding_for_model, get_encoding
from pr_agent.config_loader import get_settings
from threading import Lock

from jinja2 import Environment, StrictUndefined
from tiktoken import encoding_for_model, get_encoding

from pr_agent.config_loader import get_settings
from pr_agent.log import get_logger

@@ -14,7 +14,6 @@ from datetime import datetime
from enum import Enum
from typing import Any, List, Tuple


import html2text
import requests
import yaml

@@ -23,10 +22,11 @@ from starlette_context import context

from pr_agent.algo import MAX_TOKENS
from pr_agent.algo.token_handler import TokenEncoder
from pr_agent.config_loader import get_settings, global_settings
from pr_agent.algo.types import FilePatchInfo
from pr_agent.config_loader import get_settings, global_settings
from pr_agent.log import get_logger


class Range(BaseModel):
line_start: int # should be 0-indexed
line_end: int

@@ -173,7 +173,7 @@ def convert_to_markdown_v2(output_data: dict,
if is_value_no(value):
markdown_text += f'### {emoji} No relevant tests\n\n'
else:
markdown_text += f"### PR contains tests\n\n"
markdown_text += f"### {emoji} PR contains tests\n\n"
elif 'ticket compliance check' in key_nice.lower():
markdown_text = ticket_markdown_logic(emoji, markdown_text, value, gfm_supported)
elif 'security concerns' in key_nice.lower():

@@ -224,12 +224,21 @@ def convert_to_markdown_v2(output_data: dict,
issue_content = issue.get('issue_content', '').strip()
start_line = int(str(issue.get('start_line', 0)).strip())
end_line = int(str(issue.get('end_line', 0)).strip())
reference_link = git_provider.get_line_link(relevant_file, start_line, end_line)
if git_provider:
reference_link = git_provider.get_line_link(relevant_file, start_line, end_line)
else:
reference_link = None

if gfm_supported:
issue_str = f"<a href='{reference_link}'><strong>{issue_header}</strong></a><br>{issue_content}"
if reference_link is not None and len(reference_link) > 0:
issue_str = f"<a href='{reference_link}'><strong>{issue_header}</strong></a><br>{issue_content}"
else:
issue_str = f"<strong>{issue_header}</strong><br>{issue_content}"
else:
issue_str = f"[**{issue_header}**]({reference_link})\n\n{issue_content}\n\n"
if reference_link is not None and len(reference_link) > 0:
issue_str = f"[**{issue_header}**]({reference_link})\n\n{issue_content}\n\n"
else:
issue_str = f"**{issue_header}**\n\n{issue_content}\n\n"
markdown_text += f"{issue_str}\n\n"
except Exception as e:
get_logger().exception(f"Failed to process 'Recommended focus areas for review': {e}")

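The new branches in `convert_to_markdown_v2` stop emitting `href='None'` or `](None)` when no `git_provider` is available: the issue header is linked only when `reference_link` is a non-empty string. A minimal standalone sketch of that rendering rule (the function name is hypothetical; the output strings follow the f-strings above):

```python
from typing import Optional


def format_issue(issue_header: str, issue_content: str,
                 reference_link: Optional[str], gfm_supported: bool) -> str:
    """Render a review issue, linking the header only when a link exists."""
    has_link = reference_link is not None and len(reference_link) > 0
    if gfm_supported:
        # HTML output for renderers that accept GitHub-flavored Markdown with inline HTML.
        header = f"<strong>{issue_header}</strong>"
        if has_link:
            header = f"<a href='{reference_link}'>{header}</a>"
        return f"{header}<br>{issue_content}"
    # Plain Markdown output.
    header = f"**{issue_header}**"
    if has_link:
        header = f"[{header}]({reference_link})"
    return f"{header}\n\n{issue_content}\n\n"
```
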
@@ -4,7 +4,7 @@ import os

from pr_agent.agent.pr_agent import PRAgent, commands
from pr_agent.config_loader import get_settings
from pr_agent.log import setup_logger, get_logger
from pr_agent.log import get_logger, setup_logger

log_level = os.environ.get("LOG_LEVEL", "INFO")
setup_logger(log_level)

@@ -1,14 +1,16 @@
from starlette_context import context

from pr_agent.config_loader import get_settings
from pr_agent.git_providers.azuredevops_provider import AzureDevopsProvider
from pr_agent.git_providers.bitbucket_provider import BitbucketProvider
from pr_agent.git_providers.bitbucket_server_provider import BitbucketServerProvider
from pr_agent.git_providers.bitbucket_server_provider import \
BitbucketServerProvider
from pr_agent.git_providers.codecommit_provider import CodeCommitProvider
from pr_agent.git_providers.gerrit_provider import GerritProvider
from pr_agent.git_providers.git_provider import GitProvider
from pr_agent.git_providers.github_provider import GithubProvider
from pr_agent.git_providers.gitlab_provider import GitLabProvider
from pr_agent.git_providers.local_git_provider import LocalGitProvider
from pr_agent.git_providers.azuredevops_provider import AzureDevopsProvider
from pr_agent.git_providers.gerrit_provider import GerritProvider
from starlette_context import context

_GIT_PROVIDERS = {
'github': GithubProvider,

@@ -2,33 +2,33 @@ import os
from typing import Optional, Tuple
from urllib.parse import urlparse

from ..algo.file_filter import filter_ignored
from ..log import get_logger
from ..algo.language_handler import is_valid_file
from ..algo.utils import clip_tokens, find_line_number_of_relevant_line_in_file, load_large_diff, PRDescriptionHeader
from ..config_loader import get_settings
from .git_provider import GitProvider
from pr_agent.algo.types import EDIT_TYPE, FilePatchInfo

from ..algo.file_filter import filter_ignored
from ..algo.language_handler import is_valid_file
from ..algo.utils import (PRDescriptionHeader, clip_tokens,
find_line_number_of_relevant_line_in_file,
load_large_diff)
from ..config_loader import get_settings
from ..log import get_logger
from .git_provider import GitProvider

AZURE_DEVOPS_AVAILABLE = True
ADO_APP_CLIENT_DEFAULT_ID = "499b84ac-1321-427f-aa17-267ca6975798/.default"
MAX_PR_DESCRIPTION_AZURE_LENGTH = 4000-1

try:
# noinspection PyUnresolvedReferences
from msrest.authentication import BasicAuthentication
# noinspection PyUnresolvedReferences
from azure.devops.connection import Connection
# noinspection PyUnresolvedReferences
from azure.identity import DefaultAzureCredential
from azure.devops.v7_1.git.models import (Comment, CommentThread,
GitPullRequest,
GitPullRequestIterationChanges,
GitVersionDescriptor)
# noinspection PyUnresolvedReferences
from azure.devops.v7_1.git.models import (
Comment,
CommentThread,
GitVersionDescriptor,
GitPullRequest,
GitPullRequestIterationChanges,
)
from azure.identity import DefaultAzureCredential
from msrest.authentication import BasicAuthentication
except ImportError:
AZURE_DEVOPS_AVAILABLE = False

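The Azure DevOps imports are wrapped in `try`/`except ImportError` and record the outcome in `AZURE_DEVOPS_AVAILABLE`, so the module still imports when the optional SDK is missing. A minimal sketch of that optional-dependency pattern; the module name `_example_optional_sdk` and the `ExampleProvider` class are hypothetical placeholders, and raising `ImportError` at construction time is an assumed design, not necessarily what the Azure provider does:

```python
try:
    # Hypothetical optional SDK; this module name is a placeholder assumed not to exist.
    import _example_optional_sdk as optional_sdk
    OPTIONAL_SDK_AVAILABLE = True
except ImportError:
    optional_sdk = None
    OPTIONAL_SDK_AVAILABLE = False


class ExampleProvider:
    """Hypothetical provider that needs the optional SDK only when it is created."""

    def __init__(self) -> None:
        if not OPTIONAL_SDK_AVAILABLE:
            raise ImportError("Optional SDK is not installed; ExampleProvider is unavailable")
        self.sdk = optional_sdk
```

Importing this module never fails; only constructing `ExampleProvider` does. Catching `ImportError` specifically, as the Azure block does, is narrower than the bare `except:` around the langchain imports earlier in this diff, which would also swallow unrelated errors raised while importing.
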
@@ -67,16 +67,14 @@ class AzureDevopsProvider(GitProvider):
relevant_lines_end = suggestion['relevant_lines_end']

if not relevant_lines_start or relevant_lines_start == -1:
if get_settings().config.verbosity_level >= 2:
get_logger().exception(
f"Failed to publish code suggestion, relevant_lines_start is {relevant_lines_start}")
get_logger().warning(
f"Failed to publish code suggestion, relevant_lines_start is {relevant_lines_start}")
continue

if relevant_lines_end < relevant_lines_start:
if get_settings().config.verbosity_level >= 2:
get_logger().exception(f"Failed to publish code suggestion, "
f"relevant_lines_end is {relevant_lines_end} and "
f"relevant_lines_start is {relevant_lines_start}")
get_logger().warning(f"Failed to publish code suggestion, "
f"relevant_lines_end is {relevant_lines_end} and "
f"relevant_lines_start is {relevant_lines_start}")
continue

if relevant_lines_end > relevant_lines_start:

@@ -95,9 +93,11 @@ class AzureDevopsProvider(GitProvider):
"side": "RIGHT",
}
post_parameters_list.append(post_parameters)
if not post_parameters_list:
return False

try:
for post_parameters in post_parameters_list:
for post_parameters in post_parameters_list:
try:
comment = Comment(content=post_parameters["body"], comment_type=1)
thread = CommentThread(comments=[comment],
thread_context={

@@ -117,15 +117,11 @@ class AzureDevopsProvider(GitProvider):
repository_id=self.repo_slug,
pull_request_id=self.pr_num
)
if get_settings().config.verbosity_level >= 2:
get_logger().info(
f"Published code suggestion on {self.pr_num} at {post_parameters['path']}"
)
return True
except Exception as e:
if get_settings().config.verbosity_level >= 2:
get_logger().error(f"Failed to publish code suggestion, error: {e}")
return False
except Exception as e:
get_logger().warning(f"Azure failed to publish code suggestion, error: {e}")
return True



def get_pr_description_full(self) -> str:
return self.pr.description

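The Azure hunks above move a `try` inside the loop over `post_parameters_list`, so each comment thread is created in its own error-handling scope. A common reason for that structure is to keep one failed API call from aborting the remaining suggestions. A minimal sketch of per-item isolation, with a hypothetical `publish_one` callback standing in for the provider call; it does not reproduce the provider's exact return values, which the truncated hunks do not fully show:

```python
from typing import Callable, Dict, List, Tuple


def publish_each(items: List[Dict],
                 publish_one: Callable[[Dict], None]) -> Tuple[int, List[str]]:
    """Publish items one at a time so a single failure does not stop the rest.

    Returns the number of successful publishes and the collected error messages.
    """
    published = 0
    errors: List[str] = []
    for item in items:
        try:
            publish_one(item)
            published += 1
        except Exception as e:
            # Record the failure and continue with the next item.
            errors.append(f"{item.get('path', '?')}: {e}")
    return published, errors
```
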
@@ -382,6 +378,9 @@ class AzureDevopsProvider(GitProvider):
return []

def publish_comment(self, pr_comment: str, is_temporary: bool = False, thread_context=None):
if is_temporary and not get_settings().config.publish_output_progress:
get_logger().debug(f"Skipping publish_comment for temporary comment: {pr_comment}")
return None
comment = Comment(content=pr_comment)
thread = CommentThread(comments=[comment], thread_context=thread_context, status=5)
thread_response = self.azure_devops_client.create_thread(

@@ -620,4 +619,3 @@ class AzureDevopsProvider(GitProvider):

def publish_file_comments(self, file_comments: list) -> bool:
pass

@@ -1,4 +1,6 @@
import difflib
import json
import re
from typing import Optional, Tuple
from urllib.parse import urlparse

@@ -6,13 +8,14 @@ import requests
from atlassian.bitbucket import Cloud
from starlette_context import context

from pr_agent.algo.types import FilePatchInfo, EDIT_TYPE
from pr_agent.algo.types import EDIT_TYPE, FilePatchInfo

from ..algo.file_filter import filter_ignored
from ..algo.language_handler import is_valid_file
from ..algo.utils import find_line_number_of_relevant_line_in_file
from ..config_loader import get_settings
from ..log import get_logger
from .git_provider import GitProvider, MAX_FILES_ALLOWED_FULL
from .git_provider import MAX_FILES_ALLOWED_FULL, GitProvider


def _gef_filename(diff):

@@ -71,24 +74,38 @@ class BitbucketProvider(GitProvider):
post_parameters_list = []
for suggestion in code_suggestions:
body = suggestion["body"]
original_suggestion = suggestion.get('original_suggestion', None) # needed for diff code
if original_suggestion:
try:
existing_code = original_suggestion['existing_code'].rstrip() + "\n"
improved_code = original_suggestion['improved_code'].rstrip() + "\n"
diff = difflib.unified_diff(existing_code.split('\n'),
improved_code.split('\n'), n=999)
patch_orig = "\n".join(diff)
patch = "\n".join(patch_orig.splitlines()[5:]).strip('\n')
diff_code = f"\n\n```diff\n{patch.rstrip()}\n```"
# replace ```suggestion ... ``` with diff_code, using regex:
body = re.sub(r'```suggestion.*?```', diff_code, body, flags=re.DOTALL)
except Exception as e:
get_logger().exception(f"Bitbucket failed to get diff code for publishing, error: {e}")
continue

relevant_file = suggestion["relevant_file"]
relevant_lines_start = suggestion["relevant_lines_start"]
relevant_lines_end = suggestion["relevant_lines_end"]

if not relevant_lines_start or relevant_lines_start == -1:
if get_settings().config.verbosity_level >= 2:
get_logger().exception(
f"Failed to publish code suggestion, relevant_lines_start is {relevant_lines_start}"
)
get_logger().exception(
f"Failed to publish code suggestion, relevant_lines_start is {relevant_lines_start}"
)
continue

if relevant_lines_end < relevant_lines_start:
if get_settings().config.verbosity_level >= 2:
get_logger().exception(
f"Failed to publish code suggestion, "
f"relevant_lines_end is {relevant_lines_end} and "
f"relevant_lines_start is {relevant_lines_start}"
)
get_logger().exception(
f"Failed to publish code suggestion, "
f"relevant_lines_end is {relevant_lines_end} and "
f"relevant_lines_start is {relevant_lines_start}"
)
continue

if relevant_lines_end > relevant_lines_start:

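The Bitbucket provider converts a `suggestion` block into a fenced `diff` block built with `difflib.unified_diff(..., n=999)`, where the large context size keeps every unchanged line in one hunk. A minimal sketch of the same idea; the helper names are hypothetical, and it passes `lineterm=""` so the three header lines (`---`, `+++`, `@@`) can be dropped by position:

```python
import difflib
import re

FENCE = "`" * 3  # a Markdown code fence, built this way to keep the source readable


def suggestion_to_diff_block(existing_code: str, improved_code: str) -> str:
    """Render a before/after code pair as a fenced diff block."""
    diff_lines = difflib.unified_diff(existing_code.rstrip().splitlines(),
                                      improved_code.rstrip().splitlines(),
                                      lineterm="", n=999)
    # Skip the '---', '+++' and '@@' header lines; keep only the hunk body.
    body = list(diff_lines)[3:]
    return f"{FENCE}diff\n" + "\n".join(body) + f"\n{FENCE}"


def replace_suggestion_block(comment_body: str, diff_block: str) -> str:
    """Swap the first-to-last fenced suggestion block in a comment for the rendered diff."""
    pattern = re.escape(FENCE) + r"suggestion.*?" + re.escape(FENCE)
    # A function replacement makes re.sub insert the text literally (no escape processing).
    return re.sub(pattern, lambda _m: diff_block, comment_body, flags=re.DOTALL)
```

Passing a function as the `re.sub` replacement inserts the diff text literally. With a plain string replacement, as in the code above, backslash sequences in the suggested code (for example `\d` in a regex literal) are interpreted by `re.sub`, which can raise `re.error` or alter the output.
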
@@ -112,8 +129,7 @@ class BitbucketProvider(GitProvider):
self.publish_inline_comments(post_parameters_list)
return True
except Exception as e:
if get_settings().config.verbosity_level >= 2:
get_logger().error(f"Failed to publish code suggestion, error: {e}")
get_logger().error(f"Bitbucket failed to publish code suggestion, error: {e}")
return False

def publish_file_comments(self, file_comments: list) -> bool:

@@ -121,7 +137,7 @@ class BitbucketProvider(GitProvider):

def is_supported(self, capability: str) -> bool:
if capability in ['get_issue_comments', 'publish_inline_comments', 'get_labels', 'gfm_markdown',
'publish_file_comments']:
'publish_file_comments']:
return False
return True

@@ -309,6 +325,9 @@ class BitbucketProvider(GitProvider):
self.publish_comment(pr_comment)

def publish_comment(self, pr_comment: str, is_temporary: bool = False):
if is_temporary and not get_settings().config.publish_output_progress:
get_logger().debug(f"Skipping publish_comment for temporary comment: {pr_comment}")
return None
pr_comment = self.limit_output_characters(pr_comment, self.max_comment_length)
comment = self.pr.comment(pr_comment)
if is_temporary:

@@ -1,16 +1,21 @@
from distutils.version import LooseVersion
from requests.exceptions import HTTPError
import difflib
import re

from packaging.version import parse as parse_version
from typing import Optional, Tuple
from urllib.parse import quote_plus, urlparse

from atlassian.bitbucket import Bitbucket
from requests.exceptions import HTTPError

from .git_provider import GitProvider
from ..algo.types import EDIT_TYPE, FilePatchInfo
from ..algo.git_patch_processing import decode_if_bytes
from ..algo.language_handler import is_valid_file
from ..algo.utils import load_large_diff, find_line_number_of_relevant_line_in_file
from ..algo.types import EDIT_TYPE, FilePatchInfo
from ..algo.utils import (find_line_number_of_relevant_line_in_file,
load_large_diff)
from ..config_loader import get_settings
from ..log import get_logger
from .git_provider import GitProvider


class BitbucketServerProvider(GitProvider):

@@ -35,7 +40,7 @@ class BitbucketServerProvider(GitProvider):
token=get_settings().get("BITBUCKET_SERVER.BEARER_TOKEN",
None))
try:
self.bitbucket_api_version = LooseVersion(self.bitbucket_client.get("rest/api/1.0/application-properties").get('version'))
self.bitbucket_api_version = parse_version(self.bitbucket_client.get("rest/api/1.0/application-properties").get('version'))
except Exception:
self.bitbucket_api_version = None

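The Bitbucket Server provider replaces `distutils.version.LooseVersion` with `packaging.version.parse`; `distutils` was deprecated by PEP 632 and removed in Python 3.12. A minimal sketch of the version gating, assuming the third-party `packaging` library is installed; `choose_diff_strategy` and its return labels are hypothetical names for the three branches selected by the 8.16 and 7.0 thresholds that appear in this file's later hunks:

```python
from typing import Optional

from packaging.version import InvalidVersion, parse as parse_version


def choose_diff_strategy(server_version: Optional[str]) -> str:
    """Hypothetical helper: map a Bitbucket Server version string to a diff strategy."""
    if server_version is None:
        return "first-parent-ancestor"
    try:
        version = parse_version(server_version)
    except InvalidVersion:
        # Not a PEP 440 version string: fall back to the simplest strategy.
        return "first-parent-ancestor"
    if version >= parse_version("8.16"):
        return "merge-base-api"
    if version >= parse_version("7.0"):
        return "two-way-diff"
    return "first-parent-ancestor"
```

Comparing parsed versions avoids the string-ordering trap in which `"8.16" < "8.9"` is true. Recent `packaging` releases raise `InvalidVersion` for strings that are not PEP 440 versions, hence the fallback branch.
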
@@ -65,24 +70,37 @@ class BitbucketServerProvider(GitProvider):
post_parameters_list = []
for suggestion in code_suggestions:
body = suggestion["body"]
original_suggestion = suggestion.get('original_suggestion', None) # needed for diff code
if original_suggestion:
try:
existing_code = original_suggestion['existing_code'].rstrip() + "\n"
improved_code = original_suggestion['improved_code'].rstrip() + "\n"
diff = difflib.unified_diff(existing_code.split('\n'),
improved_code.split('\n'), n=999)
patch_orig = "\n".join(diff)
patch = "\n".join(patch_orig.splitlines()[5:]).strip('\n')
diff_code = f"\n\n```diff\n{patch.rstrip()}\n```"
# replace ```suggestion ... ``` with diff_code, using regex:
body = re.sub(r'```suggestion.*?```', diff_code, body, flags=re.DOTALL)
except Exception as e:
get_logger().exception(f"Bitbucket failed to get diff code for publishing, error: {e}")
continue
relevant_file = suggestion["relevant_file"]
relevant_lines_start = suggestion["relevant_lines_start"]
relevant_lines_end = suggestion["relevant_lines_end"]

if not relevant_lines_start or relevant_lines_start == -1:
if get_settings().config.verbosity_level >= 2:
get_logger().exception(
f"Failed to publish code suggestion, relevant_lines_start is {relevant_lines_start}"
)
get_logger().warning(
f"Failed to publish code suggestion, relevant_lines_start is {relevant_lines_start}"
)
continue

if relevant_lines_end < relevant_lines_start:
if get_settings().config.verbosity_level >= 2:
get_logger().exception(
f"Failed to publish code suggestion, "
f"relevant_lines_end is {relevant_lines_end} and "
f"relevant_lines_start is {relevant_lines_start}"
)
get_logger().warning(
f"Failed to publish code suggestion, "
f"relevant_lines_end is {relevant_lines_end} and "
f"relevant_lines_start is {relevant_lines_start}"
)
continue

if relevant_lines_end > relevant_lines_start:

@@ -159,7 +177,7 @@ class BitbucketServerProvider(GitProvider):
head_sha = self.pr.fromRef['latestCommit']

# if Bitbucket api version is >= 8.16 then use the merge-base api for 2-way diff calculation
if self.bitbucket_api_version is not None and self.bitbucket_api_version >= LooseVersion("8.16"):
if self.bitbucket_api_version is not None and self.bitbucket_api_version >= parse_version("8.16"):
try:
base_sha = self.bitbucket_client.get(self._get_merge_base())['id']
except Exception as e:

@@ -174,7 +192,7 @@ class BitbucketServerProvider(GitProvider):
# if Bitbucket api version is None or < 7.0 then do a simple diff with a guaranteed common ancestor
base_sha = source_commits_list[-1]['parents'][0]['id']
# if Bitbucket api version is 7.0-8.15 then use 2-way diff functionality for the base_sha
if self.bitbucket_api_version is not None and self.bitbucket_api_version >= LooseVersion("7.0"):
if self.bitbucket_api_version is not None and self.bitbucket_api_version >= parse_version("7.0"):
try:
destination_commits = list(
self.bitbucket_client.get_commits(self.workspace_slug, self.repo_slug, base_sha,

@@ -200,25 +218,21 @@ class BitbucketServerProvider(GitProvider):
case 'ADD':
edit_type = EDIT_TYPE.ADDED
new_file_content_str = self.get_file(file_path, head_sha)
if isinstance(new_file_content_str, (bytes, bytearray)):
new_file_content_str = new_file_content_str.decode("utf-8")
new_file_content_str = decode_if_bytes(new_file_content_str)
original_file_content_str = ""
case 'DELETE':
edit_type = EDIT_TYPE.DELETED
new_file_content_str = ""
original_file_content_str = self.get_file(file_path, base_sha)
if isinstance(original_file_content_str, (bytes, bytearray)):
original_file_content_str = original_file_content_str.decode("utf-8")
original_file_content_str = decode_if_bytes(original_file_content_str)
case 'RENAME':
edit_type = EDIT_TYPE.RENAMED
case _:
edit_type = EDIT_TYPE.MODIFIED
original_file_content_str = self.get_file(file_path, base_sha)
if isinstance(original_file_content_str, (bytes, bytearray)):
original_file_content_str = original_file_content_str.decode("utf-8")
original_file_content_str = decode_if_bytes(original_file_content_str)