Mirror of https://github.com/qodo-ai/pr-agent.git (synced 2025-07-21 04:50:39 +08:00)

Compare commits: mrT23-patc ... tr/static_ (3 commits)

| SHA1 |
|---|
| 685f001298 |
| 88c2b90860 |
| c84d84ace2 |

@ -1,3 +1,6 @@
[pr_reviewer]
enable_review_labels_effort = true
enable_auto_approval = true

[config]
model="claude-3-5-sonnet"


@ -1,11 +1,10 @@
FROM python:3.12 as base
FROM python:3.10 as base

WORKDIR /app
ADD pyproject.toml .
ADD requirements.txt .
RUN pip install . && rm pyproject.toml requirements.txt
ENV PYTHONPATH=/app
ADD docs docs
ADD pr_agent pr_agent
ADD github_action/entrypoint.sh /
RUN chmod +x /entrypoint.sh

55

README.md


@ -10,7 +10,7 @@

</picture>
<br/>
Qode Merge PR-Agent aims to help efficiently review and handle pull requests, by providing AI feedback and suggestions
CodiumAI PR-Agent aims to help efficiently review and handle pull requests, by providing AI feedback and suggestions
</div>

[](https://github.com/Codium-ai/pr-agent/blob/main/LICENSE)

@ -25,9 +25,9 @@ Qode Merge PR-Agent aims to help efficiently review and handle pull requests, by
</div>

### [Documentation](https://pr-agent-docs.codium.ai/)
- See the [Installation Guide](https://qodo-merge-docs.qodo.ai/installation/) for instructions on installing Qode Merge PR-Agent on different platforms.
- See the [Installation Guide](https://qodo-merge-docs.qodo.ai/installation/) for instructions on installing PR-Agent on different platforms.

- See the [Usage Guide](https://qodo-merge-docs.qodo.ai/usage-guide/) for instructions on running Qode Merge PR-Agent tools via different interfaces, such as CLI, PR Comments, or by automatically triggering them when a new PR is opened.
- See the [Usage Guide](https://qodo-merge-docs.qodo.ai/usage-guide/) for instructions on running PR-Agent tools via different interfaces, such as CLI, PR Comments, or by automatically triggering them when a new PR is opened.

- See the [Tools Guide](https://qodo-merge-docs.qodo.ai/tools/) for a detailed description of the different tools, and the available configurations for each tool.

@ -43,43 +43,45 @@ Qode Merge PR-Agent aims to help efficiently review and handle pull requests, by

|

||||

|

||||

## News and Updates

|

||||

|

||||

### October 27, 2024

|

||||

### September 21, 2024

|

||||

Need help with PR-Agent? New feature - simply comment `/help "your question"` in a pull request, and PR-Agent will provide you with the [relevant documentation](https://github.com/Codium-ai/pr-agent/pull/1241#issuecomment-2365259334).

|

||||

|

||||

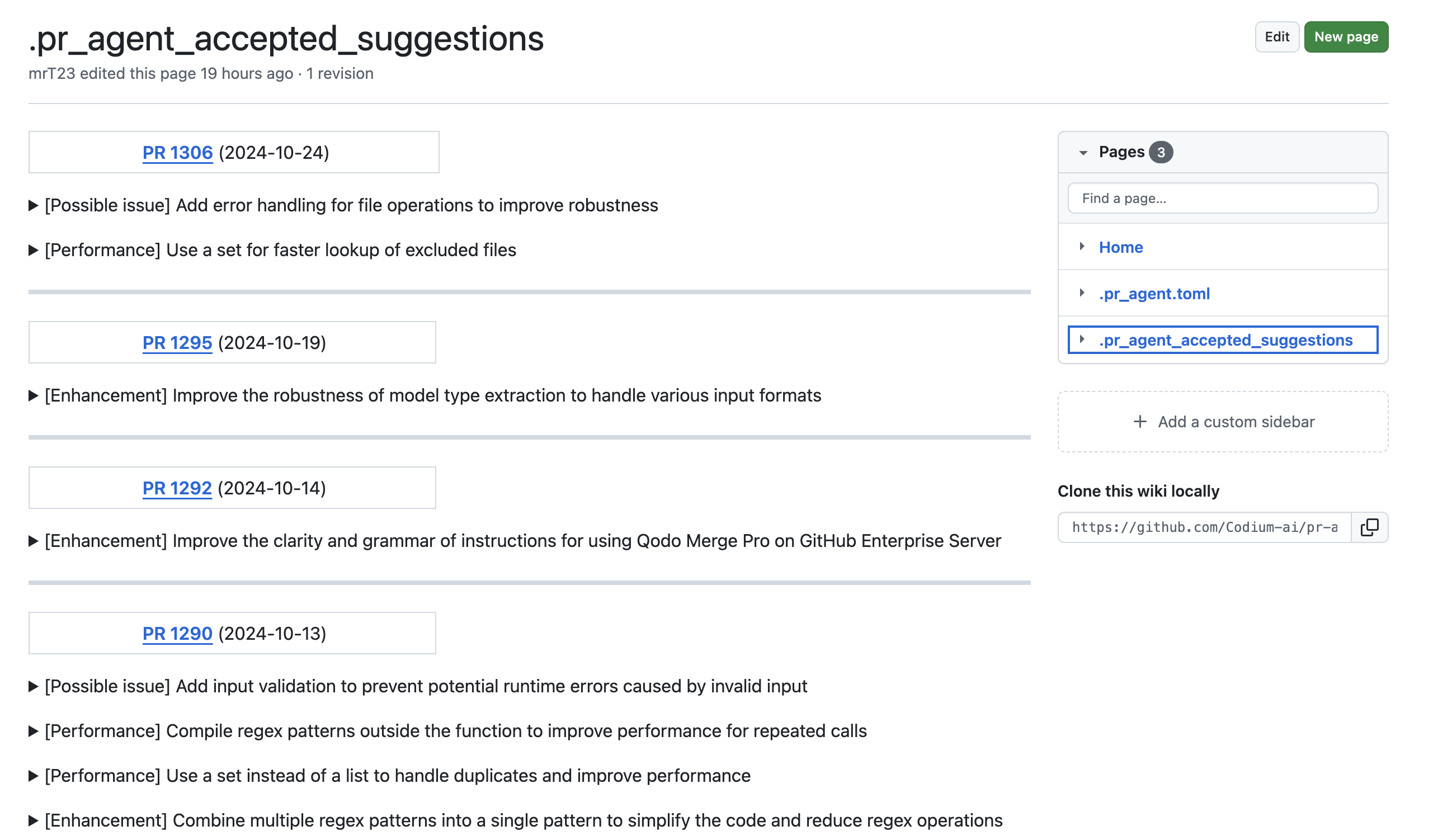

Qodo Merge PR Agent will now automatically document accepted code suggestions in a dedicated wiki page (`.pr_agent_accepted_suggestions`), enabling users to track historical changes, assess the tool's effectiveness, and learn from previously implemented recommendations in the repository.

|

||||

|

||||

This dedicated wiki page will also serve as a foundation for future AI model improvements, allowing it to learn from historically implemented suggestions and generate more targeted, contextually relevant recommendations.

|

||||

Read more about this novel feature [here](https://qodo-merge-docs.qodo.ai/tools/improve/#suggestion-tracking).

|

||||

|

||||

<kbd><img href="https://qodo.ai/images/pr_agent/pr_agent_accepted_suggestions1.png" src="https://qodo.ai/images/pr_agent/pr_agent_accepted_suggestions1.png" width="768"></kbd>

|

||||

<kbd><img src="https://www.codium.ai/images/pr_agent/pr_help_chat.png" width="768"></kbd>

|

||||

|

||||

|

||||

### September 12, 2024

|

||||

[Dynamic context](https://pr-agent-docs.codium.ai/core-abilities/dynamic_context/) is now the default option for context extension.

|

||||

This feature enables PR-Agent to dynamically adjusting the relevant context for each code hunk, while avoiding overflowing the model with too much information.

|

||||

|

||||
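The dynamic-context entry above describes the goal without the mechanics. Below is a minimal sketch of one way to grow a hunk's context, assuming that a Python-style `def` or `class` line marks the enclosing scope; `expand_hunk_context`, its parameters, and its defaults are hypothetical and not PR-Agent's implementation. Allowing more lines before the hunk than after it follows the same idea as the asymmetric context mentioned in the August 11 entry.

```python
import re

# Assumption: a Python-style "def"/"class" line marks the enclosing scope.
# Real tools would need per-language rules; this pattern is illustrative only.
SCOPE_HEADER = re.compile(r"^\s*(?:async\s+def|def|class)\s")


def expand_hunk_context(
    file_lines: list[str],
    hunk_start: int,
    hunk_end: int,
    max_extra_before: int = 8,
    extra_after: int = 2,
) -> tuple[int, int]:
    """Return 0-based inclusive (start, end) bounds of an expanded hunk.

    Walks upward from the hunk to the nearest enclosing scope header, but
    never more than max_extra_before lines, so the prompt does not fill up
    with unrelated code. A smaller fixed number of lines is added after.
    """
    start = hunk_start
    lowest_allowed = max(0, hunk_start - max_extra_before)
    while start > lowest_allowed and not SCOPE_HEADER.match(file_lines[start]):
        start -= 1
    end = min(len(file_lines) - 1, hunk_end + extra_after)
    return start, end


source = [
    "import os",
    "",
    "def load(path):",
    "    if not path:",
    "        return None",
    "    data = read(path)",
    "    return data",
    "",
]
# The hunk touches only line 5; context grows up to the "def" on line 2.
print(expand_hunk_context(source, hunk_start=5, hunk_end=5))  # (2, 7)
```

With `max_extra_before=1`, the same call returns `(4, 7)`: the cap wins over the search for the header, which is how the sketch avoids flooding the model when no enclosing scope is nearby.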

### October 21, 2024
**Disable publishing labels by default:**
### September 3, 2024

The default setting for `pr_description.publish_labels` has been updated to `false`. This means that labels generated by the `/describe` tool will no longer be published, unless this configuration is explicitly set to `true`.
New version of PR-Agent, v0.24 was released. See the [release notes](https://github.com/Codium-ai/pr-agent/releases/tag/v0.24) for more information.

We constantly strive to balance informative AI analysis with reducing unnecessary noise. User feedback indicated that in many cases, the original PR title alone provides sufficient information, making the generated labels (`enhancement`, `documentation`, `bug fix`, ...) redundant.
The [`review_effort`](https://qodo-merge-docs.qodo.ai/tools/review/#configuration-options) label, generated by the `review` tool, will still be published by default, as it provides valuable information enabling reviewers to prioritize small PRs first.
### August 26, 2024

However, every user has different preferences. To still publish the `describe` labels, set `pr_description.publish_labels=true` in the [configuration file](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/).
For more tailored and relevant labeling, we recommend using the [`custom_labels 💎`](https://qodo-merge-docs.qodo.ai/tools/custom_labels/) tool, that allows generating labels specific to your project's needs.

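The override above is written with a dotted `section.key` name; in a TOML configuration file the same setting is `publish_labels = true` under a `[pr_description]` table. A minimal sketch of how a dotted name maps onto nested settings, in plain Python; `set_dotted`, `parse_bool`, and the default values below are illustrative assumptions, not PR-Agent's configuration loader.

```python
from typing import Any


def set_dotted(settings: dict[str, Any], dotted_key: str, value: Any) -> None:
    """Set settings[a][b] = value for dotted_key "a.b", creating tables as needed."""
    *sections, leaf = dotted_key.split(".")
    node = settings
    for section in sections:
        node = node.setdefault(section, {})
    node[leaf] = value


def parse_bool(text: str) -> bool:
    """Accept only 'true' or 'false' (case-insensitive)."""
    lowered = text.strip().lower()
    if lowered not in ("true", "false"):
        raise ValueError(f"not a boolean: {text!r}")
    return lowered == "true"


# Illustrative defaults matching the news entry: describe labels off,
# review-effort label still on. Not PR-Agent's actual defaults file.
settings: dict[str, Any] = {
    "pr_description": {"publish_labels": False},
    "pr_reviewer": {"enable_review_labels_effort": True},
}

set_dotted(settings, "pr_description.publish_labels", parse_bool("true"))
print(settings["pr_description"]["publish_labels"])  # True
```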

New version of [PR Agent Chrome Extension](https://chromewebstore.google.com/detail/pr-agent-chrome-extension/ephlnjeghhogofkifjloamocljapahnl) was released, with full support of context-aware **PR Chat**. This novel feature is free to use for any open-source repository. See more details in [here](https://pr-agent-docs.codium.ai/chrome-extension/#pr-chat).

<kbd><img src="https://www.codium.ai/images/pr_agent/pr_chat_1.png" width="768"></kbd>

→

<kbd><img src="https://www.codium.ai/images/pr_agent/pr_chat_2.png" width="768"></kbd>

### August 11, 2024
Increased PR context size for improved results, and enabled [asymmetric context](https://github.com/Codium-ai/pr-agent/pull/1114/files#diff-9290a3ad9a86690b31f0450b77acd37ef1914b41fabc8a08682d4da433a77f90R69-R70)

### October 14, 2024
Improved support for GitHub enterprise server with [GitHub Actions](https://qodo-merge-docs.qodo.ai/installation/github/#action-for-github-enterprise-server)
### August 10, 2024
Added support for [Azure devops pipeline](https://pr-agent-docs.codium.ai/installation/azure/) - you can now easily run PR-Agent as an Azure devops pipeline, without needing to set up your own server.

### October 10, 2024
New ability for the `review` tool - **ticket compliance feedback**. If the PR contains a ticket number, PR-Agent will check if the PR code actually [complies](https://github.com/Codium-ai/pr-agent/pull/1279#issuecomment-2404042130) with the ticket requirements.

<kbd><img src="https://github.com/user-attachments/assets/4a2a728b-5f47-40fa-80cc-16efd296938c" width="768"></kbd>

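Ticket compliance starts with detecting whether the PR "contains a ticket number". A minimal sketch of that first step, assuming tickets appear as GitHub-style `#123` references or Jira-style `PROJ-123` keys in the PR text; the pattern and `find_ticket_refs` are hypothetical, and the compliance check itself (comparing the code to the ticket requirements) is not shown.

```python
import re

# Assumed ticket formats: same-repository "#123" references and Jira-style
# "PROJ-123" keys. Cross-repository forms like "org/repo#5" are skipped on
# purpose. The Jira-style branch can also match strings such as "UTF-8",
# so real detection would need a list of known project keys.
TICKET_REF = re.compile(r"(?<![\w/])#\d+\b|\b[A-Z][A-Z0-9]+-\d+\b")


def find_ticket_refs(text: str) -> list[str]:
    """Return ticket references in order of first appearance, without duplicates."""
    refs: list[str] = []
    for match in TICKET_REF.finditer(text):
        ref = match.group(0)
        if ref not in refs:
            refs.append(ref)
    return refs


description = "Fixes #42 and PROJ-7; see also #42 for background."
print(find_ticket_refs(description))  # ['#42', 'PROJ-7']
```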

### August 5, 2024
Added support for [GitLab pipeline](https://pr-agent-docs.codium.ai/installation/gitlab/#run-as-a-gitlab-pipeline) - you can now run easily PR-Agent as a GitLab pipeline, without needing to set up your own server.

### July 28, 2024

(1) improved support for bitbucket server - [auto commands](https://github.com/Codium-ai/pr-agent/pull/1059) and [direct links](https://github.com/Codium-ai/pr-agent/pull/1061)

(2) custom models are now [supported](https://pr-agent-docs.codium.ai/usage-guide/changing_a_model/#custom-models)

## Overview

@ -91,6 +93,7 @@ Supported commands per platform:
|-------|---------------------------------------------------------------------------------------------------------|:--------------------:|:--------------------:|:--------------------:|:------------:|
| TOOLS | Review | ✅ | ✅ | ✅ | ✅ |
| | ⮑ Incremental | ✅ | | | |
| | ⮑ [SOC2 Compliance](https://pr-agent-docs.codium.ai/tools/review/#soc2-ticket-compliance) 💎 | ✅ | ✅ | ✅ | |
| | Describe | ✅ | ✅ | ✅ | ✅ |
| | ⮑ [Inline File Summary](https://pr-agent-docs.codium.ai/tools/describe#inline-file-summary) 💎 | ✅ | | | |
| | Improve | ✅ | ✅ | ✅ | ✅ |

@ -1,9 +1,9 @@
FROM python:3.12.3 AS base

WORKDIR /app
ADD docs/chroma_db.zip /app/docs/chroma_db.zip
ADD pyproject.toml .
ADD requirements.txt .
ADD docs docs
RUN pip install . && rm pyproject.toml requirements.txt
ENV PYTHONPATH=/app

BIN
docs/chroma_db.zip
Normal file
Binary file not shown.

Before: 4.2 KiB, after: 15 KiB

@ -1 +1,140 @@
<?xml version="1.0" encoding="UTF-8"?><svg id="Layer_1" xmlns="http://www.w3.org/2000/svg" viewBox="0 0 109.77 81.94"><defs><style>.cls-1{fill:#7968fa;}.cls-1,.cls-2{stroke-width:0px;}.cls-2{fill:#5ae3ae;}</style></defs><path class="cls-2" d="m109.77,40.98c0,22.62-7.11,40.96-15.89,40.96-3.6,0-6.89-3.09-9.58-8.31,6.82-7.46,11.22-19.3,11.22-32.64s-4.4-25.21-11.22-32.67C86.99,3.09,90.29,0,93.89,0c8.78,0,15.89,18.33,15.89,40.97"/><path class="cls-1" d="m95.53,40.99c0,13.35-4.4,25.19-11.23,32.64-3.81-7.46-6.28-19.3-6.28-32.64s2.47-25.21,6.28-32.67c6.83,7.46,11.23,19.32,11.23,32.67"/><path class="cls-2" d="m55.38,78.15c-4.99,2.42-10.52,3.79-16.38,3.79C17.46,81.93,0,63.6,0,40.98S17.46,0,39,0C44.86,0,50.39,1.37,55.38,3.79c-9.69,6.47-16.43,20.69-16.43,37.19s6.73,30.7,16.43,37.17"/><path class="cls-1" d="m78.02,40.99c0,16.48-9.27,30.7-22.65,37.17-9.69-6.47-16.43-20.69-16.43-37.17S45.68,10.28,55.38,3.81c13.37,6.49,22.65,20.69,22.65,37.19"/><path class="cls-2" d="m84.31,73.63c-4.73,5.22-10.64,8.31-17.06,8.31-4.24,0-8.27-1.35-11.87-3.79,13.37-6.48,22.65-20.7,22.65-37.17,0,13.35,2.47,25.19,6.28,32.64"/><path class="cls-2" d="m84.31,8.31c-3.81,7.46-6.28,19.32-6.28,32.67,0-16.5-9.27-30.7-22.65-37.19,3.6-2.45,7.63-3.8,11.87-3.8,6.43,0,12.33,3.09,17.06,8.31"/></svg>
<?xml version="1.0" encoding="utf-8"?>
<!-- Generator: Adobe Illustrator 28.1.0, SVG Export Plug-In . SVG Version: 6.00 Build 0) -->
<svg version="1.1" id="Layer_1" xmlns="http://www.w3.org/2000/svg" xmlns:xlink="http://www.w3.org/1999/xlink" x="0px" y="0px"
width="64px" height="64px" viewBox="0 0 64 64" enable-background="new 0 0 64 64" xml:space="preserve">
<g>
<defs>
<rect id="SVGID_1_" x="0.4" y="0.1" width="63.4" height="63.4"/>
</defs>
<clipPath id="SVGID_00000008836131916906499950000015813697852011234749_">
<use xlink:href="#SVGID_1_" overflow="visible"/>
</clipPath>
<g clip-path="url(#SVGID_00000008836131916906499950000015813697852011234749_)">
<path fill="#05E5AD" d="M21.4,9.8c3,0,5.9,0.7,8.5,1.9c-5.7,3.4-9.8,11.1-9.8,20.1c0,9,4,16.7,9.8,20.1c-2.6,1.2-5.5,1.9-8.5,1.9
c-11.6,0-21-9.8-21-22S9.8,9.8,21.4,9.8z"/>

<radialGradient id="SVGID_00000150822754378345238340000008985053211526864828_" cx="-140.0905" cy="350.1757" r="4.8781" gradientTransform="matrix(-4.7708 -6.961580e-02 -0.1061 7.2704 -601.3099 -2523.8489)" gradientUnits="userSpaceOnUse">
<stop offset="0" style="stop-color:#6447FF"/>
<stop offset="6.666670e-02" style="stop-color:#6348FE"/>
<stop offset="0.1333" style="stop-color:#614DFC"/>
<stop offset="0.2" style="stop-color:#5C54F8"/>
<stop offset="0.2667" style="stop-color:#565EF3"/>
<stop offset="0.3333" style="stop-color:#4E6CEC"/>
<stop offset="0.4" style="stop-color:#447BE4"/>
<stop offset="0.4667" style="stop-color:#3A8DDB"/>
<stop offset="0.5333" style="stop-color:#2F9FD1"/>
<stop offset="0.6" style="stop-color:#25B1C8"/>
<stop offset="0.6667" style="stop-color:#1BC0C0"/>
<stop offset="0.7333" style="stop-color:#13CEB9"/>
<stop offset="0.8" style="stop-color:#0DD8B4"/>
<stop offset="0.8667" style="stop-color:#08DFB0"/>
<stop offset="0.9333" style="stop-color:#06E4AE"/>
<stop offset="1" style="stop-color:#05E5AD"/>
</radialGradient>
<path fill="url(#SVGID_00000150822754378345238340000008985053211526864828_)" d="M21.4,9.8c3,0,5.9,0.7,8.5,1.9
c-5.7,3.4-9.8,11.1-9.8,20.1c0,9,4,16.7,9.8,20.1c-2.6,1.2-5.5,1.9-8.5,1.9c-11.6,0-21-9.8-21-22S9.8,9.8,21.4,9.8z"/>

<radialGradient id="SVGID_00000022560571240417802950000012439139323268113305_" cx="-191.7649" cy="385.7387" r="4.8781" gradientTransform="matrix(-2.5514 -0.7616 -0.8125 2.7217 -130.733 -1180.2209)" gradientUnits="userSpaceOnUse">
<stop offset="0" style="stop-color:#6447FF"/>
<stop offset="6.666670e-02" style="stop-color:#6348FE"/>
<stop offset="0.1333" style="stop-color:#614DFC"/>
<stop offset="0.2" style="stop-color:#5C54F8"/>
<stop offset="0.2667" style="stop-color:#565EF3"/>
<stop offset="0.3333" style="stop-color:#4E6CEC"/>
<stop offset="0.4" style="stop-color:#447BE4"/>
<stop offset="0.4667" style="stop-color:#3A8DDB"/>
<stop offset="0.5333" style="stop-color:#2F9FD1"/>
<stop offset="0.6" style="stop-color:#25B1C8"/>
<stop offset="0.6667" style="stop-color:#1BC0C0"/>
<stop offset="0.7333" style="stop-color:#13CEB9"/>
<stop offset="0.8" style="stop-color:#0DD8B4"/>
<stop offset="0.8667" style="stop-color:#08DFB0"/>
<stop offset="0.9333" style="stop-color:#06E4AE"/>
<stop offset="1" style="stop-color:#05E5AD"/>
</radialGradient>
<path fill="url(#SVGID_00000022560571240417802950000012439139323268113305_)" d="M38,18.3c-2.1-2.8-4.9-5.1-8.1-6.6
c2-1.2,4.2-1.9,6.6-1.9c2.2,0,4.3,0.6,6.2,1.7C40.8,12.9,39.2,15.3,38,18.3L38,18.3z"/>

<radialGradient id="SVGID_00000143611122169386473660000017673587931016751800_" cx="-194.7918" cy="395.2442" r="4.8781" gradientTransform="matrix(-2.5514 -0.7616 -0.8125 2.7217 -130.733 -1172.9556)" gradientUnits="userSpaceOnUse">
<stop offset="0" style="stop-color:#6447FF"/>
<stop offset="6.666670e-02" style="stop-color:#6348FE"/>
<stop offset="0.1333" style="stop-color:#614DFC"/>
<stop offset="0.2" style="stop-color:#5C54F8"/>
<stop offset="0.2667" style="stop-color:#565EF3"/>
<stop offset="0.3333" style="stop-color:#4E6CEC"/>
<stop offset="0.4" style="stop-color:#447BE4"/>
<stop offset="0.4667" style="stop-color:#3A8DDB"/>
<stop offset="0.5333" style="stop-color:#2F9FD1"/>
<stop offset="0.6" style="stop-color:#25B1C8"/>
<stop offset="0.6667" style="stop-color:#1BC0C0"/>
<stop offset="0.7333" style="stop-color:#13CEB9"/>
<stop offset="0.8" style="stop-color:#0DD8B4"/>
<stop offset="0.8667" style="stop-color:#08DFB0"/>
<stop offset="0.9333" style="stop-color:#06E4AE"/>
<stop offset="1" style="stop-color:#05E5AD"/>
</radialGradient>
<path fill="url(#SVGID_00000143611122169386473660000017673587931016751800_)" d="M38,45.2c1.2,3,2.9,5.3,4.7,6.8
c-1.9,1.1-4,1.7-6.2,1.7c-2.3,0-4.6-0.7-6.6-1.9C33.1,50.4,35.8,48.1,38,45.2L38,45.2z"/>
<path fill="#684BFE" d="M20.1,31.8c0-9,4-16.7,9.8-20.1c3.2,1.5,6,3.8,8.1,6.6c-1.5,3.7-2.5,8.4-2.5,13.5s0.9,9.8,2.5,13.5
c-2.1,2.8-4.9,5.1-8.1,6.6C24.1,48.4,20.1,40.7,20.1,31.8z"/>

<radialGradient id="SVGID_00000147942998054305738810000004710078864578628519_" cx="-212.7358" cy="363.2475" r="4.8781" gradientTransform="matrix(-2.3342 -1.063 -1.623 3.5638 149.3813 -1470.1027)" gradientUnits="userSpaceOnUse">
<stop offset="0" style="stop-color:#6447FF"/>
<stop offset="6.666670e-02" style="stop-color:#6348FE"/>
<stop offset="0.1333" style="stop-color:#614DFC"/>
<stop offset="0.2" style="stop-color:#5C54F8"/>
<stop offset="0.2667" style="stop-color:#565EF3"/>
<stop offset="0.3333" style="stop-color:#4E6CEC"/>
<stop offset="0.4" style="stop-color:#447BE4"/>
<stop offset="0.4667" style="stop-color:#3A8DDB"/>
<stop offset="0.5333" style="stop-color:#2F9FD1"/>
<stop offset="0.6" style="stop-color:#25B1C8"/>
<stop offset="0.6667" style="stop-color:#1BC0C0"/>
<stop offset="0.7333" style="stop-color:#13CEB9"/>
<stop offset="0.8" style="stop-color:#0DD8B4"/>
<stop offset="0.8667" style="stop-color:#08DFB0"/>
<stop offset="0.9333" style="stop-color:#06E4AE"/>
<stop offset="1" style="stop-color:#05E5AD"/>
</radialGradient>
<path fill="url(#SVGID_00000147942998054305738810000004710078864578628519_)" d="M50.7,42.5c0.6,3.3,1.5,6.1,2.5,8
c-1.8,2-3.8,3.1-6,3.1c-1.6,0-3.1-0.6-4.5-1.7C46.1,50.2,48.9,46.8,50.7,42.5L50.7,42.5z"/>

<radialGradient id="SVGID_00000083770737908230256670000016126156495859285174_" cx="-208.5327" cy="357.2025" r="4.8781" gradientTransform="matrix(-2.3342 -1.063 -1.623 3.5638 149.3813 -1476.8097)" gradientUnits="userSpaceOnUse">
<stop offset="0" style="stop-color:#6447FF"/>
<stop offset="6.666670e-02" style="stop-color:#6348FE"/>
<stop offset="0.1333" style="stop-color:#614DFC"/>
<stop offset="0.2" style="stop-color:#5C54F8"/>
<stop offset="0.2667" style="stop-color:#565EF3"/>
<stop offset="0.3333" style="stop-color:#4E6CEC"/>
<stop offset="0.4" style="stop-color:#447BE4"/>
<stop offset="0.4667" style="stop-color:#3A8DDB"/>
<stop offset="0.5333" style="stop-color:#2F9FD1"/>
<stop offset="0.6" style="stop-color:#25B1C8"/>
<stop offset="0.6667" style="stop-color:#1BC0C0"/>
<stop offset="0.7333" style="stop-color:#13CEB9"/>
<stop offset="0.8" style="stop-color:#0DD8B4"/>
<stop offset="0.8667" style="stop-color:#08DFB0"/>
<stop offset="0.9333" style="stop-color:#06E4AE"/>
<stop offset="1" style="stop-color:#05E5AD"/>
</radialGradient>
<path fill="url(#SVGID_00000083770737908230256670000016126156495859285174_)" d="M42.7,11.5c1.4-1.1,2.9-1.7,4.5-1.7
c2.2,0,4.3,1.1,6,3.1c-1,2-1.9,4.7-2.5,8C48.9,16.7,46.1,13.4,42.7,11.5L42.7,11.5z"/>
<path fill="#684BFE" d="M38,45.2c2.8-3.7,4.4-8.4,4.4-13.5c0-5.1-1.7-9.8-4.4-13.5c1.2-3,2.9-5.3,4.7-6.8c3.4,1.9,6.2,5.3,8,9.5
c-0.6,3.2-0.9,6.9-0.9,10.8s0.3,7.6,0.9,10.8c-1.8,4.3-4.6,7.6-8,9.5C40.8,50.6,39.2,48.2,38,45.2L38,45.2z"/>
<path fill="#321BB2" d="M38,45.2c-1.5-3.7-2.5-8.4-2.5-13.5S36.4,22,38,18.3c2.8,3.7,4.4,8.4,4.4,13.5S40.8,41.5,38,45.2z"/>
<path fill="#05E6AD" d="M53.2,12.9c1.1-2,2.3-3.1,3.6-3.1c3.9,0,7,9.8,7,22s-3.1,22-7,22c-1.3,0-2.6-1.1-3.6-3.1
c3.4-3.8,5.7-10.8,5.7-18.8C58.8,23.8,56.6,16.8,53.2,12.9z"/>

<radialGradient id="SVGID_00000009565123575973598080000009335550354766300606_" cx="-7.8671" cy="278.2442" r="4.8781" gradientTransform="matrix(1.5187 0 0 -7.8271 69.237 2209.3281)" gradientUnits="userSpaceOnUse">
<stop offset="0" style="stop-color:#05E5AD"/>
<stop offset="0.32" style="stop-color:#05E5AD;stop-opacity:0"/>
<stop offset="0.9028" style="stop-color:#6447FF"/>
</radialGradient>
<path fill="url(#SVGID_00000009565123575973598080000009335550354766300606_)" d="M53.2,12.9c1.1-2,2.3-3.1,3.6-3.1
c3.9,0,7,9.8,7,22s-3.1,22-7,22c-1.3,0-2.6-1.1-3.6-3.1c3.4-3.8,5.7-10.8,5.7-18.8C58.8,23.8,56.6,16.8,53.2,12.9z"/>
<path fill="#684BFE" d="M52.8,31.8c0-3.9-0.8-7.6-2.1-10.8c0.6-3.3,1.5-6.1,2.5-8c3.4,3.8,5.7,10.8,5.7,18.8c0,8-2.3,15-5.7,18.8
c-1-2-1.9-4.7-2.5-8C52,39.3,52.8,35.7,52.8,31.8z"/>
<path fill="#321BB2" d="M50.7,42.5c-0.6-3.2-0.9-6.9-0.9-10.8s0.3-7.6,0.9-10.8c1.3,3.2,2.1,6.9,2.1,10.8S52,39.3,50.7,42.5z"/>
</g>
</g>
</svg>

Before: 1.2 KiB, after: 9.0 KiB
Binary file not shown.
Before: 8.7 KiB

@ -9,20 +9,4 @@ Qodo Merge utilizes a variety of core abilities to provide a comprehensive and e
- [Compression strategy](https://qodo-merge-docs.qodo.ai/core-abilities/compression_strategy/)
- [Code-oriented YAML](https://qodo-merge-docs.qodo.ai/core-abilities/code_oriented_yaml/)
- [Static code analysis](https://qodo-merge-docs.qodo.ai/core-abilities/static_code_analysis/)
- [Code fine-tuning benchmark](https://qodo-merge-docs.qodo.ai/finetuning_benchmark/)

## Blogs

Here are some additional technical blogs from Qodo, that delve deeper into the core capabilities and features of Large Language Models (LLMs) when applied to coding tasks.
These resources provide more comprehensive insights into leveraging LLMs for software development.

### Code Generation and LLMs
- [State-of-the-art Code Generation with AlphaCodium – From Prompt Engineering to Flow Engineering](https://www.qodo.ai/blog/qodoflow-state-of-the-art-code-generation-for-code-contests/)
- [RAG for a Codebase with 10k Repos](https://www.qodo.ai/blog/rag-for-large-scale-code-repos/)

### Development Processes
- [Understanding the Challenges and Pain Points of the Pull Request Cycle](https://www.qodo.ai/blog/understanding-the-challenges-and-pain-points-of-the-pull-request-cycle/)
- [Introduction to Code Coverage Testing](https://www.qodo.ai/blog/introduction-to-code-coverage-testing/)

### Cost Optimization
- [Reduce Your Costs by 30% When Using GPT for Python Code](https://www.qodo.ai/blog/reduce-your-costs-by-30-when-using-gpt-3-for-python-code/)
- [Code fine-tuning benchmark](https://qodo-merge-docs.qodo.ai/finetuning_benchmark/)

@ -49,7 +49,7 @@ __old hunk__
...
```

(3) The entire PR files that were retrieved are also used to expand and enhance the PR context (see [Dynamic Context](https://qodo-merge-docs.qodo.ai/core-abilities/dynamic_context/)).
(3) The entire PR files that were retrieved are also used to expand and enhance the PR context (see [Dynamic Context](https://qodo-merge-docs.qodo.ai/core-abilities/dynamic-context/)).

(4) All the metadata described above represents several level of cumulative analysis - ranging from hunk level, to file level, to PR level, to organization level.

@ -29,6 +29,7 @@ Qodo Merge offers extensive pull request functionalities across various git prov
|-------|-----------------------------------------------------------------------------------------------------------------------|:------:|:------:|:---------:|:------------:|
| TOOLS | Review | ✅ | ✅ | ✅ | ✅ |
| | ⮑ Incremental | ✅ | | | |
| | ⮑ [SOC2 Compliance](https://qodo-merge-docs.qodo.ai/tools/review/#soc2-ticket-compliance){:target="_blank"} 💎 | ✅ | ✅ | ✅ | |
| | Ask | ✅ | ✅ | ✅ | ✅ |
| | Describe | ✅ | ✅ | ✅ | ✅ |
| | ⮑ [Inline file summary](https://qodo-merge-docs.qodo.ai/tools/describe/#inline-file-summary){:target="_blank"} 💎 | ✅ | ✅ | | |

@ -27,6 +27,27 @@ jobs:
GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
```

if you want to pin your action to a specific release (v0.23 for example) for stability reasons, use:
```yaml
...
steps:
  - name: PR Agent action step
    id: pragent
    uses: docker://codiumai/pr-agent:0.23-github_action
...
```

For enhanced security, you can also specify the Docker image by its [digest](https://hub.docker.com/repository/docker/codiumai/pr-agent/tags):
```yaml
...
steps:
  - name: PR Agent action step
    id: pragent
    uses: docker://codiumai/pr-agent@sha256:14165e525678ace7d9b51cda8652c2d74abb4e1d76b57c4a6ccaeba84663cc64
...
```

2) Add the following secret to your repository under `Settings > Secrets and variables > Actions > New repository secret > Add secret`:

```

@ -49,40 +70,6 @@ When you open your next PR, you should see a comment from `github-actions` bot w
```
See detailed usage instructions in the [USAGE GUIDE](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#github-action)

### Using a specific release
!!! tip ""
if you want to pin your action to a specific release (v0.23 for example) for stability reasons, use:
```yaml
...
steps:
  - name: PR Agent action step
    id: pragent
    uses: docker://codiumai/pr-agent:0.23-github_action
...
```

For enhanced security, you can also specify the Docker image by its [digest](https://hub.docker.com/repository/docker/codiumai/pr-agent/tags):
```yaml
...
steps:
  - name: PR Agent action step
    id: pragent
    uses: docker://codiumai/pr-agent@sha256:14165e525678ace7d9b51cda8652c2d74abb4e1d76b57c4a6ccaeba84663cc64
...
```

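A tag such as `0.23-github_action` can later be re-pushed to point at a different image, whereas a `sha256` digest identifies exact image content. A workflow lint could therefore check that `uses:` values are digest-pinned. A minimal sketch, assuming only the two reference forms shown above; `is_digest_pinned` is a hypothetical helper, and the simplified pattern does not handle registry hosts with ports or `name:tag@digest` references.

```python
import re

# name@sha256:<64 hex chars>; a simplified grammar that ignores registry
# hosts with ports and combined "name:tag@sha256:..." references.
DIGEST_REF = re.compile(r"[a-z0-9]+(?:[._/-][a-z0-9]+)*@sha256:[0-9a-f]{64}")


def is_digest_pinned(uses: str) -> bool:
    """True if a `uses:` value such as docker://image@sha256:... pins by digest."""
    ref = uses.removeprefix("docker://")
    return DIGEST_REF.fullmatch(ref) is not None


print(is_digest_pinned("docker://codiumai/pr-agent:0.23-github_action"))  # False
print(is_digest_pinned(
    "docker://codiumai/pr-agent@sha256:"
    "14165e525678ace7d9b51cda8652c2d74abb4e1d76b57c4a6ccaeba84663cc64"
))  # True
```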

### Action for GitHub enterprise server
!!! tip ""
To use the action with a GitHub enterprise server, add an environment variable `GITHUB.BASE_URL` with the API URL of your GitHub server.

For example, if your GitHub server is at `https://github.mycompany.com`, add the following to your workflow file:
```yaml
env:
  # ... previous environment values
  GITHUB.BASE_URL: "https://github.mycompany.com/api/v3"
```

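GitHub Enterprise Server serves its REST API under `/api/v3` on the server's host, which is why `GITHUB.BASE_URL` above ends in that path. A small sketch that derives the value from the server address; `ghe_api_base_url` is hypothetical and not part of PR-Agent.

```python
from urllib.parse import urlsplit, urlunsplit


def ghe_api_base_url(server_url: str) -> str:
    """Map a GitHub Enterprise Server URL to its REST API base (<server>/api/v3)."""
    parts = urlsplit(server_url.rstrip("/"))
    if parts.scheme not in ("http", "https") or not parts.netloc:
        raise ValueError(f"not an absolute http(s) URL: {server_url!r}")
    path = parts.path.rstrip("/")
    if not path.endswith("/api/v3"):
        path += "/api/v3"  # leave values that already point at the API alone
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


print(ghe_api_base_url("https://github.mycompany.com"))
# https://github.mycompany.com/api/v3
```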

---

## Run as a GitHub App

@ -1,7 +1,7 @@
# Installation

## Self-hosted Qodo Merge
If you choose to host your own Qodo Merge, you first need to acquire two tokens:
If you choose to host you own Qodo Merge, you first need to acquire two tokens:

1. An OpenAI key from [here](https://platform.openai.com/api-keys), with access to GPT-4 (or a key for other [language models](https://qodo-merge-docs.qodo.ai/usage-guide/changing_a_model/), if you prefer).
2. A GitHub\GitLab\BitBucket personal access token (classic), with the repo scope. [GitHub from [here](https://github.com/settings/tokens)]

@ -18,4 +18,4 @@ There are several ways to use self-hosted Qodo Merge:
Qodo Merge Pro, an app hosted by CodiumAI for GitHub\GitLab\BitBucket, is also available.
<br>
With Qodo Merge Pro, installation is as simple as signing up and adding the Qodo Merge app to your relevant repo.
See [here](https://qodo-merge-docs.qodo.ai/installation/pr_agent_pro/) for more details.
See [here](https://qodo-merge-docs.qodo.ai/installation/pr_agent_pro/) for more details.

@ -16,8 +16,8 @@ from pr_agent.config_loader import get_settings

def main():
    # Fill in the following values
    provider = "github" # github/gitlab/bitbucket/azure_devops
    user_token = "..." # user token
    provider = "github" # GitHub provider
    user_token = "..." # GitHub user token
    openai_key = "..." # OpenAI key
    pr_url = "..." # PR URL, for example 'https://github.com/Codium-ai/pr-agent/pull/809'
    command = "/review" # Command to run (e.g. '/review', '/describe', '/ask="What is the purpose of this PR?"', ...)

@ -42,34 +42,42 @@ A list of the relevant tools can be found in the [tools guide](../tools/ask.md).
To invoke a tool (for example `review`), you can run directly from the Docker image. Here's how:

- For GitHub:
```
docker run --rm -it -e OPENAI.KEY=<your key> -e GITHUB.USER_TOKEN=<your token> codiumai/pr-agent:latest --pr_url <pr_url> review
```
If you are using GitHub enterprise server, you need to specify the custom url as variable.
For example, if your GitHub server is at `https://github.mycompany.com`, add the following to the command:
```
-e GITHUB.BASE_URL=https://github.mycompany.com/api/v3
```
```
docker run --rm -it -e OPENAI.KEY=<your key> -e GITHUB.USER_TOKEN=<your token> codiumai/pr-agent:latest --pr_url <pr_url> review
```

- For GitLab:
```
docker run --rm -it -e OPENAI.KEY=<your key> -e CONFIG.GIT_PROVIDER=gitlab -e GITLAB.PERSONAL_ACCESS_TOKEN=<your token> codiumai/pr-agent:latest --pr_url <pr_url> review
```
```
docker run --rm -it -e OPENAI.KEY=<your key> -e CONFIG.GIT_PROVIDER=gitlab -e GITLAB.PERSONAL_ACCESS_TOKEN=<your token> codiumai/pr-agent:latest --pr_url <pr_url> review
```

If you have a dedicated GitLab instance, you need to specify the custom url as variable:
```
-e GITLAB.URL=<your gitlab instance url>
```
Note: If you have a dedicated GitLab instance, you need to specify the custom url as variable:
```
docker run --rm -it -e OPENAI.KEY=<your key> -e CONFIG.GIT_PROVIDER=gitlab -e GITLAB.PERSONAL_ACCESS_TOKEN=<your token> -e GITLAB.URL=<your gitlab instance url> codiumai/pr-agent:latest --pr_url <pr_url> review
```

- For BitBucket:
```
docker run --rm -it -e CONFIG.GIT_PROVIDER=bitbucket -e OPENAI.KEY=$OPENAI_API_KEY -e BITBUCKET.BEARER_TOKEN=$BITBUCKET_BEARER_TOKEN codiumai/pr-agent:latest --pr_url=<pr_url> review
```
```
docker run --rm -it -e CONFIG.GIT_PROVIDER=bitbucket -e OPENAI.KEY=$OPENAI_API_KEY -e BITBUCKET.BEARER_TOKEN=$BITBUCKET_BEARER_TOKEN codiumai/pr-agent:latest --pr_url=<pr_url> review
```

For other git providers, update CONFIG.GIT_PROVIDER accordingly, and check the `pr_agent/settings/.secrets_template.toml` file for the environment variables expected names and values.

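The per-provider commands above differ only in a few `-e` variables. A sketch that assembles the argument list from those variables, using the names exactly as they appear in the commands above; `build_review_command` and `PROVIDER_ENV` are hypothetical helpers, and names for other providers should be taken from `pr_agent/settings/.secrets_template.toml`, as the text says.

```python
import shlex
from typing import Optional

# Provider-specific variables, named exactly as in the commands above.
# Other providers: see pr_agent/settings/.secrets_template.toml.
PROVIDER_ENV = {
    "github": ["GITHUB.USER_TOKEN"],
    "gitlab": ["GITLAB.PERSONAL_ACCESS_TOKEN"],
    "bitbucket": ["BITBUCKET.BEARER_TOKEN"],
}


def build_review_command(
    provider: str,
    pr_url: str,
    secrets: dict[str, str],
    image: str = "codiumai/pr-agent:latest",
    extra_env: Optional[dict[str, str]] = None,
) -> list[str]:
    """Assemble the `docker run ... review` argument list for one provider."""
    args = ["docker", "run", "--rm", "-it", "-e", f"OPENAI.KEY={secrets['OPENAI.KEY']}"]
    if provider != "github":
        # The GitHub commands above omit this variable; the others set it.
        args += ["-e", f"CONFIG.GIT_PROVIDER={provider}"]
    for name in PROVIDER_ENV[provider]:
        args += ["-e", f"{name}={secrets[name]}"]
    for name, value in (extra_env or {}).items():
        # e.g. GITLAB.URL or GITHUB.BASE_URL for self-hosted servers
        args += ["-e", f"{name}={value}"]
    return args + [image, "--pr_url", pr_url, "review"]


cmd = build_review_command(
    "gitlab",
    "https://gitlab.example.com/group/project/-/merge_requests/1",
    {"OPENAI.KEY": "<your key>", "GITLAB.PERSONAL_ACCESS_TOKEN": "<your token>"},
    extra_env={"GITLAB.URL": "https://gitlab.example.com"},
)
print(shlex.join(cmd))
```

Passing the list to `subprocess.run(cmd, check=True)` avoids shell quoting entirely. When no terminal is attached (for example in CI), drop `-it`, since Docker typically fails with "the input device is not a TTY" in that case.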

---

If you want to ensure you're running a specific version of the Docker image, consider using the image's digest:
```bash
docker run --rm -it -e OPENAI.KEY=<your key> -e GITHUB.USER_TOKEN=<your token> codiumai/pr-agent@sha256:71b5ee15df59c745d352d84752d01561ba64b6d51327f97d46152f0c58a5f678 --pr_url <pr_url> review
```

Or you can run a [specific released versions](https://github.com/Codium-ai/pr-agent/blob/main/RELEASE_NOTES.md) of pr-agent, for example:
```
codiumai/pr-agent@v0.9
```

---

## Run from source

1. Clone this repository:

@ -107,7 +115,7 @@ python3 -m pr_agent.cli --issue_url <issue_url> similar_issue

...

```

[Optional] Add the pr_agent folder to your PYTHONPATH

[Optional] Add the pr_agent folder to your PYTHONPATH

```

export PYTHONPATH=$PYTHONPATH:<PATH to pr_agent folder>

```

@ -17,8 +17,8 @@ Users without a purchased seat who interact with a repository featuring Qodo Mer

Beyond this limit, Qodo Merge Pro will cease to respond to their inquiries unless a seat is purchased.

## Install Qodo Merge Pro for GitHub Enterprise Server

To use Qodo Merge Pro application on your private GitHub Enterprise Server, you will need to contact us for starting an [Enterprise](https://www.codium.ai/pricing/) trial.

You can install Qodo Merge Pro application on your GitHub Enterprise Server, and enjoy two weeks of free trial.

After the trial period, to continue using Qodo Merge Pro, you will need to contact us for an [Enterprise license](https://www.codium.ai/pricing/).

## Install Qodo Merge Pro for GitLab (Teams & Enterprise)

@ -29,6 +29,7 @@ Qodo Merge offers extensive pull request functionalities across various git prov

|-------|-----------------------------------------------------------------------------------------------------------------------|:------:|:------:|:---------:|:------------:|

| TOOLS | Review | ✅ | ✅ | ✅ | ✅ |

| | ⮑ Incremental | ✅ | | | |

| | ⮑ [SOC2 Compliance](https://qodo-merge-docs.qodo.ai/tools/review/#soc2-ticket-compliance){:target="_blank"} 💎 | ✅ | ✅ | ✅ | ✅ |

| | Ask | ✅ | ✅ | ✅ | ✅ |

| | Describe | ✅ | ✅ | ✅ | ✅ |

| | ⮑ [Inline file summary](https://qodo-merge-docs.qodo.ai/tools/describe/#inline-file-summary){:target="_blank"} 💎 | ✅ | ✅ | | ✅ |

@ -20,13 +20,14 @@ Here are some of the additional features and capabilities that Qodo Merge Pro of

| Feature | Description |

|----------------------------------------------------------------------------------------------------------------------|------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| [**Model selection**](https://qodo-merge-docs.qodo.ai/usage-guide/PR_agent_pro_models/) | Choose the model that best fits your needs, among top models like `GPT4` and `Claude-Sonnet-3.5`

| [**Global and wiki configuration**](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/) | Control configurations for many repositories from a single location; <br>Edit configuration of a single repo without committing code |

| [**Apply suggestions**](https://qodo-merge-docs.qodo.ai/tools/improve/#overview) | Generate committable code from the relevant suggestions interactively by clicking on a checkbox |

| [**Global and wiki configuration**](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/) | Control configurations for many repositories from a single location; <br>Edit configuration of a single repo without commiting code |

| [**Apply suggestions**](https://qodo-merge-docs.qodo.ai/tools/improve/#overview) | Generate commitable code from the relevant suggestions interactively by clicking on a checkbox |

| [**Suggestions impact**](https://qodo-merge-docs.qodo.ai/tools/improve/#assessing-impact) | Automatically mark suggestions that were implemented by the user (either directly in GitHub, or indirectly in the IDE) to enable tracking of the impact of the suggestions |

| [**CI feedback**](https://qodo-merge-docs.qodo.ai/tools/ci_feedback/) | Automatically analyze failed CI checks on GitHub and provide actionable feedback in the PR conversation, helping to resolve issues quickly |

| [**Advanced usage statistics**](https://www.codium.ai/contact/#/) | Qodo Merge Pro offers detailed statistics at user, repository, and company levels, including metrics about Qodo Merge usage, and also general statistics and insights |

| [**Incorporating companies' best practices**](https://qodo-merge-docs.qodo.ai/tools/improve/#best-practices) | Use the companies' best practices as reference to increase the effectiveness and the relevance of the code suggestions |

| [**Interactive triggering**](https://qodo-merge-docs.qodo.ai/tools/analyze/#example-usage) | Interactively apply different tools via the `analyze` command |

| [**SOC2 compliance check**](https://qodo-merge-docs.qodo.ai/tools/review/#configuration-options) | Ensures the PR contains a ticket to a project management system (e.g., Jira, Asana, Trello, etc.)

| [**Custom labels**](https://qodo-merge-docs.qodo.ai/tools/describe/#handle-custom-labels-from-the-repos-labels-page) | Define custom labels for Qodo Merge to assign to the PR |

### Additional tools

@ -48,4 +49,4 @@ Here are additional tools that are available only for Qodo Merge Pro users:

Qodo Merge Pro leverages the world's leading code models - Claude 3.5 Sonnet and GPT-4.

As a result, its primary tools such as `describe`, `review`, and `improve`, as well as the PR-chat feature, support virtually all programming languages.

For specialized commands that require static code analysis, Qodo Merge Pro offers support for specific languages. For more details about features that require static code analysis, please refer to the [documentation](https://qodo-merge-docs.qodo.ai/tools/analyze/#overview).

For specialized commands that require static code analysis, Qodo Merge Pro offers support for specific languages. For more details about features that require static code analysis, please refer to the [documentation](https://qodo-merge-docs.qodo.ai/tools/analyze/#overview).

@ -25,7 +25,7 @@ There are 3 ways to enable custom labels:

When working from CLI, you need to apply the [configuration changes](#configuration-options) to the [custom_labels file](https://github.com/Codium-ai/pr-agent/blob/main/pr_agent/settings/custom_labels.toml):

#### 2. Repo configuration file

To enable custom labels, you need to apply the [configuration changes](#configuration-options) to the local `.pr_agent.toml` file in your repository.

To enable custom labels, you need to apply the [configuration changes](#configuration-options) to the local `.pr_agent.toml` file in you repository.

#### 3. Handle custom labels from the Repo's labels page 💎

> This feature is available only in Qodo Merge Pro

@ -57,4 +57,4 @@ description = "Description of when AI should suggest this label"

[custom_labels."Custom Label 2"]

description = "Description of when AI should suggest this label 2"

```

```

@ -34,7 +34,7 @@ pr_commands = [

]

[pr_description]

publish_labels = true

publish_labels = ...

...

```

@ -49,7 +49,7 @@ publish_labels = true

<table>

<tr>

<td><b>publish_labels</b></td>

<td>If set to true, the tool will publish labels to the PR. Default is false.</td>

<td>If set to true, the tool will publish the labels to the PR. Default is true.</td>

</tr>

<tr>

<td><b>publish_description_as_comment</b></td>

@ -67,33 +67,6 @@ In post-process, Qodo Merge counts the number of suggestions that were implement

{width=512}

## Suggestion tracking 💎

`Platforms supported: GitHub, GitLab`

Qodo Merge employs an novel detection system to automatically [identify](https://qodo-merge-docs.qodo.ai/core-abilities/impact_evaluation/) AI code suggestions that PR authors have accepted and implemented.

Accepted suggestions are also automatically documented in a dedicated wiki page called `.pr_agent_accepted_suggestions`, allowing users to track historical changes, assess the tool's effectiveness, and learn from previously implemented recommendations in the repository.

An example [result](https://github.com/Codium-ai/pr-agent/wiki/.pr_agent_accepted_suggestions):

[{width=768}](https://github.com/Codium-ai/pr-agent/wiki/.pr_agent_accepted_suggestions)

This dedicated wiki page will also serve as a foundation for future AI model improvements, allowing it to learn from historically implemented suggestions and generate more targeted, contextually relevant recommendations.

This feature is controlled by a boolean configuration parameter: `pr_code_suggestions.wiki_page_accepted_suggestions` (default is true).

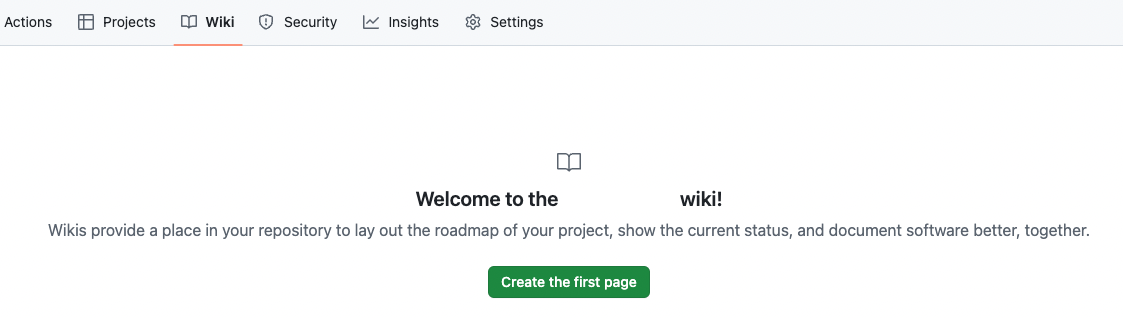

!!! note "Wiki must be enabled"

While the aggregation process is automatic, GitHub repositories require a one-time manual wiki setup.

To initialize the wiki: navigate to `Wiki`, select `Create the first page`, then click `Save page`.

{width=768}

Once a wiki repo is created, the tool will automatically use this wiki for tracking suggestions.

!!! note "Why a wiki page?"

Your code belongs to you, and we respect your privacy. Hence, we won't store any code suggestions in an external database.

Instead, we leverage a dedicated private page, within your repository wiki, to track suggestions. This approach offers convenient secure suggestion tracking while avoiding pull requests or any noise to the main repository.

## Usage Tips

@ -141,16 +114,9 @@ code_suggestions_self_review_text = "... (your text here) ..."

{width=512}

!!! tip "Tip - Reducing visual footprint after self-review 💎"

The configuration parameter `pr_code_suggestions.fold_suggestions_on_self_review` (default is True)

can be used to automatically fold the suggestions after the user clicks the self-review checkbox.

This reduces the visual footprint of the suggestions, and also indicates to the PR reviewer that the suggestions have been reviewed by the PR author, and don't require further attention.

!!! tip "Tip - Demanding self-review from the PR author 💎"

!!! tip "Tip - demanding self-review from the PR author 💎"

By setting:

```toml

@ -299,10 +265,6 @@ Using a combination of both can help the AI model to provide relevant and tailor

<td><b>enable_chat_text</b></td>

<td>If set to true, the tool will display a reference to the PR chat in the comment. Default is true.</td>

</tr>

<tr>

<td><b>wiki_page_accepted_suggestions</b></td>

<td>If set to true, the tool will automatically track accepted suggestions in a dedicated wiki page called `.pr_agent_accepted_suggestions`. Default is true.</td>

</tr>

</table>

??? example "Params for number of suggestions and AI calls"

@ -138,9 +138,20 @@ num_code_suggestions = ...

<td><b>require_security_review</b></td>

<td>If set to true, the tool will add a section that checks if the PR contains a possible security or vulnerability issue. Default is true.</td>

</tr>

</table>

!!! example "SOC2 ticket compliance 💎"

This sub-tool checks if the PR description properly contains a ticket to a project management system (e.g., Jira, Asana, Trello, etc.), as required by SOC2 compliance. If not, it will add a label to the PR: "Missing SOC2 ticket".

<table>

<tr>

<td><b>require_ticket_analysis_review</b></td>

<td>If set to true, and the PR contains a GitHub ticket number, the tool will add a section that checks if the PR in fact fulfilled the ticket requirements. Default is true.</td>

<td><b>require_soc2_ticket</b></td>

<td>If set to true, the SOC2 ticket checker sub-tool will be enabled. Default is false.</td>

</tr>

<tr>

<td><b>soc2_ticket_prompt</b></td>

<td>The prompt for the SOC2 ticket review. Default is: `Does the PR description include a link to ticket in a project management system (e.g., Jira, Asana, Trello, etc.) ?`. Edit this field if your compliance requirements are different.</td>

</tr>

</table>
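
The SOC2 check described above is performed by the model, driven by `soc2_ticket_prompt`. To illustrate the kind of condition that prompt describes, here is a deliberately simplified regex-based sketch; the helper name `soc2_labels` and the URL patterns are assumptions rather than PR-Agent code, and a real deployment would need patterns for its own tracker domains.

```python
import re

# Hypothetical URL patterns for a few project-management systems.
TICKET_PATTERNS = [
    re.compile(r"https?://[\w.-]+\.atlassian\.net/browse/[A-Z][A-Z0-9]+-\d+"),  # Jira Cloud issue
    re.compile(r"https?://app\.asana\.com/\S+"),                                  # Asana task
    re.compile(r"https?://trello\.com/c/\w+"),                                    # Trello card
]

MISSING_LABEL = "Missing SOC2 ticket"


def soc2_labels(pr_description: str) -> list[str]:
    """Return the labels a naive SOC2 check would add to a PR."""
    has_ticket = any(p.search(pr_description) for p in TICKET_PATTERNS)
    return [] if has_ticket else [MISSING_LABEL]
```

A pattern list like this is cheap and deterministic, but unlike a prompt it cannot recognize tickets referenced in free-form wording.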

@ -182,7 +193,7 @@ If enabled, the `review` tool can approve a PR when a specific comment, `/review

It is recommended to review the [Configuration options](#configuration-options) section, and choose the relevant options for your use case.

Some of the features that are disabled by default are quite useful, and should be considered for enabling. For example:

`require_score_review`, and more.

`require_score_review`, `require_soc2_ticket`, and more.

On the other hand, if you find one of the enabled features to be irrelevant for your use case, disable it. No default configuration can fit all use cases.

@ -18,7 +18,7 @@ In terms of precedence, wiki configurations will override local configurations,

## Wiki configuration file 💎

`Platforms supported: GitHub, GitLab, Bitbucket`

`Platforms supported: GitHub, GitLab`

With Qodo Merge Pro, you can set configurations by creating a page called `.pr_agent.toml` in the [wiki](https://github.com/Codium-ai/pr-agent/wiki/pr_agent.toml) of the repo.

The advantage of this method is that it allows to set configurations without needing to commit new content to the repo - just edit the wiki page and **save**.
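
The hunk header above states that wiki configurations override local configurations. A minimal sketch of that kind of layered precedence, using a hypothetical recursive `merge_settings` helper and plain dicts in place of PR-Agent's actual settings objects; treating built-in defaults as the lowest layer is an assumption.

```python
from copy import deepcopy
from typing import Any


def merge_settings(*layers: dict[str, Any]) -> dict[str, Any]:
    """Merge config layers, lowest precedence first; later layers win.

    Hypothetical illustration: merge_settings(defaults, local_toml, wiki_toml).
    Nested tables such as [pr_reviewer] are merged key by key.
    """
    merged: dict[str, Any] = {}
    for layer in layers:
        _merge_into(merged, layer)
    return merged


def _merge_into(target: dict[str, Any], source: dict[str, Any]) -> None:
    for key, value in source.items():
        if isinstance(value, dict) and isinstance(target.get(key), dict):
            _merge_into(target[key], value)  # merge nested tables recursively
        else:
            target[key] = deepcopy(value)    # copy so input layers are never mutated


if __name__ == "__main__":
    defaults = {"pr_reviewer": {"require_score_review": False, "require_soc2_ticket": False}}
    local = {"pr_reviewer": {"require_score_review": True}}
    wiki = {"pr_reviewer": {"require_soc2_ticket": True}}
    print(merge_settings(defaults, local, wiki))
```

Merging per key, rather than replacing whole tables, lets a wiki page override a single option without restating the rest of the section.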

@ -82,11 +82,11 @@

<footer class="wrapper">

<div class="container">

<p class="footer-text">© 2024 <a href="https://www.qodo.ai/" target="_blank" rel="noopener">Qodo</a></p>

<p class="footer-text">© 2024 <a href="https://www.codium.ai/" target="_blank" rel="noopener">CodiumAI</a></p>

<div class="footer-links">

<a href="https://qodo-gen-docs.qodo.ai/">Qodo Gen</a>

<a href="https://codiumate-docs.codium.ai/">Codiumate</a>

<p>|</p>

<a href="https://qodo-flow-docs.qodo.ai/">AlphaCodium</a>

<a href="https://alpha-codium-docs.codium.ai/">AlphaCodium</a>

</div>

<div class="social-icons">

<a href="https://github.com/Codium-ai" target="_blank" rel="noopener" title="github.com" class="social-link">

@ -95,16 +95,16 @@

<a href="https://discord.com/invite/SgSxuQ65GF" target="_blank" rel="noopener" title="discord.com" class="social-link">

<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 640 512"><!--! Font Awesome Free 6.5.1 by @fontawesome - https://fontawesome.com License - https://fontawesome.com/license/free (Icons: CC BY 4.0, Fonts: SIL OFL 1.1, Code: MIT License) Copyright 2023 Fonticons, Inc.--><path d="M524.531 69.836a1.5 1.5 0 0 0-.764-.7A485.065 485.065 0 0 0 404.081 32.03a1.816 1.816 0 0 0-1.923.91 337.461 337.461 0 0 0-14.9 30.6 447.848 447.848 0 0 0-134.426 0 309.541 309.541 0 0 0-15.135-30.6 1.89 1.89 0 0 0-1.924-.91 483.689 483.689 0 0 0-119.688 37.107 1.712 1.712 0 0 0-.788.676C39.068 183.651 18.186 294.69 28.43 404.354a2.016 2.016 0 0 0 .765 1.375 487.666 487.666 0 0 0 146.825 74.189 1.9 1.9 0 0 0 2.063-.676A348.2 348.2 0 0 0 208.12 430.4a1.86 1.86 0 0 0-1.019-2.588 321.173 321.173 0 0 1-45.868-21.853 1.885 1.885 0 0 1-.185-3.126 251.047 251.047 0 0 0 9.109-7.137 1.819 1.819 0 0 1 1.9-.256c96.229 43.917 200.41 43.917 295.5 0a1.812 1.812 0 0 1 1.924.233 234.533 234.533 0 0 0 9.132 7.16 1.884 1.884 0 0 1-.162 3.126 301.407 301.407 0 0 1-45.89 21.83 1.875 1.875 0 0 0-1 2.611 391.055 391.055 0 0 0 30.014 48.815 1.864 1.864 0 0 0 2.063.7A486.048 486.048 0 0 0 610.7 405.729a1.882 1.882 0 0 0 .765-1.352c12.264-126.783-20.532-236.912-86.934-334.541ZM222.491 337.58c-28.972 0-52.844-26.587-52.844-59.239s23.409-59.241 52.844-59.241c29.665 0 53.306 26.82 52.843 59.239 0 32.654-23.41 59.241-52.843 59.241Zm195.38 0c-28.971 0-52.843-26.587-52.843-59.239s23.409-59.241 52.843-59.241c29.667 0 53.307 26.82 52.844 59.239 0 32.654-23.177 59.241-52.844 59.241Z"></path></svg>

</a>

<a href="https://www.youtube.com/@QodoAI" target="_blank" rel="noopener" title="www.youtube.com" class="social-link">

<a href="https://www.youtube.com/@Codium-AI" target="_blank" rel="noopener" title="www.youtube.com" class="social-link">

<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 576 512"><!--! Font Awesome Free 6.5.1 by @fontawesome - https://fontawesome.com License - https://fontawesome.com/license/free (Icons: CC BY 4.0, Fonts: SIL OFL 1.1, Code: MIT License) Copyright 2023 Fonticons, Inc.--><path d="M549.655 124.083c-6.281-23.65-24.787-42.276-48.284-48.597C458.781 64 288 64 288 64S117.22 64 74.629 75.486c-23.497 6.322-42.003 24.947-48.284 48.597-11.412 42.867-11.412 132.305-11.412 132.305s0 89.438 11.412 132.305c6.281 23.65 24.787 41.5 48.284 47.821C117.22 448 288 448 288 448s170.78 0 213.371-11.486c23.497-6.321 42.003-24.171 48.284-47.821 11.412-42.867 11.412-132.305 11.412-132.305s0-89.438-11.412-132.305zm-317.51 213.508V175.185l142.739 81.205-142.739 81.201z"></path></svg>

</a>

<a href="https://www.linkedin.com/company/qodoai" target="_blank" rel="noopener" title="www.linkedin.com" class="social-link">

<a href="https://www.linkedin.com/company/codiumai" target="_blank" rel="noopener" title="www.linkedin.com" class="social-link">

<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 448 512"><!--! Font Awesome Free 6.5.1 by @fontawesome - https://fontawesome.com License - https://fontawesome.com/license/free (Icons: CC BY 4.0, Fonts: SIL OFL 1.1, Code: MIT License) Copyright 2023 Fonticons, Inc.--><path d="M416 32H31.9C14.3 32 0 46.5 0 64.3v383.4C0 465.5 14.3 480 31.9 480H416c17.6 0 32-14.5 32-32.3V64.3c0-17.8-14.4-32.3-32-32.3zM135.4 416H69V202.2h66.5V416zm-33.2-243c-21.3 0-38.5-17.3-38.5-38.5S80.9 96 102.2 96c21.2 0 38.5 17.3 38.5 38.5 0 21.3-17.2 38.5-38.5 38.5zm282.1 243h-66.4V312c0-24.8-.5-56.7-34.5-56.7-34.6 0-39.9 27-39.9 54.9V416h-66.4V202.2h63.7v29.2h.9c8.9-16.8 30.6-34.5 62.9-34.5 67.2 0 79.7 44.3 79.7 101.9V416z"></path></svg>

</a>

<a href="https://twitter.com/QodoAI" target="_blank" rel="noopener" title="twitter.com" class="social-link">

<a href="https://twitter.com/CodiumAI" target="_blank" rel="noopener" title="twitter.com" class="social-link">

<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 512 512"><!--! Font Awesome Free 6.5.1 by @fontawesome - https://fontawesome.com License - https://fontawesome.com/license/free (Icons: CC BY 4.0, Fonts: SIL OFL 1.1, Code: MIT License) Copyright 2023 Fonticons, Inc.--><path d="M459.37 151.716c.325 4.548.325 9.097.325 13.645 0 138.72-105.583 298.558-298.558 298.558-59.452 0-114.68-17.219-161.137-47.106 8.447.974 16.568 1.299 25.34 1.299 49.055 0 94.213-16.568 130.274-44.832-46.132-.975-84.792-31.188-98.112-72.772 6.498.974 12.995 1.624 19.818 1.624 9.421 0 18.843-1.3 27.614-3.573-48.081-9.747-84.143-51.98-84.143-102.985v-1.299c13.969 7.797 30.214 12.67 47.431 13.319-28.264-18.843-46.781-51.005-46.781-87.391 0-19.492 5.197-37.36 14.294-52.954 51.655 63.675 129.3 105.258 216.365 109.807-1.624-7.797-2.599-15.918-2.599-24.04 0-57.828 46.782-104.934 104.934-104.934 30.213 0 57.502 12.67 76.67 33.137 23.715-4.548 46.456-13.32 66.599-25.34-7.798 24.366-24.366 44.833-46.132 57.827 21.117-2.273 41.584-8.122 60.426-16.243-14.292 20.791-32.161 39.308-52.628 54.253z"></path></svg>

</a>

<a href="https://www.instagram.com/qodo_ai" target="_blank" rel="noopener" title="www.instagram.com" class="social-link">

<a href="https://www.instagram.com/codiumai/" target="_blank" rel="noopener" title="www.instagram.com" class="social-link">

<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 448 512"><!--! Font Awesome Free 6.5.1 by @fontawesome - https://fontawesome.com License - https://fontawesome.com/license/free (Icons: CC BY 4.0, Fonts: SIL OFL 1.1, Code: MIT License) Copyright 2023 Fonticons, Inc.--><path d="M224.1 141c-63.6 0-114.9 51.3-114.9 114.9s51.3 114.9 114.9 114.9S339 319.5 339 255.9 287.7 141 224.1 141zm0 189.6c-41.1 0-74.7-33.5-74.7-74.7s33.5-74.7 74.7-74.7 74.7 33.5 74.7 74.7-33.6 74.7-74.7 74.7zm146.4-194.3c0 14.9-12 26.8-26.8 26.8-14.9 0-26.8-12-26.8-26.8s12-26.8 26.8-26.8 26.8 12 26.8 26.8zm76.1 27.2c-1.7-35.9-9.9-67.7-36.2-93.9-26.2-26.2-58-34.4-93.9-36.2-37-2.1-147.9-2.1-184.9 0-35.8 1.7-67.6 9.9-93.9 36.1s-34.4 58-36.2 93.9c-2.1 37-2.1 147.9 0 184.9 1.7 35.9 9.9 67.7 36.2 93.9s58 34.4 93.9 36.2c37 2.1 147.9 2.1 184.9 0 35.9-1.7 67.7-9.9 93.9-36.2 26.2-26.2 34.4-58 36.2-93.9 2.1-37 2.1-147.8 0-184.8zM398.8 388c-7.8 19.6-22.9 34.7-42.6 42.6-29.5 11.7-99.5 9-132.1 9s-102.7 2.6-132.1-9c-19.6-7.8-34.7-22.9-42.6-42.6-11.7-29.5-9-99.5-9-132.1s-2.6-102.7 9-132.1c7.8-19.6 22.9-34.7 42.6-42.6 29.5-11.7 99.5-9 132.1-9s102.7-2.6 132.1 9c19.6 7.8 34.7 22.9 42.6 42.6 11.7 29.5 9 99.5 9 132.1s2.7 102.7-9 132.1z"></path></svg>

</a>

</div>

@ -34,7 +34,6 @@ MAX_TOKENS = {

'vertex_ai/claude-3-sonnet@20240229': 100000,

'vertex_ai/claude-3-opus@20240229': 100000,

'vertex_ai/claude-3-5-sonnet@20240620': 100000,

'vertex_ai/claude-3-5-sonnet-v2@20241022': 100000,

'vertex_ai/gemini-1.5-pro': 1048576,

'vertex_ai/gemini-1.5-flash': 1048576,

'vertex_ai/gemma2': 8200,

@ -45,14 +44,12 @@ MAX_TOKENS = {

'anthropic.claude-v2': 100000,

'anthropic/claude-3-opus-20240229': 100000,

'anthropic/claude-3-5-sonnet-20240620': 100000,

'anthropic/claude-3-5-sonnet-20241022': 100000,

'bedrock/anthropic.claude-instant-v1': 100000,

'bedrock/anthropic.claude-v2': 100000,

'bedrock/anthropic.claude-v2:1': 100000,

'bedrock/anthropic.claude-3-sonnet-20240229-v1:0': 100000,

'bedrock/anthropic.claude-3-haiku-20240307-v1:0': 100000,

'bedrock/anthropic.claude-3-5-sonnet-20240620-v1:0': 100000,

'bedrock/anthropic.claude-3-5-sonnet-20241022-v2:0': 100000,

'claude-3-5-sonnet': 100000,

'groq/llama3-8b-8192': 8192,

'groq/llama3-70b-8192': 8192,
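
`MAX_TOKENS` maps a model identifier, including any provider prefix such as `vertex_ai/`, `bedrock/` or `groq/`, to a token limit, so a model missing from the table (for example, one removed in the hunks above) has no entry. A hedged sketch of consulting such a table, with a hypothetical `get_max_tokens` helper and an optional caller-supplied fallback; the entries are copied from the hunks above, and PR-Agent's own lookup may behave differently.

```python
from typing import Optional

# A few entries copied from the MAX_TOKENS table above.
MAX_TOKENS = {
    'vertex_ai/gemini-1.5-pro': 1048576,
    'anthropic/claude-3-5-sonnet-20240620': 100000,
    'claude-3-5-sonnet': 100000,
    'groq/llama3-70b-8192': 8192,
}


def get_max_tokens(model: str, fallback: Optional[int] = None) -> int:
    """Hypothetical lookup: exact model identifier first, then the optional fallback."""
    if model in MAX_TOKENS:
        return MAX_TOKENS[model]
    if fallback is not None:
        return fallback
    raise KeyError(f"Model {model!r} is not in MAX_TOKENS; add it or pass a fallback")
```

Failing loudly on an unknown model, instead of guessing a limit, avoids silently sending prompts that exceed the model's context window.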

@ -171,7 +171,6 @@ class LiteLLMAIHandler(BaseAiHandler):

get_logger().warning(

"Empty system prompt for claude model. Adding a newline character to prevent OpenAI API error.")

messages = [{"role": "system", "content": system}, {"role": "user", "content": user}]

if img_path:

try:

# check if the image link is alive

@ -186,30 +185,14 @@ class LiteLLMAIHandler(BaseAiHandler):

messages[1]["content"] = [{"type": "text", "text": messages[1]["content"]},

{"type": "image_url", "image_url": {"url": img_path}}]

# Currently O1 does not support separate system and user prompts

O1_MODEL_PREFIX = 'o1-'

model_type = model.split('/')[-1] if '/' in model else model

if model_type.startswith(O1_MODEL_PREFIX):

user = f"{system}\n\n\n{user}"

system = ""

get_logger().info(f"Using O1 model, combining system and user prompts")

messages = [{"role": "user", "content": user}]

kwargs = {

"model": model,

"deployment_id": deployment_id,

"messages": messages,

"timeout": get_settings().config.ai_timeout,

"api_base": self.api_base,

}

else:

kwargs = {

"model": model,

"deployment_id": deployment_id,

"messages": messages,

"temperature": temperature,

"timeout": get_settings().config.ai_timeout,

"api_base": self.api_base,

}

kwargs = {

"model": model,

"deployment_id": deployment_id,

"messages": messages,

"temperature": temperature,

"timeout": get_settings().config.ai_timeout,

"api_base": self.api_base,

}

if get_settings().litellm.get("enable_callbacks", False):

kwargs = self.add_litellm_callbacks(kwargs)
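
The O1 branch in the hunk above exists because, per its code comment, O1 models did not support separate system and user prompts: the system text is folded into the user message, and that branch's `kwargs` omit `temperature`. A standalone sketch of just that message-building step; the function name `build_request` is hypothetical, while the `o1-` prefix check and the `\n\n\n` separator are taken from the diff.

```python
O1_MODEL_PREFIX = 'o1-'


def build_request(model: str, system: str, user: str, temperature: float) -> dict:
    """Build chat-completion kwargs, merging prompts for O1-style models."""
    # Drop a provider prefix such as "openai/" before checking the model family.
    model_type = model.split('/')[-1] if '/' in model else model
    if model_type.startswith(O1_MODEL_PREFIX):
        # Single user message, no system role and no temperature.
        messages = [{"role": "user", "content": f"{system}\n\n\n{user}"}]
        return {"model": model, "messages": messages}
    messages = [{"role": "system", "content": system},
                {"role": "user", "content": user}]
    return {"model": model, "messages": messages, "temperature": temperature}
```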

@ -281,7 +281,7 @@ __old hunk__

prev_header_line = []

header_line = []

for line_i, line in enumerate(patch_lines):

if 'no newline at end of file' in line.lower():

if 'no newline at end of file' in line.lower().strip().strip('//'):

continue

if line.startswith('@@'):

@ -290,19 +290,18 @@ __old hunk__

if match and (new_content_lines or old_content_lines): # found a new hunk, split the previous lines

if prev_header_line:

patch_with_lines_str += f'\n{prev_header_line}\n'

is_plus_lines = is_minus_lines = False

if new_content_lines:

is_plus_lines = any([line.startswith('+') for line in new_content_lines])

if is_plus_lines:

patch_with_lines_str = patch_with_lines_str.rstrip() + '\n__new hunk__\n'

for i, line_new in enumerate(new_content_lines):

patch_with_lines_str += f"{start2 + i} {line_new}\n"

if old_content_lines:

is_minus_lines = any([line.startswith('-') for line in old_content_lines])

if is_plus_lines or is_minus_lines: # notice 'True' here - we always present __new hunk__ for section, otherwise LLM gets confused

patch_with_lines_str = patch_with_lines_str.rstrip() + '\n__new hunk__\n'

for i, line_new in enumerate(new_content_lines):

patch_with_lines_str += f"{start2 + i} {line_new}\n"

if is_minus_lines:

patch_with_lines_str = patch_with_lines_str.rstrip() + '\n__old hunk__\n'

for line_old in old_content_lines:

patch_with_lines_str += f"{line_old}\n"

if is_minus_lines:

patch_with_lines_str = patch_with_lines_str.rstrip() + '\n__old hunk__\n'

for line_old in old_content_lines:

patch_with_lines_str += f"{line_old}\n"

new_content_lines = []

old_content_lines = []

if match:

@ -326,19 +325,18 @@ __old hunk__

# finishing last hunk

if match and new_content_lines:

patch_with_lines_str += f'\n{header_line}\n'

is_plus_lines = is_minus_lines = False

if new_content_lines:

is_plus_lines = any([line.startswith('+') for line in new_content_lines])

if is_plus_lines:

patch_with_lines_str = patch_with_lines_str.rstrip() + '\n__new hunk__\n'

for i, line_new in enumerate(new_content_lines):

patch_with_lines_str += f"{start2 + i} {line_new}\n"

if old_content_lines:

is_minus_lines = any([line.startswith('-') for line in old_content_lines])

if is_plus_lines or is_minus_lines: # notice 'True' here - we always present __new hunk__ for section, otherwise LLM gets confused

patch_with_lines_str = patch_with_lines_str.rstrip() + '\n__new hunk__\n'

for i, line_new in enumerate(new_content_lines):

patch_with_lines_str += f"{start2 + i} {line_new}\n"

if is_minus_lines:

patch_with_lines_str = patch_with_lines_str.rstrip() + '\n__old hunk__\n'

for line_old in old_content_lines:

patch_with_lines_str += f"{line_old}\n"

if is_minus_lines:

patch_with_lines_str = patch_with_lines_str.rstrip() + '\n__old hunk__\n'

for line_old in old_content_lines:

patch_with_lines_str += f"{line_old}\n"

return patch_with_lines_str.rstrip()
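
The two hunks above change how each diff hunk is re-rendered for the model: lines of the new side go under `__new hunk__`, each prefixed with its line number in the new file, and lines of the old side go under `__old hunk__` without numbers. A simplified sketch of that output format for a single hunk, following the newer variant in which `__new hunk__` is emitted whenever the hunk contains any `+` or `-` line. The helper `format_hunk` is hypothetical; it assumes context lines belong to both sides and omits the header and "no newline at end of file" handling of the real function.

```python
def format_hunk(hunk_lines: list[str], start2: int) -> str:
    """Render one unified-diff hunk in the __new hunk__ / __old hunk__ layout.

    `start2` is the first line number of the hunk in the new file
    (the `+c` in `@@ -a,b +c,d @@`).
    """
    # Context lines (leading space) are assumed to appear on both sides.
    new_content_lines = [ln for ln in hunk_lines if not ln.startswith('-')]
    old_content_lines = [ln for ln in hunk_lines if not ln.startswith('+')]
    is_plus_lines = any(ln.startswith('+') for ln in new_content_lines)
    is_minus_lines = any(ln.startswith('-') for ln in old_content_lines)

    out = ""
    if is_plus_lines or is_minus_lines:
        out += "__new hunk__\n"
        for i, line_new in enumerate(new_content_lines):
            out += f"{start2 + i} {line_new}\n"
    if is_minus_lines:
        out += "__old hunk__\n"
        for line_old in old_content_lines:
            out += f"{line_old}\n"
    return out.rstrip()


if __name__ == "__main__":
    print(format_hunk([" a", "-b", "+c", " d"], start2=10))
```

Always showing the numbered new side, even for deletion-only hunks, gives the model a stable line reference for every hunk it is asked to comment on.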

@ -1,22 +1,18 @@

from __future__ import annotations

import html2text

import html

import copy

import difflib

import hashlib

import html

import json

import os

import re

import textwrap

import time

import traceback

from datetime import datetime

from enum import Enum

from typing import Any, List, Tuple

import html2text

import requests

import yaml

from pydantic import BaseModel

from starlette_context import context

@ -114,7 +110,6 @@ def convert_to_markdown_v2(output_data: dict,

"Insights from user's answers": "📝",

"Code feedback": "🤖",

"Estimated effort to review [1-5]": "⏱️",

"Ticket compliance check": "🎫",

}

markdown_text = ""

if not incremental_review:

@ -170,8 +165,6 @@ def convert_to_markdown_v2(output_data: dict,

markdown_text += f'### {emoji} No relevant tests\n\n'

else:

markdown_text += f"### PR contains tests\n\n"

elif 'ticket compliance check' in key_nice.lower():

markdown_text = ticket_markdown_logic(emoji, markdown_text, value, gfm_supported)

elif 'security concerns' in key_nice.lower():

if gfm_supported:

markdown_text += f"<tr><td>"

@ -261,52 +254,6 @@ def convert_to_markdown_v2(output_data: dict,

return markdown_text

def ticket_markdown_logic(emoji, markdown_text, value, gfm_supported) -> str:

ticket_compliance_str = ""

final_compliance_level = -1

if isinstance(value, list):

for v in value:

ticket_url = v.get('ticket_url', '').strip()

compliance_level = v.get('overall_compliance_level', '').strip()

# add emojis, if 'Fully compliant' ✅, 'Partially compliant' 🔶, or 'Not compliant' ❌

if compliance_level.lower() == 'fully compliant':

# compliance_level = '✅ Fully compliant'

final_compliance_level = 2 if final_compliance_level == -1 else 1

elif compliance_level.lower() == 'partially compliant':

# compliance_level = '🔶 Partially compliant'

final_compliance_level = 1

elif compliance_level.lower() == 'not compliant':

# compliance_level = '❌ Not compliant'

final_compliance_level = 0 if final_compliance_level < 1 else 1

# explanation = v.get('compliance_analysis', '').strip()

explanation = ''

fully_compliant_str = v.get('fully_compliant_requirements', '').strip()

not_compliant_str = v.get('not_compliant_requirements', '').strip()

if fully_compliant_str:

explanation += f"Fully compliant requirements:\n{fully_compliant_str}\n\n"

if not_compliant_str:

explanation += f"Not compliant requirements:\n{not_compliant_str}\n\n"

ticket_compliance_str += f"\n\n**[{ticket_url.split('/')[-1]}]({ticket_url}) - {compliance_level}**\n\n{explanation}\n\n"

if final_compliance_level == 2:

compliance_level = '✅'

elif final_compliance_level == 1:

compliance_level = '🔶'

else:

compliance_level = '❌'

if gfm_supported:

markdown_text += f"<tr><td>\n\n"

markdown_text += f"**{emoji} Ticket compliance analysis {compliance_level}**\n\n"

markdown_text += ticket_compliance_str

markdown_text += f"</td></tr>\n"

else:

markdown_text += f"### {emoji} Ticket compliance analysis {compliance_level}\n\n"

markdown_text += ticket_compliance_str+"\n\n"

return markdown_text
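
`ticket_markdown_logic` folds the per-ticket `overall_compliance_level` strings into a single ✅ / 🔶 / ❌ marker. A sketch of one way to express that aggregation as a standalone function, assuming that all recognized tickets fully compliant gives ✅, all not compliant (or none recognized) gives ❌, and any mix gives 🔶. This is a simplification, not a line-for-line port: the loop above, for example, yields 🔶 as soon as a second ticket follows a fully compliant one. The function name is hypothetical.

```python
FULL, PARTIAL, NOT = 'fully compliant', 'partially compliant', 'not compliant'


def overall_compliance_emoji(levels: list[str]) -> str:
    """Aggregate per-ticket compliance strings into one emoji (sketch)."""
    normalized = [lvl.strip().lower() for lvl in levels]
    # Ignore unrecognized strings, as the original loop leaves its state unchanged for them.
    known = [lvl for lvl in normalized if lvl in (FULL, PARTIAL, NOT)]
    if known and all(lvl == FULL for lvl in known):
        return '✅'
    if not known or all(lvl == NOT for lvl in known):
        return '❌'
    return '🔶'
```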

def process_can_be_split(emoji, value):

try:

# key_nice = "Can this PR be split?"

@ -607,8 +554,7 @@ def load_yaml(response_text: str, keys_fix_yaml: List[str] = [], first_key="", l

get_logger().warning(f"Initial failure to parse AI prediction: {e}")