Mirror of https://github.com/qodo-ai/pr-agent.git (synced 2025-07-21 04:50:39 +08:00)

Compare commits: mrT23-patc ... es/add_qm_ (1 commit)

| Author | SHA1 | Date |
|---|---|---|
| | af351cada2 | |

@@ -1,4 +1,4 @@
-
+<div align="center">
 
 <div align="center">
 

@@ -22,7 +22,6 @@ PR-Agent aims to help efficiently review and handle pull requests, by providing
 [](https://github.com/apps/qodo-merge-pro/)
 [](https://github.com/apps/qodo-merge-pro-for-open-source/)
 [](https://discord.com/invite/SgSxuQ65GF)
-<!-- TODO: add badge also for twitter -->
 <a href="https://github.com/Codium-ai/pr-agent/commits/main">
 <img alt="GitHub" src="https://img.shields.io/github/last-commit/Codium-ai/pr-agent/main?style=for-the-badge" height="20">
 </a>

@@ -45,7 +44,7 @@ PR-Agent aims to help efficiently review and handle pull requests, by providing
 ## Getting Started
 
 ### Try it Instantly
-Test PR-Agent on any public GitHub repository by commenting `@CodiumAI-Agent /improve`. The bot will reply with code suggestions
+Test PR-Agent on any public GitHub repository by commenting `@CodiumAI-Agent /improve`
 
 ### GitHub Action
 Add automated PR reviews to your repository with a simple workflow file using [GitHub Action setup guide](https://qodo-merge-docs.qodo.ai/installation/github/#run-as-a-github-action)

@@ -187,7 +186,7 @@ ___
 
 ## Try It Now
 
-Try PR-Agent instantly on _your public GitHub repository_. Just mention `@CodiumAI-Agent` and add the desired command in any PR comment. The agent will generate a response based on your command.
+Try the Claude Sonnet powered PR-Agent instantly on _your public GitHub repository_. Just mention `@CodiumAI-Agent` and add the desired command in any PR comment. The agent will generate a response based on your command.
 For example, add a comment to any pull request with the following text:
 
 ```

@@ -1,83 +0,0 @@
-# Auto-approval 💎
-
-`Supported Git Platforms: GitHub, GitLab, Bitbucket`
-
-Under specific conditions, Qodo Merge can auto-approve a PR when a manual comment is invoked, or when the PR meets certain criteria.
-
-**To ensure safety, the auto-approval feature is disabled by default.**
-To enable auto-approval features, you need to actively set one or both of the following options in a pre-defined _configuration file_:
-
-```toml
-[config]
-enable_comment_approval = true # For approval via comments
-enable_auto_approval = true # For criteria-based auto-approval
-```
-
-!!! note "Notes"
-- These flags above cannot be set with a command line argument, only in the configuration file, committed to the repository.
-- Enabling auto-approval must be a deliberate decision by the repository owner.
-
-## **Approval by commenting**
-
-To enable approval by commenting, set in the configuration file:
-
-```toml
-[config]
-enable_comment_approval = true
-```
-
-After enabling, by commenting on a PR:
-
-```
-/review auto_approve
-```
-
-Qodo Merge will approve the PR and add a comment with the reason for the approval.
-
-## **Auto-approval when the PR meets certain criteria**
-
-To enable auto-approval based on specific criteria, first, you need to enable the top-level flag:
-
-```toml
-[config]
-enable_auto_approval = true
-```
-
-There are two possible paths leading to this auto-approval - one via the `review` tool, and one via the `improve` tool. Each tool can independently trigger auto-approval.
-
-### Auto-approval via the `review` tool
-
-- **Review effort score criteria**
-
-```toml
-[config]
-enable_auto_approval = true
-auto_approve_for_low_review_effort = X # X is a number between 1 and 5
-```
-
-When the [review effort score](https://www.qodo.ai/images/pr_agent/review3.png) is lower than or equal to X, the PR will be auto-approved (unless ticket compliance is enabled and fails, see below).
-
-- **Ticket compliance criteria**
-
-```toml
-[config]
-enable_auto_approval = true
-ensure_ticket_compliance = true # Default is false
-```
-
-If `ensure_ticket_compliance` is set to `true`, auto-approval will be disabled if no ticket is linked to the PR, or if the PR is not fully compliant with a linked ticket. This ensures that PRs are only auto-approved if their associated tickets are properly resolved.
-
-You can also prevent auto-approval if the PR exceeds the ticket's scope (see [here](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/#configuration-options)).
-
-
-### Auto-approval via the `improve` tool
-
-PRs can be auto-approved when the `improve` tool doesn't find code suggestions.
-To enable this feature, set the following in the configuration file:
-
-```toml
-[config]
-enable_auto_approval = true
-auto_approve_for_no_suggestions = true
-```
-
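
The auto-approval page deleted in this hunk spells out how its flags interact. The Python sketch below restates those rules as two predicates, one per path. It is only an illustration under stated assumptions: the `AutoApprovalConfig` dataclass and both function names are hypothetical, and the review effort score and ticket-compliance result are assumed to be computed elsewhere and passed in. It is not Qodo Merge's implementation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AutoApprovalConfig:
    """Hypothetical mirror of the [config] keys listed on the deleted page."""
    enable_auto_approval: bool = False
    auto_approve_for_low_review_effort: Optional[int] = None  # X, between 1 and 5
    ensure_ticket_compliance: bool = False
    auto_approve_for_no_suggestions: bool = False


def approve_via_review(cfg: AutoApprovalConfig, review_effort: int,
                       ticket_linked: bool, fully_compliant: bool) -> bool:
    """Review path: approve when the effort score is <= X, unless ticket
    compliance is enforced and the PR has no ticket or is not fully compliant."""
    if not cfg.enable_auto_approval or cfg.auto_approve_for_low_review_effort is None:
        return False
    if review_effort > cfg.auto_approve_for_low_review_effort:
        return False
    if cfg.ensure_ticket_compliance and not (ticket_linked and fully_compliant):
        return False
    return True


def approve_via_improve(cfg: AutoApprovalConfig, num_suggestions: int) -> bool:
    """Improve path: approve only when the improve tool found no suggestions."""
    return (cfg.enable_auto_approval
            and cfg.auto_approve_for_no_suggestions
            and num_suggestions == 0)
```

Keeping the two paths as separate predicates mirrors the page's statement that each tool can independently trigger auto-approval, and both return `False` unless the top-level `enable_auto_approval` flag is set.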

@@ -1,8 +1,3 @@
-# Code Validation 💎
-
-`Supported Git Platforms: GitHub, GitLab, Bitbucket`
-
-
 ## Introduction
 
 The Git environment usually represents the final stage before code enters production. Hence, Detecting bugs and issues during the review process is critical.

@@ -1,8 +1,5 @@
 
-`Supported Git Platforms: GitHub, GitLab, Bitbucket`
+## Overview - PR Compression Strategy
 
-
-## Overview
-
 There are two scenarios:
 

@@ -1,5 +1,4 @@
-
-`Supported Git Platforms: GitHub, GitLab, Bitbucket`
+## TL;DR
 
 Qodo Merge uses an **asymmetric and dynamic context strategy** to improve AI analysis of code changes in pull requests.
 It provides more context before changes than after, and dynamically adjusts the context based on code structure (e.g., enclosing functions or classes).
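
The dynamic context strategy in this hunk gives more context before a change than after it and adjusts that context to the enclosing function or class. A minimal Python sketch of the idea follows, assuming Python-style `def`/`class` lines mark enclosing structures; the `asymmetric_context` helper and its `max_before`/`after` defaults are hypothetical, not Qodo Merge's actual parameters.

```python
import re
from typing import List, Tuple

# Hypothetical marker for an enclosing structure (Python-style def/class).
ENCLOSING = re.compile(r"^\s*(async\s+def|def|class)\s")


def asymmetric_context(lines: List[str], start: int, end: int,
                       max_before: int = 8, after: int = 1) -> Tuple[int, int]:
    """Return a 0-based inclusive (first, last) window around changed lines start..end.

    Search upward, at most max_before lines, for an enclosing def/class and
    start the window there; otherwise fall back to the full max_before budget.
    Below the change, add only `after` lines.
    """
    lower_bound = max(0, start - max_before)
    first = lower_bound
    for i in range(start, lower_bound - 1, -1):
        if ENCLOSING.match(lines[i]):
            first = i
            break
    last = min(len(lines) - 1, end + after)
    return first, last
```

If no enclosing definition is found within `max_before` lines, the window falls back to that fixed budget, which keeps the added context bounded.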

|

|||||||

@ -39,22 +39,10 @@ By understanding the reasoning and intent behind modifications, the LLM can offe

|

|||||||

Similarly to the `describe` tool, the `review` tool will use the ticket content to provide additional context for the code changes.

|

Similarly to the `describe` tool, the `review` tool will use the ticket content to provide additional context for the code changes.

|

||||||

|

|

||||||

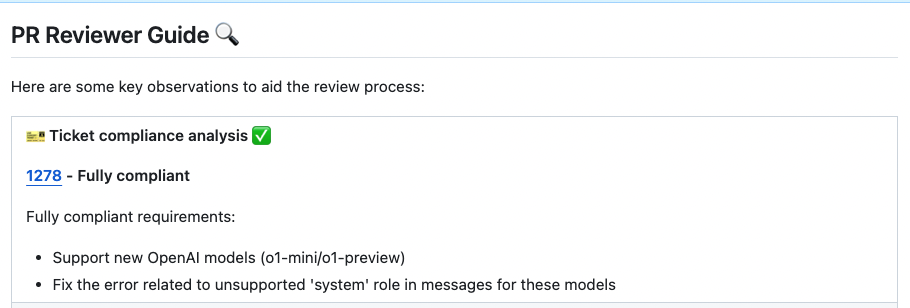

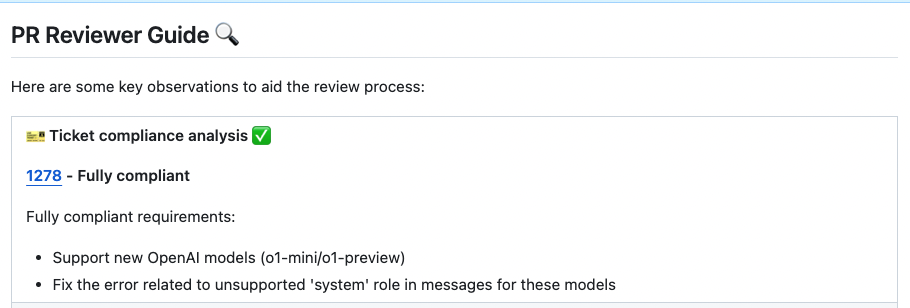

In addition, this feature will evaluate how well a Pull Request (PR) adheres to its original purpose/intent as defined by the associated ticket or issue mentioned in the PR description.

|

In addition, this feature will evaluate how well a Pull Request (PR) adheres to its original purpose/intent as defined by the associated ticket or issue mentioned in the PR description.

|

||||||

Each ticket will be assigned a label (Compliance/Alignment level), Indicates the degree to which the PR fulfills its original purpose:

|

Each ticket will be assigned a label (Compliance/Alignment level), Indicates the degree to which the PR fulfills its original purpose, Options: Fully compliant, Partially compliant or Not compliant.

|

||||||

|

|

||||||

- Fully Compliant

|

|

||||||

- Partially Compliant

|

|

||||||

- Not Compliant

|

|

||||||

- PR Code Verified

|

|

||||||

|

|

||||||

{width=768}

|

{width=768}

|

||||||

|

|

||||||

A `PR Code Verified` label indicates the PR code meets ticket requirements, but requires additional manual testing beyond the code scope. For example - validating UI display across different environments (Mac, Windows, mobile, etc.).

|

|

||||||

|

|

||||||

|

|

||||||

#### Configuration options

|

|

||||||

|

|

||||||

-

|

|

||||||

|

|

||||||

By default, the tool will automatically validate if the PR complies with the referenced ticket.

|

By default, the tool will automatically validate if the PR complies with the referenced ticket.

|

||||||

If you want to disable this feedback, add the following line to your configuration file:

|

If you want to disable this feedback, add the following line to your configuration file:

|

||||||

|

|

||||||

@@ -63,17 +51,6 @@ A `PR Code Verified` label indicates the PR code meets ticket requirements, but
 require_ticket_analysis_review=false
 ```
 
--
-
-If you set:
-```toml
-[pr_reviewer]
-check_pr_additional_content=true
-```
-(default: `false`)
-
-the `review` tool will also validate that the PR code doesn't contain any additional content that is not related to the ticket. If it does, the PR will be labeled at best as `PR Code Verified`, and the `review` tool will provide a comment with the additional unrelated content found in the PR code.
-
 ## GitHub Issues Integration
 
 Qodo Merge will automatically recognize GitHub issues mentioned in the PR description and fetch the issue content.
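
The deleted `check_pr_additional_content` text says that, when unrelated content is found, the PR is labeled at best as `PR Code Verified`. One way to read that rule is sketched below in Python with a hypothetical helper name; it assumes only a `Fully Compliant` label is lowered, since the page does not say how the other labels are affected.

```python
from typing import List, Tuple

FULLY_COMPLIANT = "Fully Compliant"
PR_CODE_VERIFIED = "PR Code Verified"


def cap_label(label: str, unrelated_content: List[str],
              check_pr_additional_content: bool = False) -> Tuple[str, List[str]]:
    """Return the (possibly lowered) label and the unrelated content to report."""
    # The check is opt-in (default: false), so without it nothing changes.
    if not check_pr_additional_content or not unrelated_content:
        return label, []
    # Assumption: only the top label is lowered to "PR Code Verified".
    if label == FULLY_COMPLIANT:
        label = PR_CODE_VERIFIED
    # The unrelated content would be listed in the review comment.
    return label, list(unrelated_content)
```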

@@ -392,7 +369,7 @@ To integrate with Jira, you can link your PR to a ticket using either of these m
 
 **Method 1: Description Reference:**
 
-Include a ticket reference in your PR description, using either the complete URL format `https://<JIRA_ORG>.atlassian.net/browse/ISSUE-123` or the shortened ticket ID `ISSUE-123` (without prefix or suffix for the shortened ID).
+Include a ticket reference in your PR description using either the complete URL format https://<JIRA_ORG>.atlassian.net/browse/ISSUE-123 or the shortened ticket ID ISSUE-123.
 
 **Method 2: Branch Name Detection:**
 

@@ -405,7 +382,6 @@ Name your branch with the ticket ID as a prefix (e.g., `ISSUE-123-feature-descri
 [jira]
 jira_base_url = "https://<JIRA_ORG>.atlassian.net"
 ```
-Where `<JIRA_ORG>` is your Jira organization identifier (e.g., `mycompany` for `https://mycompany.atlassian.net`).
 
 ## Linear Integration 💎
 
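
The two Jira linking methods in these hunks (a full `https://<JIRA_ORG>.atlassian.net/browse/ISSUE-123` URL or a bare `ISSUE-123` key in the PR description, or an `ISSUE-123-...` branch-name prefix) can be illustrated with a few regular expressions. This is a sketch, not Qodo Merge's detection logic: the patterns assume keys made of an uppercase project key, a hyphen, and a number, and all function names are hypothetical. `ticket_url` shows how a key combines with the `jira_base_url` setting.

```python
import re
from typing import List, Optional

# Assumed key shape: PROJECT-123 (uppercase project key, hyphen, number).
URL_RE = re.compile(r"https://[\w-]+\.atlassian\.net/browse/([A-Z][A-Z0-9]+-\d+)")
# A bare key with no prefix or suffix attached (not part of a URL or a longer word).
KEY_RE = re.compile(r"(?<![\w/-])([A-Z][A-Z0-9]+-\d+)(?![\w-])")
# Branch names must start with the key, e.g. ISSUE-123-feature-description.
BRANCH_RE = re.compile(r"^([A-Z][A-Z0-9]+-\d+)(?:-|$)")


def tickets_from_description(text: str) -> List[str]:
    """Collect keys from full URLs first, then bare keys, without duplicates."""
    found = URL_RE.findall(text) + KEY_RE.findall(text)
    return list(dict.fromkeys(found))


def ticket_from_branch(branch: str) -> Optional[str]:
    match = BRANCH_RE.match(branch)
    return match.group(1) if match else None


def ticket_url(jira_base_url: str, key: str) -> str:
    return f"{jira_base_url.rstrip('/')}/browse/{key}"
```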

@@ -1,6 +1,4 @@
-# Impact Evaluation 💎
+# Overview - Impact Evaluation 💎
 
-`Supported Git Platforms: GitHub, GitLab, Bitbucket`
-
 Demonstrating the return on investment (ROI) of AI-powered initiatives is crucial for modern organizations.
 To address this need, Qodo Merge has developed an AI impact measurement tools and metrics, providing advanced analytics to help businesses quantify the tangible benefits of AI adoption in their PR review process.

@@ -2,7 +2,6 @@
 
 Qodo Merge utilizes a variety of core abilities to provide a comprehensive and efficient code review experience. These abilities include:
 
-- [Auto approval](https://qodo-merge-docs.qodo.ai/core-abilities/auto_approval/)
 - [Auto best practices](https://qodo-merge-docs.qodo.ai/core-abilities/auto_best_practices/)
 - [Chat on code suggestions](https://qodo-merge-docs.qodo.ai/core-abilities/chat_on_code_suggestions/)
 - [Code validation](https://qodo-merge-docs.qodo.ai/core-abilities/code_validation/)

@@ -1,4 +1,4 @@
-# Interactivity 💎
+# Interactivity
 
 `Supported Git Platforms: GitHub, GitLab`
 

@@ -1,6 +1,4 @@
-# Local and global metadata injection with multi-stage analysis
+## Local and global metadata injection with multi-stage analysis
 
-`Supported Git Platforms: GitHub, GitLab, Bitbucket`
-
 1\.
 Qodo Merge initially retrieves for each PR the following data:

@@ -1,4 +1,4 @@
-`Supported Git Platforms: GitHub, GitLab, Bitbucket`
+## TL;DR
 
 Qodo Merge implements a **self-reflection** process where the AI model reflects, scores, and re-ranks its own suggestions, eliminating irrelevant or incorrect ones.
 This approach improves the quality and relevance of suggestions, saving users time and enhancing their experience.
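
The self-reflection step in this hunk has the model score its own suggestions, then drop and re-rank them. Assuming each suggestion already carries a numeric score from that reflection pass (the `Suggestion` type, the score range, and the `min_score` cutoff are hypothetical), the filtering and re-ranking reduce to a short Python sketch:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Suggestion:
    summary: str
    score: int  # hypothetical score assigned by the reflection pass


def rerank(suggestions: List[Suggestion], min_score: int = 1) -> List[Suggestion]:
    """Drop suggestions scored below min_score, then sort the rest best-first."""
    kept = [s for s in suggestions if s.score >= min_score]
    return sorted(kept, key=lambda s: s.score, reverse=True)
```

Python's `sorted` is stable, so suggestions with equal scores keep their original relative order.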

@@ -1,14 +1,11 @@
-# Static Code Analysis 💎
+## Overview - Static Code Analysis 💎
 
-` Supported Git Platforms: GitHub, GitLab, Bitbucket`
-
-
 By combining static code analysis with LLM capabilities, Qodo Merge can provide a comprehensive analysis of the PR code changes on a component level.
 
 It scans the PR code changes, finds all the code components (methods, functions, classes) that changed, and enables to interactively generate tests, docs, code suggestions and similar code search for each component.
 
 !!! note "Language that are currently supported:"
-Python, Java, C++, JavaScript, TypeScript, C#, Go.
+Python, Java, C++, JavaScript, TypeScript, C#.
 
 ## Capabilities
 
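
The static code analysis page says the tool finds every method, function, and class touched by a PR. For Python sources only, that component-finding step can be approximated with the standard-library `ast` module. The sketch assumes Python 3.8+ (for `end_lineno`) and 1-based changed line numbers taken from the diff; the function name is hypothetical, and it does not reflect how Qodo Merge handles the other listed languages.

```python
import ast
from typing import List, Set, Tuple


def changed_components(source: str, changed_lines: Set[int]) -> List[Tuple[str, str]]:
    """Return (kind, qualified_name) for each function or class whose line span
    overlaps at least one changed line (1-based, as in a unified diff)."""
    tree = ast.parse(source)
    result: List[Tuple[str, str]] = []

    def visit(node: ast.AST, prefix: str) -> None:
        for child in ast.iter_child_nodes(node):
            if isinstance(child, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
                name = f"{prefix}{child.name}"
                span = range(child.lineno, child.end_lineno + 1)
                if any(line in span for line in changed_lines):
                    kind = "class" if isinstance(child, ast.ClassDef) else "function"
                    result.append((kind, name))
                # Nested definitions get a dotted, qualified name (e.g. A.m).
                visit(child, name + ".")
            else:
                # Definitions can also sit inside if/try/with blocks.
                visit(child, prefix)

    visit(tree, "")
    return result
```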

@@ -39,8 +39,6 @@ GITEA__PERSONAL_ACCESS_TOKEN=<personal_access_token>
 GITEA__WEBHOOK_SECRET=<webhook_secret>
 GITEA__URL=https://gitea.com # Or self host
 OPENAI__KEY=<your_openai_api_key>
-GITEA__SKIP_SSL_VERIFICATION=false # or true
-GITEA__SSL_CA_CERT=/path/to/cacert.pem
 ```
 
 8. Create a webhook in your Gitea project. Set the URL to `http[s]://<PR_AGENT_HOSTNAME>/api/v1/gitea_webhooks`, the secret token to the generated secret from step 3, and enable the triggers `push`, `comments` and `merge request events`.
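
Step 8 pairs the webhook with the `GITEA__WEBHOOK_SECRET` value, which a receiving server would typically use to authenticate incoming requests. The sketch below assumes Gitea sends a hex-encoded HMAC-SHA256 of the raw request body in its `X-Gitea-Signature` header; check this against the Gitea webhook documentation for your version. The function name is hypothetical and this is not PR-Agent's handler.

```python
import hashlib
import hmac


def verify_gitea_signature(secret: str, body: bytes, signature_header: str) -> bool:
    """Return True if signature_header matches HMAC-SHA256(secret, body) in hex."""
    expected = hmac.new(secret.encode("utf-8"), body, hashlib.sha256).hexdigest()
    received = signature_header.strip().lower()
    # compare_digest performs a constant-time comparison of the two byte strings.
    return hmac.compare_digest(expected.encode("ascii"), received.encode("utf-8"))
```

The raw body must be hashed exactly as received; re-serializing parsed JSON can change whitespace or key order and break the comparison.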

@@ -27,9 +27,7 @@ Qodo Merge for GitHub cloud is available for installation through the [GitHub Ma
 
 ### GitHub Enterprise Server
 
-To use Qodo Merge on your private GitHub Enterprise Server, you will need to [contact](https://www.qodo.ai/contact/#pricing) Qodo for starting an Enterprise trial.
+To use Qodo Merge application on your private GitHub Enterprise Server, you will need to [contact](https://www.qodo.ai/contact/#pricing) Qodo for starting an Enterprise trial.
 
-(Note: The marketplace app is not compatible with GitHub Enterprise Server. Installation requires creating a private GitHub App instead.)
-
 ### GitHub Open Source Projects
 

@@ -2,231 +2,200 @@
 
 ## Methodology
 
-Qodo Merge PR Benchmark evaluates and compares the performance of Large Language Models (LLMs) in analyzing pull request code and providing meaningful code suggestions.
+Qodo Merge PR Benchmark evaluates and compares the performance of two Large Language Models (LLMs) in analyzing pull request code and providing meaningful code suggestions.
 Our diverse dataset comprises of 400 pull requests from over 100 repositories, spanning various programming languages and frameworks to reflect real-world scenarios.
 
-- For each pull request, we have pre-generated suggestions from [11](https://qodo-merge-docs.qodo.ai/pr_benchmark/#models-used-for-generating-the-benchmark-baseline) different top-performing models using the Qodo Merge `improve` tool. The prompt for response generation can be found [here](https://github.com/qodo-ai/pr-agent/blob/main/pr_agent/settings/code_suggestions/pr_code_suggestions_prompts_not_decoupled.toml).
+- For each pull request, two distinct LLMs process the same prompt using the Qodo Merge `improve` tool, each generating two sets of responses. The prompt for response generation can be found [here](https://github.com/qodo-ai/pr-agent/blob/main/pr_agent/settings/code_suggestions/pr_code_suggestions_prompts_not_decoupled.toml).
 
-- To benchmark a model, we generate its suggestions for the same pull requests and ask a high-performing judge model to **rank** the new model's output against the 11 pre-generated baseline suggestions. We utilize OpenAI's `o3` model as the judge, though other models have yielded consistent results. The prompt for this ranking judgment is available [here](https://github.com/Codium-ai/pr-agent-settings/tree/main/benchmark).
+- Subsequently, a high-performing third model (an AI judge) evaluates the responses from the initial two models to determine the superior one. We utilize OpenAI's `o3` model as the judge, though other models have yielded consistent results. The prompt for this comparative judgment is available [here](https://github.com/Codium-ai/pr-agent-settings/tree/main/benchmark).
 
-- We aggregate ranking outcomes across all pull requests, calculating performance metrics for the evaluated model. We also analyze the qualitative feedback from the judge to identify the model's comparative strengths and weaknesses against the established baselines.
+- We aggregate comparison outcomes across all the pull requests, calculating the win rate for each model. We also analyze the qualitative feedback (the "why" explanations from the judge) to identify each model's comparative strengths and weaknesses.
 This approach provides not just a quantitative score but also a detailed analysis of each model's strengths and weaknesses.
 
+- For each model we build a "Model Card", comparing it against others. To ensure full transparency and enable community scrutiny, we also share the raw code suggestions generated by each model, and the judge's specific feedback. See example for the full output [here](https://github.com/Codium-ai/pr-agent-settings/blob/main/benchmark/sonnet_37_vs_gemini-2.5-pro-preview-05-06.md)

 
-[//]: # (Note that this benchmark focuses on quality: the ability of an LLM to process complex pull request with multiple files and nuanced task to produce high-quality code suggestions.)
+Note that this benchmark focuses on quality: the ability of an LLM to process complex pull request with multiple files and nuanced task to produce high-quality code suggestions.
+Other factors like speed, cost, and availability, while also relevant for model selection, are outside this benchmark's scope.
 
-[//]: # (Other factors like speed, cost, and availability, while also relevant for model selection, are outside this benchmark's scope. We do specify the thinking budget used by each model, which can be a factor in the model's performance.)
+## TL;DR
 
-[//]: # ()
+Here's a summary of the win rates based on the benchmark:
 
-## Results
+[//]: # (| Model A | Model B | Model A Win Rate | Model B Win Rate |)
 
+[//]: # (|:-------------------------------|:-------------------------------|:----------------:|:----------------:|)
 
+[//]: # (| Gemini-2.5-pro-preview-05-06 | GPT-4.1 | 70.4% | 29.6% |)
 
+[//]: # (| Gemini-2.5-pro-preview-05-06 | Sonnet 3.7 | 78.1% | 21.9% |)
 
+[//]: # (| GPT-4.1 | Sonnet 3.7 | 61.0% | 39.0% |)
 

 <table>
 <thead>
 <tr>
-<th style="text-align:left;">Model Name</th>
-<th style="text-align:left;">Version (Date)</th>
-<th style="text-align:left;">Thinking budget tokens</th>
-<th style="text-align:center;">Score</th>
-</tr>
+<th style="text-align:left;">Model A</th>
+<th style="text-align:left;">Model B</th>
+<th style="text-align:center;">Model A Win Rate</th> <th style="text-align:center;">Model B Win Rate</th> </tr>
 </thead>
 <tbody>
 <tr>
-<td style="text-align:left;">o3</td>
-<td style="text-align:left;">2025-04-16</td>
-<td style="text-align:left;">'medium' (<a href="https://ai.google.dev/gemini-api/docs/openai">8000</a>)</td>
-<td style="text-align:center;"><b>62.5</b></td>
-</tr>
+<td style="text-align:left;">Gemini-2.5-pro-preview-05-06</td>
+<td style="text-align:left;">GPT-4.1</td>
+<td style="text-align:center; color: #1E8449;"><b>70.4%</b></td> <td style="text-align:center; color: #D8000C;"><b>29.6%</b></td> </tr>
 <tr>
-<td style="text-align:left;">o4-mini</td>
-<td style="text-align:left;">2025-04-16</td>
-<td style="text-align:left;">'medium' (<a href="https://ai.google.dev/gemini-api/docs/openai">8000</a>)</td>
-<td style="text-align:center;"><b>57.7</b></td>
-</tr>
+<td style="text-align:left;">Gemini-2.5-pro-preview-05-06</td>
+<td style="text-align:left;">Sonnet 3.7</td>
+<td style="text-align:center; color: #1E8449;"><b>78.1%</b></td> <td style="text-align:center; color: #D8000C;"><b>21.9%</b></td> </tr>
 <tr>
-<td style="text-align:left;">Gemini-2.5-pro</td>
-<td style="text-align:left;">2025-06-05</td>
-<td style="text-align:left;">4096</td>
-<td style="text-align:center;"><b>56.3</b></td>
-</tr>
+<td style="text-align:left;">Gemini-2.5-pro-preview-05-06</td>
+<td style="text-align:left;">Gemini-2.5-flash-preview-04-17</td>
+<td style="text-align:center; color: #1E8449;"><b>73.0%</b></td> <td style="text-align:center; color: #D8000C;"><b>27.0%</b></td> </tr>
 <tr>
-<td style="text-align:left;">Gemini-2.5-pro</td>
-<td style="text-align:left;">2025-06-05</td>
-<td style="text-align:left;">1024</td>
-<td style="text-align:center;"><b>44.3</b></td>
-</tr>
+<td style="text-align:left;">Gemini-2.5-flash-preview-04-17</td>
+<td style="text-align:left;">GPT-4.1</td>
+<td style="text-align:center; color: #1E8449;"><b>54.6%</b></td> <td style="text-align:center; color: #D8000C;"><b>45.4%</b></td> </tr>
 <tr>
-<td style="text-align:left;">Claude-4-sonnet</td>
-<td style="text-align:left;">2025-05-14</td>
-<td style="text-align:left;">4096</td>
-<td style="text-align:center;"><b>39.7</b></td>
-</tr>
-<tr>
-<td style="text-align:left;">Claude-4-sonnet</td>
-<td style="text-align:left;">2025-05-14</td>
-<td style="text-align:left;"></td>
-<td style="text-align:center;"><b>39.0</b></td>
-</tr>
-<tr>
-<td style="text-align:left;">Gemini-2.5-flash</td>
-<td style="text-align:left;">2025-04-17</td>
-<td style="text-align:left;"></td>
-<td style="text-align:center;"><b>33.5</b></td>
-</tr>
-<tr>
-<td style="text-align:left;">Claude-3.7-sonnet</td>
-<td style="text-align:left;">2025-02-19</td>
-<td style="text-align:left;"></td>
-<td style="text-align:center;"><b>32.4</b></td>
-</tr>
+<td style="text-align:left;">Gemini-2.5-flash-preview-04-17</td>
+<td style="text-align:left;">Sonnet 3.7</td>
+<td style="text-align:center; color: #1E8449;"><b>60.6%</b></td> <td style="text-align:center; color: #D8000C;"><b>39.4%</b></td> </tr>
 <tr>
 <td style="text-align:left;">GPT-4.1</td>
-<td style="text-align:left;">2025-04-14</td>
-<td style="text-align:left;"></td>
-<td style="text-align:center;"><b>26.5</b></td>
-</tr>
+<td style="text-align:left;">Sonnet 3.7</td>
+<td style="text-align:center; color: #1E8449;"><b>61.0%</b></td> <td style="text-align:center; color: #D8000C;"><b>39.0%</b></td> </tr>
 </tbody>
 </table>

|

|

||||||

## Results Analysis

|

|

||||||

|

|

||||||

### O3

|

## Gemini-2.5-pro-preview-05-06 - Model Card

|

||||||

|

|

||||||

Final score: **62.5**

|

### Comparison against GPT-4.1

|

||||||

|

|

||||||

strengths:

|

{width=768}

|

||||||

|

|

||||||

- **High precision & compliance:** Generally respects task rules (limits, “added lines” scope, YAML schema) and avoids false-positive advice, often returning an empty list when appropriate.

|

#### Analysis Summary

|

||||||

- **Clear, actionable output:** Suggestions are concise, well-explained and include correct before/after patches, so reviewers can apply them directly.

|

|

||||||

- **Good critical-bug detection rate:** Frequently spots compile-breakers or obvious runtime faults (nil / NPE, overflow, race, wrong selector, etc.), putting it at least on par with many peers.

|

|

||||||

- **Consistent formatting:** Produces syntactically valid YAML with correct labels, making automated consumption easy.

|

|

||||||

|

|

||||||

weaknesses:

|

Model 'Gemini-2.5-pro-preview-05-06' is generally more useful thanks to wider and more accurate bug detection and concrete patches, but it sacrifices compliance discipline and sometimes oversteps the task rules. Model 'GPT-4.1' is safer and highly rule-abiding, yet often too timid—missing many genuine issues and providing limited insight. An ideal reviewer would combine 'GPT-4.1’ restraint with 'Gemini-2.5-pro-preview-05-06' thoroughness.

|

||||||

|

|

||||||

- **Narrow coverage:** Tends to stop after 1-2 issues; regularly misses additional critical defects that better answers catch, so it is seldom the top-ranked review.

|

#### Detailed Analysis

|

||||||

- **Occasional inaccuracies:** A few replies introduce new bugs, give partial/duplicate fixes, or (rarely) violate rules (e.g., import suggestions), hurting trust.

|

|

||||||

- **Conservative bias:** Prefers silence over risk; while this keeps precision high, it lowers recall and overall usefulness on larger diffs.

|

|

||||||

- **Little added insight:** Rarely offers broader context, optimisations or holistic improvements, causing it to rank only mid-tier in many comparisons.

|

|

||||||

|

|

||||||

### O4 Mini ('medium' thinking tokens)

Gemini-2.5-pro-preview-05-06 strengths:

Final score: **57.7**

- better_bug_coverage: Detects and explains more critical issues, winning in ~70 % of comparisons and achieving a higher average score.

- actionable_fixes: Supplies clear code snippets, correct language labels, and often multiple coherent suggestions per diff.

- deeper_reasoning: Shows stronger grasp of logic, edge cases, and cross-file implications, leading to broader, high-impact reviews.

strengths:

Gemini-2.5-pro-preview-05-06 weaknesses:

- **Good rule adherence:** Most answers respect the “new-lines only”, 3-suggestion, and YAML-schema limits, and frequently choose the safe empty list when the diff truly adds no critical bug.

- guideline_violations: More prone to over-eager advice: non-critical tweaks, touching unchanged code, suggesting new imports, or minor format errors.

- **Clear, minimal patches:** When the model does spot a defect it usually supplies terse, valid before/after snippets and short, targeted explanations, making fixes easy to read and apply.

- occasional_overreach: Some fixes are speculative or risky, potentially introducing new bugs.

- **Language & domain breadth:** Demonstrates competence across many ecosystems (C/C++, Java, TS/JS, Go, Rust, Python, Bash, Markdown, YAML, SQL, CSS, translation files, etc.) and can detect both compile-time and runtime mistakes.

- redundant_or_duplicate: At times repeats the same point or exceeds the required brevity.

- **Often competitive:** In a sizeable minority of cases the model ties for best or near-best answer, occasionally being the only response to catch a subtle crash or build blocker.

weaknesses:

- **High miss rate:** A large share of examples show the model returning an empty list or only minor advice while other reviewers catch clear, high-impact bugs, indicating weak defect-detection recall.

- **False or harmful fixes:** Several answers introduce new compilation errors, propose out-of-scope changes, or violate explicit rules (e.g., adding imports, version bumps, touching untouched lines), reducing trustworthiness.

- **Shallow coverage:** Even when it identifies one real issue it often stops there, missing additional critical problems found by stronger peers; breadth and depth are inconsistent.

### Gemini-2.5 Pro (4096 thinking tokens)

Final score: **56.3**

strengths:

- **High formatting compliance:** The model almost always produces valid YAML, respects the three-suggestion limit, and supplies clear before/after code snippets and short rationales.

- **Good “first-bug” detection:** It frequently notices the single most obvious regression (crash, compile error, nil/NPE risk, wrong path, etc.) and gives a minimal, correct patch, often judged “on par” with other solid answers.

- **Clear, concise writing:** Explanations are brief yet understandable for reviewers; fixes are scoped to the changed lines and rarely include extraneous context.

- **Low rate of harmful fixes:** Truly dangerous or build-breaking advice is rare; most mistakes are omissions rather than wrong code.

weaknesses:

- **Limited breadth of review:** The model regularly stops after the first or second issue, missing additional critical problems that stronger answers surface, so it is often out-ranked by more comprehensive peers.

- **Occasional guideline violations:** A noticeable minority of answers touch unchanged lines, exceed the 3-item cap, suggest adding imports, or drop the required YAML wrapper, leading to automatic downgrades.

- **False positives / speculative fixes:** In several cases it flags non-issues (style, performance, redundant code) or supplies debatable “improvements”, lowering precision and sometimes breaching the “critical bugs only” rule.

- **Inconsistent error coverage:** For certain domains (build scripts, schema files, test code) it either returns an empty list when real regressions exist or proposes cosmetic edits, indicating gaps in specialised knowledge.

### Claude-4 Sonnet (4096 thinking tokens)

Final score: **39.7**

strengths:

- **High guideline & format compliance:** Almost always returns valid YAML, keeps ≤ 3 suggestions, avoids forbidden import/boiler-plate changes and provides clear before/after snippets.

- **Good pinpoint accuracy on single issues:** Frequently spots at least one real critical bug and proposes a concise, technically correct fix that compiles/runs.

- **Clarity & brevity of patches:** Explanations are short, actionable, and focused on changed lines, making the advice easy for reviewers to apply.

weaknesses:

- **Low coverage / recall:** Regularly surfaces only one minor issue (or none) while missing other, often more severe, problems caught by peer models.

- **High “empty-list” rate:** In many diffs the model returns no suggestions even when clear critical bugs exist, offering zero reviewer value.

- **Occasional incorrect or harmful fixes:** A non-trivial number of suggestions are speculative, contradict code intent, or would break compilation/runtime; sometimes duplicates or contradicts itself.

- **Inconsistent severity labelling & duplication:** Repeats the same point in multiple slots, marks cosmetic edits as “critical”, or leaves `improved_code` identical to original.

### Claude-4 Sonnet

### Comparison against Sonnet 3.7

Final score: **39.0**

{width=768}

strengths:

#### Analysis Summary

- **Consistently well-formatted & rule-compliant output:** Almost every answer follows the required YAML schema, keeps within the 3-suggestion limit, and returns an empty list when no issues are found, showing good instruction following.

Model 'Gemini-2.5-pro-preview-05-06' is the stronger reviewer: it more frequently identifies genuine, high-impact bugs and provides well-formed, actionable fixes. Model 'Sonnet 3.7' is safer against false positives and tends to be concise but often misses important defects or offers low-value or incorrect suggestions.

- **Actionable, code-level patches:** When it does spot a defect the model usually supplies clear, minimal diffs or replacement snippets that compile / run, making the fix easy to apply.

See raw results [here](https://github.com/Codium-ai/pr-agent-settings/blob/main/benchmark/sonnet_37_vs_gemini-2.5-pro-preview-05-06.md)

- **Decent hit-rate on “obvious” bugs:** The model reliably catches the most blatant syntax errors, null-checks, enum / cast problems, and other first-order issues, so it often ties or slightly beats weaker baseline replies.

weaknesses:

- **Shallow coverage:** It frequently stops after one easy bug and overlooks additional, equally-critical problems that stronger reviewers find, leaving significant risks unaddressed.

- **False positives & harmful fixes:** In a noticeable minority of cases it misdiagnoses code, suggests changes that break compilation or behaviour, or flags non-issues, sometimes making its output worse than doing nothing.

- **Drifts into non-critical or out-of-scope advice:** The model regularly proposes style tweaks, documentation edits, or changes to unchanged lines, violating the “critical new-code only” requirement.

### Gemini-2.5 Flash

#### Detailed Analysis

strengths:

Gemini-2.5-pro-preview-05-06 strengths:

- **High precision / low false-positive rate:** The model often stays silent or gives a single, well-justified fix, so when it does speak the suggestion is usually correct and seldom touches unchanged lines, keeping guideline compliance high.

- higher_accuracy_and_coverage: finds real critical bugs and supplies actionable patches in most examples (better in 78 % of cases).

- **Good guideline awareness:** YAML structure is consistently valid; suggestions rarely exceed the 3-item limit and generally restrict themselves to newly-added lines.

- guideline_awareness: usually respects new-lines-only scope, ≤3 suggestions, proper YAML, and stays silent when no issues exist.

- **Clear, concise patches:** When a defect is found, the model produces short rationales and tidy “improved_code” blocks that reviewers can apply directly.

- detailed_reasoning_and_patches: explanations tie directly to the diff and fixes are concrete, often catching multiple related defects that 'Sonnet 3.7' overlooks.

- **Risk-averse behaviour pays off in “no-bug” PRs:** In examples where the diff truly contained no critical issue, the model’s empty output ranked above peers that offered speculative or stylistic advice.

weaknesses:

Gemini-2.5-pro-preview-05-06 weaknesses:

- **Very low recall / shallow coverage:** In a large majority of cases it gives 0-1 suggestions and misses other evident, critical bugs highlighted by peer models, leading to inferior rankings.

- occasional_rule_violations: sometimes proposes new imports, package-version changes, or edits outside the added lines.

- **Occasional incorrect or harmful fixes:** A noticeable subset of answers propose changes that break functionality or misunderstand the code (e.g. bad constant, wrong header logic, speculative rollbacks).

- overzealous_suggestions: may add speculative or stylistic fixes that exceed the “critical” scope, or mis-label severity.

- **Non-actionable placeholders:** Some “improved_code” sections contain comments or “…” rather than real patches, reducing practical value.

- sporadic_technical_slips: a few patches contain minor coding errors, oversized snippets, or duplicate/contradicting advice.

### GPT-4.1

Final score: **26.5**

## GPT-4.1 - Model Card

strengths:

### Comparison against Sonnet 3.7

- **Consistent format & guideline obedience:** Output is almost always valid YAML, within the 3-suggestion limit, and rarely touches lines not prefixed with “+”.

{width=768}

- **Low false-positive rate:** When no real defect exists, the model correctly returns an empty list instead of inventing speculative fixes, avoiding the “noise” many baseline answers add.

- **Clear, concise patches when it does act:** In the minority of cases where it detects a bug (e.g., ex-13, 46, 212), the fix is usually correct, minimal, and easy to apply.

weaknesses:

#### Analysis Summary

- **Very low recall / coverage:** In a large majority of examples it outputs an empty list or only 1 trivial suggestion while obvious critical issues remain unfixed; it systematically misses circular bugs, null-checks, schema errors, etc.

Model 'GPT-4.1' is safer and more compliant, preferring silence over speculation, which yields fewer rule breaches and false positives but misses some real bugs.

- **Shallow analysis:** Even when it finds one problem it seldom looks deeper, so more severe or additional bugs in the same diff are left unaddressed.

Model 'Sonnet 3.7' is more adventurous and often uncovers important issues that 'GPT-4.1' ignores, yet its aggressive style leads to frequent guideline violations and a higher proportion of incorrect or non-critical advice.

- **Occasional technical inaccuracies:** A noticeable subset of suggestions are wrong (mis-ordered assertions, harmful Bash `set` change, false dangling-reference claims) or carry metadata errors (mis-labeling files as “python”).

- **Repetitive / derivative fixes:** Many outputs duplicate earlier simplistic ideas (e.g., single null-check) without new insight, showing limited reasoning breadth.

See raw results [here](https://github.com/Codium-ai/pr-agent-settings/blob/main/benchmark/gpt-4.1_vs_sonnet_3.7_judge_o3.md)

## Appendix - models used for generating the benchmark baseline

#### Detailed Analysis

- anthropic_sonnet_3.7_v1:0

GPT-4.1 strengths:

- claude-4-opus-20250514

- Strong guideline adherence: usually stays strictly on `+` lines, avoids non-critical or stylistic advice, and rarely suggests forbidden imports; often outputs an empty list when no real bug exists.

- claude-4-sonnet-20250514

- Lower false-positive rate: suggestions are more accurate and seldom introduce new bugs; fixes compile more reliably.

- claude-4-sonnet-20250514_thinking_2048

- Good schema discipline: YAML is almost always well-formed and fields are populated correctly.

- gemini-2.5-flash-preview-04-17

- gemini-2.5-pro-preview-05-06

GPT-4.1 weaknesses:

- gemini-2.5-pro-preview-06-05_1024

- Misses bugs: often returns an empty list even when a clear critical issue is present, so coverage is narrower.

- gemini-2.5-pro-preview-06-05_4096

- Sparse feedback: when it does comment, it tends to give fewer suggestions and sometimes lacks depth or completeness.

- gpt-4.1

- Occasional metadata/slip-ups (wrong language tags, overly broad code spans), though less harmful than Sonnet 3.7 errors.

- o3

- o4-mini_medium

### Comparison against Gemini-2.5-pro-preview-05-06

{width=768}

#### Analysis Summary

Model 'Gemini-2.5-pro-preview-05-06' is generally more useful thanks to wider and more accurate bug detection and concrete patches, but it sacrifices compliance discipline and sometimes oversteps the task rules. Model 'GPT-4.1' is safer and highly rule-abiding, yet often too timid, missing many genuine issues and providing limited insight. An ideal reviewer would combine 'GPT-4.1' restraint with 'Gemini-2.5-pro-preview-05-06' thoroughness.

#### Detailed Analysis

GPT-4.1 strengths:

- strict_compliance: Usually sticks to the “critical bugs only / new ‘+’ lines only” rule, so outputs rarely violate task constraints.

- low_risk: Conservative behaviour avoids harmful or speculative fixes; safer when no obvious issue exists.

- concise_formatting: Tends to produce minimal, correctly-structured YAML without extra noise.

GPT-4.1 weaknesses:

- under_detection: Frequently returns an empty list even when real bugs are present, missing them ~70 % of the time.

- shallow_analysis: When it does suggest fixes, coverage is narrow and technical depth is limited, sometimes with wrong language tags or minor format slips.

- occasional_inaccuracy: A few suggestions are unfounded or duplicate, and rare guideline breaches (e.g., import advice) still occur.

## Sonnet 3.7 - Model Card

### Comparison against GPT-4.1

{width=768}

#### Analysis Summary

Model 'GPT-4.1' is safer and more compliant, preferring silence over speculation, which yields fewer rule breaches and false positives but misses some real bugs.

Model 'Sonnet 3.7' is more adventurous and often uncovers important issues that 'GPT-4.1' ignores, yet its aggressive style leads to frequent guideline violations and a higher proportion of incorrect or non-critical advice.

See raw results [here](https://github.com/Codium-ai/pr-agent-settings/blob/main/benchmark/gpt-4.1_vs_sonnet_3.7_judge_o3.md)

#### Detailed Analysis

'Sonnet 3.7' strengths:

- Better bug discovery breadth: more willing to dive into logic and spot critical problems that 'GPT-4.1' overlooks; often supplies multiple, detailed fixes.

- Richer explanations & patches: gives fuller context and, when correct, proposes more functional or user-friendly solutions.

- Generally correct language/context tagging and targeted code snippets.

'Sonnet 3.7' weaknesses:

- Guideline violations: frequently flags non-critical issues, edits untouched code, or recommends adding imports, breaching task rules.

- Higher error rate: suggestions are more speculative and sometimes introduce new defects or duplicate work already done.

- Occasional schema or formatting mistakes (missing list value, duplicated suggestions), reducing reliability.

### Comparison against Gemini-2.5-pro-preview-05-06

{width=768}

#### Analysis Summary

Model 'Gemini-2.5-pro-preview-05-06' is the stronger reviewer: it more frequently identifies genuine, high-impact bugs and provides well-formed, actionable fixes. Model 'Sonnet 3.7' is safer against false positives and tends to be concise but often misses important defects or offers low-value or incorrect suggestions.

See raw results [here](https://github.com/Codium-ai/pr-agent-settings/blob/main/benchmark/sonnet_37_vs_gemini-2.5-pro-preview-05-06.md)

@ -17,4 +17,4 @@ An example result:

{width=750}

!!! note "Languages that are currently supported:"

Python, Java, C++, JavaScript, TypeScript, C#, Go.

Python, Java, C++, JavaScript, TypeScript, C#.

@ -483,6 +483,86 @@ code_suggestions_self_review_text = "... (your text here) ..."

To prevent unauthorized approvals, this configuration defaults to false and cannot be altered through online comments; enabling it requires a direct update to the configuration file and a commit to the repository. This ensures that using the feature requires a deliberate, documented decision by the repository owner.

### Auto-approval

> `💎 feature. Platforms supported: GitHub, GitLab, Bitbucket`

Under specific conditions, Qodo Merge can auto-approve a PR when a specific comment is invoked, or when the PR meets certain criteria.

**To ensure safety, the auto-approval feature is disabled by default.**

To enable auto-approval features, you need to actively set one or both of the following options in a pre-defined _configuration file_:

```toml
[config]
enable_comment_approval = true # For approval via comments
enable_auto_approval = true # For criteria-based auto-approval
```

!!! note "Notes"

- These flags cannot be set with a command-line argument; they can only be set in the configuration file committed to the repository.

- Enabling auto-approval must be a deliberate decision by the repository owner.

1\. **Auto-approval by commenting**

To enable auto-approval by commenting, set in the configuration file:

```toml
[config]
enable_comment_approval = true
```

After enabling, comment on a PR with:

```
/review auto_approve
```

Qodo Merge will then automatically approve the PR and add a comment with the approval.

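The comment flow above relies on `enable_comment_approval` coming from the committed configuration file, never from the comment itself. The Python sketch below illustrates that gating rule under stated assumptions: `PROTECTED_KEYS`, `effective_config` and `can_approve_by_comment` are hypothetical names, and this is not Qodo Merge's actual implementation.

```python
from typing import Any, Dict

# Hypothetical: flags that, per the docs, may only come from the committed config file.
PROTECTED_KEYS = {"enable_comment_approval", "enable_auto_approval"}


def effective_config(repo_file_config: Dict[str, Any],
                     comment_overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Merge per-comment overrides into the repo config, ignoring protected flags."""
    merged = dict(repo_file_config)
    for key, value in comment_overrides.items():
        if key in PROTECTED_KEYS:
            continue  # a PR comment can never switch approval on
        merged[key] = value
    return merged


def can_approve_by_comment(repo_file_config: Dict[str, Any],
                           comment_overrides: Dict[str, Any],
                           comment: str) -> bool:
    """True only for '/review auto_approve' when the committed config enables it."""
    cfg = effective_config(repo_file_config, comment_overrides)
    return comment.strip() == "/review auto_approve" and cfg.get("enable_comment_approval") is True


# A comment that tries to flip the flag itself is still refused.
print(can_approve_by_comment({}, {"enable_comment_approval": True}, "/review auto_approve"))  # False
```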
2\. **Auto-approval when the PR meets certain criteria**

To enable auto-approval based on specific criteria, first enable the top-level flag:

```toml
[config]
enable_auto_approval = true
```

There are several criteria that can be set for auto-approval:

- **Review effort score**

```toml
[config]
enable_auto_approval = true
auto_approve_for_low_review_effort = X # X is a number between 1 and 5
```

When the [review effort score](https://www.qodo.ai/images/pr_agent/review3.png) is lower than or equal to X, the PR will be auto-approved.


___


- **No code suggestions**

```toml
[config]
enable_auto_approval = true
auto_approve_for_no_suggestions = true
```

When no [code suggestions](https://www.qodo.ai/images/pr_agent/code_suggestions_as_comment_closed.png) were found for the PR, the PR will be auto-approved.


___


- **Ticket Compliance**

```toml
[config]
enable_auto_approval = true
ensure_ticket_compliance = true # Default is false
```

If `ensure_ticket_compliance` is set to `true`, auto-approval will be disabled if a ticket is linked to the PR and the ticket is not compliant (e.g., the `review` tool did not mark the PR as fully compliant with the ticket). This ensures that PRs are only auto-approved if their associated tickets are properly resolved.

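Taken together, the criteria above can be read as a small decision function. The Python sketch below is an illustration, not Qodo Merge's code: the class and function names are hypothetical, any single enabled criterion is assumed to be sufficient for approval, and ticket compliance is modeled as a veto as described above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AutoApprovalConfig:
    # Mirrors the [config] keys documented above; defaults keep everything off.
    enable_auto_approval: bool = False
    auto_approve_for_low_review_effort: Optional[int] = None  # X in 1..5; None = unused
    auto_approve_for_no_suggestions: bool = False
    ensure_ticket_compliance: bool = False


@dataclass
class PRFacts:
    # Hypothetical inputs; in practice they would come from the review/improve tools.
    review_effort: int
    num_suggestions: int
    has_linked_ticket: bool
    ticket_fully_compliant: bool


def should_auto_approve(cfg: AutoApprovalConfig, pr: PRFacts) -> bool:
    if not cfg.enable_auto_approval:
        return False  # top-level flag, disabled by default
    # Veto: a linked ticket that is not fully compliant blocks auto-approval.
    if cfg.ensure_ticket_compliance and pr.has_linked_ticket and not pr.ticket_fully_compliant:
        return False
    x = cfg.auto_approve_for_low_review_effort
    if x is not None:
        if not 1 <= x <= 5:
            raise ValueError("auto_approve_for_low_review_effort must be between 1 and 5")
        if pr.review_effort <= x:
            return True  # effort score lower than or equal to X
    # Assumption: criteria are alternatives, so any one of them suffices.
    return cfg.auto_approve_for_no_suggestions and pr.num_suggestions == 0


cfg = AutoApprovalConfig(enable_auto_approval=True,
                         auto_approve_for_low_review_effort=2,
                         ensure_ticket_compliance=True)
low_effort = PRFacts(review_effort=2, num_suggestions=3,
                     has_linked_ticket=False, ticket_fully_compliant=False)
bad_ticket = PRFacts(review_effort=1, num_suggestions=0,
                     has_linked_ticket=True, ticket_fully_compliant=False)
print(should_auto_approve(cfg, low_effort))  # True
print(should_auto_approve(cfg, bad_ticket))  # False: linked ticket is not compliant
```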
### How many code suggestions are generated?


@ -155,7 +155,7 @@ extra_instructions = "..."

- **`ticket compliance`**: Adds a label indicating code compliance level ("Fully compliant" | "PR Code Verified" | "Partially compliant" | "Not compliant") to any GitHub/Jira/Linear ticket linked in the PR. Controlled by the 'require_ticket_labels' flag (default: false). If 'require_no_ticket_labels' is also enabled, PRs without ticket links will receive a "No ticket found" label.

### Auto-blocking PRs from being merged based on the generated labels

### Blocking PRs from merging based on the generated labels

!!! tip ""

@ -25,3 +25,4 @@ It includes information on how to adjust Qodo Merge configurations, define which

- [Patch Extra Lines](./additional_configurations.md#patch-extra-lines)
- [FAQ](https://qodo-merge-docs.qodo.ai/faq/)
- [Qodo Merge Models](./qodo_merge_models)
- [Qodo Merge Endpoints](./qm_endpoints)

369

docs/docs/usage-guide/qm_endpoints.md

Normal file

@ -0,0 +1,369 @@


# Overview

By default, Qodo Merge processes webhooks triggered by events or comments (for example, when a PR is opened) and posts its responses directly on the PR page.

Qodo Merge now features two CLI endpoints that let you invoke its tools and receive responses directly (both as formatted markdown and as raw JSON), rather than having them posted to the PR page:

- **Pull Request Endpoint** - Accepts a GitHub PR URL, along with the desired tool to invoke (**note**: only available on-premises or in single-tenant deployments).
- **Diff Endpoint** - A Git-agnostic option that accepts a comparison of two states, either as a list of “before” and “after” file contents or as a unified diff file, along with the desired tool to invoke.

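For the Diff Endpoint's unified-diff input, one way to produce such a diff from “before” and “after” file contents is Python's standard `difflib` module. This is a minimal sketch: the exact request format the Diff Endpoint expects is not described here, so the snippet only builds the diff text, and `make_unified_diff` is a hypothetical helper name.

```python
import difflib


def make_unified_diff(path: str, before: str, after: str) -> str:
    """Return a unified diff (git-style a/ and b/ prefixes) for one file's two states."""
    # keepends=True preserves newlines so the joined diff is well-formed;
    # inputs should end with a newline to avoid run-on lines in the output.
    diff_lines = difflib.unified_diff(
        before.splitlines(keepends=True),
        after.splitlines(keepends=True),
        fromfile=f"a/{path}",
        tofile=f"b/{path}",
    )
    return "".join(diff_lines)


before = "def add(a, b):\n    return a - b\n"
after = "def add(a, b):\n    return a + b\n"
print(make_unified_diff("calc.py", before, after), end="")
```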
# Setup

## Enabling desired endpoints (for on-prem deployment)

:bulb: Add the following to your Helm chart/secrets file:

Pull Request Endpoint:

```toml
[qm_pull_request_endpoint]
enabled = true
```

Diff Endpoint:

```toml
[qm_diff_endpoint]
enabled = true
```

**Important:** This endpoint can only be enabled through the pod's main secret file, **not** through standard configuration files.

## Access Key

The endpoints require the user to provide an access key in each invocation. Choose one of the following options to obtain such a key.

### Option 1: Endpoint Key (On-Premises / Single Tenant only)

Define an endpoint key in the Helm chart of your pod configuration:

```toml
[qm_pull_request_endpoint]
enabled = true
endpoint_key = "your-secure-key-here"
```

```toml
[qm_diff_endpoint]
enabled = true
endpoint_key = "your-secure-key-here"
```

### Option 2: API Key for Cloud users (Diff Endpoint only)

Generate a long-lived API key by authenticating the user. We offer two different methods to achieve this:

### - Shell script

Download and run the following script: [gen_api_key.sh](https://github.com/qodo-ai/pr-agent/blob/5dfd696c2b1f43e1d620fe17b9dc10c25c2304f9/pr_agent/scripts/qm_endpoint_auth/gen_api_key.sh)

### - npx

1. Install Node.js
2. Run: `npx @qodo/gen login`

Regardless of the method used, follow the instructions in the browser page that opens. Once you have logged in successfully via the website, the script (or `npx` command) will output the generated API key:

```text
✅ Authentication successful! API key saved.
📋 Your API key: ...
```

**Note:** Each login generates a new API key, making any previous ones **obsolete**.

# Available Tools

Both endpoints support the following Qodo Merge tools:

[**Improve**](https://qodo-merge-docs.qodo.ai/tools/improve/) | [**Review**](https://qodo-merge-docs.qodo.ai/tools/review/) | [**Describe**](https://qodo-merge-docs.qodo.ai/tools/describe/) | [**Ask**](https://qodo-merge-docs.qodo.ai/tools/ask/) | [**Add Docs**](https://qodo-merge-docs.qodo.ai/tools/documentation/) | [**Analyze**](https://qodo-merge-docs.qodo.ai/tools/analyze/) | [**Config**](https://qodo-merge-docs.qodo.ai/tools/config/) | [**Generate Labels**](https://qodo-merge-docs.qodo.ai/tools/custom_labels/) | [**Improve Component**](https://qodo-merge-docs.qodo.ai/tools/improve_component/) | [**Test**](https://qodo-merge-docs.qodo.ai/tools/test/) | [**Custom Prompt**](https://qodo-merge-docs.qodo.ai/tools/custom_prompt/)

# How to Run

For all endpoints, pass the access key in the request header as the value of the `X-API-Key` field.

## Pull Request Endpoint

**URL:** `/api/v1/qm_pull_request`

### Request Format

```json
{
  "pr_url": "https://github.com/owner/repo/pull/123",
  "command": "<COMMAND> ARG_1 ARG_2 ..."
}
```

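The request format above can also be assembled with only the Python standard library. In this sketch `build_qm_pull_request` is a hypothetical helper, the server address is a placeholder, and joining the tool name and its arguments with spaces is an assumption based on the `<COMMAND> ARG_1 ARG_2 ...` shape shown above. The request is prepared but not sent.

```python
import json
import urllib.request


def build_qm_pull_request(server: str, api_key: str, pr_url: str,
                          tool: str, *args: str) -> urllib.request.Request:
    """Prepare (without sending) a POST request for the Pull Request Endpoint."""
    # Assumption: the command string is the tool name followed by space-separated args.
    command = " ".join([tool, *args])
    body = json.dumps({"pr_url": pr_url, "command": command}).encode("utf-8")
    return urllib.request.Request(
        url=f"{server.rstrip('/')}/api/v1/qm_pull_request",
        data=body,
        headers={"Content-Type": "application/json", "X-API-Key": api_key},
        method="POST",
    )


req = build_qm_pull_request(
    "https://qodo-merge.example.internal",  # placeholder for <your-server>
    "<your-key>",
    "https://github.com/owner/repo/pull/123",
    "improve",
)
print(req.get_method(), req.full_url)
# Sending it would be: urllib.request.urlopen(req), then reading the response body.
```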
### Usage Examples

### cURL

```bash
curl -X POST "<your-server>/api/v1/qm_pull_request" \
  -H "Content-Type: application/json" \
  -H "X-API-Key: <your-key>" \
  -d '{
    "pr_url": "https://github.com/owner/repo/pull/123",
    "command": "improve"
  }'
```

### Python

```python
import requests
import json

def call_qm_pull_request(pr_url: str, command: str, endpoint_key: str):
    url = "<your-server>/api/v1/qm_pull_request"

    payload = {
        "pr_url": pr_url,
        "command": command
    }