mirror of

https://github.com/qodo-ai/pr-agent.git

synced 2025-07-21 04:50:39 +08:00

Compare commits

2 Commits

hl/fix_azu

...

tr/main_lo

| Author | SHA1 | Date |
|---|---|---|
| | 6e7cf35761 | |
| | 7273c9c0c1 | |

82

README.md


@ -70,47 +70,47 @@ Read more about it [here](https://qodo-merge-docs.qodo.ai/tools/scan_repo_discus

|

||||

|

||||

Supported commands per platform:

|

||||

|

||||

| | | GitHub | GitLab | Bitbucket | Azure DevOps | Gitea |
| ----- | ----- | :------: | :------: | :---------: | :------------: | :-----: |
| TOOLS | [Review](https://qodo-merge-docs.qodo.ai/tools/review/) | ✅ | ✅ | ✅ | ✅ | ✅ |
| | [Describe](https://qodo-merge-docs.qodo.ai/tools/describe/) | ✅ | ✅ | ✅ | ✅ | ✅ |
| | [Improve](https://qodo-merge-docs.qodo.ai/tools/improve/) | ✅ | ✅ | ✅ | ✅ | ✅ |
| | [Ask](https://qodo-merge-docs.qodo.ai/tools/ask/) | ✅ | ✅ | ✅ | ✅ | |
| | ⮑ [Ask on code lines](https://qodo-merge-docs.qodo.ai/tools/ask/#ask-lines) | ✅ | ✅ | | | |
| | [Update CHANGELOG](https://qodo-merge-docs.qodo.ai/tools/update_changelog/) | ✅ | ✅ | ✅ | ✅ | |
| | [Help Docs](https://qodo-merge-docs.qodo.ai/tools/help_docs/?h=auto#auto-approval) | ✅ | ✅ | ✅ | | |
| | [Ticket Context](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/) 💎 | ✅ | ✅ | ✅ | | |
| | [Utilizing Best Practices](https://qodo-merge-docs.qodo.ai/tools/improve/#best-practices) 💎 | ✅ | ✅ | ✅ | | |
| | [PR Chat](https://qodo-merge-docs.qodo.ai/chrome-extension/features/#pr-chat) 💎 | ✅ | | | | |
| | [Suggestion Tracking](https://qodo-merge-docs.qodo.ai/tools/improve/#suggestion-tracking) 💎 | ✅ | ✅ | | | |
| | [CI Feedback](https://qodo-merge-docs.qodo.ai/tools/ci_feedback/) 💎 | ✅ | | | | |
| | [PR Documentation](https://qodo-merge-docs.qodo.ai/tools/documentation/) 💎 | ✅ | ✅ | | | |
| | [Custom Labels](https://qodo-merge-docs.qodo.ai/tools/custom_labels/) 💎 | ✅ | ✅ | | | |
| | [Analyze](https://qodo-merge-docs.qodo.ai/tools/analyze/) 💎 | ✅ | ✅ | | | |
| | [Similar Code](https://qodo-merge-docs.qodo.ai/tools/similar_code/) 💎 | ✅ | | | | |
| | [Custom Prompt](https://qodo-merge-docs.qodo.ai/tools/custom_prompt/) 💎 | ✅ | ✅ | ✅ | | |
| | [Test](https://qodo-merge-docs.qodo.ai/tools/test/) 💎 | ✅ | ✅ | | | |
| | [Implement](https://qodo-merge-docs.qodo.ai/tools/implement/) 💎 | ✅ | ✅ | ✅ | | |
| | [Scan Repo Discussions](https://qodo-merge-docs.qodo.ai/tools/scan_repo_discussions/) 💎 | ✅ | | | | |
| | [Auto-Approve](https://qodo-merge-docs.qodo.ai/tools/improve/?h=auto#auto-approval) 💎 | ✅ | ✅ | ✅ | | |
| | | | | | | |
| USAGE | [CLI](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#local-repo-cli) | ✅ | ✅ | ✅ | ✅ | ✅ |
| | [App / webhook](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#github-app) | ✅ | ✅ | ✅ | ✅ | ✅ |
| | [Tagging bot](https://github.com/Codium-ai/pr-agent#try-it-now) | ✅ | | | | |
| | [Actions](https://qodo-merge-docs.qodo.ai/installation/github/#run-as-a-github-action) | ✅ | ✅ | ✅ | ✅ | |
| | | | | | | |
| CORE | [PR compression](https://qodo-merge-docs.qodo.ai/core-abilities/compression_strategy/) | ✅ | ✅ | ✅ | ✅ | |
| | Adaptive and token-aware file patch fitting | ✅ | ✅ | ✅ | ✅ | |
| | [Multiple models support](https://qodo-merge-docs.qodo.ai/usage-guide/changing_a_model/) | ✅ | ✅ | ✅ | ✅ | |
| | [Local and global metadata](https://qodo-merge-docs.qodo.ai/core-abilities/metadata/) | ✅ | ✅ | ✅ | ✅ | |
| | [Dynamic context](https://qodo-merge-docs.qodo.ai/core-abilities/dynamic_context/) | ✅ | ✅ | ✅ | ✅ | |
| | [Self reflection](https://qodo-merge-docs.qodo.ai/core-abilities/self_reflection/) | ✅ | ✅ | ✅ | ✅ | |
| | [Static code analysis](https://qodo-merge-docs.qodo.ai/core-abilities/static_code_analysis/) 💎 | ✅ | ✅ | | | |
| | [Global and wiki configurations](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/) 💎 | ✅ | ✅ | ✅ | | |
| | [PR interactive actions](https://www.qodo.ai/images/pr_agent/pr-actions.mp4) 💎 | ✅ | ✅ | | | |
| | [Impact Evaluation](https://qodo-merge-docs.qodo.ai/core-abilities/impact_evaluation/) 💎 | ✅ | ✅ | | | |
| | [Code Validation 💎](https://qodo-merge-docs.qodo.ai/core-abilities/code_validation/) | ✅ | ✅ | ✅ | ✅ | |
| | [Auto Best Practices 💎](https://qodo-merge-docs.qodo.ai/core-abilities/auto_best_practices/) | ✅ | | | | |

|

||||

| | | GitHub | GitLab | Bitbucket | Azure DevOps |
| ----- | ----- | :------: | :------: | :---------: | :------------: |
| TOOLS | [Review](https://qodo-merge-docs.qodo.ai/tools/review/) | ✅ | ✅ | ✅ | ✅ |
| | [Describe](https://qodo-merge-docs.qodo.ai/tools/describe/) | ✅ | ✅ | ✅ | ✅ |
| | [Improve](https://qodo-merge-docs.qodo.ai/tools/improve/) | ✅ | ✅ | ✅ | ✅ |
| | [Ask](https://qodo-merge-docs.qodo.ai/tools/ask/) | ✅ | ✅ | ✅ | ✅ |
| | ⮑ [Ask on code lines](https://qodo-merge-docs.qodo.ai/tools/ask/#ask-lines) | ✅ | ✅ | | |
| | [Update CHANGELOG](https://qodo-merge-docs.qodo.ai/tools/update_changelog/) | ✅ | ✅ | ✅ | ✅ |
| | [Help Docs](https://qodo-merge-docs.qodo.ai/tools/help_docs/?h=auto#auto-approval) | ✅ | ✅ | ✅ | |
| | [Ticket Context](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/) 💎 | ✅ | ✅ | ✅ | |
| | [Utilizing Best Practices](https://qodo-merge-docs.qodo.ai/tools/improve/#best-practices) 💎 | ✅ | ✅ | ✅ | |
| | [PR Chat](https://qodo-merge-docs.qodo.ai/chrome-extension/features/#pr-chat) 💎 | ✅ | | | |
| | [Suggestion Tracking](https://qodo-merge-docs.qodo.ai/tools/improve/#suggestion-tracking) 💎 | ✅ | ✅ | | |
| | [CI Feedback](https://qodo-merge-docs.qodo.ai/tools/ci_feedback/) 💎 | ✅ | | | |
| | [PR Documentation](https://qodo-merge-docs.qodo.ai/tools/documentation/) 💎 | ✅ | ✅ | | |
| | [Custom Labels](https://qodo-merge-docs.qodo.ai/tools/custom_labels/) 💎 | ✅ | ✅ | | |
| | [Analyze](https://qodo-merge-docs.qodo.ai/tools/analyze/) 💎 | ✅ | ✅ | | |
| | [Similar Code](https://qodo-merge-docs.qodo.ai/tools/similar_code/) 💎 | ✅ | | | |
| | [Custom Prompt](https://qodo-merge-docs.qodo.ai/tools/custom_prompt/) 💎 | ✅ | ✅ | ✅ | |
| | [Test](https://qodo-merge-docs.qodo.ai/tools/test/) 💎 | ✅ | ✅ | | |
| | [Implement](https://qodo-merge-docs.qodo.ai/tools/implement/) 💎 | ✅ | ✅ | ✅ | |
| | [Scan Repo Discussions](https://qodo-merge-docs.qodo.ai/tools/scan_repo_discussions/) 💎 | ✅ | | | |
| | [Auto-Approve](https://qodo-merge-docs.qodo.ai/tools/improve/?h=auto#auto-approval) 💎 | ✅ | ✅ | ✅ | |
| | | | | | |
| USAGE | [CLI](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#local-repo-cli) | ✅ | ✅ | ✅ | ✅ |
| | [App / webhook](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#github-app) | ✅ | ✅ | ✅ | ✅ |
| | [Tagging bot](https://github.com/Codium-ai/pr-agent#try-it-now) | ✅ | | | |
| | [Actions](https://qodo-merge-docs.qodo.ai/installation/github/#run-as-a-github-action) | ✅ | ✅ | ✅ | ✅ |
| | | | | | |
| CORE | [PR compression](https://qodo-merge-docs.qodo.ai/core-abilities/compression_strategy/) | ✅ | ✅ | ✅ | ✅ |
| | Adaptive and token-aware file patch fitting | ✅ | ✅ | ✅ | ✅ |
| | [Multiple models support](https://qodo-merge-docs.qodo.ai/usage-guide/changing_a_model/) | ✅ | ✅ | ✅ | ✅ |
| | [Local and global metadata](https://qodo-merge-docs.qodo.ai/core-abilities/metadata/) | ✅ | ✅ | ✅ | ✅ |
| | [Dynamic context](https://qodo-merge-docs.qodo.ai/core-abilities/dynamic_context/) | ✅ | ✅ | ✅ | ✅ |
| | [Self reflection](https://qodo-merge-docs.qodo.ai/core-abilities/self_reflection/) | ✅ | ✅ | ✅ | ✅ |
| | [Static code analysis](https://qodo-merge-docs.qodo.ai/core-abilities/static_code_analysis/) 💎 | ✅ | ✅ | | |
| | [Global and wiki configurations](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/) 💎 | ✅ | ✅ | ✅ | |
| | [PR interactive actions](https://www.qodo.ai/images/pr_agent/pr-actions.mp4) 💎 | ✅ | ✅ | | |
| | [Impact Evaluation](https://qodo-merge-docs.qodo.ai/core-abilities/impact_evaluation/) 💎 | ✅ | ✅ | | |
| | [Code Validation 💎](https://qodo-merge-docs.qodo.ai/core-abilities/code_validation/) | ✅ | ✅ | ✅ | ✅ |
| | [Auto Best Practices 💎](https://qodo-merge-docs.qodo.ai/core-abilities/auto_best_practices/) | ✅ | | | |

|

||||

- 💎 means this feature is available only in [Qodo Merge](https://www.qodo.ai/pricing/)

|

||||

|

||||

[//]: # (- Support for additional git providers is described in [here](./docs/Full_environments.md))

|

||||

|

||||

@ -33,11 +33,6 @@ FROM base AS azure_devops_webhook

|

||||

ADD pr_agent pr_agent

|

||||

CMD ["python", "pr_agent/servers/azuredevops_server_webhook.py"]

|

||||

|

||||

FROM base AS gitea_app

|

||||

ADD pr_agent pr_agent

|

||||

CMD ["python", "-m", "gunicorn", "-k", "uvicorn.workers.UvicornWorker", "-c", "pr_agent/servers/gunicorn_config.py","pr_agent.servers.gitea_app:app"]

|

||||

|

||||

|

||||

FROM base AS test

|

||||

ADD requirements-dev.txt .

|

||||

RUN pip install --no-cache-dir -r requirements-dev.txt && rm requirements-dev.txt

|

||||

|

||||

@ -1,55 +0,0 @@

|

||||

# Chat on code suggestions 💎

|

||||

|

||||

`Supported Git Platforms: GitHub, GitLab`

|

||||

|

||||

## Overview

|

||||

|

||||

Qodo Merge implements an orchestrator agent that enables interactive code discussions, listening and responding to comments without requiring explicit tool calls.

|

||||

The orchestrator intelligently analyzes your responses to determine if you want to implement a suggestion, ask a question, or request help, then delegates to the appropriate specialized tool.

|

||||

|

||||

To minimize unnecessary notifications and maintain focused discussions, the orchestrator agent will only respond to comments made directly within the inline code suggestion discussions it has created (`/improve`) or within discussions initiated by the `/implement` command.

|

||||

|

||||

## Getting Started

|

||||

|

||||

### Setup

|

||||

|

||||

Enable interactive code discussions by adding the following to your configuration file (default is `True`):

|

||||

|

||||

```toml

|

||||

[pr_code_suggestions]

|

||||

enable_chat_in_code_suggestions = true

|

||||

```

|

||||

|

||||

|

||||

### Activation

|

||||

|

||||

#### `/improve`

|

||||

|

||||

To obtain dynamic responses, the following steps are required:

|

||||

|

||||

1. Run the `/improve` command (mostly automatic)

|

||||

2. Check the `/improve` recommendation checkboxes (_Apply this suggestion_) to have Qodo Merge generate a new inline code suggestion discussion

|

||||

3. The orchestrator agent will then automatically listen to and reply to comments within the discussion without requiring additional commands

|

||||

|

||||

#### `/implement`

|

||||

|

||||

To obtain dynamic responses, the following steps are required:

|

||||

|

||||

1. Select code lines in the PR diff and run the `/implement` command

|

||||

2. Wait for Qodo Merge to generate a new inline code suggestion

|

||||

3. The orchestrator agent will then automatically listen to and reply to comments within the discussion without requiring additional commands

|

||||

|

||||

|

||||

## Explore the available interaction patterns

|

||||

|

||||

!!! tip "Tip: Direct the agent with keywords"

|

||||

Use "implement" or "apply" for code generation. Use "explain", "why", or "how" for information and help.

|

||||

|

||||

=== "Asking for Details"

|

||||


|

||||

|

||||

=== "Implementing Suggestions"

|

||||


|

||||

|

||||

=== "Providing Additional Help"

|

||||


|

||||

@ -9,9 +9,8 @@ This integration enriches the review process by automatically surfacing relevant

|

||||

|

||||

**Ticket systems supported**:

|

||||

|

||||

- [GitHub](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/#github-issues-integration)

|

||||

- [Jira (💎)](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/#jira-integration)

|

||||

- [Linear (💎)](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/#linear-integration)

|

||||

- GitHub

|

||||

- Jira (💎)

|

||||

|

||||

**Ticket data fetched:**

|

||||

|

||||

@ -76,17 +75,13 @@ The recommended way to authenticate with Jira Cloud is to install the Qodo Merge

|

||||

|

||||

Installation steps:

|

||||

|

||||

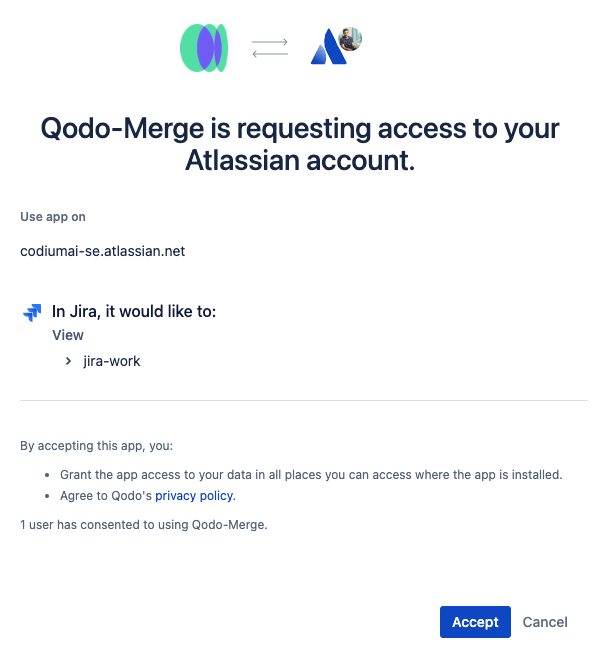

1. Go to the [Qodo Merge integrations page](https://app.qodo.ai/qodo-merge/integrations)

|

||||

|

||||

2. Click on the Connect **Jira Cloud** button to connect the Jira Cloud app

|

||||

|

||||

3. Click the `accept` button.<br>

|

||||

1. Click [here](https://auth.atlassian.com/authorize?audience=api.atlassian.com&client_id=8krKmA4gMD8mM8z24aRCgPCSepZNP1xf&scope=read%3Ajira-work%20offline_access&redirect_uri=https%3A%2F%2Fregister.jira.pr-agent.codium.ai&state=qodomerge&response_type=code&prompt=consent) to install the Qodo Merge app in your Jira Cloud instance, click the `accept` button.<br>

|

||||


|

||||

|

||||

4. After installing the app, you will be redirected to the Qodo Merge registration page, where you will see a success message.<br>

|

||||

2. After installing the app, you will be redirected to the Qodo Merge registration page, where you will see a success message.<br>

|

||||


|

||||

|

||||

5. Now Qodo Merge will be able to fetch Jira ticket context for your PRs.

|

||||

3. Now Qodo Merge will be able to fetch Jira ticket context for your PRs.

|

||||

|

||||

**2) Email/Token Authentication**

|

||||

|

||||

@ -305,45 +300,3 @@ Name your branch with the ticket ID as a prefix (e.g., `ISSUE-123-feature-descri

|

||||

[jira]

|

||||

jira_base_url = "https://<JIRA_ORG>.atlassian.net"

|

||||

```

|

||||

|

||||

## Linear Integration 💎

### Linear App Authentication

The recommended way to authenticate with Linear is to connect the Linear app through the Qodo Merge portal.

Installation steps:

1. Go to the [Qodo Merge integrations page](https://app.qodo.ai/qodo-merge/integrations)

2. Navigate to the **Integrations** tab

3. Click on the **Linear** button to connect the Linear app

4. Follow the authentication flow to authorize Qodo Merge to access your Linear workspace

5. Once connected, Qodo Merge will be able to fetch Linear ticket context for your PRs

### How to link a PR to a Linear ticket

Qodo Merge will automatically detect Linear tickets using either of these methods:

**Method 1: Description Reference:**

Include a ticket reference in your PR description using either:

- The complete Linear ticket URL: `https://linear.app/[ORG_ID]/issue/[TICKET_ID]`
- The shortened ticket ID: `[TICKET_ID]` (e.g., `ABC-123`) - requires `linear_base_url` configuration (see below).

**Method 2: Branch Name Detection:**

Name your branch with the ticket ID as a prefix (e.g., `ABC-123-feature-description` or `feature/ABC-123/feature-description`).

!!! note "Linear Base URL"
    For shortened ticket IDs or branch detection (method 2), you must configure the Linear base URL in your configuration file under the `[linear]` section:

    ```toml
    [linear]
    linear_base_url = "https://linear.app/[ORG_ID]"
    ```

    Replace `[ORG_ID]` with your Linear organization identifier.
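The two detection methods above can be illustrated with a small sketch. This is not Qodo Merge's implementation: the regular expressions, the function names, and the assumed ticket ID shape (uppercase key, hyphen, number, as in `ABC-123`) are all hypothetical and only mirror the examples given in the text.

```python
import re
from typing import Optional

# Assumed ticket ID shape, taken from the `ABC-123` example: uppercase key, hyphen, number.
TICKET_ID = r"[A-Z][A-Z0-9]*-\d+"

# Ticket ID as a branch prefix, optionally after one leading path segment such as "feature/".
BRANCH_PATTERN = re.compile(rf"^(?:[\w.-]+/)?({TICKET_ID})(?=[-/]|$)")

# Full ticket URL of the form https://linear.app/[ORG_ID]/issue/[TICKET_ID].
URL_PATTERN = re.compile(rf"https://linear\.app/[\w-]+/issue/({TICKET_ID})")

# Bare ticket ID anywhere in free text.
BARE_ID_PATTERN = re.compile(rf"\b({TICKET_ID})\b")


def ticket_from_branch(branch: str) -> Optional[str]:
    """Method 2: read the ticket ID from the start of a branch name."""
    match = BRANCH_PATTERN.match(branch)
    return match.group(1) if match else None


def ticket_from_description(description: str) -> Optional[str]:
    """Method 1: prefer a full ticket URL, then fall back to a bare ticket ID."""
    match = URL_PATTERN.search(description) or BARE_ID_PATTERN.search(description)
    return match.group(1) if match else None


def ticket_url(linear_base_url: str, ticket_id: str) -> str:
    """Expand a shortened ticket ID using the configured base URL."""
    return f"{linear_base_url.rstrip('/')}/issue/{ticket_id}"
```

The last helper shows why a base URL is needed for shortened IDs and branch names: those carry only the ticket ID, so the organization part of the URL has to come from configuration.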

|

||||

@ -3,7 +3,6 @@

|

||||

Qodo Merge utilizes a variety of core abilities to provide a comprehensive and efficient code review experience. These abilities include:

|

||||

|

||||

- [Auto best practices](https://qodo-merge-docs.qodo.ai/core-abilities/auto_best_practices/)

|

||||

- [Chat on code suggestions](https://qodo-merge-docs.qodo.ai/core-abilities/chat_on_code_suggestions/)

|

||||

- [Code validation](https://qodo-merge-docs.qodo.ai/core-abilities/code_validation/)

|

||||

- [Compression strategy](https://qodo-merge-docs.qodo.ai/core-abilities/compression_strategy/)

|

||||

- [Dynamic context](https://qodo-merge-docs.qodo.ai/core-abilities/dynamic_context/)

|

||||

|

||||

@ -1,46 +0,0 @@

|

||||

## Run a Gitea webhook server

1. In Gitea, create a new user and give it the "Reporter" role ("Developer" if using the Pro version of the agent) for the intended group or project.

2. For the user from step 1, generate a `personal_access_token` with `api` access.

3. Generate a random secret for your app, and save it for later (`webhook_secret`). For example, you can use:

    ```bash
    WEBHOOK_SECRET=$(python -c "import secrets; print(secrets.token_hex(10))")
    ```

4. Clone this repository:

    ```bash
    git clone https://github.com/qodo-ai/pr-agent.git
    ```

5. Prepare variables and secrets. Skip this step if you plan on setting these as environment variables when running the agent:

    1. In the configuration file/variables:
        - Set `config.git_provider` to "gitea"

    2. In the secrets file/variables:
        - Set your AI model key in the respective section
        - In the [Gitea] section, set `personal_access_token` (with the token from step 2) and `webhook_secret` (with the secret from step 3)

6. Build a Docker image for the app and optionally push it to a Docker repository. We'll use Dockerhub as an example:

    ```bash
    docker build -f docker/Dockerfile -t codiumai/pr-agent:gitea_webhook --target gitea_app .
    docker push codiumai/pr-agent:gitea_webhook  # Push to your Docker repository
    ```

7. Set the environment variables; the method depends on your Docker runtime. Skip this step if you included your secrets/configuration directly in the Docker image.

    ```bash
    CONFIG__GIT_PROVIDER=gitea
    GITEA__PERSONAL_ACCESS_TOKEN=<personal_access_token>
    GITEA__WEBHOOK_SECRET=<webhook_secret>
    GITEA__URL=https://gitea.com # Or self host
    OPENAI__KEY=<your_openai_api_key>
    ```

8. Create a webhook in your Gitea project. Set the URL to `http[s]://<PR_AGENT_HOSTNAME>/api/v1/gitea_webhooks`, the secret token to the generated secret from step 3, and enable the triggers `push`, `comments` and `merge request events`.

9. Test your installation by opening a merge request or commenting on a merge request using one of PR Agent's commands.
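To show what the `webhook_secret` from step 3 is for, here is a minimal sketch of generating a secret and checking a signed payload. It assumes the sender signs the raw request body with HMAC-SHA256 and sends the hex digest in a header, which is how Gitea's webhook documentation describes `X-Gitea-Signature`. How PR-Agent's own server validates requests is not shown in this diff, and the function names below are hypothetical.

```python
import hashlib
import hmac
import secrets


def generate_webhook_secret(num_bytes: int = 10) -> str:
    """Return a random hex secret, mirroring the `secrets.token_hex(10)` call in step 3."""
    return secrets.token_hex(num_bytes)


def sign_payload(secret: str, body: bytes) -> str:
    """Hex HMAC-SHA256 of the raw request body (assumed signature scheme)."""
    return hmac.new(secret.encode("utf-8"), body, hashlib.sha256).hexdigest()


def is_valid_signature(secret: str, body: bytes, received_signature: str) -> bool:
    """Compare the expected and received signatures in constant time."""
    expected = sign_payload(secret, body)
    return hmac.compare_digest(expected, received_signature)
```

A receiver would call `is_valid_signature` with the stored secret, the unparsed request body, and the header value, and reject the request when it returns `False`. Comparing with `hmac.compare_digest` avoids leaking timing information about how many characters matched.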

|

||||

@ -9,7 +9,6 @@ There are several ways to use self-hosted PR-Agent:

|

||||

- [GitLab integration](./gitlab.md)

|

||||

- [BitBucket integration](./bitbucket.md)

|

||||

- [Azure DevOps integration](./azure.md)

|

||||

- [Gitea integration](./gitea.md)

|

||||

|

||||

## Qodo Merge 💎

|

||||

|

||||

|

||||

@ -1,7 +1,7 @@

|

||||

To run PR-Agent locally, you first need to acquire two keys:

|

||||

|

||||

1. An OpenAI key from [here](https://platform.openai.com/api-keys){:target="_blank"}, with access to GPT-4 and o4-mini (or a key for other [language models](https://qodo-merge-docs.qodo.ai/usage-guide/changing_a_model/), if you prefer).

|

||||

2. A personal access token from your Git platform (GitHub, GitLab, BitBucket, Gitea) with repo scope. GitHub token, for example, can be issued from [here](https://github.com/settings/tokens){:target="_blank"}

|

||||

2. A personal access token from your Git platform (GitHub, GitLab, BitBucket) with repo scope. GitHub token, for example, can be issued from [here](https://github.com/settings/tokens){:target="_blank"}

|

||||

|

||||

## Using Docker image

|

||||

|

||||

@ -40,19 +40,6 @@ To invoke a tool (for example `review`), you can run PR-Agent directly from the

|

||||

docker run --rm -it -e CONFIG.GIT_PROVIDER=bitbucket -e OPENAI.KEY=$OPENAI_API_KEY -e BITBUCKET.BEARER_TOKEN=$BITBUCKET_BEARER_TOKEN codiumai/pr-agent:latest --pr_url=<pr_url> review

|

||||

```

|

||||

|

||||

- For Gitea:

|

||||

|

||||

```bash

|

||||

docker run --rm -it -e OPENAI.KEY=<your key> -e CONFIG.GIT_PROVIDER=gitea -e GITEA.PERSONAL_ACCESS_TOKEN=<your token> codiumai/pr-agent:latest --pr_url <pr_url> review

|

||||

```

|

||||

|

||||

If you have a dedicated Gitea instance, you need to specify the custom URL as a variable:

|

||||

|

||||

```bash

|

||||

-e GITEA.URL=<your gitea instance url>

|

||||

```

|

||||

|

||||

|

||||

For other git providers, update `CONFIG.GIT_PROVIDER` accordingly and check the [`pr_agent/settings/.secrets_template.toml`](https://github.com/Codium-ai/pr-agent/blob/main/pr_agent/settings/.secrets_template.toml) file for the expected environment variable names and values.
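The `-e` flags above appear to name each setting as a section plus a key (`CONFIG.GIT_PROVIDER`, `OPENAI.KEY`). As a rough illustration of that naming pattern only, the sketch below groups such flat names into nested sections. It is not PR-Agent's settings loader; the helper names are hypothetical, and accepting `__` as an alternative separator is an assumption.

```python
from typing import Dict, Tuple


def split_setting_name(name: str) -> Tuple[str, str]:
    """Split 'SECTION.KEY' or 'SECTION__KEY' into a lowercase (section, key) pair."""
    for separator in ("__", "."):
        if separator in name:
            section, key = name.split(separator, 1)
            return section.lower(), key.lower()
    raise ValueError(f"no section separator in {name!r}")


def group_settings(env: Dict[str, str]) -> Dict[str, Dict[str, str]]:
    """Group flat environment-style entries into nested sections."""
    grouped: Dict[str, Dict[str, str]] = {}
    for name, value in env.items():
        section, key = split_setting_name(name)
        grouped.setdefault(section, {})[key] = value
    return grouped
```

For example, `group_settings({"CONFIG.GIT_PROVIDER": "gitea"})` yields a `config` section containing `git_provider`, which matches the section and key layout of a TOML settings file.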

|

||||

|

||||

### Utilizing environment variables

|

||||

|

||||

@ -12,11 +12,10 @@ It also outlines our development roadmap for the upcoming three months. Please n

|

||||

- **Scan Repo Discussions Tool**: A new tool that analyzes past code discussions to generate a `best_practices.md` file, distilling key insights and recommendations. ([Learn more](https://qodo-merge-docs.qodo.ai/tools/scan_repo_discussions/))

|

||||

- **Enhanced Models**: Qodo Merge now defaults to a combination of top models (Claude Sonnet 3.7 and Gemini 2.5 Pro) and incorporates dedicated code validation logic for improved results. ([Details 1](https://qodo-merge-docs.qodo.ai/usage-guide/qodo_merge_models/), [Details 2](https://qodo-merge-docs.qodo.ai/core-abilities/code_validation/))

|

||||

- **Chrome Extension Update**: Qodo Merge Chrome extension now supports single-tenant users. ([Learn more](https://qodo-merge-docs.qodo.ai/chrome-extension/options/#configuration-options/))

|

||||

- **Installation Metrics**: Upon installation, Qodo Merge analyzes past PRs for key metrics (e.g., time to merge, time to first reviewer feedback), enabling pre/post-installation comparison to calculate ROI.

|

||||

|

||||

|

||||

=== "Future Roadmap"

|

||||

- **Smart Update**: Upon PR updates, Qodo Merge will offer tailored code suggestions, addressing both the entire PR and the specific incremental changes since the last feedback.

|

||||

- **CLI Endpoint**: A new Qodo Merge endpoint will accept lists of before/after code changes, execute Qodo Merge commands, and return the results.

|

||||

- **Simplified Free Tier**: We plan to transition from a two-week free trial to a free tier offering a limited number of suggestions per month per organization.

|

||||

- **Best Practices Hierarchy**: Introducing support for structured best practices, such as for folders in monorepos or a unified best practice file for a group of repositories.

|

||||

- **Installation Metrics**: Upon installation, Qodo Merge will analyze past PRs for key metrics (e.g., time to merge, time to first reviewer feedback), enabling pre/post-installation comparison to calculate ROI.

|

||||

@ -56,21 +56,6 @@ Everything below this marker is treated as previously auto-generated content and

|

||||

|

||||


|

||||

|

||||

### Sequence Diagram Support

|

||||

When the `enable_pr_diagram` option is enabled in your configuration, the `/describe` tool will include a `Mermaid` sequence diagram in the PR description.

|

||||

|

||||

This diagram represents interactions between components/functions based on the diff content.

|

||||

|

||||

### How to enable

|

||||

|

||||

In your configuration:

|

||||

|

||||

```toml

|

||||

[pr_description]

|

||||

enable_pr_diagram = true

|

||||

```

|

||||

|

||||

## Configuration options

|

||||

|

||||

!!! example "Possible configurations"

|

||||

@ -124,10 +109,6 @@ enable_pr_diagram = true

|

||||

<td><b>enable_help_text</b></td>

|

||||

<td>If set to true, the tool will display a help text in the comment. Default is false.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>enable_pr_diagram</b></td>

|

||||

<td>If set to true, the tool will generate a horizontal Mermaid flowchart summarizing the main pull request changes. This field remains empty if not applicable. Default is false.</td>

|

||||

</tr>

|

||||

</table>

|

||||

|

||||

## Inline file summary 💎

|

||||

|

||||

@ -7,50 +7,50 @@ It leverages LLM technology to transform PR comments and review suggestions into

|

||||

|

||||

## Usage Scenarios

|

||||

|

||||

=== "For Reviewers"

|

||||

### For Reviewers

|

||||

|

||||

Reviewers can request code changes by:

|

||||

Reviewers can request code changes by:

|

||||

|

||||

1. Selecting the code block to be modified.

|

||||

2. Adding a comment with the syntax:

|

||||

1. Selecting the code block to be modified.

|

||||

2. Adding a comment with the syntax:

|

||||

|

||||

```

|

||||

/implement <code-change-description>

|

||||

```

|

||||

```

|

||||

/implement <code-change-description>

|

||||

```

|

||||

|

||||


|

||||


|

||||

|

||||

=== "For PR Authors"

|

||||

### For PR Authors

|

||||

|

||||

PR authors can implement suggested changes by replying to a review comment using either:

|

||||

PR authors can implement suggested changes by replying to a review comment using either: <br>

|

||||

|

||||

1. Add specific implementation details as described above

|

||||

1. Add specific implementation details as described above

|

||||

|

||||

```

|

||||

/implement <code-change-description>

|

||||

```

|

||||

```

|

||||

/implement <code-change-description>

|

||||

```

|

||||

|

||||

2. Use the original review comment as instructions

|

||||

2. Use the original review comment as instructions

|

||||

|

||||

```

|

||||

/implement

|

||||

```

|

||||

```

|

||||

/implement

|

||||

```

|

||||

|

||||


|

||||


|

||||

|

||||

=== "For Referencing Comments"

|

||||

### For Referencing Comments

|

||||

|

||||

You can reference and implement changes from any comment by:

|

||||

You can reference and implement changes from any comment by:

|

||||

|

||||

```

|

||||

/implement <link-to-review-comment>

|

||||

```

|

||||

```

|

||||

/implement <link-to-review-comment>

|

||||

```

|

||||

|

||||


|

||||


|

||||

|

||||

Note that the implementation will occur within the review discussion thread.

|

||||

Note that the implementation will occur within the review discussion thread.

|

||||

|

||||

## Configuration options

|

||||

**Configuration options**

|

||||

|

||||

- Use `/implement` to implement a code change within, and based on, the review discussion.

|

||||

- Use `/implement <code-change-description>` inside a review discussion to implement specific instructions.
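The three comment forms described above (`/implement`, `/implement <code-change-description>`, and `/implement <link-to-review-comment>`) can be told apart with a few lines of parsing. The sketch below is illustrative only: the `ImplementRequest` type, the mode names, and the rule that a single URL-like argument counts as a link are assumptions, not the tool's actual parser.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ImplementRequest:
    mode: str                 # "from_discussion", "from_link", or "from_description"
    argument: Optional[str]   # the link or description text, if any


def parse_implement_comment(comment: str) -> Optional[ImplementRequest]:
    """Classify a comment as one of the three `/implement` forms, or None."""
    parts = comment.strip().split(maxsplit=1)
    if not parts or parts[0] != "/implement":
        return None
    if len(parts) == 1:
        # Bare command: use the surrounding review discussion as instructions.
        return ImplementRequest("from_discussion", None)
    argument = parts[1].strip()
    is_single_url = (
        argument.startswith(("http://", "https://")) and len(argument.split()) == 1
    )
    if is_single_url:
        return ImplementRequest("from_link", argument)
    return ImplementRequest("from_description", argument)
```

Checking for the bare command first keeps the "use the original review comment" case separate from the two forms that carry their own instructions.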

|

||||

|

||||

@ -288,6 +288,45 @@ We advise users to apply critical analysis and judgment when implementing the pr

|

||||

In addition to mistakes (which may happen, but are rare), sometimes the presented code modification may serve more as an _illustrative example_ than a directly applicable solution.

|

||||

In such cases, we recommend prioritizing the suggestion's detailed description, using the diff snippet primarily as a supporting reference.

|

||||

|

||||

|

||||

### Chat on code suggestions

|

||||

|

||||

> `💎 feature` Platforms supported: GitHub, GitLab

|

||||

|

||||

Qodo Merge implements an orchestrator agent that enables interactive code discussions, listening and responding to comments without requiring explicit tool calls.

|

||||

The orchestrator intelligently analyzes your responses to determine if you want to implement a suggestion, ask a question, or request help, then delegates to the appropriate specialized tool.

|

||||

|

||||

#### Setup and Activation

|

||||

|

||||

Enable interactive code discussions by adding the following to your configuration file (default is `True`):

|

||||

|

||||

```toml

|

||||

[pr_code_suggestions]

|

||||

enable_chat_in_code_suggestions = true

|

||||

```

|

||||

|

||||

!!! info "Activating Dynamic Responses"

|

||||

To obtain dynamic responses, the following steps are required:

|

||||

|

||||

1. Run the `/improve` command (mostly automatic)

|

||||

2. Tick the `/improve` recommendation checkboxes (_Apply this suggestion_) to have Qodo Merge generate a new inline code suggestion discussion

|

||||

3. The orchestrator agent will then automatically listen and reply to comments within the discussion without requiring additional commands

|

||||

|

||||

#### Explore the available interaction patterns:

|

||||

|

||||

!!! tip "Tip: Direct the agent with keywords"

|

||||

Use "implement" or "apply" for code generation. Use "explain", "why", or "how" for information and help.
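As a toy illustration of the keyword tip, the sketch below maps a comment to an intent based only on the keywords listed above. The orchestrator's actual analysis is not described in this document and is presumably more sophisticated; the function name, the intent labels, and the rule that code-generation keywords win when both kinds appear are assumptions.

```python
import re

# Keywords taken from the tip above.
CODE_KEYWORDS = {"implement", "apply"}
HELP_KEYWORDS = {"explain", "why", "how"}


def guess_intent(comment: str) -> str:
    """Return a coarse intent label based on whole-word keyword matches."""
    words = set(re.findall(r"[a-z]+", comment.lower()))
    if words & CODE_KEYWORDS:
        return "code_generation"
    if words & HELP_KEYWORDS:
        return "information"
    return "unknown"
```

Matching whole words rather than substrings avoids treating "however" as "how" or "reapply" as "apply".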

|

||||

|

||||

=== "Asking for Details"

|

||||


|

||||

|

||||

=== "Implementing Suggestions"

|

||||


|

||||

|

||||

=== "Providing Additional Help"

|

||||


|

||||

|

||||

|

||||

### Dual publishing mode

|

||||

|

||||

Our recommended approach for presenting code suggestions is through a [table](https://qodo-merge-docs.qodo.ai/tools/improve/#overview) (`--pr_code_suggestions.commitable_code_suggestions=false`).

|

||||

|

||||

@ -122,9 +122,7 @@ extra_instructions = "..."

|

||||

|

||||

## Usage Tips

|

||||

|

||||

### General guidelines

|

||||

|

||||

!!! tip ""

|

||||

!!! tip "General guidelines"

|

||||

|

||||

The `review` tool provides a collection of configurable feedback about a PR.

|

||||

It is recommended to review the [Configuration options](#configuration-options) section, and choose the relevant options for your use case.

|

||||

@ -134,9 +132,7 @@ extra_instructions = "..."

|

||||

|

||||

On the other hand, if you find one of the enabled features to be irrelevant for your use case, disable it. No default configuration can fit all use cases.

|

||||

|

||||

### Automation

|

||||

|

||||

!!! tip ""

|

||||

!!! tip "Automation"

|

||||

When you first install the Qodo Merge app, the [default mode](../usage-guide/automations_and_usage.md#github-app-automatic-tools-when-a-new-pr-is-opened) for the `review` tool is:

|

||||

```

|

||||

pr_commands = ["/review", ...]

|

||||

@ -144,30 +140,16 @@ extra_instructions = "..."

|

||||

Meaning the `review` tool will run automatically on every PR, without any additional configurations.

|

||||

Edit this field to enable/disable the tool, or to change the configurations used.

|

||||

|

||||

### Auto-generated PR labels by the Review Tool

|

||||

!!! tip "Possible labels from the review tool"

|

||||

|

||||

!!! tip ""

|

||||

The `review` tool can auto-generate two specific types of labels for a PR:

|

||||

|

||||

The `review` tool can automatically add labels to your Pull Requests:

|

||||

- a `possible security issue` label that detects if a possible [security issue](https://github.com/Codium-ai/pr-agent/blob/tr/user_description/pr_agent/settings/pr_reviewer_prompts.toml#L136) exists in the PR code (`enable_review_labels_security` flag)

|

||||

- a `Review effort x/5` label, where x is the estimated effort to review the PR on a 1–5 scale (`enable_review_labels_effort` flag)

|

||||

|

||||

- **`possible security issue`**: This label is applied if the tool detects a potential [security vulnerability](https://github.com/qodo-ai/pr-agent/blob/main/pr_agent/settings/pr_reviewer_prompts.toml#L103) in the PR's code. This feedback is controlled by the 'enable_review_labels_security' flag (default is true).

|

||||

- **`review effort [x/5]`**: This label estimates the [effort](https://github.com/qodo-ai/pr-agent/blob/main/pr_agent/settings/pr_reviewer_prompts.toml#L90) required to review the PR on a relative scale of 1 to 5, where 'x' represents the assessed effort. This feedback is controlled by the 'enable_review_labels_effort' flag (default is true).

|

||||

- **`ticket compliance`**: Adds a label indicating code compliance level ("Fully compliant" | "PR Code Verified" | "Partially compliant" | "Not compliant") to any GitHub/Jira/Linear ticket linked in the PR. Controlled by the 'require_ticket_labels' flag (default: false). If 'require_no_ticket_labels' is also enabled, PRs without ticket links will receive a "No ticket found" label.

|

||||

Both modes are useful, and we recommend enabling them.

|

||||

|

||||

|

||||

### Blocking PRs from merging based on the generated labels

|

||||

|

||||

!!! tip ""

|

||||

|

||||

You can configure a CI/CD Action to prevent merging PRs with specific labels. For example, implement a dedicated [GitHub Action](https://medium.com/sequra-tech/quick-tip-block-pull-request-merge-using-labels-6cc326936221).

|

||||

|

||||

This approach helps ensure PRs with potential security issues or ticket compliance problems will not be merged without further review.

|

||||

|

||||

Since AI may make mistakes or lack complete context, use this feature judiciously. For flexibility, users with appropriate permissions can remove generated labels when necessary. When a label is removed, this action will be automatically documented in the PR discussion, clearly indicating it was a deliberate override by an authorized user to allow the merge.
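As a rough illustration of the label-based gate described above, the following Python sketch decides whether a PR should be blocked given its label names. The function name `blocking_reasons`, the `BLOCKING_LABELS` set, and the `max_effort` threshold are hypothetical and not part of Qodo Merge; a real CI job would fetch the labels from the Git provider's API and fail the check when the returned list is non-empty.

```python
import re

# Hypothetical default: label names that should block a merge.
BLOCKING_LABELS = {"possible security issue"}

# Matches both "Review effort 4/5" and "review effort [4/5]" (case-insensitive).
EFFORT_PATTERN = re.compile(r"review effort \[?(\d)/5\]?", re.IGNORECASE)


def blocking_reasons(labels, max_effort=None):
    """Return human-readable reasons why a PR with these labels should not merge."""
    reasons = []
    for label in labels:
        name = label.strip().lower()
        if name in BLOCKING_LABELS:
            reasons.append(f"blocking label present: {label}")
        match = EFFORT_PATTERN.fullmatch(name)
        if match and max_effort is not None and int(match.group(1)) > max_effort:
            reasons.append(f"review effort {match.group(1)}/5 exceeds limit {max_effort}")
    return reasons
```

Because a user with the right permissions can remove a generated label, re-running such a check after the label is removed would let the merge proceed, which matches the override flow described above.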

|

||||

|

||||

### Extra instructions

|

||||

|

||||

!!! tip ""

|

||||

!!! tip "Extra instructions"

|

||||

|

||||

Extra instructions are important.

|

||||

The `review` tool can be configured with extra instructions, which guide the model toward feedback tailored to the needs of your project.

|

||||

@ -186,3 +168,7 @@ extra_instructions = "..."

|

||||

"""

|

||||

```

|

||||

Use triple quotes to write multi-line instructions. Use bullet points to make the instructions more readable.

|

||||

|

||||

!!! tip "Code suggestions"

|

||||

|

||||

The `review` tool previously included a legacy feature for providing code suggestions (controlled by `--pr_reviewer.num_code_suggestion`). This functionality has been deprecated and replaced by the [`improve`](./improve.md) tool, which offers higher quality and more actionable code suggestions.

|

||||

|

||||

@ -30,7 +30,7 @@ verbosity_level=2

|

||||

This is useful for debugging or experimenting with different tools.

|

||||

|

||||

3. **git provider**: The [git_provider](https://github.com/Codium-ai/pr-agent/blob/main/pr_agent/settings/configuration.toml#L5) field in a configuration file determines the Git provider that will be used by Qodo Merge. Currently, the following providers are supported:

|

||||

`github` **(default)**, `gitlab`, `bitbucket`, `azure`, `codecommit`, `local`, `gitea`, and `gerrit`.

|

||||

`github` **(default)**, `gitlab`, `bitbucket`, `azure`, `codecommit`, `local`, and `gerrit`.

|

||||

|

||||

### CLI Health Check

|

||||

|

||||

@ -312,16 +312,3 @@ pr_commands = [

|

||||

"/improve",

|

||||

]

|

||||

```

|

||||

|

||||

### Gitea Webhook

|

||||

|

||||

After setting up a Gitea webhook, to control which commands will run automatically when a new MR is opened, you can set the `pr_commands` parameter in the configuration file, similar to the GitHub App:

|

||||

|

||||

```toml

|

||||

[gitea]

|

||||

pr_commands = [

|

||||

"/describe",

|

||||

"/review",

|

||||

"/improve",

|

||||

]

|

||||

```

|

||||

|

||||

@ -12,7 +12,6 @@ It includes information on how to adjust Qodo Merge configurations, define which

|

||||

- [GitHub App](./automations_and_usage.md#github-app)

|

||||

- [GitHub Action](./automations_and_usage.md#github-action)

|

||||

- [GitLab Webhook](./automations_and_usage.md#gitlab-webhook)

|

||||

- [Gitea Webhook](./automations_and_usage.md#gitea-webhook)

|

||||

- [BitBucket App](./automations_and_usage.md#bitbucket-app)

|

||||

- [Azure DevOps Provider](./automations_and_usage.md#azure-devops-provider)

|

||||

- [Managing Mail Notifications](./mail_notifications.md)

|

||||

|

||||

@ -44,7 +44,6 @@ nav:

|

||||

- Core Abilities:

|

||||

- 'core-abilities/index.md'

|

||||

- Auto best practices: 'core-abilities/auto_best_practices.md'

|

||||

- Chat on code suggestions: 'core-abilities/chat_on_code_suggestions.md'

|

||||

- Code validation: 'core-abilities/code_validation.md'

|

||||

- Compression strategy: 'core-abilities/compression_strategy.md'

|

||||

- Dynamic context: 'core-abilities/dynamic_context.md'

|

||||

|

||||

@ -64,13 +64,10 @@ MAX_TOKENS = {

|

||||

'vertex_ai/gemini-1.5-flash': 1048576,

|

||||

'vertex_ai/gemini-2.0-flash': 1048576,

|

||||

'vertex_ai/gemini-2.5-flash-preview-04-17': 1048576,

|

||||

'vertex_ai/gemini-2.5-flash-preview-05-20': 1048576,

|

||||

'vertex_ai/gemma2': 8200,

|

||||

'gemini/gemini-1.5-pro': 1048576,

|

||||

'gemini/gemini-1.5-flash': 1048576,

|

||||

'gemini/gemini-2.0-flash': 1048576,

|

||||

'gemini/gemini-2.5-flash-preview-04-17': 1048576,

|

||||

'gemini/gemini-2.5-flash-preview-05-20': 1048576,

|

||||

'gemini/gemini-2.5-pro-preview-03-25': 1048576,

|

||||

'gemini/gemini-2.5-pro-preview-05-06': 1048576,

|

||||

'codechat-bison': 6144,

|

||||

|

||||

@ -1,9 +1,6 @@

|

||||

_LANGCHAIN_INSTALLED = False

|

||||

|

||||

try:

|

||||

from langchain_core.messages import HumanMessage, SystemMessage

|

||||

from langchain_openai import AzureChatOpenAI, ChatOpenAI

|

||||

_LANGCHAIN_INSTALLED = True

|

||||

except: # we don't enforce langchain as a dependency, so if it's not installed, just move on

|

||||

pass

|

||||

|

||||

@ -11,7 +8,6 @@ import functools

|

||||

|

||||

import openai

|

||||

from tenacity import retry, retry_if_exception_type, retry_if_not_exception_type, stop_after_attempt

|

||||

from langchain_core.runnables import Runnable

|

||||

|

||||

from pr_agent.algo.ai_handlers.base_ai_handler import BaseAiHandler

|

||||

from pr_agent.config_loader import get_settings

|

||||

@ -22,14 +18,17 @@ OPENAI_RETRIES = 5

|

||||

|

||||

class LangChainOpenAIHandler(BaseAiHandler):

|

||||

def __init__(self):

|

||||

if not _LANGCHAIN_INSTALLED:

|

||||

error_msg = "LangChain is not installed. Please install it with `pip install langchain`."

|

||||

get_logger().error(error_msg)

|

||||

raise ImportError(error_msg)

|

||||

|

||||

# Initialize OpenAIHandler specific attributes here

|

||||

super().__init__()

|

||||

self.azure = get_settings().get("OPENAI.API_TYPE", "").lower() == "azure"

|

||||

|

||||

# Create a default unused chat object to trigger early validation

|

||||

self._create_chat(self.deployment_id)

|

||||

|

||||

def chat(self, messages: list, model: str, temperature: float):

|

||||

chat = self._create_chat(self.deployment_id)

|

||||

return chat.invoke(input=messages, model=model, temperature=temperature)

|

||||

|

||||

@property

|

||||

def deployment_id(self):

|

||||

"""

|

||||

@ -37,66 +36,16 @@ class LangChainOpenAIHandler(BaseAiHandler):

|

||||

"""

|

||||

return get_settings().get("OPENAI.DEPLOYMENT_ID", None)

|

||||

|

||||

async def _create_chat_async(self, deployment_id=None):

|

||||

try:

|

||||

if self.azure:

|

||||

# Using Azure OpenAI service

|

||||

return AzureChatOpenAI(

|

||||

openai_api_key=get_settings().openai.key,

|

||||

openai_api_version=get_settings().openai.api_version,

|

||||

azure_deployment=deployment_id,

|

||||

azure_endpoint=get_settings().openai.api_base,

|

||||

)

|

||||

else:

|

||||

# Using standard OpenAI or other LLM services

|

||||

openai_api_base = get_settings().get("OPENAI.API_BASE", None)

|

||||

if openai_api_base is None or len(openai_api_base) == 0:

|

||||

return ChatOpenAI(openai_api_key=get_settings().openai.key)

|

||||

else:

|

||||

return ChatOpenAI(

|

||||

openai_api_key=get_settings().openai.key,

|

||||

openai_api_base=openai_api_base

|

||||

)

|

||||

except AttributeError as e:

|

||||

# Handle configuration errors

|

||||

error_msg = f"OpenAI {e.name} is required" if getattr(e, "name", None) else str(e)

|

||||

get_logger().error(error_msg)

|

||||

raise ValueError(error_msg) from e

|

||||

|

||||

@retry(

|

||||

retry=retry_if_exception_type(openai.APIError) & retry_if_not_exception_type(openai.RateLimitError),

|

||||

stop=stop_after_attempt(OPENAI_RETRIES),

|

||||

)

|

||||

async def chat_completion(self, model: str, system: str, user: str, temperature: float = 0.2, img_path: str = None):

|

||||

if img_path:

|

||||

get_logger().warning(f"Image path is not supported for LangChainOpenAIHandler. Ignoring image path: {img_path}")

|

||||

async def chat_completion(self, model: str, system: str, user: str, temperature: float = 0.2):

|

||||

try:

|

||||

messages = [SystemMessage(content=system), HumanMessage(content=user)]

|

||||

llm = await self._create_chat_async(deployment_id=self.deployment_id)

|

||||

|

||||

if not isinstance(llm, Runnable):

|

||||

error_message = (

|

||||

f"The Langchain LLM object ({type(llm)}) does not implement the Runnable interface. "

|

||||

f"Please update your Langchain library to the latest version or "

|

||||

f"check your LLM configuration to support async calls. "

|

||||

f"PR-Agent is designed to utilize Langchain's async capabilities."

|

||||

)

|

||||

get_logger().error(error_message)

|

||||

raise NotImplementedError(error_message)

|

||||

|

||||

# Handle parameters based on LLM type

|

||||

if isinstance(llm, (ChatOpenAI, AzureChatOpenAI)):

|

||||

# OpenAI models support all parameters

|

||||

resp = await llm.ainvoke(

|

||||

input=messages,

|

||||

model=model,

|

||||

temperature=temperature

|

||||

)

|

||||

else:

|

||||

# Other LLMs (like Gemini) only support input parameter

|

||||

get_logger().info(f"Using simplified ainvoke for {type(llm)}")

|

||||

resp = await llm.ainvoke(input=messages)

|

||||

|

||||

# get a chat completion from the formatted messages

|

||||

resp = self.chat(messages, model=model, temperature=temperature)

|

||||

finish_reason = "completed"

|

||||

return resp.content, finish_reason

|

||||

|

||||

@ -109,3 +58,27 @@ class LangChainOpenAIHandler(BaseAiHandler):

|

||||

except Exception as e:

|

||||

get_logger().warning(f"Unknown error during LLM inference: {e}")

|

||||

raise openai.APIError from e

|

||||

|

||||

def _create_chat(self, deployment_id=None):

|

||||

try:

|

||||

if self.azure:

|

||||

# using a partial function so we can set the deployment_id later to support fallback_deployments

|

||||

# but still need to access the other settings now so we can raise a proper exception if they're missing

|

||||

return AzureChatOpenAI(

|

||||

openai_api_key=get_settings().openai.key,

|

||||

openai_api_version=get_settings().openai.api_version,

|

||||

azure_deployment=deployment_id,

|

||||

azure_endpoint=get_settings().openai.api_base,

|

||||

)

|

||||

else:

|

||||

# for LLMs that are compatible with OpenAI, use a custom API base

|

||||

openai_api_base = get_settings().get("OPENAI.API_BASE", None)

|

||||

if openai_api_base is None or len(openai_api_base) == 0:

|

||||

return ChatOpenAI(openai_api_key=get_settings().openai.key)

|

||||

else:

|

||||

return ChatOpenAI(openai_api_key=get_settings().openai.key, openai_api_base=openai_api_base)

|

||||

except AttributeError as e:

|

||||

if getattr(e, "name", None):

|

||||

raise ValueError(f"OpenAI {e.name} is required") from e

|

||||

else:

|

||||

raise e

|

||||

|

||||

@ -42,10 +42,8 @@ class OpenAIHandler(BaseAiHandler):

|

||||

retry=retry_if_exception_type(openai.APIError) & retry_if_not_exception_type(openai.RateLimitError),

|

||||

stop=stop_after_attempt(OPENAI_RETRIES),

|

||||

)

|

||||

async def chat_completion(self, model: str, system: str, user: str, temperature: float = 0.2, img_path: str = None):

|

||||

async def chat_completion(self, model: str, system: str, user: str, temperature: float = 0.2):

|

||||

try:

|

||||

if img_path:

|

||||

get_logger().warning(f"Image path is not supported for OpenAIHandler. Ignoring image path: {img_path}")

|

||||

get_logger().info(f"System: {system}")

|

||||

get_logger().info(f"User: {user}")

|

||||

messages = [{"role": "system", "content": system}, {"role": "user", "content": user}]

|

||||

|

||||

@ -58,9 +58,6 @@ def filter_ignored(files, platform = 'github'):

|

||||

files = files_o

|

||||

elif platform == 'azure':

|

||||

files = [f for f in files if not r.match(f)]

|

||||

elif platform == 'gitea':

|

||||

files = [f for f in files if not r.match(f.get("filename", ""))]

|

||||

|

||||

|

||||

except Exception as e:

|

||||

print(f"Could not filter file list: {e}")

|

||||

|

||||

@ -1,6 +1,4 @@

|

||||

from threading import Lock

|

||||

from math import ceil

|

||||

import re

|

||||

|

||||

from jinja2 import Environment, StrictUndefined

|

||||

from tiktoken import encoding_for_model, get_encoding

|

||||

@ -9,16 +7,6 @@ from pr_agent.config_loader import get_settings

|

||||

from pr_agent.log import get_logger

|

||||

|

||||

|

||||

class ModelTypeValidator:

|

||||

@staticmethod

|

||||

def is_openai_model(model_name: str) -> bool:

|

||||

return 'gpt' in model_name or re.match(r"^o[1-9](-mini|-preview)?$", model_name)

|

||||

|

||||

@staticmethod

|

||||

def is_anthropic_model(model_name: str) -> bool:

|

||||

return 'claude' in model_name

|

||||

|

||||

|

||||

class TokenEncoder:

|

||||

_encoder_instance = None

|

||||

_model = None

|

||||

@ -52,10 +40,6 @@ class TokenHandler:

|

||||

method.

|

||||

"""

|

||||

|

||||

# Constants

|

||||

CLAUDE_MODEL = "claude-3-7-sonnet-20250219"

|

||||

CLAUDE_MAX_CONTENT_SIZE = 9_000_000 # Maximum allowed content size (9MB) for Claude API

|

||||

|

||||

def __init__(self, pr=None, vars: dict = {}, system="", user=""):

|

||||

"""

|

||||

Initializes the TokenHandler object.

|

||||

@ -67,7 +51,6 @@ class TokenHandler:

|

||||

- user: The user string.

|

||||

"""

|

||||

self.encoder = TokenEncoder.get_token_encoder()

|

||||

|

||||

if pr is not None:

|

||||

self.prompt_tokens = self._get_system_user_tokens(pr, self.encoder, vars, system, user)

|

||||

|

||||

@ -96,22 +79,22 @@ class TokenHandler:

|

||||

get_logger().error(f"Error in _get_system_user_tokens: {e}")

|

||||

return 0

|

||||

|

||||

def _calc_claude_tokens(self, patch: str) -> int:

|

||||

def calc_claude_tokens(self, patch):

|

||||

try:

|

||||

import anthropic

|

||||

from pr_agent.algo import MAX_TOKENS

|

||||

|

||||

client = anthropic.Anthropic(api_key=get_settings(use_context=False).get('anthropic.key'))

|

||||

max_tokens = MAX_TOKENS[get_settings().config.model]

|

||||

MaxTokens = MAX_TOKENS[get_settings().config.model]

|

||||

|

||||

if len(patch.encode('utf-8')) > self.CLAUDE_MAX_CONTENT_SIZE:

|

||||

# Check if the content size is too large (9MB limit)

|

||||

if len(patch.encode('utf-8')) > 9_000_000:

|

||||

get_logger().warning(

|

||||

"Content too large for Anthropic token counting API, falling back to local tokenizer"

|

||||

)

|

||||

return max_tokens

|

||||

return MaxTokens

|

||||

|

||||

response = client.messages.count_tokens(

|

||||

model=self.CLAUDE_MODEL,

|

||||

model="claude-3-7-sonnet-20250219",

|

||||

system="system",

|

||||

messages=[{

|

||||

"role": "user",

|

||||

@ -121,51 +104,42 @@ class TokenHandler:

|

||||

return response.input_tokens

|

||||

|

||||

except Exception as e:

|

||||

get_logger().error(f"Error in Anthropic token counting: {e}")

|

||||

return max_tokens

|

||||

get_logger().error( f"Error in Anthropic token counting: {e}")

|

||||

return MaxTokens

|

||||

|

||||

def _apply_estimation_factor(self, model_name: str, default_estimate: int) -> int:

|

||||

factor = 1 + get_settings().get('config.model_token_count_estimate_factor', 0)

|

||||

get_logger().warning(f"{model_name}'s token count cannot be accurately estimated. Using factor of {factor}")

|

||||

|

||||

return ceil(factor * default_estimate)

|

||||

def estimate_token_count_for_non_anth_claude_models(self, model, default_encoder_estimate):

|

||||

from math import ceil

|

||||

import re

|

||||

|

||||

def _get_token_count_by_model_type(self, patch: str, default_estimate: int) -> int:

|

||||

"""

|

||||

Get token count based on model type.

|

||||

model_is_from_o_series = re.match(r"^o[1-9](-mini|-preview)?$", model)

|

||||

if ('gpt' in get_settings().config.model.lower() or model_is_from_o_series) and get_settings(use_context=False).get('openai.key'):

|

||||

return default_encoder_estimate

|

||||

#else: Model is not an OpenAI one - therefore, cannot provide an accurate token count and instead, return a higher number as best effort.

|

||||

|

||||

Args:

|

||||

patch: The text to count tokens for.

|

||||

default_estimate: The default token count estimate.

|

||||

elbow_factor = 1 + get_settings().get('config.model_token_count_estimate_factor', 0)

|

||||

get_logger().warning(f"{model}'s expected token count cannot be accurately estimated. Using {elbow_factor} of encoder output as best effort estimate")

|

||||

return ceil(elbow_factor * default_encoder_estimate)

|

||||

|

||||

Returns:

|

||||

int: The calculated token count.

|

||||

"""

|

||||

model_name = get_settings().config.model.lower()

|

||||

|

||||

if ModelTypeValidator.is_openai_model(model_name) and get_settings(use_context=False).get('openai.key'):

|

||||

return default_estimate

|

||||

|

||||

if ModelTypeValidator.is_anthropic_model(model_name) and get_settings(use_context=False).get('anthropic.key'):

|

||||

return self._calc_claude_tokens(patch)

|

||||

|

||||

return self._apply_estimation_factor(model_name, default_estimate)

|

||||

|

||||

def count_tokens(self, patch: str, force_accurate: bool = False) -> int:

|

||||

def count_tokens(self, patch: str, force_accurate=False) -> int:

|

||||

"""

|

||||

Counts the number of tokens in a given patch string.

|

||||

|

||||

Args:

|

||||

- patch: The patch string.

|

||||

- force_accurate: If True, uses a more precise calculation method.

|

||||

|

||||

Returns:

|

||||

The number of tokens in the patch string.

|

||||

"""

|

||||

encoder_estimate = len(self.encoder.encode(patch, disallowed_special=()))

|

||||

|

||||

# If an estimate is enough (for example, in cases where the maximal allowed tokens is way below the known limits), return it.

|

||||

#If an estimate is enough (for example, in cases where the maximal allowed tokens is way below the known limits), return it.

|

||||

if not force_accurate:

|

||||

return encoder_estimate

|

||||

|

||||

return self._get_token_count_by_model_type(patch, encoder_estimate)

|

||||

#else, force_accurate==True: User requested providing an accurate estimation:

|

||||

model = get_settings().config.model.lower()

|

||||

if 'claude' in model and get_settings(use_context=False).get('anthropic.key'):

|

||||

return self.calc_claude_tokens(patch) # API call to Anthropic for accurate token counting for Claude models

|

||||

|

||||

#else: Non Anthropic provided model:

|

||||

return self.estimate_token_count_for_non_anth_claude_models(model, encoder_estimate)
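The fallback logic in this hunk can be summarised in a small standalone sketch. It assumes only what the diff shows: OpenAI-style model names are trusted with the local encoder estimate, and anything else is padded by `1 + factor`, rounded up. The names `is_openai_model`, `padded_estimate`, and `estimate_tokens` are illustrative; the Anthropic API branch and the API-key checks are deliberately left out.

```python
import re
from math import ceil

# Same pattern as in the diff: o1 ... o9, optionally with -mini or -preview.
O_SERIES = re.compile(r"^o[1-9](-mini|-preview)?$")


def is_openai_model(model_name: str) -> bool:
    """True for names containing 'gpt' or matching the o-series pattern."""
    return "gpt" in model_name or O_SERIES.match(model_name) is not None


def padded_estimate(encoder_estimate: int, factor: float) -> int:
    """Inflate the local encoder count by (1 + factor) and round up."""
    return ceil((1 + factor) * encoder_estimate)


def estimate_tokens(model_name: str, encoder_estimate: int, factor: float = 0.0) -> int:
    """Return the encoder estimate for OpenAI models, a padded one otherwise."""
    name = model_name.lower()
    if is_openai_model(name):
        return encoder_estimate
    return padded_estimate(encoder_estimate, factor)
```

The default `factor` of 0.0 mirrors the fallback value passed to `get_settings().get(...)` in the diff; a deployment would normally set `config.model_token_count_estimate_factor` to something larger.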

|

||||

|

||||

@ -8,7 +8,6 @@ from pr_agent.git_providers.bitbucket_server_provider import \

|

||||

from pr_agent.git_providers.codecommit_provider import CodeCommitProvider

|

||||

from pr_agent.git_providers.gerrit_provider import GerritProvider

|

||||

from pr_agent.git_providers.git_provider import GitProvider

|

||||

from pr_agent.git_providers.gitea_provider import GiteaProvider

|

||||

from pr_agent.git_providers.github_provider import GithubProvider

|

||||

from pr_agent.git_providers.gitlab_provider import GitLabProvider

|

||||

from pr_agent.git_providers.local_git_provider import LocalGitProvider

|

||||

@ -23,7 +22,7 @@ _GIT_PROVIDERS = {

|

||||

'codecommit': CodeCommitProvider,

|

||||

'local': LocalGitProvider,

|

||||

'gerrit': GerritProvider,

|

||||

'gitea': GiteaProvider

|

||||

'gitea': GiteaProvider,

|

||||

}

|

||||

|

||||

|

||||

|

||||

@ -350,8 +350,7 @@ class AzureDevopsProvider(GitProvider):

|

||||

get_logger().debug(f"Skipping publish_comment for temporary comment: {pr_comment}")

|

||||

return None

|

||||

comment = Comment(content=pr_comment)

|

||||

# Set status to 'active' to prevent auto-resolve (see CommentThreadStatus docs)

|

||||

thread = CommentThread(comments=[comment], thread_context=thread_context, status='active')

|

||||

thread = CommentThread(comments=[comment], thread_context=thread_context, status="closed")

|

||||

thread_response = self.azure_devops_client.create_thread(

|

||||

comment_thread=thread,

|

||||

project=self.workspace_slug,

|

||||

|

||||

File diff suppressed because it is too large


@ -1,128 +0,0 @@

|

||||

import asyncio

|

||||

import copy

|

||||

import os

|

||||

from typing import Any, Dict

|

||||

|

||||

from fastapi import APIRouter, FastAPI, HTTPException, Request, Response

|

||||

from starlette.background import BackgroundTasks

|

||||

from starlette.middleware import Middleware

|

||||

from starlette_context import context

|

||||

from starlette_context.middleware import RawContextMiddleware

|

||||

|

||||

from pr_agent.agent.pr_agent import PRAgent

|

||||

from pr_agent.config_loader import get_settings, global_settings

|

||||

from pr_agent.log import LoggingFormat, get_logger, setup_logger

|

||||

from pr_agent.servers.utils import verify_signature

|

||||

|

||||

# Setup logging and router

|

||||

setup_logger(fmt=LoggingFormat.JSON, level=get_settings().get("CONFIG.LOG_LEVEL", "DEBUG"))

|

||||

router = APIRouter()

|

||||

|

||||

@router.post("/api/v1/gitea_webhooks")

|

||||

async def handle_gitea_webhooks(background_tasks: BackgroundTasks, request: Request, response: Response):

|

||||

"""Handle incoming Gitea webhook requests"""

|

||||

get_logger().debug("Received a Gitea webhook")

|

||||

|

||||

body = await get_body(request)

|

||||

|

||||

# Set context for the request

|

||||

context["settings"] = copy.deepcopy(global_settings)

|

||||

context["git_provider"] = {}

|

||||

|

||||

# Handle the webhook in background

|

||||

background_tasks.add_task(handle_request, body, event=request.headers.get("X-Gitea-Event", None))

|

||||

return {}

|

||||

|

||||

async def get_body(request: Request):

|

||||

"""Parse and verify webhook request body"""

|

||||

try:

|

||||

body = await request.json()

|

||||

except Exception as e:

|

||||

get_logger().error("Error parsing request body", artifact={'error': e})

|

||||

raise HTTPException(status_code=400, detail="Error parsing request body") from e

|

||||

|

||||

|

||||

# Verify webhook signature

|

||||

webhook_secret = getattr(get_settings().gitea, 'webhook_secret', None)

|

||||

if webhook_secret:

|

||||

body_bytes = await request.body()

|

||||

signature_header = request.headers.get('x-gitea-signature', None)

|

||||

if not signature_header:

|

||||

get_logger().error("Missing signature header")

|

||||

raise HTTPException(status_code=400, detail="Missing signature header")

|

||||

|

||||

try:

|

||||

verify_signature(body_bytes, webhook_secret, f"sha256={signature_header}")

|

||||

except Exception as ex:

|

||||

get_logger().error(f"Invalid signature: {ex}")

|

||||

raise HTTPException(status_code=401, detail="Invalid signature")

|

||||

|

||||

return body

|

||||

|

||||

async def handle_request(body: Dict[str, Any], event: str):

|

||||

"""Process Gitea webhook events"""

|

||||

action = body.get("action")

|

||||

if not action:

|

||||

get_logger().debug("No action found in request body")

|

||||

return {}

|

||||

|

||||

agent = PRAgent()

|

||||

|

||||

# Handle different event types

|

||||

if event == "pull_request":

|

||||

if action in ["opened", "reopened", "synchronized"]:

|

||||

await handle_pr_event(body, event, action, agent)

|

||||

elif event == "issue_comment":

|

||||

if action == "created":

|

||||

await handle_comment_event(body, event, action, agent)

|

||||

|

||||

return {}

|

||||

|

||||

async def handle_pr_event(body: Dict[str, Any], event: str, action: str, agent: PRAgent):

|

||||

"""Handle pull request events"""

|

||||

pr = body.get("pull_request", {})

|

||||

if not pr:

|

||||

return

|

||||

|

||||

api_url = pr.get("url")

|

||||

if not api_url:

|

||||

return

|

||||

|

||||

# Handle PR based on action

|

||||

if action in ["opened", "reopened"]:

|

||||

commands = get_settings().get("gitea.pr_commands", [])

|

||||

for command in commands:

|

||||

await agent.handle_request(api_url, command)

|

||||

elif action == "synchronized":

|

||||

# Handle push to PR

|

||||

await agent.handle_request(api_url, "/review --incremental")

|

||||

|

||||

async def handle_comment_event(body: Dict[str, Any], event: str, action: str, agent: PRAgent):

|

||||

"""Handle comment events"""

|

||||

comment = body.get("comment", {})

|

||||

if not comment:

|

||||

return

|

||||

|

||||

comment_body = comment.get("body", "")

|

||||

if not comment_body or not comment_body.startswith("/"):

|

||||

return

|

||||

|

||||

pr_url = body.get("pull_request", {}).get("url")

|

||||

if not pr_url:

|

||||

return

|

||||

|

||||

await agent.handle_request(pr_url, comment_body)

|

||||

|

||||

# FastAPI app setup

|

||||

middleware = [Middleware(RawContextMiddleware)]

|

||||

app = FastAPI(middleware=middleware)

|

||||

app.include_router(router)

|

||||

|

||||

def start():

|

||||

"""Start the Gitea webhook server"""

|

||||

port = int(os.environ.get("PORT", "3000"))

|

||||

import uvicorn

|

||||

uvicorn.run(app, host="0.0.0.0", port=port)

|

||||

|

||||

if __name__ == "__main__":

|

||||

start()
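For readers unfamiliar with the signature check performed in `get_body`, here is a minimal sketch of HMAC-SHA256 webhook verification. It assumes the `x-gitea-signature` header carries a bare hex digest of the raw request body, which is consistent with the handler prefixing `sha256=` before calling `verify_signature`. The function `signature_is_valid` is hypothetical and is not the project's `verify_signature` helper.

```python
import hashlib
import hmac


def signature_is_valid(payload: bytes, secret: str, signature_hex: str) -> bool:
    """Recompute the HMAC-SHA256 of the raw body and compare in constant time."""
    expected = hmac.new(secret.encode("utf-8"), payload, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(expected, signature_hex.strip().lower())
```

Note that the digest must be computed over the exact bytes received, not over a re-serialised JSON object, which is why the handler reads `request.body()` separately from `request.json()`.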

|

||||

@ -68,11 +68,6 @@ webhook_secret = "<WEBHOOK SECRET>" # Optional, may be commented out.

|

||||

personal_access_token = ""

|

||||

shared_secret = "" # webhook secret

|

||||

|

||||

[gitea]

|

||||

# Gitea personal access token

|

||||

personal_access_token=""

|

||||

webhook_secret="" # webhook secret

|

||||

|

||||

[bitbucket]

|

||||

# For Bitbucket authentication

|

||||

auth_type = "bearer" # "bearer" or "basic"

|

||||

|

||||

@ -281,15 +281,6 @@ push_commands = [

|

||||

"/review",

|

||||

]

|

||||

|

||||

[gitea_app]

|

||||

url = "https://gitea.com"

|

||||

handle_push_trigger = false

|

||||

pr_commands = [

|

||||

"/describe",

|

||||

"/review",

|

||||

"/improve",

|

||||

]

|

||||

|

||||

[bitbucket_app]

|

||||

pr_commands = [

|

||||

"/describe --pr_description.final_update_message=false",

|

||||

|

||||

@ -46,12 +46,12 @@ class PRDescription(BaseModel):

|

||||

type: List[PRType] = Field(description="one or more types that describe the PR content. Return the label member value (e.g. 'Bug fix', not 'bug_fix')")

|

||||

description: str = Field(description="summarize the PR changes in up to four bullet points, each up to 8 words. For large PRs, add sub-bullets if needed. Order bullets by importance, with each bullet highlighting a key change group.")

|

||||

title: str = Field(description="a concise and descriptive title that captures the PR's main theme")

|

||||

{%- if enable_pr_diagram %}

|

||||

changes_diagram: str = Field(description="a horizontal diagram that represents the main PR changes, in the format of a valid mermaid LR flowchart. The diagram should be concise and easy to read. Leave empty if no diagram is relevant. To create robust Mermaid diagrams, follow this two-step process: (1) Declare the nodes: nodeID["node description"]. (2) Then define the links: nodeID1 -- "link text" --> nodeID2 ")

|

||||

{%- endif %}

|

||||

{%- if enable_semantic_files_types %}

|

||||

pr_files: List[FileDescription] = Field(max_items=20, description="a list of all the files that were changed in the PR, and summary of their changes. Each file must be analyzed regardless of change size.")

|

||||

{%- endif %}

|

||||

{%- if enable_pr_diagram %}

|

||||

changes_diagram: str = Field(description="a horizontal diagram that represents the main PR changes, in the format of a mermaid flowchart. The diagram should be concise and easy to read. Leave empty if no diagram is relevant.")

|

||||

{%- endif %}

|