Compare commits

244 Commits

enhancemen

...

idsvidov/g

| Author | SHA1 | Date | |

|---|---|---|---|

| 0167003bbc | |||

| 99ed9b22a1 | |||

| eee6d51b40 | |||

| adb3f17258 | |||

| 2c03a67312 | |||

| 55eb741965 | |||

| c9c95d60d4 | |||

| cca809e91c | |||

| 57ff46ecc1 | |||

| 3819d52eb0 | |||

| 3072325d2c | |||

| abca2fdcb7 | |||

| 4d84f76948 | |||

| dd8f6eb923 | |||

| b9c25e487a | |||

| 1bf27c38a7 | |||

| 1f987380ed | |||

| cd8bbbf889 | |||

| 8e5498ee97 | |||

| 0412d7aca0 | |||

| 1eac3245d9 | |||

| cd51bef7f7 | |||

| e8aa33fa0b | |||

| 54b021b02c | |||

| 32151e3d9a | |||

| 32358678e6 | |||

| 42e32664a1 | |||

| 1e97236a15 | |||

| 321f7bce46 | |||

| 02a1d8dbfc | |||

| e34f9d8d1c | |||

| 35dac012bd | |||

| 21ced18f50 | |||

| fca78cf395 | |||

| d1b91b0ea3 | |||

| 76e00acbdb | |||

| 2f83e7738c | |||

| f4a226b0f7 | |||

| f5e2838fc3 | |||

| bbdfd2c3d4 | |||

| 74572e1768 | |||

| f0a17b863c | |||

| 86fd84e113 | |||

| d5b9be23d3 | |||

| 057bb3932f | |||

| 05f29cc406 | |||

| 63c4c7e584 | |||

| 1ea23cab96 | |||

| e99f9fd59f | |||

| fdf6a3e833 | |||

| 79cb94b4c2 | |||

| 9adec7cc10 | |||

| 1f0df47b4d | |||

| a71a12791b | |||

| 23fa834721 | |||

| 9f67d07156 | |||

| 6731a7643e | |||

| f87fdd88ad | |||

| f825f6b90a | |||

| f5d5008a24 | |||

| 0b63d4cde5 | |||

| 2e246869d0 | |||

| 2f9546e144 | |||

| 6134c2ff61 | |||

| 3cfbba74f8 | |||

| 050bb60671 | |||

| 12a7e1ce6e | |||

| cd0438005b | |||

| 7c3188ae06 | |||

| 6cd38a37cd | |||

| 12e51bb6aa | |||

| e2a4cd6b03 | |||

| 329e228aa2 | |||

| 3d5d517f2a | |||

| a2eb2e4dac | |||

| d89792d379 | |||

| 23ed2553c4 | |||

| fe29ce2911 | |||

| df25a3ede2 | |||

| 4c36fb4df2 | |||

| 67c61e0ac8 | |||

| 0985db4e36 | |||

| ee2c00abeb | |||

| 577f24d107 | |||

| fc24b34c2b | |||

| 1e962476da | |||

| 3326327572 | |||

| 36be79ea38 | |||

| 523839be7d | |||

| d1586ddd77 | |||

| 3420853923 | |||

| 1f373d7b0a | |||

| 7fdbd6a680 | |||

| 17b40a1fa1 | |||

| c47e74c5c7 | |||

| 7abbe08ff1 | |||

| 8038b6ab99 | |||

| 6e26ad0966 | |||

| 7e2449b228 | |||

| 97bfee47a3 | |||

| 3b27c834a4 | |||

| 5bc2ef1eff | |||

| 2f558006bf | |||

| 8868c92141 | |||

| 370520df51 | |||

| e17dd66dce | |||

| fc8494d696 | |||

| f8aea909b4 | |||

| 2e832b8fb4 | |||

| ccddbeccad | |||

| a47fa342cb | |||

| f73cddcb93 | |||

| 5f36f0d753 | |||

| dc4bf13d39 | |||

| bdf7eff7cd | |||

| dc67e6a66e | |||

| 6d91f44634 | |||

| 0396e10706 | |||

| 77f243b7ab | |||

| c507785475 | |||

| 5c5015b267 | |||

| 3efe08d619 | |||

| 2e36fce4eb | |||

| d6d4427545 | |||

| 5d45632247 | |||

| 90c045e3d0 | |||

| 7f0a96d8f7 | |||

| 8fb9affef3 | |||

| 6c42a471e1 | |||

| f2b74b6970 | |||

| ffd11aeffc | |||

| 05e4e09dfc | |||

| 13092118dc | |||

| 7d108992fc | |||

| e5a8ed205e | |||

| 90f97b0226 | |||

| 9e0f5f0ccc | |||

| 87ea0176b9 | |||

| 62f08f4ec4 | |||

| fe0058f25f | |||

| 6d2673f39d | |||

| b3a1d456b2 | |||

| f77a5f6929 | |||

| fdeae9c209 | |||

| a994ec1427 | |||

| e5259e2f5c | |||

| 978348240b | |||

| 4d92e7d9c2 | |||

| 6f1b418b25 | |||

| 51e08c3c2b | |||

| 4c29ff2db1 | |||

| 5fbaa4366f | |||

| aee08ebbfe | |||

| 6ad8df6be7 | |||

| 539edcad3c | |||

| b7172df700 | |||

| 768bd40ad8 | |||

| ea27c63f13 | |||

| c866288b0a | |||

| 8ae3c60670 | |||

| f8f415eb75 | |||

| 24583b05f7 | |||

| fa421fd169 | |||

| e0ae5c945e | |||

| 865888e4e8 | |||

| 3b7cfe7bc5 | |||

| 262f9dddbc | |||

| fa706b6e96 | |||

| ff51ab0946 | |||

| 7884aa2348 | |||

| 8f3520807c | |||

| fa90b242e3 | |||

| 2dfd34bd61 | |||

| 48f569bef0 | |||

| a20fb9cc0c | |||

| c58e1f90e7 | |||

| d363f148f0 | |||

| cbf96a2e67 | |||

| 4d87c3ec6a | |||

| c13c52d733 | |||

| dbf8142fe0 | |||

| bacf6c96c2 | |||

| c9d49da8f7 | |||

| 7b22edac60 | |||

| fc309f69b9 | |||

| 7efb5cf74e | |||

| 8e200197c5 | |||

| fe98f67e08 | |||

| 0b1edd9716 | |||

| e638dc075c | |||

| 559b160886 | |||

| 571b8769ac | |||

| e4bd2148ce | |||

| 1637bd8774 | |||

| ce33582d3d | |||

| bc6b592fd9 | |||

| 24ae6b966f | |||

| f4de3d2899 | |||

| 4cacb07ec2 | |||

| 2371a9b041 | |||

| 5b7403ae80 | |||

| e979b8643d | |||

| 05b4f167a3 | |||

| 2c4245e023 | |||

| d54ee252ee | |||

| 85eec0b98c | |||

| 41a988d99a | |||

| 448da3d481 | |||

| b030299547 | |||

| 5bdbfda1e2 | |||

| 047cfb21f3 | |||

| 35a2497a38 | |||

| 99630f83c2 | |||

| 1757f2707c | |||

| 66c44d715c | |||

| 8f7855013a | |||

| e200be4e57 | |||

| d0b734bc91 | |||

| 399d5c5c5d | |||

| 1b88049cb0 | |||

| 0304bf05c1 | |||

| 94173cbb06 | |||

| 75447280e4 | |||

| 5edff8b7e4 | |||

| 487351d343 | |||

| 93311a9d9b | |||

| 704030230f | |||

| 60bce8f049 | |||

| e394cb7ddb | |||

| a0e4fb01af | |||

| eb9190efa1 | |||

| 8cc37d6f59 | |||

| 6cc9fe3d06 | |||

| 0acf423450 | |||

| 7958786b4c | |||

| 719f3a9dd8 | |||

| 71efd84113 | |||

| 25e46a99fd | |||

| 2531849b73 | |||

| 19f11f99ce | |||

| 87f978e816 | |||

| 7488eb8c9e | |||

| 0a4a604c28 | |||

| 973cb2de1c |

@ -1,2 +1,3 @@

|

||||

venv/

|

||||

pr_agent/settings/.secrets.toml

|

||||

pics/

|

||||

4

.github/workflows/review.yaml

vendored

@ -8,9 +8,9 @@ jobs:

|

||||

steps:

|

||||

- name: PR Agent action step

|

||||

id: pragent

|

||||

uses: Codium-ai/pr-agent@feature/github_action

|

||||

uses: Codium-ai/pr-agent@main

|

||||

env:

|

||||

OPENAI_KEY: ${{ secrets.OPENAI_KEY }}

|

||||

OPENAI_ORG: ${{ secrets.OPENAI_ORG }}

|

||||

OPENAI_ORG: ${{ secrets.OPENAI_ORG }} # optional

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

|

||||

|

||||

19

CONFIGURATION.md

Normal file

@ -0,0 +1,19 @@

|

||||

## Configuration

|

||||

|

||||

The different tools and sub-tools used by CodiumAI pr-agent are easily configurable via the configuration file: `/pr-agent/settings/configuration.toml`.

|

||||

##### Git Provider:

|

||||

You can select your git_provider with the flag `git_provider` in the `config` section

|

||||

|

||||

##### PR Reviewer:

|

||||

|

||||

You can enable/disable the different PR Reviewer abilities with the following flags (`pr_reviewer` section):

|

||||

```

|

||||

require_focused_review=true

|

||||

require_score_review=true

|

||||

require_tests_review=true

|

||||

require_security_review=true

|

||||

```

|

||||

You can control the number of suggestions returned by the PR Reviewer with the following flag:

|

||||

```inline_code_comments=3```

|

||||

And enable/disable the inline code suggestions with the following flag:

|

||||

```inline_code_comments=true```

|

||||

1

Dockerfile.github_action_dockerhub

Normal file

@ -0,0 +1 @@

|

||||

FROM codiumai/pr-agent:github_action

|

||||

218

INSTALL.md

Normal file

@ -0,0 +1,218 @@

|

||||

|

||||

## Installation

|

||||

|

||||

---

|

||||

|

||||

#### Method 1: Use Docker image (no installation required)

|

||||

|

||||

To request a review for a PR, or ask a question about a PR, you can run directly from the Docker image. Here's how:

|

||||

|

||||

1. To request a review for a PR, run the following command:

|

||||

|

||||

```

|

||||

docker run --rm -it -e OPENAI.KEY=<your key> -e GITHUB.USER_TOKEN=<your token> codiumai/pr-agent --pr_url <pr_url> review

|

||||

```

|

||||

|

||||

2. To ask a question about a PR, run the following command:

|

||||

|

||||

```

|

||||

docker run --rm -it -e OPENAI.KEY=<your key> -e GITHUB.USER_TOKEN=<your token> codiumai/pr-agent --pr_url <pr_url> ask "<your question>"

|

||||

```

|

||||

|

||||

Possible questions you can ask include:

|

||||

|

||||

- What is the main theme of this PR?

|

||||

- Is the PR ready for merge?

|

||||

- What are the main changes in this PR?

|

||||

- Should this PR be split into smaller parts?

|

||||

- Can you compose a rhymed song about this PR?

|

||||

|

||||

---

|

||||

|

||||

#### Method 2: Run as a GitHub Action

|

||||

|

||||

You can use our pre-built GitHub Action Docker image to run PR-Agent as a GitHub Action.

|

||||

|

||||

1. Add the following file to your repository under `.github/workflows/pr_agent.yml`:

|

||||

|

||||

```yaml
on:
  pull_request:
  issue_comment:
jobs:
  pr_agent_job:
    runs-on: ubuntu-latest
    name: Run pr agent on every pull request, respond to user comments
    steps:
      - name: PR Agent action step
        id: pragent
        uses: Codium-ai/pr-agent@main
        env:
          OPENAI_KEY: ${{ secrets.OPENAI_KEY }}
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
```

|

||||

|

||||

2. Add the following secret to your repository under `Settings > Secrets`:

|

||||

|

||||

```

|

||||

OPENAI_KEY: <your key>

|

||||

```

|

||||

|

||||

The GITHUB_TOKEN secret is automatically created by GitHub.

|

||||

|

||||

3. Merge this change to your main branch.

|

||||

When you open your next PR, you should see a comment from `github-actions` bot with a review of your PR, and instructions on how to use the rest of the tools.

|

||||

|

||||

4. You may configure PR-Agent by adding environment variables under the env section corresponding to any configurable property in the [configuration](./CONFIGURATION.md) file. Some examples:

|

||||

```yaml
      env:
        # ... previous environment values
        OPENAI.ORG: "<Your organization name under your OpenAI account>"
        PR_REVIEWER.REQUIRE_TESTS_REVIEW: "false" # Disable tests review
        PR_CODE_SUGGESTIONS.NUM_CODE_SUGGESTIONS: 6 # Increase number of code suggestions
```
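
The `SECTION.KEY` naming used in step 4 can be pictured as an overlay on top of default settings. The following is a minimal sketch of that idea under stated assumptions: the default values, `_coerce`, and `apply_env_overrides` are hypothetical names and numbers chosen for illustration, and PR-Agent's real settings loader may behave differently.

```python
import copy

# Illustrative defaults only. The section and key names mirror the examples
# above; the default values themselves are made up for this sketch.
DEFAULTS = {
    "pr_reviewer": {"require_tests_review": True},
    "pr_code_suggestions": {"num_code_suggestions": 4},
}


def _coerce(raw: str):
    """Turn an environment string into a bool or int when it looks like one."""
    lowered = raw.strip().lower()
    if lowered in ("true", "false"):
        return lowered == "true"
    try:
        return int(raw)
    except ValueError:
        return raw


def apply_env_overrides(defaults: dict, environ: dict) -> dict:
    """Overlay SECTION.KEY entries from environ onto a copy of defaults."""
    settings = copy.deepcopy(defaults)
    for name, raw in environ.items():
        section, sep, key = name.partition(".")
        if not sep or not section or not key:
            continue  # not in SECTION.KEY form, so ignore it
        settings.setdefault(section.lower(), {})[key.lower()] = _coerce(raw)
    return settings


env = {
    "PR_REVIEWER.REQUIRE_TESTS_REVIEW": "false",
    "PR_CODE_SUGGESTIONS.NUM_CODE_SUGGESTIONS": "6",
    "PATH": "/usr/bin",  # unrelated variable, left untouched
}
settings = apply_env_overrides(DEFAULTS, env)
print(settings)
```

Variables without a dot, such as `PATH`, are skipped, and the defaults dictionary itself is never modified.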

|

||||

|

||||

---

|

||||

|

||||

#### Method 3: Run from source

|

||||

|

||||

1. Clone this repository:

|

||||

|

||||

```

|

||||

git clone https://github.com/Codium-ai/pr-agent.git

|

||||

```

|

||||

|

||||

2. Install the requirements in your favorite virtual environment:

|

||||

|

||||

```

|

||||

pip install -r requirements.txt

|

||||

```

|

||||

|

||||

3. Copy the secrets template file and fill in your OpenAI key and your GitHub user token:

|

||||

|

||||

```

|

||||

cp pr_agent/settings/.secrets_template.toml pr_agent/settings/.secrets.toml

|

||||

# Edit .secrets.toml file

|

||||

```

|

||||

|

||||

4. Add the pr_agent folder to your PYTHONPATH, then run the cli.py script:

|

||||

|

||||

```

|

||||

export PYTHONPATH=[$PYTHONPATH:]<PATH to pr_agent folder>

|

||||

python pr_agent/cli.py --pr_url <pr_url> review

|

||||

python pr_agent/cli.py --pr_url <pr_url> ask <your question>

|

||||

python pr_agent/cli.py --pr_url <pr_url> describe

|

||||

python pr_agent/cli.py --pr_url <pr_url> improve

|

||||

```

|

||||

|

||||

---

|

||||

|

||||

#### Method 4: Run as a polling server

|

||||

Request reviews by tagging your GitHub user on a PR.

|

||||

|

||||

Follow steps 1-3 of [Method 3](#method-3-run-from-source).

|

||||

Run the following command to start the server:

|

||||

|

||||

```

|

||||

python pr_agent/servers/github_polling.py

|

||||

```

|

||||

|

||||

---

|

||||

|

||||

#### Method 5: Run as a GitHub App

|

||||

This allows you to automate the review process on your private or public repositories.

|

||||

|

||||

1. Create a GitHub App from the [GitHub Developer Portal](https://docs.github.com/en/developers/apps/creating-a-github-app).

|

||||

|

||||

- Set the following permissions:

|

||||

- Pull requests: Read & write

|

||||

- Issue comment: Read & write

|

||||

- Metadata: Read-only

|

||||

- Set the following events:

|

||||

- Issue comment

|

||||

- Pull request

|

||||

|

||||

2. Generate a random secret for your app, and save it for later. For example, you can use:

|

||||

|

||||

```

|

||||

WEBHOOK_SECRET=$(python -c "import secrets; print(secrets.token_hex(10))")

|

||||

```

|

||||

|

||||

3. Acquire the following pieces of information from your app's settings page:

|

||||

|

||||

- App private key (click "Generate a private key" and save the file)

|

||||

- App ID

|

||||

|

||||

4. Clone this repository:

|

||||

|

||||

```

|

||||

git clone https://github.com/Codium-ai/pr-agent.git

|

||||

```

|

||||

|

||||

5. Copy the secrets template file and fill in the following:

|

||||

```

|

||||

cp pr_agent/settings/.secrets_template.toml pr_agent/settings/.secrets.toml

|

||||

# Edit .secrets.toml file

|

||||

```

|

||||

- Your OpenAI key.

|

||||

- Copy your app's private key to the private_key field.

|

||||

- Copy your app's ID to the app_id field.

|

||||

- Copy your app's webhook secret to the webhook_secret field.

|

||||

- Set deployment_type to 'app' in [configuration.toml](./pr_agent/settings/configuration.toml)

|

||||

|

||||

> The .secrets.toml file is not copied to the Docker image by default, and is only used for local development.

|

||||

> If you want to use the .secrets.toml file in your Docker image, you can remove it from the .dockerignore file.

|

||||

> In most production environments, you would inject the secrets file as environment variables or as mounted volumes.

|

||||

> For example, in order to inject a secrets file as a volume in a Kubernetes environment you can update your pod spec to include the following,

|

||||

> assuming you have a secret named `pr-agent-settings` with a key named `.secrets.toml`:

|

||||

```
volumes:
  - name: settings-volume
    secret:
      secretName: pr-agent-settings
# ...
containers:
# ...
    volumeMounts:
      - mountPath: /app/pr_agent/settings_prod
        name: settings-volume
```

|

||||

|

||||

> Another option is to set the secrets as environment variables in your deployment environment, for example `OPENAI.KEY` and `GITHUB.USER_TOKEN`.

|

||||

|

||||

6. Build a Docker image for the app and optionally push it to a Docker repository. We'll use Dockerhub as an example:

|

||||

|

||||

```

|

||||

docker build . -t codiumai/pr-agent:github_app --target github_app -f docker/Dockerfile

|

||||

docker push codiumai/pr-agent:github_app # Push to your Docker repository

|

||||

```

|

||||

|

||||

7. Host the app using a server, serverless function, or container environment. Alternatively, for development and

|

||||

debugging, you may use tools like smee.io to forward webhooks to your local machine.

|

||||

You can check [Deploy as a Lambda Function](#deploy-as-a-lambda-function)

|

||||

|

||||

8. Go back to your app's settings, and set the following:

|

||||

|

||||

- Webhook URL: The URL of your app's server or the URL of the smee.io channel.

|

||||

- Webhook secret: The secret you generated earlier.

|

||||

|

||||

9. Install the app by navigating to the "Install App" tab and selecting your desired repositories.
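
The webhook secret from step 2 is what lets a server confirm that a delivery really came from GitHub: GitHub signs each payload with HMAC-SHA256 and sends the result in the `X-Hub-Signature-256` header as `sha256=<hex digest>`. Below is a minimal sketch of that check; `sign_payload` and `is_valid_signature` are illustrative names, and this is not necessarily how the PR-Agent server implements it.

```python
import hashlib
import hmac
import secrets


def sign_payload(secret: str, payload: bytes) -> str:
    """Compute the header value GitHub would send for this payload."""
    digest = hmac.new(secret.encode("utf-8"), payload, hashlib.sha256).hexdigest()
    return f"sha256={digest}"


def is_valid_signature(secret: str, payload: bytes, header_value: str) -> bool:
    """Compare signatures in constant time to avoid timing leaks."""
    expected = sign_payload(secret, payload)
    return hmac.compare_digest(expected, header_value)


# Same generation call as in step 2.
webhook_secret = secrets.token_hex(10)

body = b'{"action": "opened"}'
header = sign_payload(webhook_secret, body)

untampered_ok = is_valid_signature(webhook_secret, body, header)
tampered_ok = is_valid_signature(webhook_secret, body + b" ", header)
print(untampered_ok, tampered_ok)
```

The check must run against the raw request body bytes, before any JSON parsing, because re-serializing the payload can change its bytes and invalidate the signature.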

|

||||

|

||||

---

|

||||

|

||||

#### Deploy as a Lambda Function

|

||||

|

||||

1. Follow steps 1-5 of [Method 5](#method-5-run-as-a-github-app).

|

||||

2. Build a Docker image that can be used as a Lambda function:

|

||||

```shell

|

||||

docker buildx build --platform=linux/amd64 . -t codiumai/pr-agent:serverless -f docker/Dockerfile.lambda

|

||||

```

|

||||

3. Push image to ECR

|

||||

```shell

|

||||

docker tag codiumai/pr-agent:serverless <AWS_ACCOUNT>.dkr.ecr.<AWS_REGION>.amazonaws.com/codiumai/pr-agent:serverless

|

||||

docker push <AWS_ACCOUNT>.dkr.ecr.<AWS_REGION>.amazonaws.com/codiumai/pr-agent:serverless

|

||||

```

|

||||

4. Create a lambda function that uses the uploaded image. Set the lambda timeout to be at least 3m.

|

||||

5. Configure the lambda function to have a Function URL.

|

||||

6. Go back to steps 8-9 of [Method 5](#method-5-run-as-a-github-app) with the function url as your Webhook URL.

|

||||

The Webhook URL would look like `https://<LAMBDA_FUNCTION_URL>/api/v1/github_webhooks`

|

||||

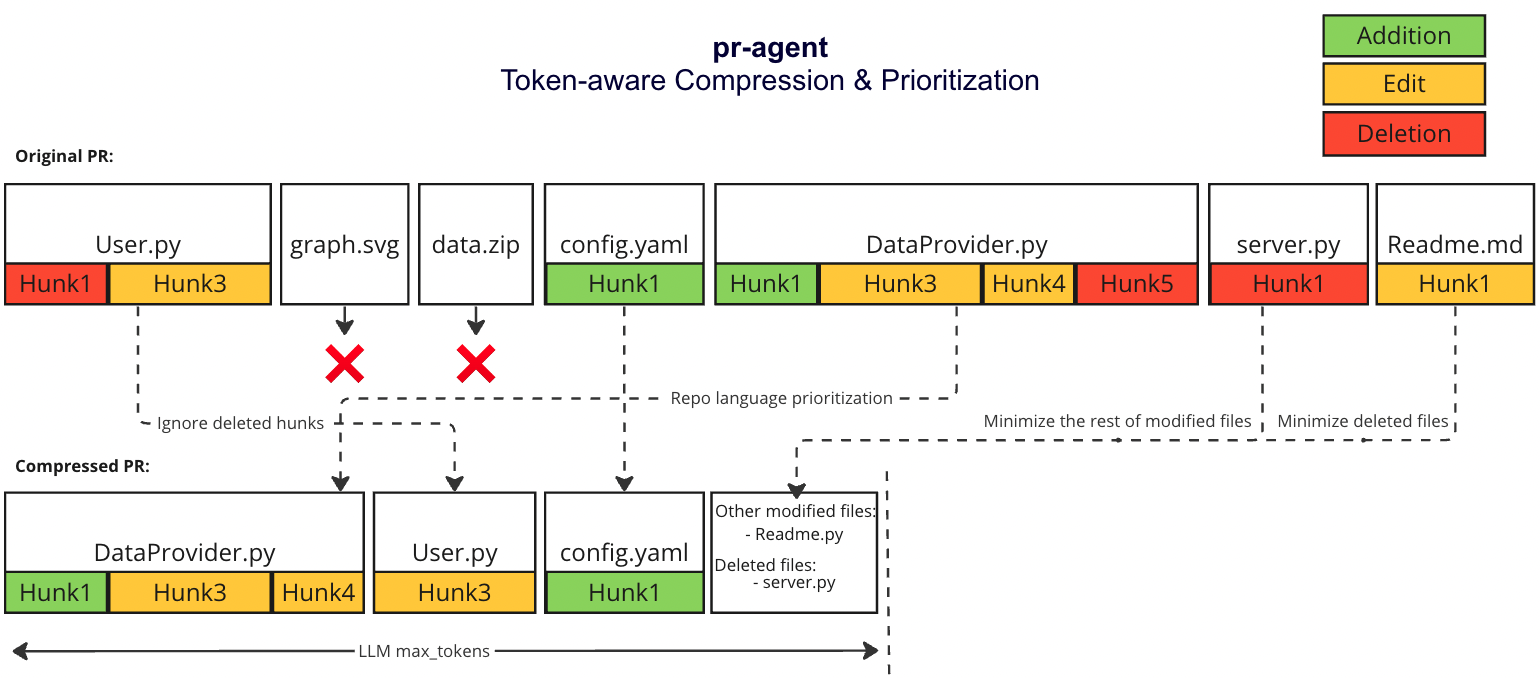

@ -39,4 +39,4 @@ We use [tiktoken](https://github.com/openai/tiktoken) to tokenize the patches af

|

||||

4. If we haven't reached the max token length, add the `deleted files` to the prompt until the prompt reaches the max token length (hard stop), skip the rest of the patches.
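
The hard-stop behaviour in step 4 can be sketched as a greedy loop over the deleted files. This is an illustration under stated assumptions: `count_tokens` is a whitespace-based stand-in for the tiktoken tokenizer mentioned above, and `fit_deleted_files` is a hypothetical helper rather than the project's actual function.

```python
def count_tokens(text: str) -> int:
    """Stand-in tokenizer: counts whitespace-separated words, not real tokens."""
    return len(text.split())


def fit_deleted_files(deleted_files, used_tokens, max_tokens):
    """Add deleted file names until the budget is hit, then stop for good.

    Returns (included, skipped, used_tokens).
    """
    included, skipped = [], []
    for index, filename in enumerate(deleted_files):
        cost = count_tokens(filename)
        if used_tokens + cost > max_tokens:
            # Hard stop: this entry and everything after it is skipped.
            skipped = list(deleted_files[index:])
            break
        included.append(filename)
        used_tokens += cost
    return included, skipped, used_tokens


deleted = ["old/a.py", "old/b.py", "old/c.py"]
included, skipped, used = fit_deleted_files(deleted, used_tokens=8, max_tokens=10)
print(included, skipped, used)
```

With a budget of 10 tokens and 8 already used, two file names fit and the third is skipped.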

|

||||

|

||||

### Example

|

||||

|

||||

|

||||

343

README.md

@ -2,268 +2,161 @@

|

||||

|

||||

<div align="center">

|

||||

|

||||

<img src="./pics/logo-dark.png#gh-dark-mode-only" width="250"/>

|

||||

<img src="./pics/logo-light.png#gh-light-mode-only" width="250"/>

|

||||

|

||||

<img src="./pics/logo-dark.png#gh-dark-mode-only" width="330"/>

|

||||

<img src="./pics/logo-light.png#gh-light-mode-only" width="330"/><br/>

|

||||

Making pull requests less painful with an AI agent

|

||||

</div>

|

||||

|

||||

[](https://github.com/Codium-ai/pr-agent/blob/main/LICENSE)

|

||||

[](https://discord.com/channels/1057273017547378788/1126104260430528613)

|

||||

|

||||

CodiumAI `pr-agent` is an open-source tool aiming to help developers review PRs faster and more efficiently. It automatically analyzes the PR, provides feedback and suggestions, and can answer free-text questions.

|

||||

|

||||

<a href="https://github.com/Codium-ai/pr-agent/commits/main">

|

||||

<img alt="GitHub" src="https://img.shields.io/github/last-commit/Codium-ai/pr-agent/main?style=for-the-badge" height="20">

|

||||

</a>

|

||||

</div>

|

||||

<div style="text-align:left;">

|

||||

|

||||

- [Live demo](#live-demo)

|

||||

- [Quickstart](#Quickstart)

|

||||

CodiumAI `PR-Agent` is an open-source tool aiming to help developers review pull requests faster and more efficiently. It automatically analyzes the pull request and can provide several types of feedback:

|

||||

|

||||

**Auto-Description**: Automatically generating PR description - title, type, summary, code walkthrough and PR labels.

|

||||

\

|

||||

**PR Review**: Adjustable feedback about the PR main theme, type, relevant tests, security issues, focus, score, and various suggestions for the PR content.

|

||||

\

|

||||

**Question Answering**: Answering free-text questions about the PR.

|

||||

\

|

||||

**Code Suggestion**: Committable code suggestions for improving the PR.

|

||||

|

||||

<h3>Example results:</h3>

|

||||

</div>

|

||||

<h4>/describe:</h4>

|

||||

<div align="center">

|

||||

<p float="center">

|

||||

<img src="https://www.codium.ai/images/describe-2.gif" width="800">

|

||||

</p>

|

||||

</div>

|

||||

<h4>/review:</h4>

|

||||

<div align="center">

|

||||

<p float="center">

|

||||

<img src="https://www.codium.ai/images/review-2.gif" width="800">

|

||||

</p>

|

||||

</div>

|

||||

<h4>/reflect_and_review:</h4>

|

||||

<div align="center">

|

||||

<p float="center">

|

||||

<img src="https://www.codium.ai/images/reflect_and_review.gif" width="800">

|

||||

</p>

|

||||

</div>

|

||||

<h4>/ask:</h4>

|

||||

<div align="center">

|

||||

<p float="center">

|

||||

<img src="https://www.codium.ai/images/ask-2.gif" width="800">

|

||||

</p>

|

||||

</div>

|

||||

<h4>/improve:</h4>

|

||||

<div align="center">

|

||||

<p float="center">

|

||||

<img src="https://www.codium.ai/images/improve-2.gif" width="800">

|

||||

</p>

|

||||

</div>

|

||||

<div align="left">

|

||||

|

||||

|

||||

- [Overview](#overview)

|

||||

- [Try it now](#try-it-now)

|

||||

- [Installation](#installation)

|

||||

- [Usage and tools](#usage-and-tools)

|

||||

- [Configuration](#Configuration)

|

||||

- [Configuration](./CONFIGURATION.md)

|

||||

- [How it works](#how-it-works)

|

||||

- [Roadmap](#roadmap)

|

||||

- [Similar projects](#similar-projects)

|

||||

</div>

|

||||

|

||||

## Live demo

|

||||

|

||||

Experience GPT-4 powered PR review on your public GitHub repository with our hosted pr-agent. To try it, just mention `@CodiumAI-Agent` in any PR comment! The agent will generate a PR review in response.

|

||||

## Overview

|

||||

`PR-Agent` offers extensive pull request functionalities across various git providers:

|

||||

|       |                                             | GitHub | Gitlab | Bitbucket |
|-------|---------------------------------------------|:------:|:------:|:---------:|
| TOOLS | Review | :white_check_mark: | :white_check_mark: | :white_check_mark: |
| | ⮑ Inline review | :white_check_mark: | :white_check_mark: | |
| | Ask | :white_check_mark: | :white_check_mark: | |
| | Auto-Description | :white_check_mark: | :white_check_mark: | |
| | Improve Code | :white_check_mark: | :white_check_mark: | |
| | Reflect and Review | :white_check_mark: | | |
| | | | | |
| USAGE | CLI | :white_check_mark: | :white_check_mark: | :white_check_mark: |
| | App / webhook | :white_check_mark: | :white_check_mark: | |
| | Tagging bot | :white_check_mark: | | |
| | Actions | :white_check_mark: | | |
| | | | | |
| CORE | PR compression | :white_check_mark: | :white_check_mark: | :white_check_mark: |
| | Repo language prioritization | :white_check_mark: | :white_check_mark: | :white_check_mark: |
| | Adaptive and token-aware<br />file patch fitting | :white_check_mark: | :white_check_mark: | :white_check_mark: |
| | Incremental PR Review | :white_check_mark: | | |

|

||||

|

||||

|

||||

Examples for invoking the different tools via the CLI:

|

||||

- **Review**: `python cli.py --pr_url=<pr_url> review`
- **Describe**: `python cli.py --pr_url=<pr_url> describe`
- **Improve**: `python cli.py --pr_url=<pr_url> improve`
- **Ask**: `python cli.py --pr_url=<pr_url> ask "Write me a poem about this PR"`
- **Reflect**: `python cli.py --pr_url=<pr_url> reflect`

|

||||

|

||||

To set up your own pr-agent, see the [Quickstart](#Quickstart) section

|

||||

"<pr_url>" is the url of the relevant PR (for example: https://github.com/Codium-ai/pr-agent/pull/50).

|

||||

|

||||

In the [configuration](./CONFIGURATION.md) file you can select your git provider (GitHub, Gitlab, Bitbucket), and further configure the different tools.

|

||||

|

||||

## Try it now

|

||||

|

||||

Try GPT-4 powered PR-Agent on your public GitHub repository for free. Just mention `@CodiumAI-Agent` and add the desired command in any PR comment! The agent will generate a response based on your command.

|

||||

|

||||

|

||||

|

||||

To set up your own PR-Agent, see the [Installation](#installation) section

|

||||

|

||||

---

|

||||

|

||||

## Quickstart

|

||||

## Installation

|

||||

|

||||

To get started with pr-agent quickly, you first need to acquire two tokens:

|

||||

To get started with PR-Agent quickly, you first need to acquire two tokens:

|

||||

|

||||

1. An OpenAI key from [here](https://platform.openai.com/), with access to GPT-4.

|

||||

2. A GitHub personal access token (classic) with the repo scope.

|

||||

|

||||

There are several ways to use pr-agent. Let's start with the simplest one:

|

||||

There are several ways to use PR-Agent:

|

||||

|

||||

---

|

||||

|

||||

#### Method 1: Use Docker image (no installation required)

|

||||

|

||||

To request a review for a PR, or ask a question about a PR, you can run directly from the Docker image. Here's how:

|

||||

|

||||

1. To request a review for a PR, run the following command:

|

||||

|

||||

```

|

||||

docker run --rm -it -e OPENAI.KEY=<your key> -e GITHUB.USER_TOKEN=<your token> codiumai/pr-agent --pr_url <pr url>

|

||||

```

|

||||

|

||||

2. To ask a question about a PR, run the following command:

|

||||

|

||||

```

|

||||

docker run --rm -it -e OPENAI.KEY=<your key> -e GITHUB.USER_TOKEN=<your token> codiumai/pr-agent --pr_url <pr url> --question "<your question>"

|

||||

```

|

||||

|

||||

Possible questions you can ask include:

|

||||

|

||||

- What is the main theme of this PR?

|

||||

- Is the PR ready for merge?

|

||||

- What are the main changes in this PR?

|

||||

- Should this PR be split into smaller parts?

|

||||

- Can you compose a rhymed song about this PR.

|

||||

|

||||

---

|

||||

|

||||

#### Method 2: Run from source

|

||||

|

||||

1. Clone this repository:

|

||||

|

||||

```

|

||||

git clone https://github.com/Codium-ai/pr-agent.git

|

||||

```

|

||||

|

||||

2. Install the requirements in your favorite virtual environment:

|

||||

|

||||

```

|

||||

pip install -r requirements.txt

|

||||

```

|

||||

|

||||

3. Copy the secrets template file and fill in your OpenAI key and your GitHub user token:

|

||||

|

||||

```

|

||||

cp pr_agent/settings/.secrets_template.toml pr_agent/settings/.secrets.toml

|

||||

# Edit .secrets.toml file

|

||||

```

|

||||

|

||||

4. Run the appropriate Python scripts from the scripts folder:

|

||||

|

||||

```

|

||||

python pr_agent/cli.py --pr_url <pr url>

|

||||

python pr_agent/cli.py --pr_url <pr url> --question "<your question>"

|

||||

```

|

||||

|

||||

---

|

||||

|

||||

#### Method 3: Method 3: Run as a polling server; request reviews by tagging your Github user on a PR

|

||||

|

||||

Follow steps 1-3 of method 2.

|

||||

Run the following command to start the server:

|

||||

|

||||

```

|

||||

python pr_agent/servers/github_polling.py

|

||||

```

|

||||

|

||||

---

|

||||

|

||||

#### Method 4: Run as a Github App, allowing you to automate the review process on your private or public repositories.

|

||||

|

||||

1. Create a GitHub App from the [Github Developer Portal](https://docs.github.com/en/developers/apps/creating-a-github-app).

|

||||

|

||||

- Set the following permissions:

|

||||

- Pull requests: Read & write

|

||||

- Issue comment: Read & write

|

||||

- Metadata: Read-only

|

||||

- Set the following events:

|

||||

- Issue comment

|

||||

- Pull request

|

||||

|

||||

2. Generate a random secret for your app, and save it for later. For example, you can use:

|

||||

|

||||

```

|

||||

WEBHOOK_SECRET=$(python -c "import secrets; print(secrets.token_hex(10))")

|

||||

```

|

||||

|

||||

3. Acquire the following pieces of information from your app's settings page:

|

||||

|

||||

- App private key (click "Generate a private key", and save the file)

|

||||

- App ID

|

||||

|

||||

4. Clone this repository:

|

||||

|

||||

```

|

||||

git clone https://github.com/Codium-ai/pr-agent.git

|

||||

```

|

||||

|

||||

5. Copy the secrets template file and fill in the following:

|

||||

- Your OpenAI key.

|

||||

- Set deployment_type to 'app'

|

||||

- Copy your app's private key to the private_key field.

|

||||

- Copy your app's ID to the app_id field.

|

||||

- Copy your app's webhook secret to the webhook_secret field.

|

||||

|

||||

```

|

||||

cp pr_agent/settings/.secrets_template.toml pr_agent/settings/.secrets.toml

|

||||

# Edit .secrets.toml file

|

||||

```

|

||||

|

||||

6. Build a Docker image for the app and optionally push it to a Docker repository. We'll use Dockerhub as an example:

|

||||

|

||||

```

|

||||

docker build . -t codiumai/pr-agent:github_app --target github_app -f docker/Dockerfile

|

||||

docker push codiumai/pr-agent:github_app # Push to your Docker repository

|

||||

```

|

||||

|

||||

7. Host the app using a server, serverless function, or container environment. Alternatively, for development and

|

||||

debugging, you may use tools like smee.io to forward webhooks to your local machine.

|

||||

|

||||

8. Go back to your app's settings, set the following:

|

||||

|

||||

- Webhook URL: The URL of your app's server, or the URL of the smee.io channel.

|

||||

- Webhook secret: The secret you generated earlier.

|

||||

|

||||

9. Install the app by navigating to the "Install App" tab, and selecting your desired repositories.

|

||||

|

||||

---

|

||||

- [Method 1: Use Docker image (no installation required)](INSTALL.md#method-1-use-docker-image-no-installation-required)

|

||||

- [Method 2: Run as a GitHub Action](INSTALL.md#method-2-run-as-a-github-action)

|

||||

- [Method 3: Run from source](INSTALL.md#method-3-run-from-source)

|

||||

- [Method 4: Run as a polling server](INSTALL.md#method-4-run-as-a-polling-server)

|

||||

- Request reviews by tagging your GitHub user on a PR

|

||||

- [Method 5: Run as a GitHub App](INSTALL.md#method-5-run-as-a-github-app)

|

||||

- Allowing you to automate the review process on your private or public repositories

|

||||

|

||||

## Usage and Tools

|

||||

|

||||

CodiumAI pr-agent provides two types of interactions ("tools"): `"PR Reviewer"` and `"PR Q&A"`.

|

||||

**PR-Agent** provides five types of interactions ("tools"): `"PR Reviewer"`, `"PR Q&A"`, `"PR Description"`, `"PR Code Suggestions"` and `"PR Reflect and Review"`.

|

||||

|

||||

- The "PR Reviewer" tool automatically analyzes PRs, and provides different types of feedbacks.

|

||||

- The "PR Reviewer" tool automatically analyzes PRs, and provides various types of feedback.

|

||||

- The "PR Q&A" tool answers free-text questions about the PR.

|

||||

|

||||

### PR Reviewer

|

||||

|

||||

Here is a quick overview of the different sub-tools of PR Reviewer:

|

||||

|

||||

- PR Analysis

|

||||

- Summarize main theme

|

||||

- PR type classification

|

||||

- Is the PR covered by relevant tests

|

||||

- Is this a focused PR

|

||||

- Are there security concerns

|

||||

- PR Feedback

|

||||

- General PR suggestions

|

||||

- Code suggestions

|

||||

|

||||

This is how a typical output of the PR Reviewer looks like:

|

||||

|

||||

---

|

||||

|

||||

#### PR Analysis

|

||||

|

||||

- 🎯 **Main theme:** Adding language extension handler and token handler

|

||||

- 📌 **Type of PR:** Enhancement

|

||||

- 🧪 **Relevant tests added:** No

|

||||

- ✨ **Focused PR:** Yes, the PR is focused on adding two new handlers for language extension and token counting.

|

||||

- 🔒 **Security concerns:** No, the PR does not introduce possible security concerns or issues.

|

||||

|

||||

#### PR Feedback

|

||||

|

||||

- 💡 **General PR suggestions:** The PR is generally well-structured and the code is clean. However, it would be beneficial to add some tests to ensure the new handlers work as expected. Also, consider adding docstrings to the new functions and classes to improve code readability and maintainability.

|

||||

|

||||

- 🤖 **Code suggestions:**

|

||||

|

||||

- **relevant file:** pr_agent/algo/language_handler.py

|

||||

|

||||

**suggestion content:** Consider using a set instead of a list for 'bad_extensions' as checking membership in a set is faster than in a list. [medium]

|

||||

|

||||

- **relevant file:** pr_agent/algo/language_handler.py

|

||||

|

||||

**suggestion content:** In the 'filter_bad_extensions' function, you are splitting the filename on '.' and taking the last element to get the extension. This might not work as expected if the filename contains multiple '.' characters. Consider using 'os.path.splitext' to get the file extension more reliably. [important]

|

||||

|

||||

---

|

||||

|

||||

### PR Q&A

|

||||

|

||||

This tool answers free-text questions about the PR. This is how a typical output of the PR Q&A looks like:

|

||||

|

||||

**Question**: summarize for me the PR in 4 bullet points

|

||||

|

||||

**Answer**:

|

||||

|

||||

- The PR introduces a new feature to sort files by their main languages. It uses a mapping of programming languages to their file extensions to achieve this.

|

||||

- It also introduces a filter to exclude files with certain extensions, deemed as 'bad extensions', from the sorting process.

|

||||

- The PR modifies the `get_pr_diff` function in `pr_processing.py` to use the new sorting function. It also refactors the code to move the PR pruning logic into a separate function.

|

||||

- A new `TokenHandler` class is introduced in `token_handler.py` to handle token counting operations. This class is initialized with a PR, variables, system, and user, and provides methods to get system and user tokens and to count tokens in a patch.

|

||||

|

||||

---

|

||||

|

||||

## Configuration

|

||||

|

||||

The different tools and sub-tools used by CodiumAI pr-agent are easily configurable via the configuration file: `/settings/configuration.toml`.

|

||||

|

||||

#### Enabling/disabling sub-tools:

|

||||

|

||||

You can enable/disable the different PR Reviewer sub-sections with the following flags:

|

||||

|

||||

```

|

||||

require_focused_review=true

|

||||

require_tests_review=true

|

||||

require_security_review=true

|

||||

```

|

||||

- The "PR Description" tool automatically sets the PR Title and body.

|

||||

- The "PR Code Suggestion" tool provides inline code suggestions for the PR that can be applied and committed.

|

||||

- The "PR Reflect and Review" tool initiates a dialog with the user, asks them to reflect on the PR, and then provides a more focused review.

|

||||

|

||||

## How it works

|

||||

|

||||

|

||||

|

||||

|

||||

Check out the [PR Compression strategy](./PR_COMPRESSION.md) page for more details on how we convert a code diff to a manageable LLM prompt

|

||||

|

||||

## Roadmap

|

||||

|

||||

- [ ] Support open-source models, as a replacement for openai models. Note that a minimal requirement for each open-source model is to have 8k+ context, and good support for generating json as an output

|

||||

- [ ] Support other Git providers, such as Gitlab and Bitbucket.

|

||||

- [ ] Develop additional logics for handling large PRs, and compressing git patches

|

||||

- [ ] Dedicated tools and sub-tools for specific programming languages (Python, Javascript, Java, C++, etc)

|

||||

- [ ] Support open-source models, as a replacement for OpenAI models. (Note - a minimal requirement for each open-source model is to have 8k+ context, and good support for generating JSON as an output)

|

||||

- [x] Support other Git providers, such as Gitlab and Bitbucket.

|

||||

- [ ] Develop additional logic for handling large PRs, and compressing git patches

|

||||

- [ ] Add additional context to the prompt. For example, repo (or relevant files) summarization, with tools such as [ctags](https://github.com/universal-ctags/ctags)

|

||||

- [ ] Adding more tools. Possible directions:

|

||||

- [ ] Code Quality

|

||||

- [ ] Coding Style

|

||||

- [x] PR description

|

||||

- [x] Inline code suggestions

|

||||

- [x] Reflect and review

|

||||

- [ ] Enforcing CONTRIBUTING.md guidelines

|

||||

- [ ] Performance (are there any performance issues)

|

||||

- [ ] Documentation (is the PR properly documented)

|

||||

- [ ] Rank the PR importance

|

||||

@ -273,6 +166,6 @@ Check out the [PR Compression strategy](./PR_COMPRESSION.md) page for more detai

|

||||

|

||||

- [CodiumAI - Meaningful tests for busy devs](https://github.com/Codium-ai/codiumai-vscode-release)

|

||||

- [Aider - GPT powered coding in your terminal](https://github.com/paul-gauthier/aider)

|

||||

- [GPT-Engineer](https://github.com/AntonOsika/gpt-engineer)

|

||||

- [openai-pr-reviewer](https://github.com/coderabbitai/openai-pr-reviewer)

|

||||

- [CodeReview BOT](https://github.com/anc95/ChatGPT-CodeReview)

|

||||

- [AI-Maintainer](https://github.com/merwanehamadi/AI-Maintainer)

|

||||

|

||||

@ -1,5 +1,8 @@

|

||||

name: 'PR Agent'

|

||||

name: 'Codium PR Agent'

|

||||

description: 'Summarize, review and suggest improvements for pull requests'

|

||||

branding:

|

||||

icon: 'award'

|

||||

color: 'green'

|

||||

runs:

|

||||

using: 'docker'

|

||||

image: 'Dockerfile.github_action'

|

||||

image: 'Dockerfile.github_action_dockerhub'

|

||||

|

||||

12

docker/Dockerfile.lambda

Normal file

@ -0,0 +1,12 @@

|

||||

FROM public.ecr.aws/lambda/python:3.10

|

||||

|

||||

RUN yum update -y && \

|

||||

yum install -y gcc python3-devel && \

|

||||

yum clean all

|

||||

|

||||

ADD requirements.txt .

|

||||

RUN pip install -r requirements.txt && rm requirements.txt

|

||||

RUN pip install mangum==16.0.0

|

||||

COPY pr_agent/ ${LAMBDA_TASK_ROOT}/pr_agent/

|

||||

|

||||

CMD ["pr_agent.servers.serverless.serverless"]

|

||||

BIN

pics/.DS_Store

vendored

BIN

pics/Icon-7.png


@ -1,5 +1,9 @@

|

||||

import re

|

||||

|

||||

from pr_agent.config_loader import settings

|

||||

from pr_agent.tools.pr_code_suggestions import PRCodeSuggestions

|

||||

from pr_agent.tools.pr_description import PRDescription

|

||||

from pr_agent.tools.pr_information_from_user import PRInformationFromUser

|

||||

from pr_agent.tools.pr_questions import PRQuestions

|

||||

from pr_agent.tools.pr_reviewer import PRReviewer

|

||||

|

||||

@ -8,17 +12,22 @@ class PRAgent:

|

||||

def __init__(self):

|

||||

pass

|

||||

|

||||

async def handle_request(self, pr_url, request):

|

||||

if 'please review' in request.lower() or 'review' == request.lower().strip() or len(request) == 0:

|

||||

reviewer = PRReviewer(pr_url)

|

||||

await reviewer.review()

|

||||

async def handle_request(self, pr_url, request) -> bool:

|

||||

action, *args = request.strip().split()

|

||||

if any(cmd == action for cmd in ["/answer"]):

|

||||

await PRReviewer(pr_url, is_answer=True).review()

|

||||

elif any(cmd == action for cmd in ["/review", "/review_pr", "/reflect_and_review"]):

|

||||

if settings.pr_reviewer.ask_and_reflect or "/reflect_and_review" in request:

|

||||

await PRInformationFromUser(pr_url).generate_questions()

|

||||

else:

|

||||

await PRReviewer(pr_url, args=args).review()

|

||||

elif any(cmd == action for cmd in ["/describe", "/describe_pr"]):

|

||||

await PRDescription(pr_url).describe()

|

||||

elif any(cmd == action for cmd in ["/improve", "/improve_code"]):

|

||||

await PRCodeSuggestions(pr_url).suggest()

|

||||

elif any(cmd == action for cmd in ["/ask", "/ask_question"]):

|

||||

await PRQuestions(pr_url, args).answer()

|

||||

else:

|

||||

return False

|

||||

|

||||

else:

|

||||

if "please answer" in request.lower():

|

||||

question = re.split(r'(?i)please answer', request)[1].strip()

|

||||

elif request.lower().strip().startswith("answer"):

|

||||

question = re.split(r'(?i)answer', request)[1].strip()

|

||||

else:

|

||||

question = request

|

||||

answerer = PRQuestions(pr_url, question)

|

||||

await answerer.answer()

|

||||

return True
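The slash-command dispatch in the new `handle_request` can be sketched in isolation as below. The alias strings are copied from the diff; the `parse_request` helper and the tool labels are hypothetical names introduced only for illustration. One detail worth noting: `action, *args = request.strip().split()` raises `ValueError` on an empty request, so this sketch guards against that case explicitly.

```python
from typing import List, Optional, Tuple

# Command aliases as they appear in the diff; the values are illustrative labels.
COMMANDS = {
    "/answer": "answer",
    "/review": "review",
    "/review_pr": "review",
    "/reflect_and_review": "review",
    "/describe": "describe",
    "/describe_pr": "describe",
    "/improve": "improve",
    "/improve_code": "improve",
    "/ask": "ask",
    "/ask_question": "ask",
}


def parse_request(request: str) -> Optional[Tuple[str, List[str]]]:
    """Split a comment into (tool, args); return None if it is not a known command."""
    parts = request.strip().split()
    if not parts:  # an empty request would break `action, *args = ...`
        return None
    action, *args = parts
    tool = COMMANDS.get(action)
    if tool is None:
        return None
    return tool, args


if __name__ == "__main__":
    print(parse_request("/ask what does this PR change?"))
```

Returning `None` for unknown input plays the same role as the `return False` branch in the diff: the caller can tell that no tool was run.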

|

||||

|

||||

@ -1,15 +1,25 @@

|

||||

import logging

|

||||

|

||||

import openai

|

||||

from openai.error import APIError, Timeout, TryAgain

|

||||

from openai.error import APIError, Timeout, TryAgain, RateLimitError

|

||||

from retry import retry

|

||||

|

||||

from pr_agent.config_loader import settings

|

||||

|

||||

OPENAI_RETRIES=2

|

||||

OPENAI_RETRIES=5

|

||||

|

||||

class AiHandler:

|

||||

"""

|

||||

This class handles interactions with the OpenAI API for chat completions.

|

||||

It initializes the API key and other settings from a configuration file,

|

||||

and provides a method for performing chat completions using the OpenAI ChatCompletion API.

|

||||

"""

|

||||

|

||||

def __init__(self):

|

||||

"""

|

||||

Initializes the OpenAI API key and other settings from a configuration file.

|

||||

Raises a ValueError if the OpenAI key is missing.

|

||||

"""

|

||||

try:

|

||||

openai.api_key = settings.openai.key

|

||||

if settings.get("OPENAI.ORG", None):

|

||||

@ -24,9 +34,28 @@ class AiHandler:

|

||||

except AttributeError as e:

|

||||

raise ValueError("OpenAI key is required") from e

|

||||

|

||||

@retry(exceptions=(APIError, Timeout, TryAgain, AttributeError),

|

||||

@retry(exceptions=(APIError, Timeout, TryAgain, AttributeError, RateLimitError),

|

||||

tries=OPENAI_RETRIES, delay=2, backoff=2, jitter=(1, 3))

|

||||

async def chat_completion(self, model: str, temperature: float, system: str, user: str):

|

||||

"""

|

||||

Performs a chat completion using the OpenAI ChatCompletion API.

|

||||

Retries in case of API errors or timeouts.

|

||||

|

||||

Args:

|

||||

model (str): The model to use for chat completion.

|

||||

temperature (float): The temperature parameter for chat completion.

|

||||

system (str): The system message for chat completion.

|

||||

user (str): The user message for chat completion.

|

||||

|

||||

Returns:

|

||||

tuple: A tuple containing the response and finish reason from the API.

|

||||

|

||||

Raises:

|

||||

TryAgain: If the API response is empty or there are no choices in the response.

|

||||

APIError: If there is an error during OpenAI inference.

|

||||

Timeout: If there is a timeout during OpenAI inference.

|

||||

TryAgain: If there is an attribute error during OpenAI inference.

|

||||

"""

|

||||

try:

|

||||

response = await openai.ChatCompletion.acreate(

|

||||

model=model,

|

||||

@ -40,6 +69,12 @@ class AiHandler:

|

||||

except (APIError, Timeout, TryAgain) as e:

|

||||

logging.error("Error during OpenAI inference: %s", e)

|

||||

raise

|

||||

except (RateLimitError) as e:

|

||||

logging.error("Rate limit error during OpenAI inference: %s", e)

|

||||

raise

|

||||

except (Exception) as e:

|

||||

logging.error("Unknown error during OpenAI inference: %s", e)

|

||||

raise TryAgain from e

|

||||

if response is None or len(response.choices) == 0:

|

||||

raise TryAgain

|

||||

resp = response.choices[0]['message']['content']
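To get a feel for what `tries=5, delay=2, backoff=2, jitter=(1, 3)` means in practice, here is a rough sketch of the wait schedule. It assumes a multiply-then-add-jitter scheme, which is how such decorators commonly work; the exact behaviour of the `retry` package may differ in detail, and `backoff_delays` is a hypothetical helper, not part of that package.

```python
import random
from typing import List, Optional, Tuple


def backoff_delays(tries: int, delay: float, backoff: float,
                   jitter: Tuple[float, float] = (0.0, 0.0),
                   rng: Optional[random.Random] = None) -> List[float]:
    """Return the waits between attempts (tries - 1 of them).

    Assumed scheme: after each wait, multiply the delay by `backoff`
    and add a random amount drawn uniformly from `jitter`.
    """
    rng = rng or random.Random()
    waits: List[float] = []
    current = delay
    for _ in range(tries - 1):
        waits.append(current)
        current = current * backoff + rng.uniform(*jitter)
    return waits


if __name__ == "__main__":
    # Values taken from the decorator in the diff.
    print(backoff_delays(tries=5, delay=2, backoff=2, jitter=(1, 3)))
```

Under this assumption, raising `OPENAI_RETRIES` from 2 to 5 turns a single short wait into up to four waits that grow roughly geometrically, which gives a rate-limited request more time to recover.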

|

||||

|

||||

@ -8,7 +8,15 @@ from pr_agent.config_loader import settings

|

||||

|

||||

def extend_patch(original_file_str, patch_str, num_lines) -> str:

|

||||

"""

|

||||

Extends the patch to include 'num_lines' more surrounding lines

|

||||

Extends the given patch to include a specified number of surrounding lines.

|

||||

|

||||

Args:

|

||||

original_file_str (str): The original file to which the patch will be applied.

|

||||

patch_str (str): The patch to be applied to the original file.

|

||||

num_lines (int): The number of surrounding lines to include in the extended patch.

|

||||

|

||||

Returns:

|

||||

str: The extended patch string.

|

||||

"""

|

||||

if not patch_str or num_lines == 0:

|

||||

return patch_str

|

||||

@ -61,6 +69,14 @@ def extend_patch(original_file_str, patch_str, num_lines) -> str:

|

||||

|

||||

|

||||

def omit_deletion_hunks(patch_lines) -> str:

|

||||

"""

|

||||

Omit deletion hunks from the patch and return the modified patch.

|

||||

Args:

|

||||

- patch_lines: a list of strings representing the lines of the patch

|

||||

Returns:

|

||||

- A string representing the modified patch with deletion hunks omitted

|

||||

"""

|

||||

|

||||

temp_hunk = []

|

||||

added_patched = []

|

||||

add_hunk = False
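Since the body of `omit_deletion_hunks` is not shown in this hunk, the following is only a sketch of the idea in its docstring: hunks that contain no added lines are dropped from the patch. The function name and the exact rules are assumptions, not the project's implementation.

```python
from typing import List


def omit_deletion_only_hunks(patch_lines: List[str]) -> str:
    """Drop every hunk that has no '+' lines and return the remaining patch."""
    kept: List[str] = []
    hunk: List[str] = []
    has_addition = False

    def flush() -> None:
        # Keep the buffered hunk only if it added at least one line.
        nonlocal hunk, has_addition
        if hunk and has_addition:
            kept.extend(hunk)
        hunk = []
        has_addition = False

    for line in patch_lines:
        if line.startswith("@@"):
            flush()          # a new hunk header closes the previous hunk
            hunk = [line]
        elif hunk:
            hunk.append(line)
            if line.startswith("+"):
                has_addition = True
        # Lines before the first hunk header are ignored in this sketch.
    flush()
    return "\n".join(kept)


if __name__ == "__main__":
    sample = ["@@ -1,2 +1,1 @@", " a", "-b", "@@ -10,2 +9,3 @@", " c", "+d", " e"]
    print(omit_deletion_only_hunks(sample))
```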

|

||||

@ -93,7 +109,20 @@ def omit_deletion_hunks(patch_lines) -> str:

|

||||

def handle_patch_deletions(patch: str, original_file_content_str: str,

|

||||

new_file_content_str: str, file_name: str) -> str:

|

||||

"""

|

||||

Handle entire file or deletion patches

|

||||

Handle entire file or deletion patches.

|

||||

|

||||

This function takes a patch, original file content, new file content, and file name as input.

|

||||

It handles entire file or deletion patches and returns the modified patch with deletion hunks omitted.

|

||||

|

||||

Args:

|

||||

patch (str): The patch to be handled.

|

||||

original_file_content_str (str): The original content of the file.

|

||||

new_file_content_str (str): The new content of the file.

|

||||

file_name (str): The name of the file.

|

||||

|

||||

Returns:

|

||||

str: The modified patch with deletion hunks omitted.

|

||||

|

||||

"""

|

||||

if not new_file_content_str:

|

||||

# logic for handling deleted files - don't show patch, just show that the file was deleted

|

||||

@ -111,20 +140,26 @@ def handle_patch_deletions(patch: str, original_file_content_str: str,

|

||||

|

||||

|

||||

def convert_to_hunks_with_lines_numbers(patch: str, file) -> str:

|

||||

# TODO: (maybe remove '-' and '+' from the beginning of the line)

|

||||

"""

|

||||

Convert a given patch string into a string with line numbers for each hunk, indicating the new and old content of the file.

|

||||

|

||||

Args:

|

||||

patch (str): The patch string to be converted.

|

||||

file: An object containing the filename of the file being patched.

|

||||

|

||||

Returns:

|

||||

str: A string with line numbers for each hunk, indicating the new and old content of the file.

|

||||

|

||||

example output:

|

||||

## src/file.ts

|

||||

--new hunk--

|

||||

881 line1

|

||||

882 line2

|

||||

883 line3

|

||||

884 line4

|

||||

885 line6

|

||||

886 line7

|

||||

887 + line8

|

||||

888 + line9

|

||||

889 line10

|

||||

890 line11

|

||||

887 + line4

|

||||

888 + line5

|

||||

889 line6

|

||||

890 line7

|

||||

...

|

||||

--old hunk--

|

||||

line1

|

||||

@ -134,8 +169,8 @@ def convert_to_hunks_with_lines_numbers(patch: str, file) -> str:

|

||||

line5

|

||||

line6

|

||||

...

|

||||

|

||||

"""

|

||||

|

||||

patch_with_lines_str = f"## {file.filename}\n"

|

||||

import re

|

||||

patch_lines = patch.splitlines()

|

||||

@ -158,7 +193,7 @@ def convert_to_hunks_with_lines_numbers(patch: str, file) -> str:

|

||||

patch_with_lines_str += f"{start2 + i} {line_new}\n"

|

||||

if old_content_lines:

|

||||

patch_with_lines_str += '--old hunk--\n'

|

||||

for i, line_old in enumerate(old_content_lines):

|

||||

for line_old in old_content_lines:

|

||||

patch_with_lines_str += f"{line_old}\n"

|

||||

new_content_lines = []

|

||||

old_content_lines = []

|

||||

@ -179,7 +214,7 @@ def convert_to_hunks_with_lines_numbers(patch: str, file) -> str:

|

||||

patch_with_lines_str += f"{start2 + i} {line_new}\n"

|

||||

if old_content_lines:

|

||||

patch_with_lines_str += '\n--old hunk--\n'

|

||||

for i, line_old in enumerate(old_content_lines):

|

||||

for line_old in old_content_lines:

|

||||

patch_with_lines_str += f"{line_old}\n"

|

||||

|

||||

return patch_with_lines_str.strip()
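The output format described in the docstring above (a `--new hunk--` section with line numbers, followed by an `--old hunk--` section without them) can be reproduced with a simplified sketch. This is not the function from the diff: `number_hunks` is a hypothetical name, it takes the filename as a plain string, and unlike the real code it always emits the old hunk, including context lines.

```python
import re
from typing import List

# Matches unified-diff hunk headers such as "@@ -10,3 +10,4 @@".
HUNK_HEADER = re.compile(r"^@@ -(\d+)(?:,(\d+))? \+(\d+)(?:,(\d+))? @@")


def number_hunks(patch: str, filename: str) -> str:
    """Render each hunk as a numbered 'new' view and an unnumbered 'old' view."""
    out: List[str] = [f"## {filename}"]
    new_lines: List[str] = []
    old_lines: List[str] = []
    start_new = 0  # first line number of the current hunk in the new file

    def flush() -> None:
        if new_lines:
            out.append("--new hunk--")
            out.extend(f"{start_new + i} {text}" for i, text in enumerate(new_lines))
        if old_lines:
            out.append("--old hunk--")
            out.extend(old_lines)
        new_lines.clear()
        old_lines.clear()

    for line in patch.splitlines():
        match = HUNK_HEADER.match(line)
        if match:
            flush()                      # emit the previous hunk first
            start_new = int(match.group(3))
        elif line.startswith("+"):
            new_lines.append(line)       # exists only in the new file
        elif line.startswith("-"):
            old_lines.append(line)       # exists only in the old file
        else:
            new_lines.append(line)       # context appears in both views
            old_lines.append(line)
    flush()
    return "\n".join(out)


if __name__ == "__main__":
    print(number_hunks("@@ -10,3 +10,4 @@\n a\n-b\n+c\n+d\n e", "src/file.ts"))
```

Numbering only the new side is what lets a model refer to a concrete line in the updated file when it proposes an inline suggestion.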

|

||||

|

||||

@ -1,15 +1,15 @@

|

||||

from __future__ import annotations

|

||||

|

||||

import difflib

|

||||

import logging

|

||||

from typing import Any, Tuple, Union

|

||||

from typing import Tuple, Union, Callable, List

|

||||

|

||||

from pr_agent.algo.git_patch_processing import extend_patch, handle_patch_deletions, \

|

||||

convert_to_hunks_with_lines_numbers

|

||||

from pr_agent.algo import MAX_TOKENS

|

||||

from pr_agent.algo.git_patch_processing import convert_to_hunks_with_lines_numbers, extend_patch, handle_patch_deletions

|

||||

from pr_agent.algo.language_handler import sort_files_by_main_languages

|

||||

from pr_agent.algo.token_handler import TokenHandler

|

||||

from pr_agent.algo.utils import load_large_diff

|

||||

from pr_agent.config_loader import settings

|

||||

from pr_agent.git_providers import GithubProvider

|

||||

from pr_agent.git_providers.git_provider import GitProvider

|

||||

|

||||

DELETED_FILES_ = "Deleted files:\n"

|

||||

|

||||

@ -20,32 +20,42 @@ OUTPUT_BUFFER_TOKENS_HARD_THRESHOLD = 600

|

||||

PATCH_EXTRA_LINES = 3

|

||||

|

||||

|

||||

def get_pr_diff(git_provider: Union[GithubProvider, Any], token_handler: TokenHandler,

|

||||

def get_pr_diff(git_provider: GitProvider, token_handler: TokenHandler, model: str,

|

||||

add_line_numbers_to_hunks: bool = False, disable_extra_lines: bool = False) -> str:

|

||||

"""

|

||||

Returns a string with the diff of the PR.

|

||||

If needed, apply diff minimization techniques to reduce the number of tokens

|

||||

Returns a string with the diff of the pull request, applying diff minimization techniques if needed.

|

||||

|

||||

Args:

|

||||

git_provider (GitProvider): An object of the GitProvider class representing the Git provider used for the pull request.

|

||||

token_handler (TokenHandler): An object of the TokenHandler class used for handling tokens in the context of the pull request.

|

||||

model (str): The name of the model used for tokenization.

|

||||

add_line_numbers_to_hunks (bool, optional): A boolean indicating whether to add line numbers to the hunks in the diff. Defaults to False.

|

||||

disable_extra_lines (bool, optional): A boolean indicating whether to disable the extension of each patch with extra lines of context. Defaults to False.

|

||||

|

||||

Returns:

|

||||

str: A string with the diff of the pull request, applying diff minimization techniques if needed.

|

||||

"""

|

||||

|

||||

if disable_extra_lines:

|

||||

global PATCH_EXTRA_LINES

|

||||

PATCH_EXTRA_LINES = 0

|

||||

|

||||

git_provider.pr.diff_files = list(git_provider.get_diff_files())

|

||||

diff_files = list(git_provider.get_diff_files())

|

||||

|

||||

# get pr languages

|

||||

pr_languages = sort_files_by_main_languages(git_provider.get_languages(), git_provider.pr.diff_files)

|

||||

pr_languages = sort_files_by_main_languages(git_provider.get_languages(), diff_files)

|

||||

|

||||

# generate a standard diff string, with patch extension

|

||||

patches_extended, total_tokens = pr_generate_extended_diff(pr_languages, token_handler,

|

||||

add_line_numbers_to_hunks)

|

||||

|

||||

# if we are under the limit, return the full diff

|

||||

if total_tokens + OUTPUT_BUFFER_TOKENS_SOFT_THRESHOLD < token_handler.limit:

|

||||

if total_tokens + OUTPUT_BUFFER_TOKENS_SOFT_THRESHOLD < MAX_TOKENS[model]:

|

||||

return "\n".join(patches_extended)

|

||||

|

||||

# if we are over the limit, start pruning

|

||||

patches_compressed, modified_file_names, deleted_file_names = \

|

||||

pr_generate_compressed_diff(pr_languages, token_handler, add_line_numbers_to_hunks)

|

||||

pr_generate_compressed_diff(pr_languages, token_handler, model, add_line_numbers_to_hunks)

|

||||

|

||||

final_diff = "\n".join(patches_compressed)

|

||||

if modified_file_names:

|

||||

@ -61,7 +71,16 @@ def pr_generate_extended_diff(pr_languages: list, token_handler: TokenHandler,

|

||||

add_line_numbers_to_hunks: bool) -> \

|

||||

Tuple[list, int]:

|

||||

"""

|

||||

Generate a standard diff string, with patch extension

|

||||

Generate a standard diff string with patch extension, while counting the number of tokens used and applying diff minimization techniques if needed.

|

||||

|

||||

Args:

|

||||

- pr_languages: A list of dictionaries representing the languages used in the pull request and their corresponding files.

|

||||

- token_handler: An object of the TokenHandler class used for handling tokens in the context of the pull request.

|

||||

- add_line_numbers_to_hunks: A boolean indicating whether to add line numbers to the hunks in the diff.

|

||||

|

||||

Returns:

|

||||

- patches_extended: A list of extended patches for each file in the pull request.

|

||||

- total_tokens: The total number of tokens used in the extended patches.

|

||||

"""

|

||||

total_tokens = token_handler.prompt_tokens # initial tokens

|

||||

patches_extended = []

|

||||

@ -92,14 +111,28 @@ def pr_generate_extended_diff(pr_languages: list, token_handler: TokenHandler,

|

||||

return patches_extended, total_tokens

|

||||

|

||||

|

||||

def pr_generate_compressed_diff(top_langs: list, token_handler: TokenHandler,

|

||||

def pr_generate_compressed_diff(top_langs: list, token_handler: TokenHandler, model: str,

|

||||

convert_hunks_to_line_numbers: bool) -> Tuple[list, list, list]:

|

||||

# Apply Diff Minimization techniques to reduce the number of tokens:

|

||||

# 0. Start from the largest diff patch to smaller ones

|

||||

# 1. Don't use extended context lines around the diff

|

||||

# 2. Minimize deleted files

|

||||

# 3. Minimize deleted hunks

|

||||

# 4. Minimize all remaining files when you reach token limit

|

||||

"""

|

||||

Generate a compressed diff string for a pull request, using diff minimization techniques to reduce the number of tokens used.

|

||||

Args:

|

||||

top_langs (list): A list of dictionaries representing the languages used in the pull request and their corresponding files.

|

||||

token_handler (TokenHandler): An object of the TokenHandler class used for handling tokens in the context of the pull request.

|

||||

model (str): The model used for tokenization.

|

||||

convert_hunks_to_line_numbers (bool): A boolean indicating whether to convert hunks to line numbers in the diff.

|

||||

Returns:

|

||||

Tuple[list, list, list]: A tuple containing the following lists:

|

||||

- patches: A list of compressed diff patches for each file in the pull request.

|

||||

- modified_files_list: A list of file names that were skipped due to large patch size.

|

||||

- deleted_files_list: A list of file names that were deleted in the pull request.

|

||||

|

||||

Minimization techniques to reduce the number of tokens:

|

||||

0. Start from the largest diff patch to smaller ones

|

||||

1. Don't use extended context lines around the diff

|

||||

2. Minimize deleted files

|

||||

3. Minimize deleted hunks

|

||||

4. Minimize all remaining files when you reach token limit

|

||||

"""

|

||||

|

||||

patches = []

|

||||

modified_files_list = []

|

||||

@ -134,12 +167,12 @@ def pr_generate_compressed_diff(top_langs: list, token_handler: TokenHandler,

|

||||

new_patch_tokens = token_handler.count_tokens(patch)

|

||||

|

||||

# Hard Stop, no more tokens

|

||||

if total_tokens > token_handler.limit - OUTPUT_BUFFER_TOKENS_HARD_THRESHOLD:

|

||||

if total_tokens > MAX_TOKENS[model] - OUTPUT_BUFFER_TOKENS_HARD_THRESHOLD:

|

||||

logging.warning(f"File was fully skipped, no more tokens: {file.filename}.")

|

||||

continue

|

||||

|

||||

# If the patch is too large, just show the file name

|

||||

if total_tokens + new_patch_tokens > token_handler.limit - OUTPUT_BUFFER_TOKENS_SOFT_THRESHOLD:

|

||||

if total_tokens + new_patch_tokens > MAX_TOKENS[model] - OUTPUT_BUFFER_TOKENS_SOFT_THRESHOLD:

|

||||

# Current logic is to skip the patch if it's too large

|

||||

# TODO: Option for alternative logic to remove hunks from the patch to reduce the number of tokens

|

||||

# until we meet the requirements

|

||||

@ -164,14 +197,16 @@ def pr_generate_compressed_diff(top_langs: list, token_handler: TokenHandler,

|

||||

return patches, modified_files_list, deleted_files_list
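The soft and hard thresholds used above can be sketched as a small greedy packing routine. The hard threshold value (600) comes from the diff; the soft threshold value, the `pack_patches` name, and the whitespace token counter in the usage line are assumptions made for illustration. The real function also handles deleted files and per-language ordering, which this sketch leaves out.

```python
from typing import Callable, List, Tuple

OUTPUT_BUFFER_TOKENS_HARD_THRESHOLD = 600   # value shown in the diff
OUTPUT_BUFFER_TOKENS_SOFT_THRESHOLD = 1000  # assumed value, not shown in the diff


def pack_patches(patches: List[Tuple[str, str]],
                 count_tokens: Callable[[str], int],
                 max_tokens: int,
                 prompt_tokens: int = 0) -> Tuple[List[str], List[str]]:
    """Greedily include patches, largest first, while staying inside the budget.

    Returns (included_patches, skipped_file_names).
    """
    included: List[str] = []
    skipped: List[str] = []
    total = prompt_tokens
    ordered = sorted(patches, key=lambda item: count_tokens(item[1]), reverse=True)
    for filename, patch in ordered:
        # Hard stop: nothing more can be added, the file is dropped entirely.
        if total > max_tokens - OUTPUT_BUFFER_TOKENS_HARD_THRESHOLD:
            continue
        new_tokens = count_tokens(patch)
        # Soft limit: the patch is too large, so only its name is reported.
        if total + new_tokens > max_tokens - OUTPUT_BUFFER_TOKENS_SOFT_THRESHOLD:
            skipped.append(filename)
            continue
        included.append(patch)
        total += new_tokens
    return included, skipped


if __name__ == "__main__":
    demo = [("a.py", "w " * 6), ("b.py", "w " * 5), ("c.py", "w " * 3)]
    print(pack_patches(demo, lambda s: len(s.split()), max_tokens=1010))
```

The gap between the two thresholds reserves room for the model's answer: a patch is skipped as soon as it would eat into the soft buffer, and processing stops completely once the hard buffer is reached.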

|

||||

|

||||

|

||||

def load_large_diff(file, new_file_content_str: str, original_file_content_str: str, patch: str) -> str:

|

||||

if not patch: # TODO: also add a condition for the file extension

|

||||

async def retry_with_fallback_models(f: Callable):

|

||||

model = settings.config.model

|

||||

fallback_models = settings.config.fallback_models

|

||||

if not isinstance(fallback_models, list):

|

||||

fallback_models = [fallback_models]

|

||||

all_models = [model] + fallback_models

|

||||

for i, model in enumerate(all_models):

|

||||

try:

|

||||

diff = difflib.unified_diff(original_file_content_str.splitlines(keepends=True),

|

||||

new_file_content_str.splitlines(keepends=True))

|

||||

if settings.config.verbosity_level >= 2:

|

||||

logging.warning(f"File was modified, but no patch was found. Manually creating patch: {file.filename}.")

|

||||

patch = ''.join(diff)

|

||||

except Exception:

|

||||

pass

|

||||

return patch

|

||||

return await f(model)

|

||||

except Exception as e:

|

||||

logging.warning(f"Failed to generate prediction with {model}: {e}")

|

||||

if i == len(all_models) - 1: # If it's the last iteration

|

||||

raise # Re-raise the last exception
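The fallback loop added here can be tried out on its own with a self-contained sketch. Unlike the function in the diff, this version takes the model list as a parameter instead of reading `settings.config`, and the model names in the usage example are placeholders.

```python
import asyncio
import logging
from typing import Awaitable, Callable, List, TypeVar

T = TypeVar("T")


async def retry_with_fallback(f: Callable[[str], Awaitable[T]], models: List[str]) -> T:
    """Call `f` with each model in order and return the first successful result."""
    if not models:
        raise ValueError("at least one model is required")
    for i, model in enumerate(models):
        try:
            return await f(model)
        except Exception as e:
            logging.warning("Failed to generate prediction with %s: %s", model, e)
            if i == len(models) - 1:  # last model: nothing left to fall back to
                raise
    raise AssertionError("unreachable")


async def _demo(model: str) -> str:
    # Placeholder coroutine standing in for a real prediction call.
    if model == "primary-model":
        raise RuntimeError("service unavailable")
    return f"ok from {model}"


if __name__ == "__main__":
    print(asyncio.run(retry_with_fallback(_demo, ["primary-model", "backup-model"])))
```

Re-raising only on the last model means the caller sees the final failure, while earlier failures are merely logged.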

|

||||

|

||||

@ -6,12 +6,42 @@ from pr_agent.config_loader import settings

|

||||

|

||||

|

||||

class TokenHandler:

|

||||

"""

|

||||

A class for handling tokens in the context of a pull request.

|

||||

|

||||

Attributes:

|

||||

- encoder: An object of the encoding_for_model class from the tiktoken module. Used to encode strings and count the number of tokens in them.

|

||||

- limit: The maximum number of tokens allowed for the given model, as defined in the MAX_TOKENS dictionary in the pr_agent.algo module.

|

||||

- prompt_tokens: The number of tokens in the system and user strings, as calculated by the _get_system_user_tokens method.

|

||||

"""

|

||||

|

||||

def __init__(self, pr, vars: dict, system, user):

|

||||

"""

|

||||

Initializes the TokenHandler object.

|

||||

|

||||

Args:

|

||||

- pr: The pull request object.

|

||||

- vars: A dictionary of variables.

|

||||

- system: The system string.

|

||||

- user: The user string.

|

||||

"""

|

||||

self.encoder = encoding_for_model(settings.config.model)

|

||||

self.limit = MAX_TOKENS[settings.config.model]

|

||||

self.prompt_tokens = self._get_system_user_tokens(pr, self.encoder, vars, system, user)

|

||||

|

||||

def _get_system_user_tokens(self, pr, encoder, vars: dict, system, user):

|

||||

"""

|

||||

Calculates the number of tokens in the system and user strings.

|

||||

|

||||

Args:

|

||||

- pr: The pull request object.

|

||||

- encoder: An object of the encoding_for_model class from the tiktoken module.

|

||||

- vars: A dictionary of variables.

|

||||

- system: The system string.

|

||||

- user: The user string.

|

||||

|

||||

Returns:

|

||||

The sum of the number of tokens in the system and user strings.

|

||||

"""

|

||||

environment = Environment(undefined=StrictUndefined)

|

||||

system_prompt = environment.from_string(system).render(vars)

|

||||

user_prompt = environment.from_string(user).render(vars)

|

||||

@ -21,4 +51,13 @@ class TokenHandler:

|

||||

return system_prompt_tokens + user_prompt_tokens

|

||||

|

||||

def count_tokens(self, patch: str) -> int:

|

||||

"""

|

||||

Counts the number of tokens in a given patch string.

|

||||

|

||||

Args:

|

||||

- patch: The patch string.

|

||||

|

||||

Returns:

|

||||

The number of tokens in the patch string.

|

||||

"""

|

||||

return len(self.encoder.encode(patch, disallowed_special=()))

|

||||

@ -1,23 +1,36 @@

|

||||

from __future__ import annotations

|

||||

|

||||

import difflib

|

||||

from datetime import datetime

|

||||

import json

|

||||

import logging

|

||||

import re

|

||||

import textwrap

|

||||

|

||||

from pr_agent.config_loader import settings

|

||||

|

||||

|

||||

def convert_to_markdown(output_data: dict) -> str:

|

||||

"""

|

||||

Convert a dictionary of data into markdown format.

|

||||

Args:

|

||||