mirror of

https://github.com/qodo-ai/pr-agent.git

synced 2025-07-21 04:50:39 +08:00

Compare commits

72 Commits

dbf96ff749

...

main

| Author | SHA1 | Date | |

|---|---|---|---|

| 1663eaad4a | |||

| 0ee115e19c | |||

| aaba9b6b3c | |||

| 93aaa59b2d | |||

| f1c068bc44 | |||

| fffbee5b34 | |||

| 5e555d09c7 | |||

| 7f95e39361 | |||

| 730fa66594 | |||

| f42dc28a55 | |||

| 7251e6df96 | |||

| b01a2b5f4a | |||

| 65e71cb2ee | |||

| 9773afe155 | |||

| 0a8a263809 | |||

| 597f553dd5 | |||

| 4b6fcfe60e | |||

| 7cc4206b70 | |||

| 8906a81a2e | |||

| 6179eeca58 | |||

| e8c73e7baa | |||

| 754d47f187 | |||

| bec70dc96a | |||

| fd32c83c29 | |||

| 7efeeb1de8 | |||

| d7d4b7de89 | |||

| 2a37225574 | |||

| e87fdd0ab5 | |||

| c0d7fd8c36 | |||

| 5933280417 | |||

| 8e0c5c8784 | |||

| 0e9cf274ef | |||

| 3aae48f09c | |||

| c4dd07b3b8 | |||

| 8c7680d85d | |||

| 11fb6ccc7e | |||

| 3aaa727e05 | |||

| 6108f96bff | |||

| 5a00897cbe | |||

| e12b27879c | |||

| fac2141df3 | |||

| 1dbfd27d8e | |||

| eaeee97535 | |||

| 71bbc52a99 | |||

| 4a8e9b79e8 | |||

| efdb0f5744 | |||

| 28750c70e0 | |||

| 583ed10dca | |||

| 07d71f2d25 | |||

| 447a384aee | |||

| d9eb0367cf | |||

| 85484899c3 | |||

| 00b5815785 | |||

| 9becad2eaf | |||

| 74df3f8bd5 | |||

| 4ab97d8969 | |||

| 6057812a20 | |||

| 598e2c731b | |||

| 0742d8052f | |||

| 1713cded21 | |||

| e7268dd314 | |||

| 50c2578cfd | |||

| 5a56d11e16 | |||

| 31e25a5965 | |||

| 85e1e2d4ee | |||

| 2d8bee0d6d | |||

| e0d7083768 | |||

| 5e82d0a316 | |||

| e2d71acb9d | |||

| 8127d52ab3 | |||

| 6a55bbcd23 | |||

| 12af211c13 |

28

README.md

28

README.md

@ -119,19 +119,20 @@ Here are some advantages of PR-Agent:

|

||||

|

||||

PR-Agent and Qodo Merge offer comprehensive pull request functionalities integrated with various git providers:

|

||||

|

||||

| | | GitHub | GitLab | Bitbucket | Azure DevOps | Gitea |

|

||||

|---------------------------------------------------------|---------------------------------------------------------------------------------------------------------------------|:------:|:------:|:---------:|:------------:|:-----:|

|

||||

| [TOOLS](https://qodo-merge-docs.qodo.ai/tools/) | [Describe](https://qodo-merge-docs.qodo.ai/tools/describe/) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Review](https://qodo-merge-docs.qodo.ai/tools/review/) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Improve](https://qodo-merge-docs.qodo.ai/tools/improve/) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Ask](https://qodo-merge-docs.qodo.ai/tools/ask/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | ⮑ [Ask on code lines](https://qodo-merge-docs.qodo.ai/tools/ask/#ask-lines) | ✅ | ✅ | | | |

|

||||

| | [Help Docs](https://qodo-merge-docs.qodo.ai/tools/help_docs/?h=auto#auto-approval) | ✅ | ✅ | ✅ | | |

|

||||

| | [Update CHANGELOG](https://qodo-merge-docs.qodo.ai/tools/update_changelog/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | [Add Documentation](https://qodo-merge-docs.qodo.ai/tools/documentation/) 💎 | ✅ | ✅ | | | |

|

||||

| | [Analyze](https://qodo-merge-docs.qodo.ai/tools/analyze/) 💎 | ✅ | ✅ | | | |

|

||||

| | [Auto-Approve](https://qodo-merge-docs.qodo.ai/tools/improve/?h=auto#auto-approval) 💎 | ✅ | ✅ | ✅ | | |

|

||||

| | [CI Feedback](https://qodo-merge-docs.qodo.ai/tools/ci_feedback/) 💎 | ✅ | | | | |

|

||||

| | | GitHub | GitLab | Bitbucket | Azure DevOps | Gitea |

|

||||

|---------------------------------------------------------|----------------------------------------------------------------------------------------|:------:|:------:|:---------:|:------------:|:-----:|

|

||||

| [TOOLS](https://qodo-merge-docs.qodo.ai/tools/) | [Describe](https://qodo-merge-docs.qodo.ai/tools/describe/) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Review](https://qodo-merge-docs.qodo.ai/tools/review/) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Improve](https://qodo-merge-docs.qodo.ai/tools/improve/) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Ask](https://qodo-merge-docs.qodo.ai/tools/ask/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | ⮑ [Ask on code lines](https://qodo-merge-docs.qodo.ai/tools/ask/#ask-lines) | ✅ | ✅ | | | |

|

||||

| | [Help Docs](https://qodo-merge-docs.qodo.ai/tools/help_docs/?h=auto#auto-approval) | ✅ | ✅ | ✅ | | |

|

||||

| | [Update CHANGELOG](https://qodo-merge-docs.qodo.ai/tools/update_changelog/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | [Add Documentation](https://qodo-merge-docs.qodo.ai/tools/documentation/) 💎 | ✅ | ✅ | | | |

|

||||

| | [Analyze](https://qodo-merge-docs.qodo.ai/tools/analyze/) 💎 | ✅ | ✅ | | | |

|

||||

| | [Auto-Approve](https://qodo-merge-docs.qodo.ai/tools/improve/?h=auto#auto-approval) 💎 | ✅ | ✅ | ✅ | | |

|

||||

| | [CI Feedback](https://qodo-merge-docs.qodo.ai/tools/ci_feedback/) 💎 | ✅ | | | | |

|

||||

| | [Compliance](https://qodo-merge-docs.qodo.ai/tools/compliance/) 💎 | ✅ | ✅ | ✅ | | |

|

||||

| | [Custom Prompt](https://qodo-merge-docs.qodo.ai/tools/custom_prompt/) 💎 | ✅ | ✅ | ✅ | | |

|

||||

| | [Generate Custom Labels](https://qodo-merge-docs.qodo.ai/tools/custom_labels/) 💎 | ✅ | ✅ | | | |

|

||||

| | [Generate Tests](https://qodo-merge-docs.qodo.ai/tools/test/) 💎 | ✅ | ✅ | | | |

|

||||

@ -141,6 +142,7 @@ PR-Agent and Qodo Merge offer comprehensive pull request functionalities integra

|

||||

| | [Ticket Context](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/) 💎 | ✅ | ✅ | ✅ | | |

|

||||

| | [Utilizing Best Practices](https://qodo-merge-docs.qodo.ai/tools/improve/#best-practices) 💎 | ✅ | ✅ | ✅ | | |

|

||||

| | [PR Chat](https://qodo-merge-docs.qodo.ai/chrome-extension/features/#pr-chat) 💎 | ✅ | | | | |

|

||||

| | [PR to Ticket](https://qodo-merge-docs.qodo.ai/tools/pr_to_ticket/) 💎 | ✅ | ✅ | ✅ | | |

|

||||

| | [Suggestion Tracking](https://qodo-merge-docs.qodo.ai/tools/improve/#suggestion-tracking) 💎 | ✅ | ✅ | | | |

|

||||

| | | | | | | |

|

||||

| [USAGE](https://qodo-merge-docs.qodo.ai/usage-guide/) | [CLI](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#local-repo-cli) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

|

||||

@ -104,7 +104,7 @@ Installation steps:

|

||||

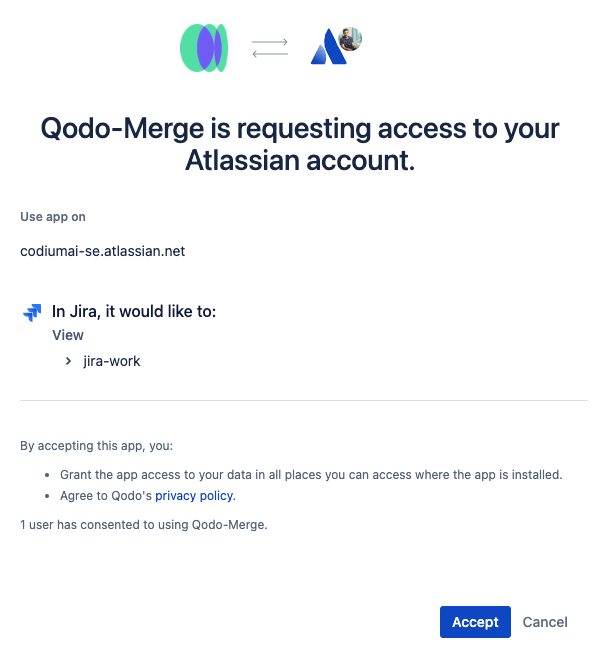

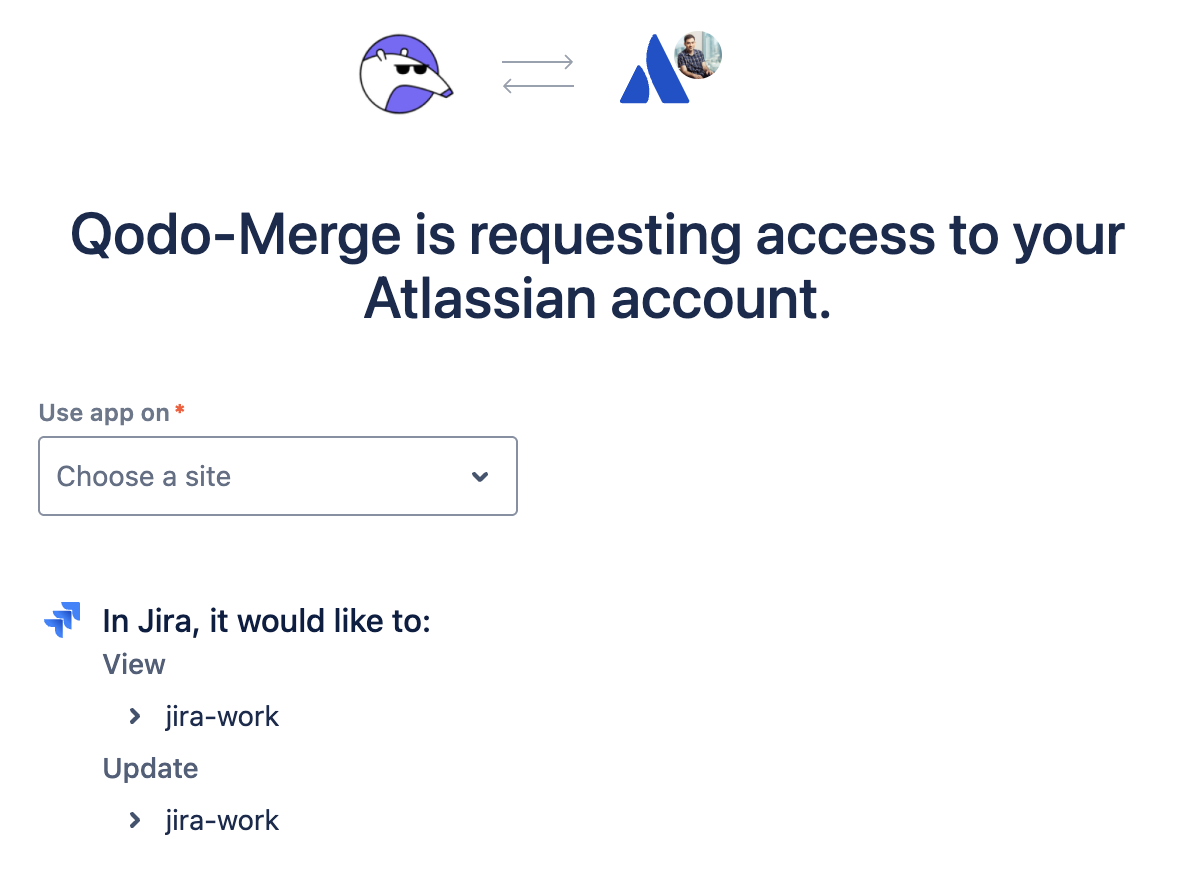

2. Click on the Connect **Jira Cloud** button to connect the Jira Cloud app

|

||||

|

||||

3. Click the `accept` button.<br>

|

||||

{width=384}

|

||||

{width=384}

|

||||

|

||||

4. After installing the app, you will be redirected to the Qodo Merge registration page. and you will see a success message.<br>

|

||||

{width=384}

|

||||

|

||||

@ -28,49 +28,51 @@ PR-Agent and Qodo Merge offer comprehensive pull request functionalities integra

|

||||

|

||||

| | | GitHub | GitLab | Bitbucket | Azure DevOps | Gitea |

|

||||

| ----- |---------------------------------------------------------------------------------------------------------------------|:------:|:------:|:---------:|:------------:|:-----:|

|

||||

| [TOOLS](https://qodo-merge-docs.qodo.ai/tools/) | [Describe](https://qodo-merge-docs.qodo.ai/tools/describe/) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Review](https://qodo-merge-docs.qodo.ai/tools/review/) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Improve](https://qodo-merge-docs.qodo.ai/tools/improve/) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Ask](https://qodo-merge-docs.qodo.ai/tools/ask/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| [TOOLS](https://qodo-merge-docs.qodo.ai/tools/) | [Describe](https://qodo-merge-docs.qodo.ai/tools/describe/) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Review](https://qodo-merge-docs.qodo.ai/tools/review/) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Improve](https://qodo-merge-docs.qodo.ai/tools/improve/) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Ask](https://qodo-merge-docs.qodo.ai/tools/ask/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | ⮑ [Ask on code lines](https://qodo-merge-docs.qodo.ai/tools/ask/#ask-lines) | ✅ | ✅ | | | |

|

||||

| | [Help Docs](https://qodo-merge-docs.qodo.ai/tools/help_docs/?h=auto#auto-approval) | ✅ | ✅ | ✅ | | |

|

||||

| | [Update CHANGELOG](https://qodo-merge-docs.qodo.ai/tools/update_changelog/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | [Update CHANGELOG](https://qodo-merge-docs.qodo.ai/tools/update_changelog/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | [Add Documentation](https://qodo-merge-docs.qodo.ai/tools/documentation/) 💎 | ✅ | ✅ | | | |

|

||||

| | [Analyze](https://qodo-merge-docs.qodo.ai/tools/analyze/) 💎 | ✅ | ✅ | | | |

|

||||

| | [Auto-Approve](https://qodo-merge-docs.qodo.ai/tools/improve/?h=auto#auto-approval) 💎 | ✅ | ✅ | ✅ | | |

|

||||

| | [CI Feedback](https://qodo-merge-docs.qodo.ai/tools/ci_feedback/) 💎 | ✅ | | | | |

|

||||

| | [Compliance](https://qodo-merge-docs.qodo.ai/tools/compliance/) 💎 | ✅ | ✅ | ✅ | | |

|

||||

| | [Custom Prompt](https://qodo-merge-docs.qodo.ai/tools/custom_prompt/) 💎 | ✅ | ✅ | ✅ | | |

|

||||

| | [Generate Custom Labels](https://qodo-merge-docs.qodo.ai/tools/custom_labels/) 💎 | ✅ | ✅ | | | |

|

||||

| | [Generate Tests](https://qodo-merge-docs.qodo.ai/tools/test/) 💎 | ✅ | ✅ | | | |

|

||||

| | [Implement](https://qodo-merge-docs.qodo.ai/tools/implement/) 💎 | ✅ | ✅ | ✅ | | |

|

||||

| | [PR Chat](https://qodo-merge-docs.qodo.ai/chrome-extension/features/#pr-chat) 💎 | ✅ | | | | |

|

||||

| | [PR to Ticket](https://qodo-merge-docs.qodo.ai/tools/pr_to_ticket/) 💎 | ✅ | ✅ | ✅ | | |

|

||||

| | [Scan Repo Discussions](https://qodo-merge-docs.qodo.ai/tools/scan_repo_discussions/) 💎 | ✅ | | | | |

|

||||

| | [Similar Code](https://qodo-merge-docs.qodo.ai/tools/similar_code/) 💎 | ✅ | | | | |

|

||||

| | [Suggestion Tracking](https://qodo-merge-docs.qodo.ai/tools/improve/#suggestion-tracking) 💎 | ✅ | ✅ | | | |

|

||||

| | [Ticket Context](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/) 💎 | ✅ | ✅ | ✅ | | |

|

||||

| | [Utilizing Best Practices](https://qodo-merge-docs.qodo.ai/tools/improve/#best-practices) 💎 | ✅ | ✅ | ✅ | | |

|

||||

| | [PR Chat](https://qodo-merge-docs.qodo.ai/chrome-extension/features/#pr-chat) 💎 | ✅ | | | | |

|

||||

| | [Suggestion Tracking](https://qodo-merge-docs.qodo.ai/tools/improve/#suggestion-tracking) 💎 | ✅ | ✅ | | | |

|

||||

| | | | | | | |

|

||||

| [USAGE](https://qodo-merge-docs.qodo.ai/usage-guide/) | [CLI](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#local-repo-cli) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [App / webhook](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#github-app) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

| [USAGE](https://qodo-merge-docs.qodo.ai/usage-guide/) | [CLI](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#local-repo-cli) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [App / webhook](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#github-app) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Tagging bot](https://github.com/Codium-ai/pr-agent#try-it-now) | ✅ | | | | |

|

||||

| | [Actions](https://qodo-merge-docs.qodo.ai/installation/github/#run-as-a-github-action) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | [Actions](https://qodo-merge-docs.qodo.ai/installation/github/#run-as-a-github-action) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | | | | | | |

|

||||

| [CORE](https://qodo-merge-docs.qodo.ai/core-abilities/) | [Adaptive and token-aware file patch fitting](https://qodo-merge-docs.qodo.ai/core-abilities/compression_strategy/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| [CORE](https://qodo-merge-docs.qodo.ai/core-abilities/) | [Adaptive and token-aware file patch fitting](https://qodo-merge-docs.qodo.ai/core-abilities/compression_strategy/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | [Auto Best Practices 💎](https://qodo-merge-docs.qodo.ai/core-abilities/auto_best_practices/) | ✅ | | | | |

|

||||

| | [Chat on code suggestions](https://qodo-merge-docs.qodo.ai/core-abilities/chat_on_code_suggestions/) | ✅ | ✅ | | | |

|

||||

| | [Code Validation 💎](https://qodo-merge-docs.qodo.ai/core-abilities/code_validation/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | [Dynamic context](https://qodo-merge-docs.qodo.ai/core-abilities/dynamic_context/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | [Code Validation 💎](https://qodo-merge-docs.qodo.ai/core-abilities/code_validation/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | [Dynamic context](https://qodo-merge-docs.qodo.ai/core-abilities/dynamic_context/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | [Fetching ticket context](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/) | ✅ | ✅ | ✅ | | |

|

||||

| | [Global and wiki configurations](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/) 💎 | ✅ | ✅ | ✅ | | |

|

||||

| | [Impact Evaluation](https://qodo-merge-docs.qodo.ai/core-abilities/impact_evaluation/) 💎 | ✅ | ✅ | | | |

|

||||

| | [Incremental Update 💎](https://qodo-merge-docs.qodo.ai/core-abilities/incremental_update/) | ✅ | | | | |

|

||||

| | [Interactivity](https://qodo-merge-docs.qodo.ai/core-abilities/interactivity/) | ✅ | ✅ | | | |

|

||||

| | [Local and global metadata](https://qodo-merge-docs.qodo.ai/core-abilities/metadata/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | [Multiple models support](https://qodo-merge-docs.qodo.ai/usage-guide/changing_a_model/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | [PR compression](https://qodo-merge-docs.qodo.ai/core-abilities/compression_strategy/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | [Local and global metadata](https://qodo-merge-docs.qodo.ai/core-abilities/metadata/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | [Multiple models support](https://qodo-merge-docs.qodo.ai/usage-guide/changing_a_model/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | [PR compression](https://qodo-merge-docs.qodo.ai/core-abilities/compression_strategy/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | [PR interactive actions](https://www.qodo.ai/images/pr_agent/pr-actions.mp4) 💎 | ✅ | ✅ | | | |

|

||||

| | [RAG context enrichment](https://qodo-merge-docs.qodo.ai/core-abilities/rag_context_enrichment/) | ✅ | | ✅ | | |

|

||||

| | [Self reflection](https://qodo-merge-docs.qodo.ai/core-abilities/self_reflection/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | [Self reflection](https://qodo-merge-docs.qodo.ai/core-abilities/self_reflection/) | ✅ | ✅ | ✅ | ✅ | |

|

||||

| | [Static code analysis](https://qodo-merge-docs.qodo.ai/core-abilities/static_code_analysis/) 💎 | ✅ | ✅ | | | |

|

||||

!!! note "💎 means Qodo Merge only"

|

||||

All along the documentation, 💎 marks a feature available only in [Qodo Merge](https://www.codium.ai/pricing/){:target="_blank"}, and not in the open-source version.

|

||||

|

||||

@ -51,6 +51,430 @@ When you open your next PR, you should see a comment from `github-actions` bot w

|

||||

|

||||

See detailed usage instructions in the [USAGE GUIDE](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#github-action)

|

||||

|

||||

## Configuration Examples

|

||||

|

||||

This section provides detailed, step-by-step examples for configuring PR-Agent with different models and advanced options in GitHub Actions.

|

||||

|

||||

### Quick Start Examples

|

||||

|

||||

#### Basic Setup (OpenAI Default)

|

||||

|

||||

Copy this minimal workflow to get started with the default OpenAI models:

|

||||

|

||||

```yaml

|

||||

name: PR Agent

|

||||

on:

|

||||

pull_request:

|

||||

types: [opened, reopened, ready_for_review]

|

||||

issue_comment:

|

||||

jobs:

|

||||

pr_agent_job:

|

||||

if: ${{ github.event.sender.type != 'Bot' }}

|

||||

runs-on: ubuntu-latest

|

||||

permissions:

|

||||

issues: write

|

||||

pull-requests: write

|

||||

contents: write

|

||||

steps:

|

||||

- name: PR Agent action step

|

||||

uses: qodo-ai/pr-agent@main

|

||||

env:

|

||||

OPENAI_KEY: ${{ secrets.OPENAI_KEY }}

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

```

|

||||

|

||||

#### Gemini Setup

|

||||

|

||||

Ready-to-use workflow for Gemini models:

|

||||

|

||||

```yaml

|

||||

name: PR Agent (Gemini)

|

||||

on:

|

||||

pull_request:

|

||||

types: [opened, reopened, ready_for_review]

|

||||

issue_comment:

|

||||

jobs:

|

||||

pr_agent_job:

|

||||

if: ${{ github.event.sender.type != 'Bot' }}

|

||||

runs-on: ubuntu-latest

|

||||

permissions:

|

||||

issues: write

|

||||

pull-requests: write

|

||||

contents: write

|

||||

steps:

|

||||

- name: PR Agent action step

|

||||

uses: qodo-ai/pr-agent@main

|

||||

env:

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

config.model: "gemini/gemini-1.5-flash"

|

||||

config.fallback_models: '["gemini/gemini-1.5-flash"]'

|

||||

GOOGLE_AI_STUDIO.GEMINI_API_KEY: ${{ secrets.GEMINI_API_KEY }}

|

||||

github_action_config.auto_review: "true"

|

||||

github_action_config.auto_describe: "true"

|

||||

github_action_config.auto_improve: "true"

|

||||

```

|

||||

|

||||

#### Claude Setup

|

||||

|

||||

Ready-to-use workflow for Claude models:

|

||||

|

||||

```yaml

|

||||

name: PR Agent (Claude)

|

||||

on:

|

||||

pull_request:

|

||||

types: [opened, reopened, ready_for_review]

|

||||

issue_comment:

|

||||

jobs:

|

||||

pr_agent_job:

|

||||

if: ${{ github.event.sender.type != 'Bot' }}

|

||||

runs-on: ubuntu-latest

|

||||

permissions:

|

||||

issues: write

|

||||

pull-requests: write

|

||||

contents: write

|

||||

steps:

|

||||

- name: PR Agent action step

|

||||

uses: qodo-ai/pr-agent@main

|

||||

env:

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

config.model: "anthropic/claude-3-opus-20240229"

|

||||

config.fallback_models: '["anthropic/claude-3-haiku-20240307"]'

|

||||

ANTHROPIC.KEY: ${{ secrets.ANTHROPIC_KEY }}

|

||||

github_action_config.auto_review: "true"

|

||||

github_action_config.auto_describe: "true"

|

||||

github_action_config.auto_improve: "true"

|

||||

```

|

||||

|

||||

### Basic Configuration with Tool Controls

|

||||

|

||||

Start with this enhanced workflow that includes tool configuration:

|

||||

|

||||

```yaml

|

||||

on:

|

||||

pull_request:

|

||||

types: [opened, reopened, ready_for_review]

|

||||

issue_comment:

|

||||

jobs:

|

||||

pr_agent_job:

|

||||

if: ${{ github.event.sender.type != 'Bot' }}

|

||||

runs-on: ubuntu-latest

|

||||

permissions:

|

||||

issues: write

|

||||

pull-requests: write

|

||||

contents: write

|

||||

name: Run pr agent on every pull request, respond to user comments

|

||||

steps:

|

||||

- name: PR Agent action step

|

||||

id: pragent

|

||||

uses: qodo-ai/pr-agent@main

|

||||

env:

|

||||

OPENAI_KEY: ${{ secrets.OPENAI_KEY }}

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

# Enable/disable automatic tools

|

||||

github_action_config.auto_review: "true"

|

||||

github_action_config.auto_describe: "true"

|

||||

github_action_config.auto_improve: "true"

|

||||

# Configure which PR events trigger the action

|

||||

github_action_config.pr_actions: '["opened", "reopened", "ready_for_review", "review_requested"]'

|

||||

```

|

||||

|

||||

### Switching Models

|

||||

|

||||

#### Using Gemini (Google AI Studio)

|

||||

|

||||

To use Gemini models instead of the default OpenAI models:

|

||||

|

||||

```yaml

|

||||

env:

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

# Set the model to Gemini

|

||||

config.model: "gemini/gemini-1.5-flash"

|

||||

config.fallback_models: '["gemini/gemini-1.5-flash"]'

|

||||

# Add your Gemini API key

|

||||

GOOGLE_AI_STUDIO.GEMINI_API_KEY: ${{ secrets.GEMINI_API_KEY }}

|

||||

# Tool configuration

|

||||

github_action_config.auto_review: "true"

|

||||

github_action_config.auto_describe: "true"

|

||||

github_action_config.auto_improve: "true"

|

||||

```

|

||||

|

||||

**Required Secrets:**

|

||||

- Add `GEMINI_API_KEY` to your repository secrets (get it from [Google AI Studio](https://aistudio.google.com/))

|

||||

|

||||

**Note:** When using non-OpenAI models like Gemini, you don't need to set `OPENAI_KEY` - only the model-specific API key is required.

|

||||

|

||||

#### Using Claude (Anthropic)

|

||||

|

||||

To use Claude models:

|

||||

|

||||

```yaml

|

||||

env:

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

# Set the model to Claude

|

||||

config.model: "anthropic/claude-3-opus-20240229"

|

||||

config.fallback_models: '["anthropic/claude-3-haiku-20240307"]'

|

||||

# Add your Anthropic API key

|

||||

ANTHROPIC.KEY: ${{ secrets.ANTHROPIC_KEY }}

|

||||

# Tool configuration

|

||||

github_action_config.auto_review: "true"

|

||||

github_action_config.auto_describe: "true"

|

||||

github_action_config.auto_improve: "true"

|

||||

```

|

||||

|

||||

**Required Secrets:**

|

||||

- Add `ANTHROPIC_KEY` to your repository secrets (get it from [Anthropic Console](https://console.anthropic.com/))

|

||||

|

||||

**Note:** When using non-OpenAI models like Claude, you don't need to set `OPENAI_KEY` - only the model-specific API key is required.

|

||||

|

||||

#### Using Azure OpenAI

|

||||

|

||||

To use Azure OpenAI services:

|

||||

|

||||

```yaml

|

||||

env:

|

||||

OPENAI_KEY: ${{ secrets.AZURE_OPENAI_KEY }}

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

# Azure OpenAI configuration

|

||||

OPENAI.API_TYPE: "azure"

|

||||

OPENAI.API_VERSION: "2023-05-15"

|

||||

OPENAI.API_BASE: ${{ secrets.AZURE_OPENAI_ENDPOINT }}

|

||||

OPENAI.DEPLOYMENT_ID: ${{ secrets.AZURE_OPENAI_DEPLOYMENT }}

|

||||

# Set the model to match your Azure deployment

|

||||

config.model: "gpt-4o"

|

||||

config.fallback_models: '["gpt-4o"]'

|

||||

# Tool configuration

|

||||

github_action_config.auto_review: "true"

|

||||

github_action_config.auto_describe: "true"

|

||||

github_action_config.auto_improve: "true"

|

||||

```

|

||||

|

||||

**Required Secrets:**

|

||||

- `AZURE_OPENAI_KEY`: Your Azure OpenAI API key

|

||||

- `AZURE_OPENAI_ENDPOINT`: Your Azure OpenAI endpoint URL

|

||||

- `AZURE_OPENAI_DEPLOYMENT`: Your deployment name

|

||||

|

||||

#### Using Local Models (Ollama)

|

||||

|

||||

To use local models via Ollama:

|

||||

|

||||

```yaml

|

||||

env:

|

||||

OPENAI_KEY: ${{ secrets.OPENAI_KEY }}

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

# Set the model to a local Ollama model

|

||||

config.model: "ollama/qwen2.5-coder:32b"

|

||||

config.fallback_models: '["ollama/qwen2.5-coder:32b"]'

|

||||

config.custom_model_max_tokens: "128000"

|

||||

# Ollama configuration

|

||||

OLLAMA.API_BASE: "http://localhost:11434"

|

||||

# Tool configuration

|

||||

github_action_config.auto_review: "true"

|

||||

github_action_config.auto_describe: "true"

|

||||

github_action_config.auto_improve: "true"

|

||||

```

|

||||

|

||||

**Note:** For local models, you'll need to use a self-hosted runner with Ollama installed, as GitHub Actions hosted runners cannot access localhost services.

|

||||

|

||||

### Advanced Configuration Options

|

||||

|

||||

#### Custom Review Instructions

|

||||

|

||||

Add specific instructions for the review process:

|

||||

|

||||

```yaml

|

||||

env:

|

||||

OPENAI_KEY: ${{ secrets.OPENAI_KEY }}

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

# Custom review instructions

|

||||

pr_reviewer.extra_instructions: "Focus on security vulnerabilities and performance issues. Check for proper error handling."

|

||||

# Tool configuration

|

||||

github_action_config.auto_review: "true"

|

||||

github_action_config.auto_describe: "true"

|

||||

github_action_config.auto_improve: "true"

|

||||

```

|

||||

|

||||

#### Language-Specific Configuration

|

||||

|

||||

Configure for specific programming languages:

|

||||

|

||||

```yaml

|

||||

env:

|

||||

OPENAI_KEY: ${{ secrets.OPENAI_KEY }}

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

# Language-specific settings

|

||||

pr_reviewer.extra_instructions: "Focus on Python best practices, type hints, and docstrings."

|

||||

pr_code_suggestions.num_code_suggestions: "8"

|

||||

pr_code_suggestions.suggestions_score_threshold: "7"

|

||||

# Tool configuration

|

||||

github_action_config.auto_review: "true"

|

||||

github_action_config.auto_describe: "true"

|

||||

github_action_config.auto_improve: "true"

|

||||

```

|

||||

|

||||

#### Selective Tool Execution

|

||||

|

||||

Run only specific tools automatically:

|

||||

|

||||

```yaml

|

||||

env:

|

||||

OPENAI_KEY: ${{ secrets.OPENAI_KEY }}

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

# Only run review and describe, skip improve

|

||||

github_action_config.auto_review: "true"

|

||||

github_action_config.auto_describe: "true"

|

||||

github_action_config.auto_improve: "false"

|

||||

# Only trigger on PR open and reopen

|

||||

github_action_config.pr_actions: '["opened", "reopened"]'

|

||||

```

|

||||

|

||||

### Using Configuration Files

|

||||

|

||||

Instead of setting all options via environment variables, you can use a `.pr_agent.toml` file in your repository root:

|

||||

|

||||

1. Create a `.pr_agent.toml` file in your repository root:

|

||||

|

||||

```toml

|

||||

[config]

|

||||

model = "gemini/gemini-1.5-flash"

|

||||

fallback_models = ["anthropic/claude-3-opus-20240229"]

|

||||

|

||||

[pr_reviewer]

|

||||

extra_instructions = "Focus on security issues and code quality."

|

||||

|

||||

[pr_code_suggestions]

|

||||

num_code_suggestions = 6

|

||||

suggestions_score_threshold = 7

|

||||

```

|

||||

|

||||

2. Use a simpler workflow file:

|

||||

|

||||

```yaml

|

||||

on:

|

||||

pull_request:

|

||||

types: [opened, reopened, ready_for_review]

|

||||

issue_comment:

|

||||

jobs:

|

||||

pr_agent_job:

|

||||

if: ${{ github.event.sender.type != 'Bot' }}

|

||||

runs-on: ubuntu-latest

|

||||

permissions:

|

||||

issues: write

|

||||

pull-requests: write

|

||||

contents: write

|

||||

name: Run pr agent on every pull request, respond to user comments

|

||||

steps:

|

||||

- name: PR Agent action step

|

||||

id: pragent

|

||||

uses: qodo-ai/pr-agent@main

|

||||

env:

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

GOOGLE_AI_STUDIO.GEMINI_API_KEY: ${{ secrets.GEMINI_API_KEY }}

|

||||

ANTHROPIC.KEY: ${{ secrets.ANTHROPIC_KEY }}

|

||||

github_action_config.auto_review: "true"

|

||||

github_action_config.auto_describe: "true"

|

||||

github_action_config.auto_improve: "true"

|

||||

```

|

||||

|

||||

### Troubleshooting Common Issues

|

||||

|

||||

#### Model Not Found Errors

|

||||

|

||||

If you get model not found errors:

|

||||

|

||||

1. **Check model name format**: Ensure you're using the correct model identifier format (e.g., `gemini/gemini-1.5-flash`, not just `gemini-1.5-flash`)

|

||||

|

||||

2. **Verify API keys**: Make sure your API keys are correctly set as repository secrets

|

||||

|

||||

3. **Check model availability**: Some models may not be available in all regions or may require specific access

|

||||

|

||||

#### Environment Variable Format

|

||||

|

||||

Remember these key points about environment variables:

|

||||

|

||||

- Use dots (`.`) or double underscores (`__`) to separate sections and keys

|

||||

- Boolean values should be strings: `"true"` or `"false"`

|

||||

- Arrays should be JSON strings: `'["item1", "item2"]'`

|

||||

- Model names are case-sensitive

|

||||

|

||||

#### Rate Limiting

|

||||

|

||||

If you encounter rate limiting:

|

||||

|

||||

```yaml

|

||||

env:

|

||||

OPENAI_KEY: ${{ secrets.OPENAI_KEY }}

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

# Add fallback models for better reliability

|

||||

config.fallback_models: '["gpt-4o", "gpt-3.5-turbo"]'

|

||||

# Increase timeout for slower models

|

||||

config.ai_timeout: "300"

|

||||

github_action_config.auto_review: "true"

|

||||

github_action_config.auto_describe: "true"

|

||||

github_action_config.auto_improve: "true"

|

||||

```

|

||||

|

||||

#### Common Error Messages and Solutions

|

||||

|

||||

**Error: "Model not found"**

|

||||

- **Solution**: Check the model name format and ensure it matches the exact identifier. See the [Changing a model in PR-Agent](../usage-guide/changing_a_model.md) guide for supported models and their correct identifiers.

|

||||

|

||||

**Error: "API key not found"**

|

||||

- **Solution**: Verify that your API key is correctly set as a repository secret and the environment variable name matches exactly

|

||||

- **Note**: For non-OpenAI models (Gemini, Claude, etc.), you only need the model-specific API key, not `OPENAI_KEY`

|

||||

|

||||

**Error: "Rate limit exceeded"**

|

||||

- **Solution**: Add fallback models or increase the `config.ai_timeout` value

|

||||

|

||||

**Error: "Permission denied"**

|

||||

- **Solution**: Ensure your workflow has the correct permissions set:

|

||||

```yaml

|

||||

permissions:

|

||||

issues: write

|

||||

pull-requests: write

|

||||

contents: write

|

||||

```

|

||||

|

||||

**Error: "Invalid JSON format"**

|

||||

- **Solution**: Check that arrays are properly formatted as JSON strings:

|

||||

```yaml

|

||||

# Correct

|

||||

config.fallback_models: '["model1", "model2"]'

|

||||

# Incorrect (interpreted as a YAML list, not a string)

|

||||

config.fallback_models: ["model1", "model2"]

|

||||

```

|

||||

|

||||

#### Debugging Tips

|

||||

|

||||

1. **Enable verbose logging**: Add `config.verbosity_level: "2"` to see detailed logs

|

||||

2. **Check GitHub Actions logs**: Look at the step output for specific error messages

|

||||

3. **Test with minimal configuration**: Start with just the basic setup and add options one by one

|

||||

4. **Verify secrets**: Double-check that all required secrets are set in your repository settings

|

||||

|

||||

#### Performance Optimization

|

||||

|

||||

For better performance with large repositories:

|

||||

|

||||

```yaml

|

||||

env:

|

||||

OPENAI_KEY: ${{ secrets.OPENAI_KEY }}

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

# Optimize for large PRs

|

||||

config.large_patch_policy: "clip"

|

||||

config.max_model_tokens: "32000"

|

||||

config.patch_extra_lines_before: "3"

|

||||

config.patch_extra_lines_after: "1"

|

||||

github_action_config.auto_review: "true"

|

||||

github_action_config.auto_describe: "true"

|

||||

github_action_config.auto_improve: "true"

|

||||

```

|

||||

|

||||

### Reference

|

||||

|

||||

For more detailed configuration options, see:

|

||||

- [Changing a model in PR-Agent](../usage-guide/changing_a_model.md)

|

||||

- [Configuration options](../usage-guide/configuration_options.md)

|

||||

- [Automations and usage](../usage-guide/automations_and_usage.md#github-action)

|

||||

|

||||

### Using a specific release

|

||||

|

||||

!!! tip ""

|

||||

@ -296,4 +720,4 @@ After you set up AWS CodeCommit using the instructions above, here is an example

|

||||

PYTHONPATH="/PATH/TO/PROJECTS/pr-agent" python pr_agent/cli.py \

|

||||

--pr_url https://us-east-1.console.aws.amazon.com/codesuite/codecommit/repositories/MY_REPO_NAME/pull-requests/321 \

|

||||

review

|

||||

```

|

||||

```

|

||||

@ -58,6 +58,12 @@ A list of the models used for generating the baseline suggestions, and example r

|

||||

<td style="text-align:left;">1024</td>

|

||||

<td style="text-align:center;"><b>44.3</b></td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td style="text-align:left;">Grok-4</td>

|

||||

<td style="text-align:left;">2025-07-09</td>

|

||||

<td style="text-align:left;">unknown</td>

|

||||

<td style="text-align:center;"><b>41.7</b></td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td style="text-align:left;">Claude-4-sonnet</td>

|

||||

<td style="text-align:left;">2025-05-14</td>

|

||||

@ -262,6 +268,23 @@ weaknesses:

|

||||

- **Frequent incorrect or no-op fixes:** It sometimes supplies identical “before/after” code, flags non-issues, or suggests changes that would break compilation or logic, reducing reviewer trust.

|

||||

- **Shaky guideline consistency:** Although generally compliant, it still occasionally violates rules (touches unchanged lines, offers stylistic advice, adds imports) and duplicates suggestions, indicating unstable internal checks.

|

||||

|

||||

### Grok-4

|

||||

|

||||

final score: **32.8**

|

||||

|

||||

strengths:

|

||||

|

||||

- **Focused and concise fixes:** When the model does detect a problem it usually proposes a minimal, well-scoped patch that compiles and directly addresses the defect without unnecessary noise.

|

||||

- **Good critical-bug instinct:** It often prioritises show-stoppers (compile failures, crashes, security issues) over cosmetic matters and occasionally spots subtle issues that all other reviewers miss.

|

||||

- **Clear explanations & snippets:** Explanations are short, readable and paired with ready-to-paste code, making the advice easy to apply.

|

||||

|

||||

weaknesses:

|

||||

|

||||

- **High miss rate:** In a large fraction of examples the model returned an empty list or covered only one minor issue while overlooking more serious newly-introduced bugs.

|

||||

- **Inconsistent accuracy:** A noticeable subset of answers contain wrong or even harmful fixes (e.g., removing valid flags, creating compile errors, re-introducing bugs).

|

||||

- **Limited breadth:** Even when it finds a real defect it rarely reports additional related problems that peers catch, leading to partial reviews.

|

||||

- **Occasional guideline slips:** A few replies modify unchanged lines, suggest new imports, or duplicate suggestions, showing imperfect compliance with instructions.

|

||||

|

||||

## Appendix - Example Results

|

||||

|

||||

Some examples of benchmarked PRs and their results:

|

||||

|

||||

304

docs/docs/tools/compliance.md

Normal file

304

docs/docs/tools/compliance.md

Normal file

@ -0,0 +1,304 @@

|

||||

`Platforms supported: GitHub, GitLab, Bitbucket`

|

||||

|

||||

## Overview

|

||||

|

||||

The `compliance` tool performs comprehensive compliance checks on PR code changes, validating them against security standards, ticket requirements, and custom organizational compliance checklists, thereby helping teams, enterprises, and agents maintain consistent code quality and security practices while ensuring that development work aligns with business requirements.

|

||||

|

||||

=== "Fully Compliant"

|

||||

{width=256}

|

||||

|

||||

=== "Partially Compliant"

|

||||

{width=256}

|

||||

|

||||

___

|

||||

|

||||

[//]: # (???+ note "The following features are available only for Qodo Merge 💎 users:")

|

||||

|

||||

[//]: # ( - Custom compliance checklists and hierarchical compliance checklists)

|

||||

|

||||

[//]: # ( - Ticket compliance validation with Jira/Linear integration)

|

||||

|

||||

[//]: # ( - Auto-approval based on compliance status)

|

||||

|

||||

[//]: # ( - Compliance labels and automated enforcement)

|

||||

|

||||

## Example Usage

|

||||

|

||||

### Manual Triggering

|

||||

|

||||

Invoke the tool manually by commenting `/compliance` on any PR. The compliance results are presented in a comprehensive table:

|

||||

|

||||

To edit [configurations](#configuration-options) related to the `compliance` tool, use the following template:

|

||||

|

||||

```toml

|

||||

/compliance --pr_compliance.some_config1=... --pr_compliance.some_config2=...

|

||||

```

|

||||

|

||||

For example, you can enable ticket compliance labels by running:

|

||||

|

||||

```toml

|

||||

/compliance --pr_compliance.enable_ticket_labels=true

|

||||

```

|

||||

|

||||

### Automatic Triggering

|

||||

|

||||

|

||||

The tool can be triggered automatically every time a new PR is [opened](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#github-app-automatic-tools-when-a-new-pr-is-opened), or in a [push](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/?h=push#github-app-automatic-tools-for-push-actions-commits-to-an-open-pr) event to an existing PR.

|

||||

|

||||

To run the `compliance` tool automatically when a PR is opened, define the following in the [configuration file](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/):

|

||||

|

||||

```toml

|

||||

[github_app] # for example

|

||||

pr_commands = [

|

||||

"/compliance",

|

||||

...

|

||||

]

|

||||

|

||||

```

|

||||

|

||||

## Compliance Categories

|

||||

|

||||

The compliance tool evaluates three main categories:

|

||||

|

||||

|

||||

### 1. Security Compliance

|

||||

|

||||

Scans for security vulnerabilities and potential exploits in the PR code changes:

|

||||

|

||||

- **Verified Security Concerns** 🔴: Clear security vulnerabilities that require immediate attention

|

||||

- **Possible Security Risks** ⚪: Potential security issues that need human verification

|

||||

- **No Security Concerns** 🟢: No security vulnerabilities detected

|

||||

|

||||

Examples of security issues:

|

||||

|

||||

- Exposure of sensitive information (API keys, passwords, secrets)

|

||||

- SQL injection vulnerabilities

|

||||

- Cross-site scripting (XSS) risks

|

||||

- Cross-site request forgery (CSRF) vulnerabilities

|

||||

- Insecure data handling patterns

|

||||

|

||||

|

||||

### 2. Ticket Compliance

|

||||

|

||||

???+ tip "How to set up ticket compliance"

|

||||

Follow the guide on how to set up [ticket compliance](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/) with Qodo Merge.

|

||||

|

||||

???+ tip "Auto-create ticket"

|

||||

Follow this [guide](https://qodo-merge-docs.qodo.ai/tools/pr_to_ticket/) to learn how to enable triggering `create tickets` based on PR content.

|

||||

|

||||

{width=256}

|

||||

|

||||

|

||||

Validates that PR changes fulfill the requirements specified in linked tickets:

|

||||

|

||||

- **Fully Compliant** 🟢: All ticket requirements are satisfied

|

||||

- **Partially Compliant** 🟡: Some requirements are met, others need attention

|

||||

- **Not Compliant** 🔴: Clear violations of ticket requirements

|

||||

- **Requires Verification** ⚪: Requirements that need human review

|

||||

|

||||

|

||||

### 3. Custom Compliance

|

||||

|

||||

Validates against an organization-specific compliance checklist:

|

||||

|

||||

- **Fully Compliant** 🟢: All custom compliance are satisfied

|

||||

- **Not Compliant** 🔴: Violations of custom compliance

|

||||

- **Requires Verification** ⚪: Compliance that need human assessment

|

||||

|

||||

## Custom Compliance

|

||||

|

||||

### Setting Up Custom Compliance

|

||||

|

||||

Each compliance is defined in a YAML file as follows:

|

||||

- `title`: Used to provide a clear name for the compliance

|

||||

- `compliance_label`: Used to automatically generate labels for non-compliance issues

|

||||

- `objective`, `success_criteria`, and `failure_criteria`: These fields are used to clearly define what constitutes compliance

|

||||

|

||||

???+ tip "Example of a compliance checklist"

|

||||

|

||||

```yaml

|

||||

# pr_compliance_checklist.yaml

|

||||

pr_compliances:

|

||||

- title: "Error Handling"

|

||||

compliance_label: true

|

||||

objective: "All external API calls must have proper error handling"

|

||||

success_criteria: "Try-catch blocks around external calls with appropriate logging"

|

||||

failure_criteria: "External API calls without error handling or logging"

|

||||

|

||||

...

|

||||

```

|

||||

|

||||

???+ tip "Writing effective compliance checklists"

|

||||

- Avoid overly complex or subjective compliances that are hard to verify

|

||||

- Keep compliances focused on security, business requirements, and critical standards

|

||||

- Use clear, actionable language that developers can understand

|

||||

- Focus on meaningful compliance requirements, not style preferences

|

||||

|

||||

|

||||

### Global Hierarchical Compliance

|

||||

|

||||

Qodo Merge supports hierarchical compliance checklists using a dedicated global configuration repository.

|

||||

|

||||

#### Setting up global hierarchical compliance

|

||||

|

||||

1\. Create a new repository named `pr-agent-settings` in your organization or workspace.

|

||||

|

||||

2\. Build the folder hierarchy in your `pr-agent-settings` repository:

|

||||

|

||||

```bash

|

||||

pr-agent-settings/

|

||||

├── metadata.yaml # Maps repos/folders to compliance paths

|

||||

└── compliance_standards/ # Root for all compliance definitions

|

||||

├── global/ # Global compliance, inherited widely

|

||||

│ └── pr_compliance_checklist.yaml

|

||||

├── groups/ # For groups of repositories

|

||||

│ ├── frontend_repos/

|

||||

│ │ └── pr_compliance_checklist.yaml

|

||||

│ └── backend_repos/

|

||||

│ └── pr_compliance_checklist.yaml

|

||||

├── qodo-merge/ # For standalone repositories

|

||||

│ └── pr_compliance_checklist.yaml

|

||||

└── qodo-monorepo/ # For monorepo-specific compliance

|

||||

├── pr_compliance_checklist.yaml # Root-level monorepo compliance

|

||||

├── qodo-github/ # Subproject compliance

|

||||

│ └── pr_compliance_checklist.yaml

|

||||

└── qodo-gitlab/ # Another subproject

|

||||

└── pr_compliance_checklist.yaml

|

||||

```

|

||||

|

||||

3\. Define the metadata file `metadata.yaml` in the root of `pr-agent-settings`:

|

||||

|

||||

```yaml

|

||||

# Standalone repos

|

||||

qodo-merge:

|

||||

pr_compliance_checklist_paths:

|

||||

- "qodo-merge"

|

||||

|

||||

# Group-associated repos

|

||||

repo_b:

|

||||

pr_compliance_checklist_paths:

|

||||

- "groups/backend_repos"

|

||||

|

||||

# Multi-group repos

|

||||

repo_c:

|

||||

pr_compliance_checklist_paths:

|

||||

- "groups/frontend_repos"

|

||||

- "groups/backend_repos"

|

||||

|

||||

# Monorepo with subprojects

|

||||

qodo-monorepo:

|

||||

pr_compliance_checklist_paths:

|

||||

- "qodo-monorepo"

|

||||

monorepo_subprojects:

|

||||

frontend:

|

||||

pr_compliance_checklist_paths:

|

||||

- "qodo-monorepo/qodo-github"

|

||||

backend:

|

||||

pr_compliance_checklist_paths:

|

||||

- "qodo-monorepo/qodo-gitlab"

|

||||

```

|

||||

|

||||

4\. Set the following configuration:

|

||||

|

||||

```toml

|

||||

[pr_compliance]

|

||||

enable_global_pr_compliance = true

|

||||

```

|

||||

|

||||

???- info "Compliance priority and fallback behavior"

|

||||

|

||||

1\. **Primary**: Global hierarchical compliance checklists from the `pr-agent-settings` repository:

|

||||

|

||||

1.1 If the repository is mapped in `metadata.yaml`, it uses the specified paths

|

||||

|

||||

1.2 For monorepos, it automatically collects compliance checklists matching PR file paths

|

||||

|

||||

1.3 If no mapping exists, it falls back to the global compliance checklists

|

||||

|

||||

2\. **Fallback**: Local repository wiki `pr_compliance_checklist.yaml` file:

|

||||

|

||||

2.1 Used when global compliance checklists are not found or configured

|

||||

|

||||

2.2 Acts as a safety net for repositories not yet configured in the global system

|

||||

|

||||

2.3 Local wiki compliance checklists are completely ignored when compliance checklists are successfully loaded

|

||||

|

||||

|

||||

## Configuration Options

|

||||

|

||||

???+ example "General options"

|

||||

|

||||

<table>

|

||||

<tr>

|

||||

<td><b>extra_instructions</b></td>

|

||||

<td>Optional extra instructions for the tool. For example: "Ensure that all error-handling paths in the code contain appropriate logging statements". Default is empty string.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>persistent_comment</b></td>

|

||||

<td>If set to true, the compliance comment will be persistent, meaning that every new compliance request will edit the previous one. Default is true.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>enable_user_defined_compliance_labels</b></td>

|

||||

<td>If set to true, the tool will add the label `Failed compliance check` for custom compliance violations. Default is true.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>enable_estimate_effort_to_review</b></td>

|

||||

<td>If set to true, the tool will estimate the effort required to review the PR (1-5 scale) as a label. Default is true.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>enable_todo_scan</b></td>

|

||||

<td>If set to true, the tool will scan for TODO comments in the PR code. Default is false.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>enable_update_pr_compliance_checkbox</b></td>

|

||||

<td>If set to true, the tool will add an update checkbox to refresh compliance status following push events. Default is true.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>enable_help_text</b></td>

|

||||

<td>If set to true, the tool will display help text in the comment. Default is false.</td>

|

||||

</tr>

|

||||

</table>

|

||||

|

||||

???+ example "Security compliance options"

|

||||

|

||||

<table>

|

||||

<tr>

|

||||

<td><b>enable_security_compliance</b></td>

|

||||

<td>If set to true, the tool will check for security vulnerabilities. Default is true.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>enable_compliance_labels_security</b></td>

|

||||

<td>If set to true, the tool will add a `Possible security concern` label to the PR when security-related concerns are detected. Default is true.</td>

|

||||

</tr>

|

||||

</table>

|

||||

|

||||

???+ example "Ticket compliance options"

|

||||

|

||||

<table>

|

||||

<tr>

|

||||

<td><b>enable_ticket_labels</b></td>

|

||||

<td>If set to true, the tool will add ticket compliance labels to the PR. Default is false.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>enable_no_ticket_labels</b></td>

|

||||

<td>If set to true, the tool will add a label when no ticket is found. Default is false.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>check_pr_additional_content</b></td>

|

||||

<td>If set to true, the tool will check if the PR contains content not related to the ticket. Default is false.</td>

|

||||

</tr>

|

||||

</table>

|

||||

|

||||

|

||||

## Usage Tips

|

||||

|

||||

### Blocking PRs Based on Compliance

|

||||

|

||||

!!! tip ""

|

||||

You can configure CI/CD Actions to prevent merging PRs with specific compliance labels:

|

||||

|

||||

- `Possible security concern` - Block PRs with potential security issues

|

||||

- `Failed compliance check` - Block PRs that violate custom compliance checklists

|

||||

|

||||

Implement a dedicated [GitHub Action](https://medium.com/sequra-tech/quick-tip-block-pull-request-merge-using-labels-6cc326936221) to enforce these checklists.

|

||||

|

||||

@ -124,6 +124,10 @@ This option is enabled by default via the `pr_description.enable_pr_diagram` par

|

||||

<td><b>enable_semantic_files_types</b></td>

|

||||

<td>If set to true, "Changes walkthrough" section will be generated. Default is true.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>file_table_collapsible_open_by_default</b></td>

|

||||

<td>If set to true, the file list in the "Changes walkthrough" section will be open by default. If set to false, it will be closed by default. Default is false.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>collapsible_file_list</b></td>

|

||||

<td>If set to true, the file list in the "Changes walkthrough" section will be collapsible. If set to "adaptive", the file list will be collapsible only if there are more than 8 files. Default is "adaptive".</td>

|

||||

@ -140,6 +144,10 @@ This option is enabled by default via the `pr_description.enable_pr_diagram` par

|

||||

<td><b>enable_pr_diagram</b></td>

|

||||

<td>If set to true, the tool will generate a horizontal Mermaid flowchart summarizing the main pull request changes. This field remains empty if not applicable. Default is true.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>auto_create_ticket</b></td>

|

||||

<td>If set to true, this will <a href="https://qodo-merge-docs.qodo.ai/tools/pr_to_ticket/">automatically create a ticket</a> in the ticketing system when a PR is opened. Default is false.</td>

|

||||

</tr>

|

||||

</table>

|

||||

|

||||

## Inline file summary 💎

|

||||

|

||||

@ -598,6 +598,10 @@ Note: Chunking is primarily relevant for large PRs. For most PRs (up to 600 line

|

||||

<td><b>num_code_suggestions_per_chunk</b></td>

|

||||

<td>Number of code suggestions provided by the 'improve' tool, per chunk. Default is 3.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>num_best_practice_suggestions 💎</b></td>

|

||||

<td>Number of code suggestions provided by the 'improve' tool for best practices. Default is 1.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>max_number_of_calls</b></td>

|

||||

<td>Maximum number of chunks. Default is 3.</td>

|

||||

|

||||

@ -14,11 +14,13 @@ Here is a list of Qodo Merge tools, each with a dedicated page that explains how

|

||||

| **💎 [Add Documentation (`/add_docs`](./documentation.md))** | Generates documentation to methods/functions/classes that changed in the PR |

|

||||

| **💎 [Analyze (`/analyze`](./analyze.md))** | Identify code components that changed in the PR, and enables to interactively generate tests, docs, and code suggestions for each component |

|

||||

| **💎 [CI Feedback (`/checks ci_job`](./ci_feedback.md))** | Automatically generates feedback and analysis for a failed CI job |

|

||||

| **💎 [Compliance (`/compliance`](./compliance.md))** | Comprehensive compliance checks for security, ticket requirements, and custom organizational rules |

|

||||

| **💎 [Custom Prompt (`/custom_prompt`](./custom_prompt.md))** | Automatically generates custom suggestions for improving the PR code, based on specific guidelines defined by the user |

|

||||

| **💎 [Generate Custom Labels (`/generate_labels`](./custom_labels.md))** | Generates custom labels for the PR, based on specific guidelines defined by the user |

|

||||

| **💎 [Generate Tests (`/test`](./test.md))** | Automatically generates unit tests for a selected component, based on the PR code changes |

|

||||

| **💎 [Implement (`/implement`](./implement.md))** | Generates implementation code from review suggestions |

|

||||

| **💎 [Improve Component (`/improve_component component_name`](./improve_component.md))** | Generates code suggestions for a specific code component that changed in the PR |

|

||||

| **💎 [PR to Ticket (`/create_ticket`](./pr_to_ticket.md))** | Generates ticket in the ticket tracking systems (Jira, Linear, or Git provider issues) based on PR content |

|

||||

| **💎 [Scan Repo Discussions (`/scan_repo_discussions`](./scan_repo_discussions.md))** | Generates `best_practices.md` file based on previous discussions in the repository |

|

||||

| **💎 [Similar Code (`/similar_code`](./similar_code.md))** | Retrieves the most similar code components from inside the organization's codebase, or from open-source code. |

|

||||

|

||||

|

||||

87

docs/docs/tools/pr_to_ticket.md

Normal file

87

docs/docs/tools/pr_to_ticket.md

Normal file

@ -0,0 +1,87 @@

|

||||

`Platforms supported: GitHub, GitLab, Bitbucket`

|

||||

|

||||

## Overview

|

||||

The `create_ticket` tool automatically generates tickets in ticket tracking systems (`Jira`, `Linear`, or `GitHub Issues`) based on PR content.

|

||||

|

||||

It analyzes the PR's data (code changes, commit messages, and description) to create well-structured tickets that capture the essence of the development work, helping teams maintain traceability between code changes and project management systems.

|

||||

|

||||

When a ticket is created, it appears in the PR description under an `Auto-created Ticket` section, complete with a link to the generated ticket.

|

||||

|

||||

{width=512}

|

||||

|

||||

!!! info "Pre-requisites"

|

||||

- To use this tool you need to integrate your ticketing system with Qodo-merge, follow the [Ticket Compliance Documentation](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/).

|

||||

- For Jira Cloud users, please re-integrate your connection through the [qodo merge integration page](https://app.qodo.ai/qodo-merge/integrations) to enable the `update` permission required for ticket creation

|

||||

- You need to configure the project key in ticket corresponding to the repository where the PR is created. This is done by adding the `default_project_key`.

|

||||

|

||||

```toml

|

||||

[pr_to_ticket]

|

||||

default_project_key = "PROJECT_KEY" # e.g., "SCRUM"

|

||||

```

|

||||

|

||||

## Usage

|

||||

there are 3 ways to use the `create_ticket` tool:

|

||||

|

||||

1. [**Automatic Ticket Creation**](#automatic-ticket-creation)

|

||||

2. [**Interactive Triggering via Compliance Tool**](#interactive-triggering-via-compliance-tool)

|

||||

3. [**Manual Ticket Creation**](#manual-ticket-creation)

|

||||

|

||||

### Automatic Ticket Creation

|

||||

The tool can be configured to automatically create tickets when a PR is opened or updated and the PR does not already have a ticket associated with it.

|

||||

This ensures that every code change is documented in the ticketing system without manual intervention.

|

||||

|

||||

To configure automatic ticket creation, add the following to `.pr_agent.toml`:

|

||||

|

||||

```toml

|

||||

[pr_description]

|

||||

auto_create_ticket = true

|

||||

```

|

||||

|

||||

### Interactive Triggering via Compliance Tool

|

||||

`Supported only in Github and Gitlab`

|

||||

|

||||

The tool can be triggered interactively through a checkbox in the compliance tool. This allows users to create tickets as part of their PR Compliance Review workflow.

|

||||

|

||||

{width=512}

|

||||

|

||||

- After clicking the checkbox, the tool will create a ticket and will add/update the `PR Description` with a section called `Auto-created Ticket` with the link to the created ticket.

|

||||

- Then you can click `update` in the `Ticket compliance` section in the `Compliance` tool

|

||||

|

||||

{width=512}

|

||||

|

||||

### Manual Ticket Creation

|

||||

Users can manually trigger the ticket creation process from the PR interface.

|

||||

|

||||

To trigger ticket creation manually, the user can call this tool from the PR comment:

|

||||

|

||||

```

|

||||

/create_ticket

|

||||

```

|

||||

|

||||

After triggering, the tool will create a ticket and will add/update the `PR Description` with a section called `Auto-created Ticket` with the link to the created ticket.

|

||||

|

||||

|

||||

## Configuration

|

||||

|

||||

## Configuration Options

|

||||

|

||||

???+ example "Configuration"

|

||||

|

||||

<table>

|

||||

<tr>

|

||||

<td><b>default_project_key</b></td>

|

||||

<td>The default project key for your ticketing system (e.g., `SCRUM`). This is required unless `fallback_to_git_provider_issues` is set to `true`.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>default_base_url</b></td>

|

||||

<td>If your organization have integrated to multiple ticketing systems, you can set the default base URL for the ticketing system. This will be used to create tickets in the default system. Example: `https://YOUR-ORG.atlassian.net`.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>fallback_to_git_provider_issues</b></td>

|

||||

<td>If set to `true`, the tool will create issues in the Git provider's issue tracker (GitHub) if the `default_project_key` is not configured in the repository configuration. Default is `false`.</td>

|

||||

</tr>

|

||||

</table>

|

||||

|

||||

|

||||

## Helping Your Organization Meet SOC-2 Requirements

|

||||

The `create_ticket` tool helps your organization satisfy SOC-2 compliance. By automatically creating tickets from PRs and establishing bidirectional links between them, it ensures every code change is traceable to its corresponding business requirement or task.

|

||||

@ -246,7 +246,7 @@ To supplement the automatic bot detection, you can manually specify users to ign

|

||||

ignore_pr_authors = ["my-special-bot-user", ...]

|

||||

```

|

||||

|

||||

Where the `ignore_pr_authors` is a list of usernames that you want to ignore.

|

||||

Where the `ignore_pr_authors` is a regex list of usernames that you want to ignore.

|

||||

|

||||

!!! note

|

||||

There is one specific case where bots will receive an automatic response - when they generated a PR with a _failed test_. In that case, the [`ci_feedback`](https://qodo-merge-docs.qodo.ai/tools/ci_feedback/) tool will be invoked.

|

||||

|

||||

@ -30,7 +30,7 @@ verbosity_level=2

|

||||

This is useful for debugging or experimenting with different tools.

|

||||

|

||||

3. **git provider**: The [git_provider](https://github.com/Codium-ai/pr-agent/blob/main/pr_agent/settings/configuration.toml#L5) field in a configuration file determines the GIT provider that will be used by Qodo Merge. Currently, the following providers are supported:

|

||||

`github` **(default)**, `gitlab`, `bitbucket`, `azure`, `codecommit`, `local`,`gitea`, and `gerrit`.

|

||||

`github` **(default)**, `gitlab`, `bitbucket`, `azure`, `codecommit`, `local`, and `gitea`.

|

||||

|

||||

### CLI Health Check

|

||||

|

||||

@ -202,6 +202,25 @@ publish_labels = false

|

||||

|

||||

to prevent Qodo Merge from publishing labels when running the `describe` tool.

|

||||

|

||||

#### Quick Reference: Model Configuration in GitHub Actions

|

||||

|

||||

For detailed step-by-step examples of configuring different models (Gemini, Claude, Azure OpenAI, etc.) in GitHub Actions, see the [Configuration Examples](../installation/github.md#configuration-examples) section in the installation guide.

|

||||

|

||||

**Common Model Configuration Patterns:**

|

||||

|

||||

- **OpenAI**: Set `config.model: "gpt-4o"` and `OPENAI_KEY`

|

||||

- **Gemini**: Set `config.model: "gemini/gemini-1.5-flash"` and `GOOGLE_AI_STUDIO.GEMINI_API_KEY` (no `OPENAI_KEY` needed)

|

||||

- **Claude**: Set `config.model: "anthropic/claude-3-opus-20240229"` and `ANTHROPIC.KEY` (no `OPENAI_KEY` needed)

|

||||

- **Azure OpenAI**: Set `OPENAI.API_TYPE: "azure"`, `OPENAI.API_BASE`, and `OPENAI.DEPLOYMENT_ID`

|

||||

- **Local Models**: Set `config.model: "ollama/model-name"` and `OLLAMA.API_BASE`

|

||||

|

||||

**Environment Variable Format:**

|

||||

- Use dots (`.`) to separate sections and keys: `config.model`, `pr_reviewer.extra_instructions`

|

||||

- Boolean values as strings: `"true"` or `"false"`

|

||||

- Arrays as JSON strings: `'["item1", "item2"]'`

|

||||

|

||||

For complete model configuration details, see [Changing a model in PR-Agent](changing_a_model.md).

|

||||

|

||||

### GitLab Webhook

|

||||

|

||||

After setting up a GitLab webhook, to control which commands will run automatically when a new MR is opened, you can set the `pr_commands` parameter in the configuration file, similar to the GitHub App:

|

||||

|

||||

@ -32,6 +32,16 @@ OPENAI__API_BASE=https://api.openai.com/v1

|

||||

OPENAI__KEY=sk-...

|

||||

```

|

||||

|

||||

### OpenAI Flex Processing

|

||||

|

||||

To reduce costs for non-urgent/background tasks, enable Flex Processing:

|

||||

|

||||

```toml

|

||||

[litellm]

|

||||

extra_body='{"processing_mode": "flex"}'

|

||||

```

|

||||

|

||||

See [OpenAI Flex Processing docs](https://platform.openai.com/docs/guides/flex-processing) for details.

|

||||

|

||||

### Azure

|

||||

|

||||

|

||||

@ -34,11 +34,13 @@ nav:

|

||||

- 💎 Add Documentation: 'tools/documentation.md'

|

||||

- 💎 Analyze: 'tools/analyze.md'

|

||||

- 💎 CI Feedback: 'tools/ci_feedback.md'

|

||||

- 💎 Compliance: 'tools/compliance.md'

|

||||

- 💎 Custom Prompt: 'tools/custom_prompt.md'

|

||||

- 💎 Generate Labels: 'tools/custom_labels.md'

|

||||

- 💎 Generate Tests: 'tools/test.md'

|

||||

- 💎 Implement: 'tools/implement.md'

|

||||

- 💎 Improve Components: 'tools/improve_component.md'

|

||||

- 💎 PR to Ticket: 'tools/pr_to_ticket.md'

|

||||

- 💎 Scan Repo Discussions: 'tools/scan_repo_discussions.md'

|

||||

- 💎 Similar Code: 'tools/similar_code.md'

|

||||

- Core Abilities:

|

||||

|

||||

@ -3,5 +3,5 @@

|

||||

new Date().getTime(),event:'gtm.js'});var f=d.getElementsByTagName(s)[0],

|

||||

j=d.createElement(s),dl=l!='dataLayer'?'&l='+l:'';j.async=true;j.src=

|

||||

'https://www.googletagmanager.com/gtm.js?id='+i+dl;f.parentNode.insertBefore(j,f);

|

||||

})(window,document,'script','dataLayer','GTM-M6PJSFV');</script>

|

||||

})(window,document,'script','dataLayer','GTM-5C9KZBM3');</script>

|

||||

<!-- End Google Tag Manager -->

|

||||

|

||||

@ -45,6 +45,7 @@ MAX_TOKENS = {

|

||||

'command-nightly': 4096,

|

||||

'deepseek/deepseek-chat': 128000, # 128K, but may be limited by config.max_model_tokens