Compare commits

126 Commits

add-pr-tem

...

test-almog

| Author | SHA1 | Date | |

|---|---|---|---|

| 94826f81c8 | |||

| d6f405dd0d | |||

| 25ba9414fe | |||

| d097266c38 | |||

| fa1eda967f | |||

| c89c0eab8c | |||

| 93e34703ab | |||

| c15ed628db | |||

| 70f47336d6 | |||

| c6a6a2f352 | |||

| 1dc3db7322 | |||

| 049fc558a8 | |||

| 2dc89d0998 | |||

| 07bbfff4ba | |||

| 328637b8be | |||

| 44d9535dbc | |||

| 7fec17f3ff | |||

| cd15f64f11 | |||

| d4ac206c46 | |||

| 444910868e | |||

| a24b06b253 | |||

| 393516f746 | |||

| 152b111ef2 | |||

| 56250f5ea8 | |||

| 7b1df82c05 | |||

| 05960f2c3f | |||

| feb306727e | |||

| 2a647709c4 | |||

| a4cd05e71c | |||

| da6ef8c80f | |||

| 775bfc74eb | |||

| ebdbde1bca | |||

| e16c6d0b27 | |||

| f0b52870a2 | |||

| 1b35f01aa1 | |||

| a0dc9deb30 | |||

| 7e32a08f00 | |||

| 84983f3e9d | |||

| 71451de156 | |||

| 0e4a1d9ab8 | |||

| e7b05732f8 | |||

| 37083ae354 | |||

| 020ef212c1 | |||

| 01cd66f4f5 | |||

| af72b45593 | |||

| 9abb212e83 | |||

| e81b0dca30 | |||

| d37732c25d | |||

| e6b6e28d6b | |||

| 5bace4ddc6 | |||

| b80d7d1189 | |||

| b2d8dee00a | |||

| ac3dbdf5fc | |||

| f143a24879 | |||

| 347af1dd99 | |||

| d91245a9d3 | |||

| bfdaac0a05 | |||

| 183d2965d0 | |||

| 86647810e0 | |||

| 56978d9793 | |||

| 6433e827f4 | |||

| c0e78ba522 | |||

| 45d776a1f7 | |||

| 6e19e77e5e | |||

| 1e98d27ab4 | |||

| a47d4032b8 | |||

| 2887d0a7ed | |||

| a07f6855cb | |||

| 237a6ffb5f | |||

| 5e1cc12df4 | |||

| 29a350b4f8 | |||

| 6efcd61087 | |||

| c7cafa720e | |||

| 9de9b397e2 | |||

| 35059cadf7 | |||

| 0317951e32 | |||

| 4edb8b89d1 | |||

| a5278bdad2 | |||

| 3fd586c9bd | |||

| 717b2fe5f1 | |||

| 262c1cbc68 | |||

| defdaa0e02 | |||

| 5ca6918943 | |||

| cfd813883b | |||

| c6d32a4c9f | |||

| ae4e99026e | |||

| 42f493f41e | |||

| 34d73feb1d | |||

| ebc94bbd44 | |||

| 4fcc7a5f3a | |||

| 41760ea333 | |||

| 13128c4c2f | |||

| da168151e8 | |||

| a132927052 | |||

| b52d0726a2 | |||

| fc411dc8bc | |||

| 22f02ac08c | |||

| 52883fb1b5 | |||

| adfc2a6b69 | |||

| c4aa13e798 | |||

| 90575e3f0d | |||

| fcbe986ec7 | |||

| 061fec0d36 | |||

| 778d00d1a0 | |||

| cc8d5a6c50 | |||

| 62c47f9cb5 | |||

| bb31b0c66b | |||

| 359c963ad1 | |||

| 130b1ff4fb | |||

| 605a4b99ad | |||

| b989f41b96 | |||

| 26168a605b | |||

| 2c37b02aa0 | |||

| a2550870c2 | |||

| 279c6ead8f | |||

| c9500cf796 | |||

| 0f63d8685f | |||

| 77204faa51 | |||

| 43fb8ff433 | |||

| cd129d8b27 | |||

| 04aff0d3b2 | |||

| be1dd4bd20 | |||

| b3b89e7138 | |||

| 9045723084 | |||

| 34e22a2c8e | |||

| 1d784c60cb |

@ -3,7 +3,7 @@ FROM python:3.12 as base

|

|||||||

WORKDIR /app

|

WORKDIR /app

|

||||||

ADD pyproject.toml .

|

ADD pyproject.toml .

|

||||||

ADD requirements.txt .

|

ADD requirements.txt .

|

||||||

RUN pip install . && rm pyproject.toml requirements.txt

|

RUN pip install --no-cache-dir . && rm pyproject.toml requirements.txt

|

||||||

ENV PYTHONPATH=/app

|

ENV PYTHONPATH=/app

|

||||||

ADD docs docs

|

ADD docs docs

|

||||||

ADD pr_agent pr_agent

|

ADD pr_agent pr_agent

|

||||||

|

|||||||

@ -1,48 +0,0 @@

|

|||||||

## 📌 Pull Request Template

|

|

||||||

|

|

||||||

### 1️⃣ Short Description

|

|

||||||

<!-- Provide a concise summary of the changes in this PR. -->

|

|

||||||

|

|

||||||

---

|

|

||||||

|

|

||||||

### 2️⃣ Related Open Issue

|

|

||||||

<!-- Link the related issue(s) this PR is addressing, e.g., Fixes #123 or Closes #456. -->

|

|

||||||

Fixes #

|

|

||||||

|

|

||||||

---

|

|

||||||

|

|

||||||

### 3️⃣ PR Type

|

|

||||||

<!-- Select one of the following by marking `[x]` -->

|

|

||||||

|

|

||||||

- [ ] 🐞 Bug Fix

|

|

||||||

- [ ] ✨ New Feature

|

|

||||||

- [ ] 🔄 Refactoring

|

|

||||||

- [ ] 📖 Documentation Update

|

|

||||||

|

|

||||||

---

|

|

||||||

|

|

||||||

### 4️⃣ Does this PR Introduce a Breaking Change?

|

|

||||||

<!-- Mark the applicable option -->

|

|

||||||

- [ ] ❌ No

|

|

||||||

- [ ] ⚠️ Yes (Explain below)

|

|

||||||

|

|

||||||

If **yes**, describe the impact and necessary migration steps:

|

|

||||||

<!-- Provide a short explanation of what needs to be changed. -->

|

|

||||||

|

|

||||||

---

|

|

||||||

|

|

||||||

### 5️⃣ Current Behavior (Before Changes)

|

|

||||||

<!-- Describe the existing behavior before applying the changes in this PR. -->

|

|

||||||

|

|

||||||

---

|

|

||||||

|

|

||||||

### 6️⃣ New Behavior (After Changes)

|

|

||||||

<!-- Explain how the behavior changes with this PR. -->

|

|

||||||

|

|

||||||

---

|

|

||||||

|

|

||||||

### ✅ Checklist

|

|

||||||

- [ ] Code follows the project's coding guidelines.

|

|

||||||

- [ ] Tests have been added or updated (if applicable).

|

|

||||||

- [ ] Documentation has been updated (if applicable).

|

|

||||||

- [ ] Ready for review and approval.

|

|

||||||

78

README.md

@ -4,12 +4,18 @@

|

|||||||

|

|

||||||

|

|

||||||

<picture>

|

<picture>

|

||||||

<source media="(prefers-color-scheme: dark)" srcset="https://codium.ai/images/pr_agent/logo-dark.png" width="330">

|

<source media="(prefers-color-scheme: dark)" srcset="https://www.qodo.ai/wp-content/uploads/2025/02/PR-Agent-Purple-2.png">

|

||||||

<source media="(prefers-color-scheme: light)" srcset="https://codium.ai/images/pr_agent/logo-light.png" width="330">

|

<source media="(prefers-color-scheme: light)" srcset="https://www.qodo.ai/wp-content/uploads/2025/02/PR-Agent-Purple-2.png">

|

||||||

<img src="https://codium.ai/images/pr_agent/logo-light.png" alt="logo" width="330">

|

<img src="https://codium.ai/images/pr_agent/logo-light.png" alt="logo" width="330">

|

||||||

|

|

||||||

</picture>

|

</picture>

|

||||||

<br/>

|

<br/>

|

||||||

|

|

||||||

|

[Installation Guide](https://qodo-merge-docs.qodo.ai/installation/) |

|

||||||

|

[Usage Guide](https://qodo-merge-docs.qodo.ai/usage-guide/) |

|

||||||

|

[Tools Guide](https://qodo-merge-docs.qodo.ai/tools/) |

|

||||||

|

[Qodo Merge](https://qodo-merge-docs.qodo.ai/overview/pr_agent_pro/) 💎

|

||||||

|

|

||||||

PR-Agent aims to help efficiently review and handle pull requests, by providing AI feedback and suggestions

|

PR-Agent aims to help efficiently review and handle pull requests, by providing AI feedback and suggestions

|

||||||

</div>

|

</div>

|

||||||

|

|

||||||

@ -22,13 +28,16 @@ PR-Agent aims to help efficiently review and handle pull requests, by providing

|

|||||||

</a>

|

</a>

|

||||||

</div>

|

</div>

|

||||||

|

|

||||||

### [Documentation](https://qodo-merge-docs.qodo.ai/)

|

[//]: # (### [Documentation](https://qodo-merge-docs.qodo.ai/))

|

||||||

|

|

||||||

- See the [Installation Guide](https://qodo-merge-docs.qodo.ai/installation/) for instructions on installing PR-Agent on different platforms.

|

[//]: # ()

|

||||||

|

[//]: # (- See the [Installation Guide](https://qodo-merge-docs.qodo.ai/installation/) for instructions on installing PR-Agent on different platforms.)

|

||||||

|

|

||||||

- See the [Usage Guide](https://qodo-merge-docs.qodo.ai/usage-guide/) for instructions on running PR-Agent tools via different interfaces, such as CLI, PR Comments, or by automatically triggering them when a new PR is opened.

|

[//]: # ()

|

||||||

|

[//]: # (- See the [Usage Guide](https://qodo-merge-docs.qodo.ai/usage-guide/) for instructions on running PR-Agent tools via different interfaces, such as CLI, PR Comments, or by automatically triggering them when a new PR is opened.)

|

||||||

|

|

||||||

- See the [Tools Guide](https://qodo-merge-docs.qodo.ai/tools/) for a detailed description of the different tools, and the available configurations for each tool.

|

[//]: # ()

|

||||||

|

[//]: # (- See the [Tools Guide](https://qodo-merge-docs.qodo.ai/tools/) for a detailed description of the different tools, and the available configurations for each tool.)

|

||||||

|

|

||||||

|

|

||||||

## Table of Contents

|

## Table of Contents

|

||||||

@ -37,12 +46,17 @@ PR-Agent aims to help efficiently review and handle pull requests, by providing

|

|||||||

- [Overview](#overview)

|

- [Overview](#overview)

|

||||||

- [Example results](#example-results)

|

- [Example results](#example-results)

|

||||||

- [Try it now](#try-it-now)

|

- [Try it now](#try-it-now)

|

||||||

- [Qodo Merge 💎](https://qodo-merge-docs.qodo.ai/overview/pr_agent_pro/)

|

- [Qodo Merge](https://qodo-merge-docs.qodo.ai/overview/pr_agent_pro/)

|

||||||

- [How it works](#how-it-works)

|

- [How it works](#how-it-works)

|

||||||

- [Why use PR-Agent?](#why-use-pr-agent)

|

- [Why use PR-Agent?](#why-use-pr-agent)

|

||||||

|

|

||||||

## News and Updates

|

## News and Updates

|

||||||

|

|

||||||

|

### Feb 6, 2025

|

||||||

|

New design for the `/improve` tool:

|

||||||

|

|

||||||

|

<kbd><img src="https://github.com/user-attachments/assets/26506430-550e-469a-adaa-af0a09b70c6d" width="512"></kbd>

|

||||||

|

|

||||||

### Jan 25, 2025

|

### Jan 25, 2025

|

||||||

|

|

||||||

The open-source GitHub organization was updated:

|

The open-source GitHub organization was updated:

|

||||||

@ -60,7 +74,7 @@ to

|

|||||||

|

|

||||||

New tool [/Implement](https://qodo-merge-docs.qodo.ai/tools/implement/) (💎), which converts human code review discussions and feedback into ready-to-commit code changes.

|

New tool [/Implement](https://qodo-merge-docs.qodo.ai/tools/implement/) (💎), which converts human code review discussions and feedback into ready-to-commit code changes.

|

||||||

|

|

||||||

<kbd><img src="https://www.qodo.ai/images/pr_agent/implement1.png" width="512"></kbd>

|

<kbd><img src="https://www.qodo.ai/images/pr_agent/implement1.png?v=2" width="512"></kbd>

|

||||||

|

|

||||||

|

|

||||||

### Jan 1, 2025

|

### Jan 1, 2025

|

||||||

@ -69,40 +83,7 @@ Update logic and [documentation](https://qodo-merge-docs.qodo.ai/usage-guide/cha

|

|||||||

|

|

||||||

### December 30, 2024

|

### December 30, 2024

|

||||||

|

|

||||||

Following feedback from the community, we have addressed two vulnerabilities identified in the open-source PR-Agent project. The fixes are now included in the newly released version (v0.26), available as of today.

|

Following feedback from the community, we have addressed two vulnerabilities identified in the open-source PR-Agent project. The [fixes](https://github.com/qodo-ai/pr-agent/pull/1425) are now included in the newly released version (v0.26), available as of today.

|

||||||

|

|

||||||

### December 25, 2024

|

|

||||||

|

|

||||||

The `review` tool previously included a legacy feature for providing code suggestions (controlled by '--pr_reviewer.num_code_suggestion'). This functionality has been deprecated. Use instead the [`improve`](https://qodo-merge-docs.qodo.ai/tools/improve/) tool, which offers higher quality and more actionable code suggestions.

|

|

||||||

|

|

||||||

### December 2, 2024

|

|

||||||

|

|

||||||

Open-source repositories can now freely use Qodo Merge, and enjoy easy one-click installation using a marketplace [app](https://github.com/apps/qodo-merge-pro-for-open-source).

|

|

||||||

|

|

||||||

<kbd><img src="https://github.com/user-attachments/assets/b0838724-87b9-43b0-ab62-73739a3a855c" width="512"></kbd>

|

|

||||||

|

|

||||||

See [here](https://qodo-merge-docs.qodo.ai/installation/pr_agent_pro/) for more details about installing Qodo Merge for private repositories.

|

|

||||||

|

|

||||||

|

|

||||||

### November 18, 2024

|

|

||||||

|

|

||||||

A new mode was enabled by default for code suggestions - `--pr_code_suggestions.focus_only_on_problems=true`:

|

|

||||||

|

|

||||||

- This option reduces the number of code suggestions received

|

|

||||||

- The suggestions will focus more on identifying and fixing code problems, rather than style considerations like best practices, maintainability, or readability.

|

|

||||||

- The suggestions will be categorized into just two groups: "Possible Issues" and "General".

|

|

||||||

|

|

||||||

Still, if you prefer the previous mode, you can set `--pr_code_suggestions.focus_only_on_problems=false` in the [configuration file](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/).

|

|

||||||

|

|

||||||

**Example results:**

|

|

||||||

|

|

||||||

Original mode

|

|

||||||

|

|

||||||

<kbd><img src="https://qodo.ai/images/pr_agent/code_suggestions_original_mode.png" width="512"></kbd>

|

|

||||||

|

|

||||||

Focused mode

|

|

||||||

|

|

||||||

<kbd><img src="https://qodo.ai/images/pr_agent/code_suggestions_focused_mode.png" width="512"></kbd>

|

|

||||||

|

|

||||||

|

|

||||||

## Overview

|

## Overview

|

||||||

@ -111,7 +92,7 @@ Focused mode

|

|||||||

Supported commands per platform:

|

Supported commands per platform:

|

||||||

|

|

||||||

| | | GitHub | GitLab | Bitbucket | Azure DevOps |

|

| | | GitHub | GitLab | Bitbucket | Azure DevOps |

|

||||||

|-------|---------------------------------------------------------------------------------------------------------|:--------------------:|:--------------------:|:--------------------:|:------------:|

|

|-------|---------------------------------------------------------------------------------------------------------|:--------------------:|:--------------------:|:---------:|:------------:|

|

||||||

| TOOLS | [Review](https://qodo-merge-docs.qodo.ai/tools/review/) | ✅ | ✅ | ✅ | ✅ |

|

| TOOLS | [Review](https://qodo-merge-docs.qodo.ai/tools/review/) | ✅ | ✅ | ✅ | ✅ |

|

||||||

| | [Describe](https://qodo-merge-docs.qodo.ai/tools/describe/) | ✅ | ✅ | ✅ | ✅ |

|

| | [Describe](https://qodo-merge-docs.qodo.ai/tools/describe/) | ✅ | ✅ | ✅ | ✅ |

|

||||||

| | [Improve](https://qodo-merge-docs.qodo.ai/tools/improve/) | ✅ | ✅ | ✅ | ✅ |

|

| | [Improve](https://qodo-merge-docs.qodo.ai/tools/improve/) | ✅ | ✅ | ✅ | ✅ |

|

||||||

@ -130,6 +111,7 @@ Supported commands per platform:

|

|||||||

| | [Custom Prompt](https://qodo-merge-docs.qodo.ai/tools/custom_prompt/) 💎 | ✅ | ✅ | ✅ | |

|

| | [Custom Prompt](https://qodo-merge-docs.qodo.ai/tools/custom_prompt/) 💎 | ✅ | ✅ | ✅ | |

|

||||||

| | [Test](https://qodo-merge-docs.qodo.ai/tools/test/) 💎 | ✅ | ✅ | | |

|

| | [Test](https://qodo-merge-docs.qodo.ai/tools/test/) 💎 | ✅ | ✅ | | |

|

||||||

| | [Implement](https://qodo-merge-docs.qodo.ai/tools/implement/) 💎 | ✅ | ✅ | ✅ | |

|

| | [Implement](https://qodo-merge-docs.qodo.ai/tools/implement/) 💎 | ✅ | ✅ | ✅ | |

|

||||||

|

| | [Auto-Approve](https://qodo-merge-docs.qodo.ai/tools/improve/?h=auto#auto-approval) 💎 | ✅ | ✅ | ✅ | |

|

||||||

| | | | | | |

|

| | | | | | |

|

||||||

| USAGE | [CLI](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#local-repo-cli) | ✅ | ✅ | ✅ | ✅ |

|

| USAGE | [CLI](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#local-repo-cli) | ✅ | ✅ | ✅ | ✅ |

|

||||||

| | [App / webhook](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#github-app) | ✅ | ✅ | ✅ | ✅ |

|

| | [App / webhook](https://qodo-merge-docs.qodo.ai/usage-guide/automations_and_usage/#github-app) | ✅ | ✅ | ✅ | ✅ |

|

||||||

@ -142,7 +124,7 @@ Supported commands per platform:

|

|||||||

| | [Local and global metadata](https://qodo-merge-docs.qodo.ai/core-abilities/metadata/) | ✅ | ✅ | ✅ | ✅ |

|

| | [Local and global metadata](https://qodo-merge-docs.qodo.ai/core-abilities/metadata/) | ✅ | ✅ | ✅ | ✅ |

|

||||||

| | [Dynamic context](https://qodo-merge-docs.qodo.ai/core-abilities/dynamic_context/) | ✅ | ✅ | ✅ | ✅ |

|

| | [Dynamic context](https://qodo-merge-docs.qodo.ai/core-abilities/dynamic_context/) | ✅ | ✅ | ✅ | ✅ |

|

||||||

| | [Self reflection](https://qodo-merge-docs.qodo.ai/core-abilities/self_reflection/) | ✅ | ✅ | ✅ | ✅ |

|

| | [Self reflection](https://qodo-merge-docs.qodo.ai/core-abilities/self_reflection/) | ✅ | ✅ | ✅ | ✅ |

|

||||||

| | [Static code analysis](https://qodo-merge-docs.qodo.ai/core-abilities/static_code_analysis/) 💎 | ✅ | ✅ | ✅ | |

|

| | [Static code analysis](https://qodo-merge-docs.qodo.ai/core-abilities/static_code_analysis/) 💎 | ✅ | ✅ | | |

|

||||||

| | [Global and wiki configurations](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/) 💎 | ✅ | ✅ | ✅ | |

|

| | [Global and wiki configurations](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/) 💎 | ✅ | ✅ | ✅ | |

|

||||||

| | [PR interactive actions](https://www.qodo.ai/images/pr_agent/pr-actions.mp4) 💎 | ✅ | ✅ | | |

|

| | [PR interactive actions](https://www.qodo.ai/images/pr_agent/pr-actions.mp4) 💎 | ✅ | ✅ | | |

|

||||||

| | [Impact Evaluation](https://qodo-merge-docs.qodo.ai/core-abilities/impact_evaluation/) 💎 | ✅ | ✅ | | |

|

| | [Impact Evaluation](https://qodo-merge-docs.qodo.ai/core-abilities/impact_evaluation/) 💎 | ✅ | ✅ | | |

|

||||||

@ -232,12 +214,6 @@ Note that this is a promotional bot, suitable only for initial experimentation.

|

|||||||

It does not have 'edit' access to your repo, for example, so it cannot update the PR description or add labels (`@CodiumAI-Agent /describe` will publish PR description as a comment). In addition, the bot cannot be used on private repositories, as it does not have access to the files there.

|

It does not have 'edit' access to your repo, for example, so it cannot update the PR description or add labels (`@CodiumAI-Agent /describe` will publish PR description as a comment). In addition, the bot cannot be used on private repositories, as it does not have access to the files there.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

To set up your own PR-Agent, see the [Installation](https://qodo-merge-docs.qodo.ai/installation/) section below.

|

|

||||||

Note that when you set your own PR-Agent or use Qodo hosted PR-Agent, there is no need to mention `@CodiumAI-Agent ...`. Instead, directly start with the command, e.g., `/ask ...`.

|

|

||||||

|

|

||||||

---

|

---

|

||||||

|

|

||||||

|

|

||||||

@ -292,8 +268,6 @@ https://openai.com/enterprise-privacy

|

|||||||

|

|

||||||

## Links

|

## Links

|

||||||

|

|

||||||

[](https://discord.gg/kG35uSHDBc)

|

|

||||||

|

|

||||||

- Discord community: https://discord.gg/kG35uSHDBc

|

- Discord community: https://discord.gg/kG35uSHDBc

|

||||||

- Qodo site: https://www.qodo.ai/

|

- Qodo site: https://www.qodo.ai/

|

||||||

- Blog: https://www.qodo.ai/blog/

|

- Blog: https://www.qodo.ai/blog/

|

||||||

|

|||||||

315

docs/docs/ai_search/index.md

Normal file

@ -0,0 +1,315 @@

|

|||||||

|

<div class="search-section">

|

||||||

|

<h1>AI Docs Search</h1>

|

||||||

|

<p class="search-description">

|

||||||

|

Search through our documentation using AI-powered natural language queries.

|

||||||

|

</p>

|

||||||

|

<div class="search-container">

|

||||||

|

<input

|

||||||

|

type="text"

|

||||||

|

id="searchInput"

|

||||||

|

class="search-input"

|

||||||

|

placeholder="Enter your search term..."

|

||||||

|

>

|

||||||

|

<button id="searchButton" class="search-button">Search</button>

|

||||||

|

</div>

|

||||||

|

<div id="spinner" class="spinner-container" style="display: none;">

|

||||||

|

<div class="spinner"></div>

|

||||||

|

</div>

|

||||||

|

<div id="results" class="results-container"></div>

|

||||||

|

</div>

|

||||||

|

|

||||||

|

<style>

|

||||||

|

Untitled

|

||||||

|

.search-section {

|

||||||

|

max-width: 800px;

|

||||||

|

margin: 0 auto;

|

||||||

|

padding: 0 1rem 2rem;

|

||||||

|

}

|

||||||

|

|

||||||

|

h1 {

|

||||||

|

color: #666;

|

||||||

|

font-size: 2.125rem;

|

||||||

|

font-weight: normal;

|

||||||

|

margin-bottom: 1rem;

|

||||||

|

}

|

||||||

|

|

||||||

|

.search-description {

|

||||||

|

color: #666;

|

||||||

|

font-size: 1rem;

|

||||||

|

line-height: 1.5;

|

||||||

|

margin-bottom: 2rem;

|

||||||

|

max-width: 800px;

|

||||||

|

}

|

||||||

|

|

||||||

|

.search-container {

|

||||||

|

display: flex;

|

||||||

|

gap: 1rem;

|

||||||

|

max-width: 800px;

|

||||||

|

margin: 0; /* Changed from auto to 0 to align left */

|

||||||

|

}

|

||||||

|

|

||||||

|

.search-input {

|

||||||

|

flex: 1;

|

||||||

|

padding: 0 0.875rem;

|

||||||

|

border: 1px solid #ddd;

|

||||||

|

border-radius: 4px;

|

||||||

|

font-size: 0.9375rem;

|

||||||

|

outline: none;

|

||||||

|

height: 40px; /* Explicit height */

|

||||||

|

}

|

||||||

|

|

||||||

|

.search-input:focus {

|

||||||

|

border-color: #6c63ff;

|

||||||

|

}

|

||||||

|

|

||||||

|

.search-button {

|

||||||

|

padding: 0 1.25rem;

|

||||||

|

background-color: #2196F3;

|

||||||

|

color: white;

|

||||||

|

border: none;

|

||||||

|

border-radius: 4px;

|

||||||

|

cursor: pointer;

|

||||||

|

font-size: 0.875rem;

|

||||||

|

transition: background-color 0.2s;

|

||||||

|

height: 40px; /* Match the height of search input */

|

||||||

|

display: flex;

|

||||||

|

align-items: center;

|

||||||

|

justify-content: center;

|

||||||

|

}

|

||||||

|

|

||||||

|

.search-button:hover {

|

||||||

|

background-color: #1976D2;

|

||||||

|

}

|

||||||

|

|

||||||

|

.spinner-container {

|

||||||

|

display: flex;

|

||||||

|

justify-content: center;

|

||||||

|

margin-top: 2rem;

|

||||||

|

}

|

||||||

|

|

||||||

|

.spinner {

|

||||||

|

width: 40px;

|

||||||

|

height: 40px;

|

||||||

|

border: 4px solid #f3f3f3;

|

||||||

|

border-top: 4px solid #2196F3;

|

||||||

|

border-radius: 50%;

|

||||||

|

animation: spin 1s linear infinite;

|

||||||

|

}

|

||||||

|

|

||||||

|

@keyframes spin {

|

||||||

|

0% { transform: rotate(0deg); }

|

||||||

|

100% { transform: rotate(360deg); }

|

||||||

|

}

|

||||||

|

|

||||||

|

.results-container {

|

||||||

|

margin-top: 2rem;

|

||||||

|

max-width: 800px;

|

||||||

|

}

|

||||||

|

|

||||||

|

.result-item {

|

||||||

|

padding: 1rem;

|

||||||

|

border: 1px solid #ddd;

|

||||||

|

border-radius: 4px;

|

||||||

|

margin-bottom: 1rem;

|

||||||

|

}

|

||||||

|

|

||||||

|

.result-title {

|

||||||

|

font-size: 1.2rem;

|

||||||

|

color: #2196F3;

|

||||||

|

margin-bottom: 0.5rem;

|

||||||

|

}

|

||||||

|

|

||||||

|

.result-description {

|

||||||

|

color: #666;

|

||||||

|

}

|

||||||

|

|

||||||

|

.error-message {

|

||||||

|

color: #dc3545;

|

||||||

|

padding: 1rem;

|

||||||

|

border: 1px solid #dc3545;

|

||||||

|

border-radius: 4px;

|

||||||

|

margin-top: 1rem;

|

||||||

|

}

|

||||||

|

|

||||||

|

.markdown-content {

|

||||||

|

line-height: 1.6;

|

||||||

|

color: var(--md-typeset-color);

|

||||||

|

background: var(--md-default-bg-color);

|

||||||

|

border: 1px solid var(--md-default-fg-color--lightest);

|

||||||

|

border-radius: 12px;

|

||||||

|

padding: 1.5rem;

|

||||||

|

box-shadow: 0 2px 4px rgba(0,0,0,0.05);

|

||||||

|

position: relative;

|

||||||

|

margin-top: 2rem;

|

||||||

|

}

|

||||||

|

|

||||||

|

.markdown-content::before {

|

||||||

|

content: '';

|

||||||

|

position: absolute;

|

||||||

|

top: -8px;

|

||||||

|

left: 24px;

|

||||||

|

width: 16px;

|

||||||

|

height: 16px;

|

||||||

|

background: var(--md-default-bg-color);

|

||||||

|

border-left: 1px solid var(--md-default-fg-color--lightest);

|

||||||

|

border-top: 1px solid var(--md-default-fg-color--lightest);

|

||||||

|

transform: rotate(45deg);

|

||||||

|

}

|

||||||

|

|

||||||

|

.markdown-content > *:first-child {

|

||||||

|

margin-top: 0;

|

||||||

|

padding-top: 0;

|

||||||

|

}

|

||||||

|

|

||||||

|

.markdown-content p {

|

||||||

|

margin-bottom: 1rem;

|

||||||

|

}

|

||||||

|

|

||||||

|

.markdown-content p:last-child {

|

||||||

|

margin-bottom: 0;

|

||||||

|

}

|

||||||

|

|

||||||

|

.markdown-content code {

|

||||||

|

background: var(--md-code-bg-color);

|

||||||

|

color: var(--md-code-fg-color);

|

||||||

|

padding: 0.2em 0.4em;

|

||||||

|

border-radius: 3px;

|

||||||

|

font-size: 0.9em;

|

||||||

|

font-family: ui-monospace, SFMono-Regular, SF Mono, Menlo, Consolas, Liberation Mono, monospace;

|

||||||

|

}

|

||||||

|

|

||||||

|

.markdown-content pre {

|

||||||

|

background: var(--md-code-bg-color);

|

||||||

|

padding: 1rem;

|

||||||

|

border-radius: 6px;

|

||||||

|

overflow-x: auto;

|

||||||

|

margin: 1rem 0;

|

||||||

|

}

|

||||||

|

|

||||||

|

.markdown-content pre code {

|

||||||

|

background: none;

|

||||||

|

padding: 0;

|

||||||

|

font-size: 0.9em;

|

||||||

|

}

|

||||||

|

|

||||||

|

[data-md-color-scheme="slate"] .markdown-content {

|

||||||

|

box-shadow: 0 2px 4px rgba(0,0,0,0.1);

|

||||||

|

}

|

||||||

|

|

||||||

|

</style>

|

||||||

|

|

||||||

|

<script src="https://cdnjs.cloudflare.com/ajax/libs/marked/9.1.6/marked.min.js"></script>

|

||||||

|

|

||||||

|

<script>

|

||||||

|

window.addEventListener('load', function() {

|

||||||

|

function displayResults(responseText) {

|

||||||

|

const resultsContainer = document.getElementById('results');

|

||||||

|

const spinner = document.getElementById('spinner');

|

||||||

|

const searchContainer = document.querySelector('.search-container');

|

||||||

|

|

||||||

|

// Hide spinner

|

||||||

|

spinner.style.display = 'none';

|

||||||

|

|

||||||

|

// Scroll to search bar

|

||||||

|

searchContainer.scrollIntoView({ behavior: 'smooth', block: 'start' });

|

||||||

|

|

||||||

|

try {

|

||||||

|

const results = JSON.parse(responseText);

|

||||||

|

|

||||||

|

marked.setOptions({

|

||||||

|

breaks: true,

|

||||||

|

gfm: true,

|

||||||

|

headerIds: false,

|

||||||

|

sanitize: false

|

||||||

|

});

|

||||||

|

|

||||||

|

const htmlContent = marked.parse(results.message);

|

||||||

|

|

||||||

|

resultsContainer.className = 'markdown-content';

|

||||||

|

resultsContainer.innerHTML = htmlContent;

|

||||||

|

|

||||||

|

// Scroll after content is rendered

|

||||||

|

setTimeout(() => {

|

||||||

|

const searchContainer = document.querySelector('.search-container');

|

||||||

|

const offset = 55; // Offset from top in pixels

|

||||||

|

const elementPosition = searchContainer.getBoundingClientRect().top;

|

||||||

|

const offsetPosition = elementPosition + window.pageYOffset - offset;

|

||||||

|

|

||||||

|

window.scrollTo({

|

||||||

|

top: offsetPosition,

|

||||||

|

behavior: 'smooth'

|

||||||

|

});

|

||||||

|

}, 100);

|

||||||

|

} catch (error) {

|

||||||

|

console.error('Error parsing results:', error);

|

||||||

|

resultsContainer.innerHTML = '<div class="error-message">Error processing results</div>';

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

async function performSearch() {

|

||||||

|

const searchInput = document.getElementById('searchInput');

|

||||||

|

const resultsContainer = document.getElementById('results');

|

||||||

|

const spinner = document.getElementById('spinner');

|

||||||

|

const searchTerm = searchInput.value.trim();

|

||||||

|

|

||||||

|

if (!searchTerm) {

|

||||||

|

resultsContainer.innerHTML = '<div class="error-message">Please enter a search term</div>';

|

||||||

|

return;

|

||||||

|

}

|

||||||

|

|

||||||

|

// Show spinner, clear results

|

||||||

|

spinner.style.display = 'flex';

|

||||||

|

resultsContainer.innerHTML = '';

|

||||||

|

|

||||||

|

try {

|

||||||

|

const data = {

|

||||||

|

"query": searchTerm

|

||||||

|

};

|

||||||

|

|

||||||

|

const options = {

|

||||||

|

method: 'POST',

|

||||||

|

headers: {

|

||||||

|

'accept': 'text/plain',

|

||||||

|

'content-type': 'application/json',

|

||||||

|

},

|

||||||

|

body: JSON.stringify(data)

|

||||||

|

};

|

||||||

|

|

||||||

|

// const API_ENDPOINT = 'http://0.0.0.0:3000/api/v1/docs_help';

|

||||||

|

const API_ENDPOINT = 'https://help.merge.qodo.ai/api/v1/docs_help';

|

||||||

|

|

||||||

|

const response = await fetch(API_ENDPOINT, options);

|

||||||

|

|

||||||

|

if (!response.ok) {

|

||||||

|

throw new Error(`HTTP error! status: ${response.status}`);

|

||||||

|

}

|

||||||

|

|

||||||

|

const responseText = await response.text();

|

||||||

|

displayResults(responseText);

|

||||||

|

} catch (error) {

|

||||||

|

spinner.style.display = 'none';

|

||||||

|

resultsContainer.innerHTML = `

|

||||||

|

<div class="error-message">

|

||||||

|

An error occurred while searching. Please try again later.

|

||||||

|

</div>

|

||||||

|

`;

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

// Add event listeners

|

||||||

|

const searchButton = document.getElementById('searchButton');

|

||||||

|

const searchInput = document.getElementById('searchInput');

|

||||||

|

|

||||||

|

if (searchButton) {

|

||||||

|

searchButton.addEventListener('click', performSearch);

|

||||||

|

}

|

||||||

|

|

||||||

|

if (searchInput) {

|

||||||

|

searchInput.addEventListener('keypress', function(e) {

|

||||||

|

if (e.key === 'Enter') {

|

||||||

|

performSearch();

|

||||||

|

}

|

||||||

|

});

|

||||||

|

}

|

||||||

|

});

|

||||||

|

</script>

|

||||||

|

Before Width: | Height: | Size: 4.2 KiB After Width: | Height: | Size: 15 KiB |

|

Before Width: | Height: | Size: 263 KiB After Width: | Height: | Size: 57 KiB |

|

Before Width: | Height: | Size: 1.2 KiB After Width: | Height: | Size: 24 KiB |

|

Before Width: | Height: | Size: 8.7 KiB After Width: | Height: | Size: 17 KiB |

@ -1,5 +1,5 @@

|

|||||||

## Local and global metadata injection with multi-stage analysis

|

## Local and global metadata injection with multi-stage analysis

|

||||||

(1)

|

1\.

|

||||||

Qodo Merge initially retrieves for each PR the following data:

|

Qodo Merge initially retrieves for each PR the following data:

|

||||||

|

|

||||||

- PR title and branch name

|

- PR title and branch name

|

||||||

@ -11,7 +11,7 @@ Qodo Merge initially retrieves for each PR the following data:

|

|||||||

!!! tip "Tip: Organization-level metadata"

|

!!! tip "Tip: Organization-level metadata"

|

||||||

In addition to the inputs above, Qodo Merge can incorporate supplementary preferences provided by the user, like [`extra_instructions` and `organization best practices`](https://qodo-merge-docs.qodo.ai/tools/improve/#extra-instructions-and-best-practices). This information can be used to enhance the PR analysis.

|

In addition to the inputs above, Qodo Merge can incorporate supplementary preferences provided by the user, like [`extra_instructions` and `organization best practices`](https://qodo-merge-docs.qodo.ai/tools/improve/#extra-instructions-and-best-practices). This information can be used to enhance the PR analysis.

|

||||||

|

|

||||||

(2)

|

2\.

|

||||||

By default, the first command that Qodo Merge executes is [`describe`](https://qodo-merge-docs.qodo.ai/tools/describe/), which generates three types of outputs:

|

By default, the first command that Qodo Merge executes is [`describe`](https://qodo-merge-docs.qodo.ai/tools/describe/), which generates three types of outputs:

|

||||||

|

|

||||||

- PR Type (e.g. bug fix, feature, refactor, etc)

|

- PR Type (e.g. bug fix, feature, refactor, etc)

|

||||||

@ -49,8 +49,8 @@ __old hunk__

|

|||||||

...

|

...

|

||||||

```

|

```

|

||||||

|

|

||||||

(3) The entire PR files that were retrieved are also used to expand and enhance the PR context (see [Dynamic Context](https://qodo-merge-docs.qodo.ai/core-abilities/dynamic_context/)).

|

3\. The entire PR files that were retrieved are also used to expand and enhance the PR context (see [Dynamic Context](https://qodo-merge-docs.qodo.ai/core-abilities/dynamic_context/)).

|

||||||

|

|

||||||

|

|

||||||

(4) All the metadata described above represents several level of cumulative analysis - ranging from hunk level, to file level, to PR level, to organization level.

|

4\. All the metadata described above represents several level of cumulative analysis - ranging from hunk level, to file level, to PR level, to organization level.

|

||||||

This comprehensive approach enables Qodo Merge AI models to generate more precise and contextually relevant suggestions and feedback.

|

This comprehensive approach enables Qodo Merge AI models to generate more precise and contextually relevant suggestions and feedback.

|

||||||

|

|||||||

@ -35,6 +35,7 @@ Qodo Merge offers extensive pull request functionalities across various git prov

|

|||||||

| | ⮑ [Inline file summary](https://qodo-merge-docs.qodo.ai/tools/describe/#inline-file-summary){:target="_blank"} 💎 | ✅ | ✅ | | ✅ |

|

| | ⮑ [Inline file summary](https://qodo-merge-docs.qodo.ai/tools/describe/#inline-file-summary){:target="_blank"} 💎 | ✅ | ✅ | | ✅ |

|

||||||

| | Improve | ✅ | ✅ | ✅ | ✅ |

|

| | Improve | ✅ | ✅ | ✅ | ✅ |

|

||||||

| | ⮑ Extended | ✅ | ✅ | ✅ | ✅ |

|

| | ⮑ Extended | ✅ | ✅ | ✅ | ✅ |

|

||||||

|

| | [Auto-Approve](https://qodo-merge-docs.qodo.ai/tools/improve/#auto-approval) 💎 | ✅ | ✅ | ✅ | |

|

||||||

| | [Custom Prompt](./tools/custom_prompt.md){:target="_blank"} 💎 | ✅ | ✅ | ✅ | ✅ |

|

| | [Custom Prompt](./tools/custom_prompt.md){:target="_blank"} 💎 | ✅ | ✅ | ✅ | ✅ |

|

||||||

| | Reflect and Review | ✅ | ✅ | ✅ | ✅ |

|

| | Reflect and Review | ✅ | ✅ | ✅ | ✅ |

|

||||||

| | Update CHANGELOG.md | ✅ | ✅ | ✅ | ️ |

|

| | Update CHANGELOG.md | ✅ | ✅ | ✅ | ️ |

|

||||||

@ -53,8 +54,7 @@ Qodo Merge offers extensive pull request functionalities across various git prov

|

|||||||

| | Repo language prioritization | ✅ | ✅ | ✅ | ✅ |

|

| | Repo language prioritization | ✅ | ✅ | ✅ | ✅ |

|

||||||

| | Adaptive and token-aware file patch fitting | ✅ | ✅ | ✅ | ✅ |

|

| | Adaptive and token-aware file patch fitting | ✅ | ✅ | ✅ | ✅ |

|

||||||

| | Multiple models support | ✅ | ✅ | ✅ | ✅ |

|

| | Multiple models support | ✅ | ✅ | ✅ | ✅ |

|

||||||

| | Incremental PR review | ✅ | | | |

|

| | [Static code analysis](./core-abilities/static_code_analysis/){:target="_blank"} 💎 | ✅ | ✅ | | |

|

||||||

| | [Static code analysis](./tools/analyze.md/){:target="_blank"} 💎 | ✅ | ✅ | ✅ | ✅ |

|

|

||||||

| | [Multiple configuration options](./usage-guide/configuration_options.md){:target="_blank"} 💎 | ✅ | ✅ | ✅ | ✅ |

|

| | [Multiple configuration options](./usage-guide/configuration_options.md){:target="_blank"} 💎 | ✅ | ✅ | ✅ | ✅ |

|

||||||

|

|

||||||

💎 marks a feature available only in [Qodo Merge](https://www.codium.ai/pricing/){:target="_blank"}, and not in the open-source version.

|

💎 marks a feature available only in [Qodo Merge](https://www.codium.ai/pricing/){:target="_blank"}, and not in the open-source version.

|

||||||

|

|||||||

@ -15,7 +15,7 @@ Qodo Merge for GitHub cloud is available for installation through the [GitHub Ma

|

|||||||

|

|

||||||

### GitHub Enterprise Server

|

### GitHub Enterprise Server

|

||||||

|

|

||||||

To use Qodo Merge application on your private GitHub Enterprise Server, you will need to contact us for starting an [Enterprise](https://www.codium.ai/pricing/) trial.

|

To use Qodo Merge application on your private GitHub Enterprise Server, you will need to [contact](https://www.qodo.ai/contact/#pricing) Qodo for starting an Enterprise trial.

|

||||||

|

|

||||||

### GitHub Open Source Projects

|

### GitHub Open Source Projects

|

||||||

|

|

||||||

@ -34,7 +34,9 @@ Qodo Merge for Bitbucket Cloud is available for installation through the followi

|

|||||||

To use Qodo Merge application on your private Bitbucket Server, you will need to contact us for starting an [Enterprise](https://www.qodo.ai/pricing/) trial.

|

To use Qodo Merge application on your private Bitbucket Server, you will need to contact us for starting an [Enterprise](https://www.qodo.ai/pricing/) trial.

|

||||||

|

|

||||||

|

|

||||||

## Install Qodo Merge for GitLab (Teams & Enterprise)

|

## Install Qodo Merge for GitLab

|

||||||

|

|

||||||

|

### GitLab Cloud

|

||||||

|

|

||||||

Since GitLab platform does not support apps, installing Qodo Merge for GitLab is a bit more involved, and requires the following steps:

|

Since GitLab platform does not support apps, installing Qodo Merge for GitLab is a bit more involved, and requires the following steps:

|

||||||

|

|

||||||

@ -79,3 +81,7 @@ Enable SSL verification: Check the box.

|

|||||||

You’re all set!

|

You’re all set!

|

||||||

|

|

||||||

Open a new merge request or add a MR comment with one of Qodo Merge’s commands such as /review, /describe or /improve.

|

Open a new merge request or add a MR comment with one of Qodo Merge’s commands such as /review, /describe or /improve.

|

||||||

|

|

||||||

|

### GitLab Server

|

||||||

|

|

||||||

|

For a trial period of two weeks on your private GitLab Server, the same [installation steps](#gitlab-cloud) as for GitLab Cloud apply. After the trial period, you will need to [contact](https://www.qodo.ai/contact/#pricing) Qodo for moving to an Enterprise account.

|

||||||

|

|||||||

@ -1,6 +1,6 @@

|

|||||||

### Overview

|

### Overview

|

||||||

|

|

||||||

[Qodo Merge](https://www.codium.ai/pricing/){:target="_blank"} is a hosted version of open-source [PR-Agent](https://github.com/Codium-ai/pr-agent){:target="_blank"}. A complimentary two-week trial is offered, followed by a monthly subscription fee.

|

[Qodo Merge](https://www.codium.ai/pricing/){:target="_blank"} is a paid, hosted version of open-source [PR-Agent](https://github.com/Codium-ai/pr-agent){:target="_blank"}. A complimentary two-week trial is offered, followed by a monthly subscription fee.

|

||||||

Qodo Merge is designed for companies and teams that require additional features and capabilities. It provides the following benefits:

|

Qodo Merge is designed for companies and teams that require additional features and capabilities. It provides the following benefits:

|

||||||

|

|

||||||

1. **Fully managed** - We take care of everything for you - hosting, models, regular updates, and more. Installation is as simple as signing up and adding the Qodo Merge app to your GitHub\GitLab\BitBucket repo.

|

1. **Fully managed** - We take care of everything for you - hosting, models, regular updates, and more. Installation is as simple as signing up and adding the Qodo Merge app to your GitHub\GitLab\BitBucket repo.

|

||||||

|

|||||||

@ -14,6 +14,5 @@ An example result:

|

|||||||

|

|

||||||

{width=750}

|

{width=750}

|

||||||

|

|

||||||

**Notes**

|

!!! note "Language that are currently supported:"

|

||||||

|

Python, Java, C++, JavaScript, TypeScript, C#.

|

||||||

- Language that are currently supported: Python, Java, C++, JavaScript, TypeScript, C#.

|

|

||||||

|

|||||||

@ -38,20 +38,20 @@ where `https://real_link_to_image` is the direct link to the image.

|

|||||||

Note that GitHub has a built-in mechanism of pasting images in comments. However, pasted image does not provide a direct link.

|

Note that GitHub has a built-in mechanism of pasting images in comments. However, pasted image does not provide a direct link.

|

||||||

To get a direct link to an image, we recommend using the following scheme:

|

To get a direct link to an image, we recommend using the following scheme:

|

||||||

|

|

||||||

1) First, post a comment that contains **only** the image:

|

1\. First, post a comment that contains **only** the image:

|

||||||

|

|

||||||

{width=512}

|

{width=512}

|

||||||

|

|

||||||

2) Quote reply to that comment:

|

2\. Quote reply to that comment:

|

||||||

|

|

||||||

{width=512}

|

{width=512}

|

||||||

|

|

||||||

3) In the screen opened, type the question below the image:

|

3\. In the screen opened, type the question below the image:

|

||||||

|

|

||||||

{width=512}

|

{width=512}

|

||||||

{width=512}

|

{width=512}

|

||||||

|

|

||||||

4) Post the comment, and receive the answer:

|

4\. Post the comment, and receive the answer:

|

||||||

|

|

||||||

{width=512}

|

{width=512}

|

||||||

|

|

||||||

|

|||||||

@ -51,8 +51,8 @@ Results obtained with the prompt above:

|

|||||||

|

|

||||||

## Configuration options

|

## Configuration options

|

||||||

|

|

||||||

`prompt`: the prompt for the tool. It should be a multi-line string.

|

- `prompt`: the prompt for the tool. It should be a multi-line string.

|

||||||

|

|

||||||

`num_code_suggestions`: number of code suggestions provided by the 'custom_prompt' tool. Default is 4.

|

- `num_code_suggestions_per_chunk`: number of code suggestions provided by the 'custom_prompt' tool, per chunk. Default is 4.

|

||||||

|

|

||||||

`enable_help_text`: if set to true, the tool will display a help text in the comment. Default is true.

|

- `enable_help_text`: if set to true, the tool will display a help text in the comment. Default is true.

|

||||||

|

|||||||

@ -143,7 +143,7 @@ The marker `pr_agent:type` will be replaced with the PR type, `pr_agent:summary`

|

|||||||

|

|

||||||

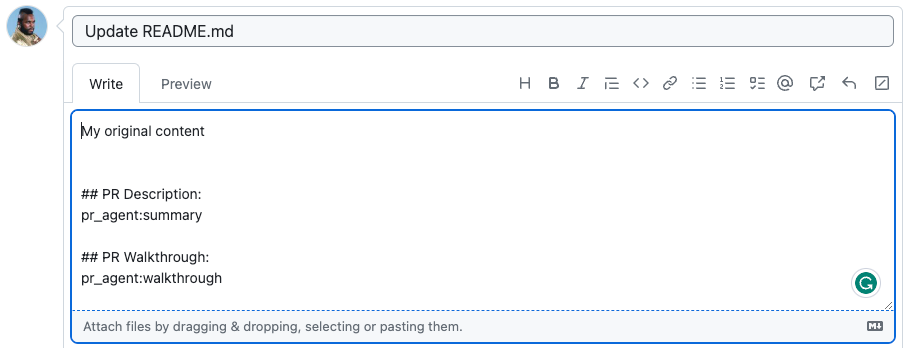

{width=512}

|

{width=512}

|

||||||

|

|

||||||

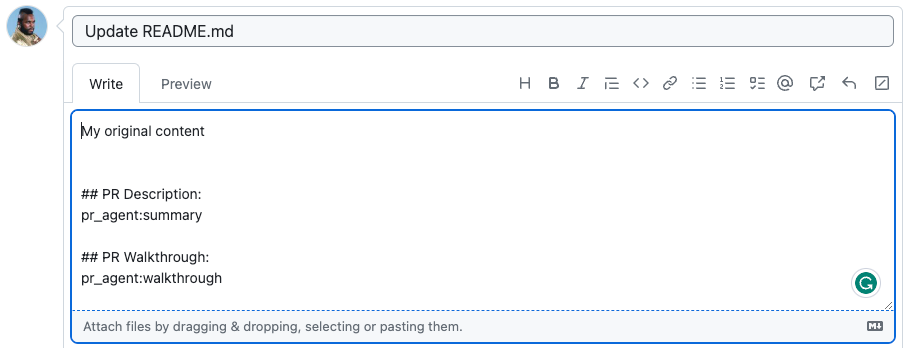

→

|

becomes

|

||||||

|

|

||||||

{width=512}

|

{width=512}

|

||||||

|

|

||||||

|

|||||||

@ -27,7 +27,6 @@ You can state a name of a specific component in the PR to get documentation only

|

|||||||

- `docs_style`: The exact style of the documentation (for python docstring). you can choose between: `google`, `numpy`, `sphinx`, `restructuredtext`, `plain`. Default is `sphinx`.

|

- `docs_style`: The exact style of the documentation (for python docstring). you can choose between: `google`, `numpy`, `sphinx`, `restructuredtext`, `plain`. Default is `sphinx`.

|

||||||

- `extra_instructions`: Optional extra instructions to the tool. For example: "focus on the changes in the file X. Ignore change in ...".

|

- `extra_instructions`: Optional extra instructions to the tool. For example: "focus on the changes in the file X. Ignore change in ...".

|

||||||

|

|

||||||

**Notes**

|

!!! note "Notes"

|

||||||

|

- The following languages are currently supported: Python, Java, C++, JavaScript, TypeScript, C#.

|

||||||

- Language that are currently fully supported: Python, Java, C++, JavaScript, TypeScript, C#.

|

|

||||||

- This tool can also be triggered interactively by using the [`analyze`](./analyze.md) tool.

|

- This tool can also be triggered interactively by using the [`analyze`](./analyze.md) tool.

|

||||||

|

|||||||

@ -10,8 +10,9 @@ It leverages LLM technology to transform PR comments and review suggestions into

|

|||||||

|

|

||||||

### For Reviewers

|

### For Reviewers

|

||||||

|

|

||||||

Reviewers can request code changes by: <br>

|

Reviewers can request code changes by:

|

||||||

1. Selecting the code block to be modified. <br>

|

|

||||||

|

1. Selecting the code block to be modified.

|

||||||

2. Adding a comment with the syntax:

|

2. Adding a comment with the syntax:

|

||||||

```

|

```

|

||||||

/implement <code-change-description>

|

/implement <code-change-description>

|

||||||

@ -46,7 +47,8 @@ You can reference and implement changes from any comment by:

|

|||||||

Note that the implementation will occur within the review discussion thread.

|

Note that the implementation will occur within the review discussion thread.

|

||||||

|

|

||||||

|

|

||||||

**Configuration options** <br>

|

**Configuration options**

|

||||||

- Use `/implement` to implement code change within and based on the review discussion. <br>

|

|

||||||

- Use `/implement <code-change-description>` inside a review discussion to implement specific instructions. <br>

|

- Use `/implement` to implement code change within and based on the review discussion.

|

||||||

- Use `/implement <link-to-review-comment>` to indirectly call the tool from any comment. <br>

|

- Use `/implement <code-change-description>` inside a review discussion to implement specific instructions.

|

||||||

|

- Use `/implement <link-to-review-comment>` to indirectly call the tool from any comment.

|

||||||

|

|||||||

@ -9,8 +9,9 @@ The tool can be triggered automatically every time a new PR is [opened](../usage

|

|||||||

|

|

||||||

{width=512}

|

{width=512}

|

||||||

|

|

||||||

Note that the `Apply this suggestion` checkbox, which interactively converts a suggestion into a commitable code comment, is available only for Qodo Merge💎 users.

|

!!! note "The following features are available only for Qodo Merge 💎 users:"

|

||||||

|

- The `Apply this suggestion` checkbox, which interactively converts a suggestion into a committable code comment

|

||||||

|

- The `More` checkbox to generate additional suggestions

|

||||||

|

|

||||||

## Example usage

|

## Example usage

|

||||||

|

|

||||||

@ -52,9 +53,10 @@ num_code_suggestions_per_chunk = ...

|

|||||||

- The `pr_commands` lists commands that will be executed automatically when a PR is opened.

|

- The `pr_commands` lists commands that will be executed automatically when a PR is opened.

|

||||||

- The `[pr_code_suggestions]` section contains the configurations for the `improve` tool you want to edit (if any)

|

- The `[pr_code_suggestions]` section contains the configurations for the `improve` tool you want to edit (if any)

|

||||||

|

|

||||||

### Assessing Impact 💎

|

### Assessing Impact

|

||||||

|

>`💎 feature`

|

||||||

|

|

||||||

Note that Qodo Merge tracks two types of implementations:

|

Qodo Merge tracks two types of implementations for tracking implemented suggestions:

|

||||||

|

|

||||||

- Direct implementation - when the user directly applies the suggestion by clicking the `Apply` checkbox.

|

- Direct implementation - when the user directly applies the suggestion by clicking the `Apply` checkbox.

|

||||||

- Indirect implementation - when the user implements the suggestion in their IDE environment. In this case, Qodo Merge will utilize, after each commit, a dedicated logic to identify if a suggestion was implemented, and will mark it as implemented.

|

- Indirect implementation - when the user implements the suggestion in their IDE environment. In this case, Qodo Merge will utilize, after each commit, a dedicated logic to identify if a suggestion was implemented, and will mark it as implemented.

|

||||||

@ -67,8 +69,8 @@ In post-process, Qodo Merge counts the number of suggestions that were implement

|

|||||||

|

|

||||||

{width=512}

|

{width=512}

|

||||||

|

|

||||||

## Suggestion tracking 💎

|

## Suggestion tracking

|

||||||

`Platforms supported: GitHub, GitLab`

|

>`💎 feature. Platforms supported: GitHub, GitLab`

|

||||||

|

|

||||||

Qodo Merge employs a novel detection system to automatically [identify](https://qodo-merge-docs.qodo.ai/core-abilities/impact_evaluation/) AI code suggestions that PR authors have accepted and implemented.

|

Qodo Merge employs a novel detection system to automatically [identify](https://qodo-merge-docs.qodo.ai/core-abilities/impact_evaluation/) AI code suggestions that PR authors have accepted and implemented.

|

||||||

|

|

||||||

@ -101,8 +103,6 @@ The `improve` tool can be further customized by providing additional instruction

|

|||||||

|

|

||||||

### Extra instructions

|

### Extra instructions

|

||||||

|

|

||||||

>`Platforms supported: GitHub, GitLab, Bitbucket, Azure DevOps`

|

|

||||||

|

|

||||||

You can use the `extra_instructions` configuration option to give the AI model additional instructions for the `improve` tool.

|

You can use the `extra_instructions` configuration option to give the AI model additional instructions for the `improve` tool.

|

||||||

Be specific, clear, and concise in the instructions. With extra instructions, you are the prompter.

|

Be specific, clear, and concise in the instructions. With extra instructions, you are the prompter.

|

||||||

|

|

||||||

@ -118,9 +118,9 @@ extra_instructions="""\

|

|||||||

```

|

```

|

||||||

Use triple quotes to write multi-line instructions. Use bullet points or numbers to make the instructions more readable.

|

Use triple quotes to write multi-line instructions. Use bullet points or numbers to make the instructions more readable.

|

||||||

|

|

||||||

### Best practices 💎

|

### Best practices

|

||||||

|

|

||||||

>`Platforms supported: GitHub, GitLab, Bitbucket`

|

> `💎 feature. Platforms supported: GitHub, GitLab, Bitbucket`

|

||||||

|

|

||||||

Another option to give additional guidance to the AI model is by creating a `best_practices.md` file, either in your repository's root directory or as a [**wiki page**](https://github.com/Codium-ai/pr-agent/wiki) (we recommend the wiki page, as editing and maintaining it over time is easier).

|

Another option to give additional guidance to the AI model is by creating a `best_practices.md` file, either in your repository's root directory or as a [**wiki page**](https://github.com/Codium-ai/pr-agent/wiki) (we recommend the wiki page, as editing and maintaining it over time is easier).

|

||||||

This page can contain a list of best practices, coding standards, and guidelines that are specific to your repo/organization.

|

This page can contain a list of best practices, coding standards, and guidelines that are specific to your repo/organization.

|

||||||

@ -191,11 +191,11 @@ And the label will be: `{organization_name} best practice`.

|

|||||||

|

|

||||||

{width=512}

|

{width=512}

|

||||||

|

|

||||||

### Auto best practices 💎

|

### Auto best practices

|

||||||

|

|

||||||

>`Platforms supported: GitHub`

|

>`💎 feature. Platforms supported: GitHub.`

|

||||||

|

|

||||||

'Auto best practices' is a novel Qodo Merge capability that:

|

`Auto best practices` is a novel Qodo Merge capability that:

|

||||||

|

|

||||||

1. Identifies recurring patterns from accepted suggestions

|

1. Identifies recurring patterns from accepted suggestions

|

||||||

2. **Automatically** generates [best practices page](https://github.com/qodo-ai/pr-agent/wiki/.pr_agent_auto_best_practices) based on what your team consistently values

|

2. **Automatically** generates [best practices page](https://github.com/qodo-ai/pr-agent/wiki/.pr_agent_auto_best_practices) based on what your team consistently values

|

||||||

@ -228,7 +228,8 @@ max_patterns = 5

|

|||||||

```

|

```

|

||||||

|

|

||||||

|

|

||||||

### Combining `extra instructions` and `best practices` 💎

|

### Combining 'extra instructions' and 'best practices'

|

||||||

|

> `💎 feature`

|

||||||

|

|

||||||

The `extra instructions` configuration is more related to the `improve` tool prompt. It can be used, for example, to avoid specific suggestions ("Don't suggest to add try-except block", "Ignore changes in toml files", ...) or to emphasize specific aspects or formats ("Answer in Japanese", "Give only short suggestions", ...)

|

The `extra instructions` configuration is more related to the `improve` tool prompt. It can be used, for example, to avoid specific suggestions ("Don't suggest to add try-except block", "Ignore changes in toml files", ...) or to emphasize specific aspects or formats ("Answer in Japanese", "Give only short suggestions", ...)

|

||||||

|

|

||||||

@ -267,6 +268,8 @@ dual_publishing_score_threshold = x

|

|||||||

Where x represents the minimum score threshold (>=) for suggestions to be presented as commitable PR comments in addition to the table. Default is -1 (disabled).

|

Where x represents the minimum score threshold (>=) for suggestions to be presented as commitable PR comments in addition to the table. Default is -1 (disabled).

|

||||||

|

|

||||||

### Self-review

|

### Self-review

|

||||||

|

> `💎 feature`

|

||||||

|

|

||||||

If you set in a configuration file:

|

If you set in a configuration file:

|

||||||

```toml

|

```toml

|

||||||

[pr_code_suggestions]

|

[pr_code_suggestions]

|

||||||

@ -310,21 +313,56 @@ code_suggestions_self_review_text = "... (your text here) ..."

|

|||||||

|

|

||||||

To prevent unauthorized approvals, this configuration defaults to false, and cannot be altered through online comments; enabling requires a direct update to the configuration file and a commit to the repository. This ensures that utilizing the feature demands a deliberate documented decision by the repository owner.

|

To prevent unauthorized approvals, this configuration defaults to false, and cannot be altered through online comments; enabling requires a direct update to the configuration file and a commit to the repository. This ensures that utilizing the feature demands a deliberate documented decision by the repository owner.

|

||||||

|

|

||||||

|

### Auto-approval

|

||||||

|

> `💎 feature. Platforms supported: GitHub, GitLab, Bitbucket`

|

||||||

|

|

||||||

|

Under specific conditions, Qodo Merge can auto-approve a PR when a specific comment is invoked, or when the PR meets certain criteria.

|

||||||

|

|

||||||

|

To ensure safety, the auto-approval feature is disabled by default. To enable auto-approval, you need to actively set, in a pre-defined _configuration file_, the following:

|

||||||

|

```toml

|

||||||

|

[config]

|

||||||

|

enable_auto_approval = true

|

||||||

|

```

|

||||||

|

Note that this specific flag cannot be set with a command line argument, only in the configuration file, committed to the repository.

|

||||||

|

This ensures that enabling auto-approval is a deliberate decision by the repository owner.

|

||||||

|

|

||||||

|

**(1) Auto-approval by commenting**

|

||||||

|

|

||||||

|

After enabling, by commenting on a PR:

|

||||||

|

```

|

||||||

|

/review auto_approve

|

||||||

|

```

|

||||||

|

Qodo Merge will automatically approve the PR, and add a comment with the approval.

|

||||||

|

|

||||||

|

**(2) Auto-approval when the PR meets certain criteria**

|

||||||

|

|

||||||

|

There are two criteria that can be set for auto-approval:

|

||||||

|

|

||||||

|

- **Review effort score**

|

||||||

|

```toml

|

||||||

|

[config]

|

||||||

|

auto_approve_for_low_review_effort = X # X is a number between 1 to 5

|

||||||

|

```

|

||||||

|

When the [review effort score](https://www.qodo.ai/images/pr_agent/review3.png) is lower or equal to X, the PR will be auto-approved.

|

||||||

|

|

||||||

|

___

|

||||||

|

- **No code suggestions**

|

||||||

|

```toml

|

||||||

|

[config]

|

||||||

|

auto_approve_for_no_suggestions = true

|

||||||

|

```

|

||||||

|

When no [code suggestion](https://www.qodo.ai/images/pr_agent/code_suggestions_as_comment_closed.png) were found for the PR, the PR will be auto-approved.

|

||||||

|

|

||||||

### How many code suggestions are generated?

|

### How many code suggestions are generated?

|

||||||

Qodo Merge uses a dynamic strategy to generate code suggestions based on the size of the pull request (PR). Here's how it works:

|

Qodo Merge uses a dynamic strategy to generate code suggestions based on the size of the pull request (PR). Here's how it works:

|

||||||

|

|

||||||

1) Chunking large PRs:

|

#### 1. Chunking large PRs

|

||||||

|

|

||||||

- Qodo Merge divides large PRs into 'chunks'.

|

- Qodo Merge divides large PRs into 'chunks'.

|

||||||

- Each chunk contains up to `pr_code_suggestions.max_context_tokens` tokens (default: 14,000).

|

- Each chunk contains up to `pr_code_suggestions.max_context_tokens` tokens (default: 14,000).

|

||||||

|

|

||||||

|

#### 2. Generating suggestions

|

||||||

2) Generating suggestions:

|

|

||||||

|

|

||||||

- For each chunk, Qodo Merge generates up to `pr_code_suggestions.num_code_suggestions_per_chunk` suggestions (default: 4).

|

- For each chunk, Qodo Merge generates up to `pr_code_suggestions.num_code_suggestions_per_chunk` suggestions (default: 4).

|

||||||

|

|

||||||

|

|

||||||

This approach has two main benefits:

|

This approach has two main benefits:

|

||||||

|

|

||||||

- Scalability: The number of suggestions scales with the PR size, rather than being fixed.

|

- Scalability: The number of suggestions scales with the PR size, rather than being fixed.

|

||||||

@ -366,6 +404,10 @@ Note: Chunking is primarily relevant for large PRs. For most PRs (up to 500 line

|

|||||||

<td><b>apply_suggestions_checkbox</b></td>

|

<td><b>apply_suggestions_checkbox</b></td>

|

||||||

<td> Enable the checkbox to create a committable suggestion. Default is true.</td>

|

<td> Enable the checkbox to create a committable suggestion. Default is true.</td>

|

||||||

</tr>

|

</tr>

|

||||||

|

<tr>

|

||||||

|

<td><b>enable_more_suggestions_checkbox</b></td>

|

||||||

|

<td> Enable the checkbox to generate more suggestions. Default is true.</td>

|

||||||

|

</tr>

|

||||||

<tr>

|

<tr>

|

||||||

<td><b>enable_help_text</b></td>

|

<td><b>enable_help_text</b></td>

|

||||||

<td>If set to true, the tool will display a help text in the comment. Default is true.</td>

|

<td>If set to true, the tool will display a help text in the comment. Default is true.</td>

|

||||||

|

|||||||

@ -18,7 +18,7 @@ The tool will generate code suggestions for the selected component (if no compon

|

|||||||

|

|

||||||

{width=768}

|

{width=768}

|

||||||

|

|

||||||

**Notes**

|

!!! note "Notes"

|

||||||

- Language that are currently supported by the tool: Python, Java, C++, JavaScript, TypeScript, C#.

|

- Language that are currently supported by the tool: Python, Java, C++, JavaScript, TypeScript, C#.

|

||||||

- This tool can also be triggered interactively by using the [`analyze`](./analyze.md) tool.

|

- This tool can also be triggered interactively by using the [`analyze`](./analyze.md) tool.

|

||||||

|

|

||||||

|

|||||||

@ -114,16 +114,6 @@ You can enable\disable the `review` tool to add specific labels to the PR:

|

|||||||

</tr>

|

</tr>

|

||||||

</table>

|

</table>

|

||||||

|

|

||||||

!!! example "Auto-approval"

|

|

||||||

|

|

||||||

If enabled, the `review` tool can approve a PR when a specific comment, `/review auto_approve`, is invoked.

|

|

||||||

|

|

||||||

<table>

|

|

||||||

<tr>

|

|

||||||

<td><b>enable_auto_approval</b></td>

|

|

||||||

<td>If set to true, the tool will approve the PR when invoked with the 'auto_approve' command. Default is false. This flag can be changed only from a configuration file.</td>

|

|

||||||

</tr>

|

|

||||||

</table>

|

|

||||||

|

|

||||||

## Usage Tips

|

## Usage Tips

|

||||||

|

|

||||||

@ -175,23 +165,6 @@ If enabled, the `review` tool can approve a PR when a specific comment, `/review

|

|||||||

Use triple quotes to write multi-line instructions. Use bullet points to make the instructions more readable.

|

Use triple quotes to write multi-line instructions. Use bullet points to make the instructions more readable.

|

||||||

|

|

||||||

|

|

||||||

!!! tip "Auto-approval"

|

|

||||||

|

|

||||||