Mirror of https://github.com/qodo-ai/pr-agent.git (synced 2025-07-21 04:50:39 +08:00)

@@ -1,7 +1,7 @@


# Overview - Impact Evaluation 💎


Demonstrating the return on investment (ROI) of AI-powered initiatives is crucial for modern organizations.


To address this need, PR-Agent has developed an AI impact measurement tools and metrics, providing advanced analytics to help businesses quantify the tangible benefits of AI adoption in their development processes.


To address this need, PR-Agent has developed an AI impact measurement tools and metrics, providing advanced analytics to help businesses quantify the tangible benefits of AI adoption in their PR review process.


## Auto Impact Validator - Real-Time Tracking of Implemented PR-Agent Suggestions


@@ -27,9 +27,10 @@ Here are key metrics that the dashboard tracks:


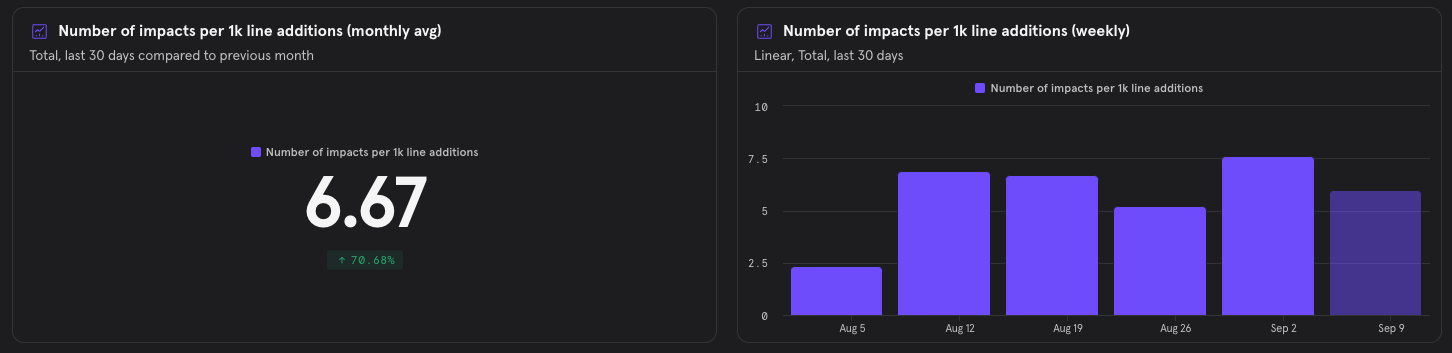

#### PR-Agent Impacts per 1K Lines


{width=512}


> Explanation: for every 1K lines of code (additions/edits), PR-Agent had in average ~X suggestions implemented.


> Explanation: for every 1K lines of code (additions/edits), PR-Agent had on average ~X suggestions implemented.


**Why This Metric Matters:**


1. **Standardized and Comparable Measurement:** By measuring impacts per 1K lines of code additions, you create a standardized metric that can be compared across different projects, teams, customers, and time periods. This standardization is crucial for meaningful analysis, benchmarking, and identifying where PR-Agent is most effective.


2. **Accounts for PR Variability and Incentivizes Quality:** This metric addresses the fact that "Not all PRs are created equal." By normalizing against lines of code rather than PR count, you account for the variability in PR sizes and focus on the quality and impact of suggestions rather than just the number of PRs affected.


3. **Quantifies Value and ROI:** The metric directly correlates with the value PR-Agent is providing, showing how frequently it offers improvements relative to the amount of new code being written. This provides a clear, quantifiable way to demonstrate PR-Agent's return on investment to stakeholders.
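
As a rough illustration of the normalization described above, the metric can be sketched as implemented suggestions divided by changed lines, scaled to 1,000 lines. This is a minimal sketch and not the dashboard's actual implementation; the function name `impacts_per_1k_lines` and the sample PR data are hypothetical.

```python
def impacts_per_1k_lines(implemented_suggestions: int, changed_lines: int) -> float:
    """Return implemented suggestions normalized per 1,000 added/edited lines."""
    if changed_lines <= 0:
        raise ValueError("changed_lines must be positive")
    return implemented_suggestions * 1000 / changed_lines


# Hypothetical sample data: (implemented suggestions, lines added/edited) per PR.
prs = [(3, 1200), (0, 150), (5, 2650)]

# Aggregate over lines rather than averaging per PR, so large and small PRs
# are weighted by the amount of code they actually changed.
total_suggestions = sum(s for s, _ in prs)
total_lines = sum(n for _, n in prs)

rate = impacts_per_1k_lines(total_suggestions, total_lines)
print(f"{rate:.2f} implemented suggestions per 1K lines")
```

Summing across PRs before dividing, instead of averaging a per-PR rate, is one way to reflect the point that not all PRs are the same size.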


@@ -2,7 +2,6 @@


PR-Agent utilizes a variety of core abilities to provide a comprehensive and efficient code review experience. These abilities include:


- [Local and global metadata](https://pr-agent-docs.codium.ai/core-abilities/metadata/)


- [Line localization](https://pr-agent-docs.codium.ai/core-abilities/line_localization/)


- [Dynamic context](https://pr-agent-docs.codium.ai/core-abilities/dynamic_context/)


- [Self-reflection](https://pr-agent-docs.codium.ai/core-abilities/self_reflection/)


- [Impact evaluation](https://pr-agent-docs.codium.ai/core-abilities/impact_evaluation/)


@@ -1,2 +0,0 @@


## Overview - Line localization


TBD


@@ -208,13 +208,12 @@ Using a combination of both can help the AI model to provide relevant and tailor


## A note on code suggestions quality


- While the current AI for code is getting better and better (GPT-4), it's not flawless. Not all the suggestions will be perfect, and a user should not accept all of them automatically. Critical reading and judgment are required.


- AI models for code are getting better and better (Sonnet-3.5 and GPT-4), but they are not flawless. Not all the suggestions will be perfect, and a user should not accept all of them automatically. Critical reading and judgment are required.


- While mistakes of the AI are rare but can happen, a real benefit from the suggestions of the `improve` (and [`review`](https://pr-agent-docs.codium.ai/tools/review/)) tool is to catch, with high probability, **mistakes or bugs done by the PR author**, when they happen. So, it's a good practice to spend the needed ~30-60 seconds to review the suggestions, even if not all of them are always relevant.


- The hierarchical structure of the suggestions is designed to help the user to _quickly_ understand them, and to decide which ones are relevant and which are not:


- Only if the `Category` header is relevant, the user should move to the summarized suggestion description


- Only if the summarized suggestion description is relevant, the user should click on the collapsible, to read the full suggestion description with a code preview example.


In addition, we recommend to use the `extra_instructions` field to guide the model to suggestions that are more relevant to the specific needs of the project.


<br>


Consider also trying the [Custom Prompt Tool](./custom_prompt.md) 💎, that will **only** propose code suggestions that follow specific guidelines defined by user.


- In addition, we recommend to use the [`extra_instructions`](https://pr-agent-docs.codium.ai/tools/improve/#extra-instructions-and-best-practices) field to guide the model to suggestions that are more relevant to the specific needs of the project.


- The interactive [PR chat](https://pr-agent-docs.codium.ai/chrome-extension/) also provides an easy way to get more tailored suggestions and feedback from the AI model.


@@ -44,7 +44,6 @@ nav:


- Core Abilities:


- 'core-abilities/index.md'


- Local and global metadata: 'core-abilities/metadata.md'


- Line localization: 'core-abilities/line_localization.md'


- Dynamic context: 'core-abilities/dynamic_context.md'


- Self-reflection: 'core-abilities/self_reflection.md'


- Impact evaluation: 'core-abilities/impact_evaluation.md'
