diff --git a/docs/docs/core-abilities/incremental_update.md b/docs/docs/core-abilities/incremental_update.md

new file mode 100644

index 00000000..3813fb73

--- /dev/null

+++ b/docs/docs/core-abilities/incremental_update.md

@@ -0,0 +1,33 @@

+# Incremental Update 💎

+

+`Supported Git Platforms: GitHub`

+

+## Overview

+The Incremental Update feature helps users focus on feedback for their newest changes, making large PRs more manageable.

+

+### How it works

+

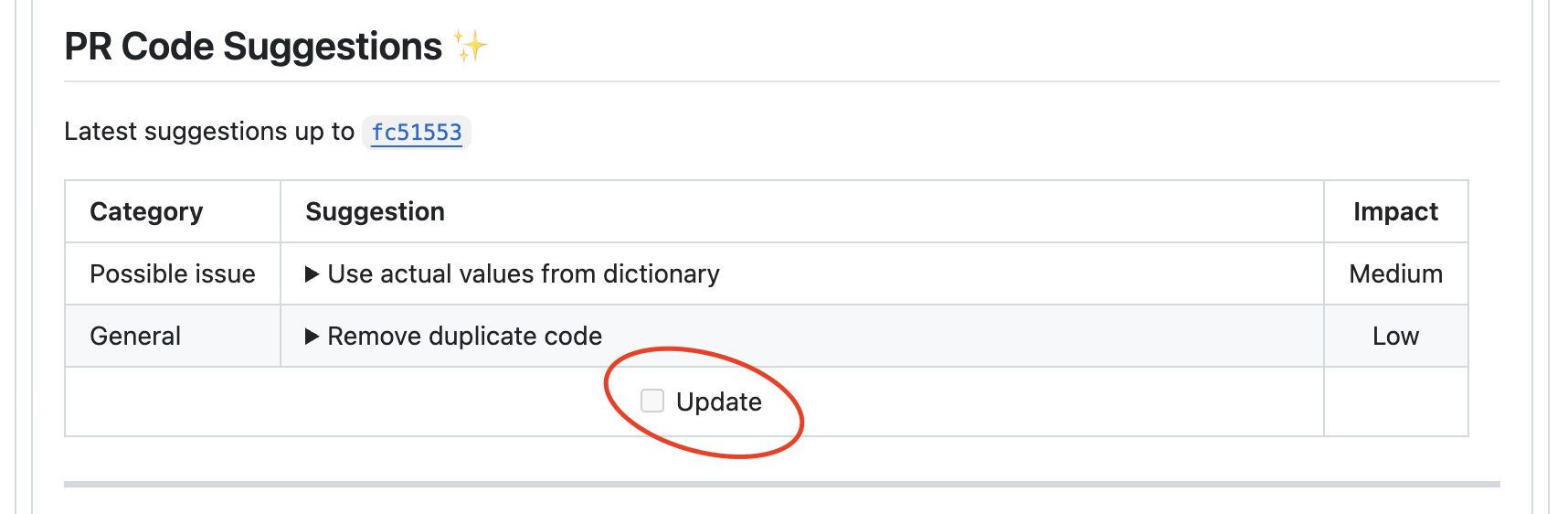

+=== "Update Option on Subsequent Commits"

+ {width=512}

+

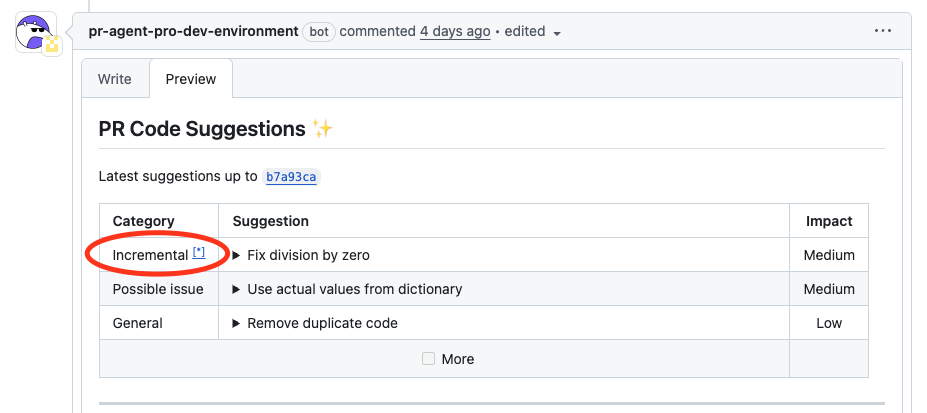

+=== "Generation of Incremental Update"

+ {width=512}

+

+___

+

+Whenever new commits are pushed following a recent code suggestions report for this PR, an Update button appears (as seen above).

+

+Once the user clicks on the button:

+

+- The `improve` tool identifies the new changes (the "delta")

+- Provides suggestions on these recent changes

+- Combines these suggestions with the overall PR feedback, prioritizing delta-related comments

+- Marks delta-related comments with a textual indication followed by an asterisk (*) with a link to this page, so they can easily be identified

+

+### Benefits for Developers

+

+- Focus on what matters: See feedback on newest code first

+- Clearer organization: Comments on recent changes are clearly marked

+- Better workflow: Address feedback more systematically, starting with recent changes

+

+

diff --git a/docs/docs/core-abilities/index.md b/docs/docs/core-abilities/index.md

index 9af26e2e..b97260ee 100644

--- a/docs/docs/core-abilities/index.md

+++ b/docs/docs/core-abilities/index.md

@@ -8,6 +8,7 @@ Qodo Merge utilizes a variety of core abilities to provide a comprehensive and e

- [Dynamic context](https://qodo-merge-docs.qodo.ai/core-abilities/dynamic_context/)

- [Fetching ticket context](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/)

- [Impact evaluation](https://qodo-merge-docs.qodo.ai/core-abilities/impact_evaluation/)

+- [Incremental Update](https://qodo-merge-docs.qodo.ai/core-abilities/incremental_update/)

- [Interactivity](https://qodo-merge-docs.qodo.ai/core-abilities/interactivity/)

- [Local and global metadata](https://qodo-merge-docs.qodo.ai/core-abilities/metadata/)

- [RAG context enrichment](https://qodo-merge-docs.qodo.ai/core-abilities/rag_context_enrichment/)

diff --git a/docs/docs/index.md b/docs/docs/index.md

index 8dd7c955..79a06e5d 100644

--- a/docs/docs/index.md

+++ b/docs/docs/index.md

@@ -67,6 +67,7 @@ PR-Agent and Qodo Merge offers extensive pull request functionalities across var

| | [Impact Evaluation](https://qodo-merge-docs.qodo.ai/core-abilities/impact_evaluation/) 💎 | ✅ | ✅ | | |

| | [Code Validation 💎](https://qodo-merge-docs.qodo.ai/core-abilities/code_validation/) | ✅ | ✅ | ✅ | ✅ |

| | [Auto Best Practices 💎](https://qodo-merge-docs.qodo.ai/core-abilities/auto_best_practices/) | ✅ | | | |

+| | [Incremental Update 💎](https://qodo-merge-docs.qodo.ai/core-abilities/incremental_update/) | ✅ | | | |

!!! note "💎 means Qodo Merge only"

All along the documentation, 💎 marks a feature available only in [Qodo Merge](https://www.codium.ai/pricing/){:target="_blank"}, and not in the open-source version.

diff --git a/docs/docs/installation/github.md b/docs/docs/installation/github.md

index 018499ee..3eeace4f 100644

--- a/docs/docs/installation/github.md

+++ b/docs/docs/installation/github.md

@@ -193,9 +193,8 @@ For example: `GITHUB.WEBHOOK_SECRET` --> `GITHUB__WEBHOOK_SECRET`

3. Push image to ECR

```shell

-

- docker tag codiumai/pr-agent:serverless .dkr.ecr..amazonaws.com/codiumai/pr-agent:serverless

- docker push .dkr.ecr..amazonaws.com/codiumai/pr-agent:serverless

+ docker tag codiumai/pr-agent:serverless .dkr.ecr..amazonaws.com/codiumai/pr-agent:serverless

+ docker push .dkr.ecr..amazonaws.com/codiumai/pr-agent:serverless

```

4. Create a lambda function that uses the uploaded image. Set the lambda timeout to be at least 3m.

diff --git a/docs/docs/recent_updates/index.md b/docs/docs/recent_updates/index.md

index dc7ea8d1..158fb5ca 100644

--- a/docs/docs/recent_updates/index.md

+++ b/docs/docs/recent_updates/index.md

@@ -7,11 +7,11 @@ This page summarizes recent enhancements to Qodo Merge (last three months).

It also outlines our development roadmap for the upcoming three months. Please note that the roadmap is subject to change, and features may be adjusted, added, or reprioritized.

=== "Recent Updates"

+ - **Qodo Merge Pull Request Benchmark** - evaluating the performance of LLMs in analyzing pull request code ([Learn more](https://qodo-merge-docs.qodo.ai/pr_benchmark/))

- **Chat on Suggestions**: Users can now chat with Qodo Merge code suggestions ([Learn more](https://qodo-merge-docs.qodo.ai/tools/improve/#chat-on-code-suggestions))

- **Scan Repo Discussions Tool**: A new tool that analyzes past code discussions to generate a `best_practices.md` file, distilling key insights and recommendations. ([Learn more](https://qodo-merge-docs.qodo.ai/tools/scan_repo_discussions/))

- **Enhanced Models**: Qodo Merge now defaults to a combination of top models (Claude Sonnet 3.7 and Gemini 2.5 Pro) and incorporates dedicated code validation logic for improved results. ([Details 1](https://qodo-merge-docs.qodo.ai/usage-guide/qodo_merge_models/), [Details 2](https://qodo-merge-docs.qodo.ai/core-abilities/code_validation/))

- **Chrome Extension Update**: Qodo Merge Chrome extension now supports single-tenant users. ([Learn more](https://qodo-merge-docs.qodo.ai/chrome-extension/options/#configuration-options/))

- - **Help Docs Tool**: The help_docs tool can answer free-text questions based on any git documentation folder. ([Learn more](https://qodo-merge-docs.qodo.ai/tools/help_docs/))

=== "Future Roadmap"

- **Smart Update**: Upon PR updates, Qodo Merge will offer tailored code suggestions, addressing both the entire PR and the specific incremental changes since the last feedback.

diff --git a/docs/docs/tools/documentation.md b/docs/docs/tools/documentation.md

index 247d5d6d..47222f51 100644

--- a/docs/docs/tools/documentation.md

+++ b/docs/docs/tools/documentation.md

@@ -26,6 +26,29 @@ You can state a name of a specific component in the PR to get documentation only

/add_docs component_name

```

+## Manual triggering

+

+Comment `/add_docs` on a PR to invoke it manually.

+

+## Automatic triggering

+

+To automatically run the `add_docs` tool when a pull request is opened, define in a [configuration file](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/):

+

+

+```toml

+[github_app]

+pr_commands = [

+ "/add_docs",

+ ...

+]

+```

+

+The `pr_commands` list defines commands that run automatically when a PR is opened.

+Since this is under the `[github_app]` section, it only applies when using the Qodo Merge GitHub App in GitHub environments.

+

+!!! note

+    By default, `/add_docs` is not triggered automatically. You must explicitly include it in `pr_commands` to enable this behavior.

+

## Configuration options

- `docs_style`: The exact style of the documentation (for python docstring). you can choose between: `google`, `numpy`, `sphinx`, `restructuredtext`, `plain`. Default is `sphinx`.

diff --git a/docs/docs/tools/review.md b/docs/docs/tools/review.md

index e5cc6b93..8d4a5543 100644

--- a/docs/docs/tools/review.md

+++ b/docs/docs/tools/review.md

@@ -70,6 +70,10 @@ extra_instructions = "..."

enable_help_text

If set to true, the tool will display a help text in the comment. Default is true.

+

+ num_max_findings

+ Maximum number of findings to return. Default is 3.

+

!!! example "Enable\\disable specific sub-sections"

@@ -112,7 +116,7 @@ extra_instructions = "..."

enable_review_labels_effort

- If set to true, the tool will publish a 'Review effort [1-5]: x' label. Default is true.

+ If set to true, the tool will publish a 'Review effort x/5' label (1–5 scale). Default is true.

@@ -141,7 +145,7 @@ extra_instructions = "..."

The `review` tool can auto-generate two specific types of labels for a PR:

- a `possible security issue` label that detects if a possible [security issue](https://github.com/Codium-ai/pr-agent/blob/tr/user_description/pr_agent/settings/pr_reviewer_prompts.toml#L136) exists in the PR code (`enable_review_labels_security` flag)

- - a `Review effort [1-5]: x` label, where x is the estimated effort to review the PR (`enable_review_labels_effort` flag)

+ - a `Review effort x/5` label, where x is the estimated effort to review the PR on a 1–5 scale (`enable_review_labels_effort` flag)

Both modes are useful, and we recommended to enable them.

diff --git a/docs/docs/usage-guide/additional_configurations.md b/docs/docs/usage-guide/additional_configurations.md

index c345e0c6..9f9202f6 100644

--- a/docs/docs/usage-guide/additional_configurations.md

+++ b/docs/docs/usage-guide/additional_configurations.md

@@ -50,7 +50,7 @@ glob = ['*.py']

And to ignore Python files in all PRs using `regex` pattern, set in a configuration file:

```

-[regex]

+[ignore]

regex = ['.*\.py$']

```

@@ -164,6 +164,7 @@ Qodo Merge allows you to automatically ignore certain PRs based on various crite

- PRs with specific titles (using regex matching)

- PRs between specific branches (using regex matching)

+- PRs from specific repositories (using regex matching)

- PRs not from specific folders

- PRs containing specific labels

- PRs opened by specific users

@@ -172,7 +173,7 @@ Qodo Merge allows you to automatically ignore certain PRs based on various crite

To ignore PRs with a specific title such as "[Bump]: ...", you can add the following to your `configuration.toml` file:

-```

+```toml

[config]

ignore_pr_title = ["\\[Bump\\]"]

```

@@ -183,7 +184,7 @@ Where the `ignore_pr_title` is a list of regex patterns to match the PR title yo

To ignore PRs from specific source or target branches, you can add the following to your `configuration.toml` file:

-```

+```toml

[config]

ignore_pr_source_branches = ['develop', 'main', 'master', 'stage']

ignore_pr_target_branches = ["qa"]

@@ -192,6 +193,18 @@ ignore_pr_target_branches = ["qa"]

Where the `ignore_pr_source_branches` and `ignore_pr_target_branches` are lists of regex patterns to match the source and target branches you want to ignore.

They are not mutually exclusive, you can use them together or separately.

+### Ignoring PRs from specific repositories

+

+To ignore PRs from specific repositories, you can add the following to your `configuration.toml` file:

+

+```toml

+[config]

+ignore_repositories = ["my-org/my-repo1", "my-org/my-repo2"]

+```

+

+Where the `ignore_repositories` is a list of regex patterns to match the repositories you want to ignore. This is useful when you have multiple repositories and want to exclude certain ones from analysis.

+

+

### Ignoring PRs not from specific folders

To allow only specific folders (often needed in large monorepos), set:

diff --git a/docs/docs/usage-guide/changing_a_model.md b/docs/docs/usage-guide/changing_a_model.md

index c0b2bc28..bfeb7c3a 100644

--- a/docs/docs/usage-guide/changing_a_model.md

+++ b/docs/docs/usage-guide/changing_a_model.md

@@ -16,6 +16,23 @@ You can give parameters via a configuration file, or from environment variables.

See [litellm documentation](https://litellm.vercel.app/docs/proxy/quick_start#supported-llms) for the environment variables needed per model, as they may vary and change over time. Our documentation per-model may not always be up-to-date with the latest changes.

Failing to set the needed keys of a specific model will usually result in litellm not identifying the model type, and failing to utilize it.

+### OpenAI-like API

+To use an OpenAI-like API, set the following in your `.secrets.toml` file:

+

+```toml

+[openai]

+api_base = "https://api.openai.com/v1"

+key = "sk-..."

+```

+

+or use the environment variables (make sure to use double underscores `__`):

+

+```bash

+OPENAI__API_BASE=https://api.openai.com/v1

+OPENAI__KEY=sk-...

+```

+

+

### Azure

To use Azure, set in your `.secrets.toml` (working from CLI), or in the GitHub `Settings > Secrets and variables` (working from GitHub App or GitHub Action):
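
Review note: the double-underscore convention in the hunk above (`OPENAI__API_BASE`, `OPENAI__KEY`) maps an environment variable onto a `section.key` setting, in the same way the installation docs describe `GITHUB.WEBHOOK_SECRET` --> `GITHUB__WEBHOOK_SECRET`. The sketch below only illustrates that naming rule. `env_to_nested` is a hypothetical helper written for this note, not the settings loader's actual implementation.

```python
def env_to_nested(env: dict) -> dict:
    """Illustrative only: turn SECTION__KEY names into {section: {key: value}}."""
    settings: dict = {}
    for name, value in env.items():
        if "__" not in name:
            # Variables without a double underscore carry no section, so skip them.
            continue
        # Split on the first double underscore; single underscores stay in the key.
        section, key = name.lower().split("__", 1)
        settings.setdefault(section, {})[key] = value
    return settings


example_env = {
    "OPENAI__API_BASE": "https://api.openai.com/v1",
    "OPENAI__KEY": "sk-...",
    "HOME": "/home/user",
}
print(env_to_nested(example_env))
```

Under this rule the environment variable `OPENAI__KEY` corresponds to the `key` entry of the `[openai]` section, so the TOML and environment examples should name the same setting.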

diff --git a/docs/docs/usage-guide/configuration_options.md b/docs/docs/usage-guide/configuration_options.md

index fba4bb48..f3b91031 100644

--- a/docs/docs/usage-guide/configuration_options.md

+++ b/docs/docs/usage-guide/configuration_options.md

@@ -1,20 +1,22 @@

-The different tools and sub-tools used by Qodo Merge are adjustable via the **[configuration file](https://github.com/Codium-ai/pr-agent/blob/main/pr_agent/settings/configuration.toml)**.

+The different tools and sub-tools used by Qodo Merge are adjustable via a Git configuration file.

+There are three main ways to set persistent configurations:

-In addition to general configuration options, each tool has its own configurations. For example, the `review` tool will use parameters from the [pr_reviewer](https://github.com/Codium-ai/pr-agent/blob/main/pr_agent/settings/configuration.toml#L16) section in the configuration file.

-See the [Tools Guide](https://qodo-merge-docs.qodo.ai/tools/) for a detailed description of the different tools and their configurations.

-

-There are three ways to set persistent configurations:

-

-1. Wiki configuration page 💎

-2. Local configuration file

-3. Global configuration file 💎

+1. [Wiki](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/#wiki-configuration-file) configuration page 💎

+2. [Local](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/#local-configuration-file) configuration file

+3. [Global](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/#global-configuration-file) configuration file 💎

In terms of precedence, wiki configurations will override local configurations, and local configurations will override global configurations.

-!!! tip "Tip1: edit only what you need"

+

+For a list of all possible configurations, see the [configuration options](https://github.com/qodo-ai/pr-agent/blob/main/pr_agent/settings/configuration.toml) page.

+In addition to general configuration options, each tool has its own configurations. For example, the `review` tool will use parameters from the [pr_reviewer](https://github.com/Codium-ai/pr-agent/blob/main/pr_agent/settings/configuration.toml#L16) section in the configuration file.

+

+!!! tip "Tip1: Edit only what you need"

Your configuration file should be minimal, and edit only the relevant values. Don't copy the entire configuration options, since it can lead to legacy problems when something changes.

-!!! tip "Tip2: show relevant configurations"

- If you set `config.output_relevant_configurations=true`, each tool will also output in a collapsible section its relevant configurations. This can be useful for debugging, or getting to know the configurations better.

+!!! tip "Tip2: Show relevant configurations"

+ If you set `config.output_relevant_configurations` to `true`, each tool will also output in a collapsible section its relevant configurations. This can be useful for debugging, or getting to know the configurations better.

+

+

## Wiki configuration file 💎

diff --git a/docs/mkdocs.yml b/docs/mkdocs.yml

index 8525fdee..a25c081b 100644

--- a/docs/mkdocs.yml

+++ b/docs/mkdocs.yml

@@ -49,6 +49,7 @@ nav:

- Dynamic context: 'core-abilities/dynamic_context.md'

- Fetching ticket context: 'core-abilities/fetching_ticket_context.md'

- Impact evaluation: 'core-abilities/impact_evaluation.md'

+ - Incremental Update: 'core-abilities/incremental_update.md'

- Interactivity: 'core-abilities/interactivity.md'

- Local and global metadata: 'core-abilities/metadata.md'

- RAG context enrichment: 'core-abilities/rag_context_enrichment.md'

diff --git a/pr_agent/algo/__init__.py b/pr_agent/algo/__init__.py

index 8d805baf..e38bd713 100644

--- a/pr_agent/algo/__init__.py

+++ b/pr_agent/algo/__init__.py

@@ -58,6 +58,7 @@ MAX_TOKENS = {

'vertex_ai/claude-3-7-sonnet@20250219': 200000,

'vertex_ai/gemini-1.5-pro': 1048576,

'vertex_ai/gemini-2.5-pro-preview-03-25': 1048576,

+ 'vertex_ai/gemini-2.5-pro-preview-05-06': 1048576,

'vertex_ai/gemini-1.5-flash': 1048576,

'vertex_ai/gemini-2.0-flash': 1048576,

'vertex_ai/gemini-2.5-flash-preview-04-17': 1048576,

@@ -66,6 +67,7 @@ MAX_TOKENS = {

'gemini/gemini-1.5-flash': 1048576,

'gemini/gemini-2.0-flash': 1048576,

'gemini/gemini-2.5-pro-preview-03-25': 1048576,

+ 'gemini/gemini-2.5-pro-preview-05-06': 1048576,

'codechat-bison': 6144,

'codechat-bison-32k': 32000,

'anthropic.claude-instant-v1': 100000,

diff --git a/pr_agent/algo/ai_handlers/litellm_ai_handler.py b/pr_agent/algo/ai_handlers/litellm_ai_handler.py

index ba4630f4..8d727b8b 100644

--- a/pr_agent/algo/ai_handlers/litellm_ai_handler.py

+++ b/pr_agent/algo/ai_handlers/litellm_ai_handler.py

@@ -59,6 +59,7 @@ class LiteLLMAIHandler(BaseAiHandler):

litellm.api_version = get_settings().openai.api_version

if get_settings().get("OPENAI.API_BASE", None):

litellm.api_base = get_settings().openai.api_base

+ self.api_base = get_settings().openai.api_base

if get_settings().get("ANTHROPIC.KEY", None):

litellm.anthropic_key = get_settings().anthropic.key

if get_settings().get("COHERE.KEY", None):

@@ -370,12 +371,12 @@ class LiteLLMAIHandler(BaseAiHandler):

get_logger().info(f"\nUser prompt:\n{user}")

response = await acompletion(**kwargs)

- except (openai.APIError, openai.APITimeoutError) as e:

- get_logger().warning(f"Error during LLM inference: {e}")

- raise

except (openai.RateLimitError) as e:

get_logger().error(f"Rate limit error during LLM inference: {e}")

raise

+ except (openai.APIError, openai.APITimeoutError) as e:

+ get_logger().warning(f"Error during LLM inference: {e}")

+ raise

except (Exception) as e:

get_logger().warning(f"Unknown error during LLM inference: {e}")

raise openai.APIError from e
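
Review note: the reordering in this hunk matters because Python tries `except` clauses top to bottom and takes the first match. In the `openai` package, `RateLimitError` derives from `APIError`, so with the old order the rate-limit branch could never run. The sketch below reproduces the effect with stand-in exception classes. `APIError`, `RateLimitError`, and both `classify_*` functions are hypothetical names defined here only for illustration.

```python
class APIError(Exception):
    """Stand-in for a broad API error type."""


class RateLimitError(APIError):
    """Stand-in for a more specific error that subclasses the broad one."""


def classify_old_order(exc: Exception) -> str:
    # Broad handler first: it also catches the subclass.
    try:
        raise exc
    except APIError:
        return "api_error"
    except Exception:
        return "unknown"


def classify_new_order(exc: Exception) -> str:
    # Specific handler first, then the broad one, then the catch-all.
    try:
        raise exc
    except RateLimitError:
        return "rate_limit"
    except APIError:
        return "api_error"
    except Exception:
        return "unknown"


print(classify_old_order(RateLimitError()))  # the broad branch wins
print(classify_new_order(RateLimitError()))  # the specific branch wins
```

With the new order, rate-limit failures are logged at `error` level through their own branch instead of being reported as a generic warning.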

diff --git a/pr_agent/algo/utils.py b/pr_agent/algo/utils.py

index 4a341386..780c7953 100644

--- a/pr_agent/algo/utils.py

+++ b/pr_agent/algo/utils.py

@@ -731,8 +731,9 @@ def try_fix_yaml(response_text: str,

response_text_original="") -> dict:

response_text_lines = response_text.split('\n')

- keys_yaml = ['relevant line:', 'suggestion content:', 'relevant file:', 'existing code:', 'improved code:']

+ keys_yaml = ['relevant line:', 'suggestion content:', 'relevant file:', 'existing code:', 'improved code:', 'label:']

keys_yaml = keys_yaml + keys_fix_yaml

+

# first fallback - try to convert 'relevant line: ...' to relevant line: |-\n ...'

response_text_lines_copy = response_text_lines.copy()

for i in range(0, len(response_text_lines_copy)):

@@ -747,8 +748,29 @@ def try_fix_yaml(response_text: str,

except:

pass

- # second fallback - try to extract only range from first ```yaml to ````

- snippet_pattern = r'```(yaml)?[\s\S]*?```'

+ # 1.5 fallback - try to convert '|' to '|2'. This handles cases where the indentation decreases inside a code block

+ response_text_copy = copy.deepcopy(response_text)

+ response_text_copy = response_text_copy.replace('|\n', '|2\n')

+ try:

+ data = yaml.safe_load(response_text_copy)

+ get_logger().info(f"Successfully parsed AI prediction after replacing | with |2")

+ return data

+ except:

+ # if it fails, we can try to add spaces to the lines that are not indented properly, and contain '}'.

+ response_text_lines_copy = response_text_copy.split('\n')

+ for i in range(0, len(response_text_lines_copy)):

+ initial_space = len(response_text_lines_copy[i]) - len(response_text_lines_copy[i].lstrip())

+ if initial_space == 2 and '|2' not in response_text_lines_copy[i] and '}' in response_text_lines_copy[i]:

+ response_text_lines_copy[i] = ' ' + response_text_lines_copy[i].lstrip()

+ try:

+ data = yaml.safe_load('\n'.join(response_text_lines_copy))

+ get_logger().info(f"Successfully parsed AI prediction after replacing | with |2 and adding spaces")

+ return data

+ except:

+ pass

+

+ # second fallback - try to extract only range from first ```yaml to the last ```

+ snippet_pattern = r'```yaml([\s\S]*?)```(?=\s*$|")'

snippet = re.search(snippet_pattern, '\n'.join(response_text_lines_copy))

if not snippet:

snippet = re.search(snippet_pattern, response_text_original) # before we removed the "```"

@@ -803,16 +825,47 @@ def try_fix_yaml(response_text: str,

except:

pass

- # sixth fallback - try to remove last lines

- for i in range(1, len(response_text_lines)):

- response_text_lines_tmp = '\n'.join(response_text_lines[:-i])

+ # sixth fallback - replace tabs with spaces

+ if '\t' in response_text:

+ response_text_copy = copy.deepcopy(response_text)

+ response_text_copy = response_text_copy.replace('\t', ' ')

try:

- data = yaml.safe_load(response_text_lines_tmp)

- get_logger().info(f"Successfully parsed AI prediction after removing {i} lines")

+ data = yaml.safe_load(response_text_copy)

+ get_logger().info(f"Successfully parsed AI prediction after replacing tabs with spaces")

return data

except:

pass

+ # seventh fallback - add indent for sections of code blocks

+ response_text_copy = copy.deepcopy(response_text)

+ response_text_copy_lines = response_text_copy.split('\n')

+ start_line = -1

+ for i, line in enumerate(response_text_copy_lines):

+ if 'existing_code:' in line or 'improved_code:' in line:

+ start_line = i

+ elif line.endswith(': |') or line.endswith(': |-') or line.endswith(': |2') or line.endswith(':'):

+ start_line = -1

+ elif start_line != -1:

+ response_text_copy_lines[i] = ' ' + line

+ response_text_copy = '\n'.join(response_text_copy_lines)

+ try:

+ data = yaml.safe_load(response_text_copy)

+ get_logger().info(f"Successfully parsed AI prediction after adding indent for sections of code blocks")

+ return data

+ except:

+ pass

+

+ # # sixth fallback - try to remove last lines

+ # for i in range(1, len(response_text_lines)):

+ # response_text_lines_tmp = '\n'.join(response_text_lines[:-i])

+ # try:

+ # data = yaml.safe_load(response_text_lines_tmp)

+ # get_logger().info(f"Successfully parsed AI prediction after removing {i} lines")

+ # return data

+ # except:

+ # pass

+

+

def set_custom_labels(variables, git_provider=None):

if not get_settings().config.enable_custom_labels:
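
Review note: the seventh fallback added above re-indents the lines that follow an `existing_code:` or `improved_code:` key so that they parse as part of a YAML block scalar. The sketch below isolates that string transformation without calling a YAML parser. `indent_code_sections` is a hypothetical helper name, and the four-space indent is an assumption, since the exact indent width is not recoverable from this diff.

```python
def indent_code_sections(text: str, indent: str = "    ") -> str:
    """Indent lines that follow an existing_code:/improved_code: key."""
    lines = text.split("\n")
    inside_code = False
    for i, line in enumerate(lines):
        if "existing_code:" in line or "improved_code:" in line:
            # A code section starts on the next line.
            inside_code = True
        elif line.endswith((": |", ": |-", ": |2", ":")):
            # Any other line ending like a YAML key closes the section.
            inside_code = False
        elif inside_code:
            lines[i] = indent + line
    return "\n".join(lines)


sample = "existing_code: |\nfoo()\nbar()\nlabel:\nbaz"
print(indent_code_sections(sample))
```

One thing to check during review: a code line that itself ends with `:` (for example a Python `if x:`) satisfies the same `endswith` test and closes the section early, so the lines after it are left unindented.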

diff --git a/pr_agent/git_providers/__init__.py b/pr_agent/git_providers/__init__.py

index 8ee2db08..e4acfc22 100644

--- a/pr_agent/git_providers/__init__.py

+++ b/pr_agent/git_providers/__init__.py

@@ -12,6 +12,7 @@ from pr_agent.git_providers.gitea_provider import GiteaProvider

from pr_agent.git_providers.github_provider import GithubProvider

from pr_agent.git_providers.gitlab_provider import GitLabProvider

from pr_agent.git_providers.local_git_provider import LocalGitProvider

+from pr_agent.git_providers.gitea_provider import GiteaProvider

_GIT_PROVIDERS = {

'github': GithubProvider,

@@ -22,7 +23,7 @@ _GIT_PROVIDERS = {

'codecommit': CodeCommitProvider,

'local': LocalGitProvider,

'gerrit': GerritProvider,

- 'gitea': GiteaProvider

+ 'gitea': GiteaProvider,

}

diff --git a/pr_agent/git_providers/azuredevops_provider.py b/pr_agent/git_providers/azuredevops_provider.py

index 35165bdd..d71a029f 100644

--- a/pr_agent/git_providers/azuredevops_provider.py

+++ b/pr_agent/git_providers/azuredevops_provider.py

@@ -618,7 +618,7 @@ class AzureDevopsProvider(GitProvider):

return pr_id

except Exception as e:

if get_settings().config.verbosity_level >= 2:

- get_logger().info(f"Failed to get pr id, error: {e}")

+ get_logger().info(f"Failed to get PR id, error: {e}")

return ""

def publish_file_comments(self, file_comments: list) -> bool:

diff --git a/pr_agent/git_providers/github_provider.py b/pr_agent/git_providers/github_provider.py

index 4f3a5ec7..fa52b7dc 100644

--- a/pr_agent/git_providers/github_provider.py

+++ b/pr_agent/git_providers/github_provider.py

@@ -96,7 +96,7 @@ class GithubProvider(GitProvider):

parsed_url = urlparse(given_url)

repo_path = (parsed_url.path.split('.git')[0])[1:] # //.git -> /

if not repo_path:

- get_logger().error(f"url is neither an issues url nor a pr url nor a valid git url: {given_url}. Returning empty result.")

+ get_logger().error(f"url is neither an issues url nor a PR url nor a valid git url: {given_url}. Returning empty result.")

return ""

return repo_path

except Exception as e:

diff --git a/pr_agent/git_providers/utils.py b/pr_agent/git_providers/utils.py

index 1b4232df..0cfbe116 100644

--- a/pr_agent/git_providers/utils.py

+++ b/pr_agent/git_providers/utils.py

@@ -6,8 +6,7 @@ from dynaconf import Dynaconf

from starlette_context import context

from pr_agent.config_loader import get_settings

-from pr_agent.git_providers import (get_git_provider,

- get_git_provider_with_context)

+from pr_agent.git_providers import get_git_provider_with_context

from pr_agent.log import get_logger

diff --git a/pr_agent/identity_providers/identity_provider.py b/pr_agent/identity_providers/identity_provider.py

index 58e5f6c6..0fdb9a37 100644

--- a/pr_agent/identity_providers/identity_provider.py

+++ b/pr_agent/identity_providers/identity_provider.py

@@ -10,7 +10,7 @@ class Eligibility(Enum):

class IdentityProvider(ABC):

@abstractmethod

- def verify_eligibility(self, git_provider, git_provier_id, pr_url):

+ def verify_eligibility(self, git_provider, git_provider_id, pr_url):

pass

@abstractmethod

diff --git a/pr_agent/servers/bitbucket_app.py b/pr_agent/servers/bitbucket_app.py

index a641adeb..0d75d143 100644

--- a/pr_agent/servers/bitbucket_app.py

+++ b/pr_agent/servers/bitbucket_app.py

@@ -127,6 +127,14 @@ def should_process_pr_logic(data) -> bool:

source_branch = pr_data.get("source", {}).get("branch", {}).get("name", "")

target_branch = pr_data.get("destination", {}).get("branch", {}).get("name", "")

sender = _get_username(data)

+ repo_full_name = pr_data.get("destination", {}).get("repository", {}).get("full_name", "")

+

+ # logic to ignore PRs from specific repositories

+ ignore_repos = get_settings().get("CONFIG.IGNORE_REPOSITORIES", [])

+ if repo_full_name and ignore_repos:

+ if any(re.search(regex, repo_full_name) for regex in ignore_repos):

+ get_logger().info(f"Ignoring PR from repository '{repo_full_name}' due to 'config.ignore_repositories' setting")

+ return False

# logic to ignore PRs from specific users

ignore_pr_users = get_settings().get("CONFIG.IGNORE_PR_AUTHORS", [])

diff --git a/pr_agent/servers/github_app.py b/pr_agent/servers/github_app.py

index 4576bd9d..36db3c69 100644

--- a/pr_agent/servers/github_app.py

+++ b/pr_agent/servers/github_app.py

@@ -258,6 +258,14 @@ def should_process_pr_logic(body) -> bool:

source_branch = pull_request.get("head", {}).get("ref", "")

target_branch = pull_request.get("base", {}).get("ref", "")

sender = body.get("sender", {}).get("login")

+ repo_full_name = body.get("repository", {}).get("full_name", "")

+

+ # logic to ignore PRs from specific repositories

+ ignore_repos = get_settings().get("CONFIG.IGNORE_REPOSITORIES", [])

+ if ignore_repos and repo_full_name:

+ if any(re.search(regex, repo_full_name) for regex in ignore_repos):

+ get_logger().info(f"Ignoring PR from repository '{repo_full_name}' due to 'config.ignore_repositories' setting")

+ return False

# logic to ignore PRs from specific users

ignore_pr_users = get_settings().get("CONFIG.IGNORE_PR_AUTHORS", [])

diff --git a/pr_agent/servers/gitlab_webhook.py b/pr_agent/servers/gitlab_webhook.py

index 7a753c9e..af777a9a 100644

--- a/pr_agent/servers/gitlab_webhook.py

+++ b/pr_agent/servers/gitlab_webhook.py

@@ -113,6 +113,14 @@ def should_process_pr_logic(data) -> bool:

return False

title = data['object_attributes'].get('title')

sender = data.get("user", {}).get("username", "")

+ repo_full_name = data.get('project', {}).get('path_with_namespace', "")

+

+ # logic to ignore PRs from specific repositories

+ ignore_repos = get_settings().get("CONFIG.IGNORE_REPOSITORIES", [])

+ if ignore_repos and repo_full_name:

+ if any(re.search(regex, repo_full_name) for regex in ignore_repos):

+ get_logger().info(f"Ignoring MR from repository '{repo_full_name}' due to 'config.ignore_repositories' setting")

+ return False

# logic to ignore PRs from specific users

ignore_pr_users = get_settings().get("CONFIG.IGNORE_PR_AUTHORS", [])
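
Review note: the three `should_process_pr_logic` hunks above apply the same check, `re.search` of each configured pattern against the repository full name. Because `re.search` is unanchored, a pattern such as `my-org/my-repo1` also matches `my-org/my-repo10`. The sketch below shows that behavior. `should_ignore_repo` is a hypothetical helper that mirrors the added condition, not a function from the codebase.

```python
import re
from typing import List


def should_ignore_repo(repo_full_name: str, ignore_repos: List[str]) -> bool:
    """Return True if any configured regex is found in the repository full name."""
    if not (ignore_repos and repo_full_name):
        return False
    return any(re.search(pattern, repo_full_name) for pattern in ignore_repos)


patterns = ["my-org/my-repo1"]
print(should_ignore_repo("my-org/my-repo1", patterns))   # True
print(should_ignore_repo("my-org/my-repo10", patterns))  # True, the pattern is unanchored
print(should_ignore_repo("my-org/my-repo10", [r"^my-org/my-repo1$"]))  # False
```

If exact repository names are intended, the documentation example for `ignore_repositories` could use anchored patterns such as `^my-org/my-repo1$`.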

diff --git a/pr_agent/settings/configuration.toml b/pr_agent/settings/configuration.toml

index 421ecff4..f03a5e66 100644

--- a/pr_agent/settings/configuration.toml

+++ b/pr_agent/settings/configuration.toml

@@ -55,6 +55,7 @@ ignore_pr_target_branches = [] # a list of regular expressions of target branche

ignore_pr_source_branches = [] # a list of regular expressions of source branches to ignore from PR agent when an PR is created

ignore_pr_labels = [] # labels to ignore from PR agent when an PR is created

ignore_pr_authors = [] # authors to ignore from PR agent when an PR is created

+ignore_repositories = [] # a list of regular expressions of repository full names (e.g. "org/repo") to ignore from PR agent processing

#

is_auto_command = false # will be auto-set to true if the command is triggered by an automation

enable_ai_metadata = false # will enable adding ai metadata

@@ -80,6 +81,7 @@ require_ticket_analysis_review=true

# general options

persistent_comment=true

extra_instructions = ""

+num_max_findings = 3

final_update_message = true

# review labels

enable_review_labels_security=true

diff --git a/pr_agent/settings/pr_reviewer_prompts.toml b/pr_agent/settings/pr_reviewer_prompts.toml

index a322b2b9..d4c0a523 100644

--- a/pr_agent/settings/pr_reviewer_prompts.toml

+++ b/pr_agent/settings/pr_reviewer_prompts.toml

@@ -98,7 +98,7 @@ class Review(BaseModel):

{%- if question_str %}

insights_from_user_answers: str = Field(description="shortly summarize the insights you gained from the user's answers to the questions")

{%- endif %}

- key_issues_to_review: List[KeyIssuesComponentLink] = Field("A short and diverse list (0-3 issues) of high-priority bugs, problems or performance concerns introduced in the PR code, which the PR reviewer should further focus on and validate during the review process.")

+ key_issues_to_review: List[KeyIssuesComponentLink] = Field("A short and diverse list (0-{{ num_max_findings }} issues) of high-priority bugs, problems or performance concerns introduced in the PR code, which the PR reviewer should further focus on and validate during the review process.")

{%- if require_security_review %}

security_concerns: str = Field(description="Does this PR code introduce possible vulnerabilities such as exposure of sensitive information (e.g., API keys, secrets, passwords), or security concerns like SQL injection, XSS, CSRF, and others ? Answer 'No' (without explaining why) if there are no possible issues. If there are security concerns or issues, start your answer with a short header, such as: 'Sensitive information exposure: ...', 'SQL injection: ...' etc. Explain your answer. Be specific and give examples if possible")

{%- endif %}

diff --git a/pr_agent/tools/pr_description.py b/pr_agent/tools/pr_description.py

index 6ab13ee1..df82db67 100644

--- a/pr_agent/tools/pr_description.py

+++ b/pr_agent/tools/pr_description.py

@@ -199,7 +199,7 @@ class PRDescription:

async def _prepare_prediction(self, model: str) -> None:

if get_settings().pr_description.use_description_markers and 'pr_agent:' not in self.user_description:

- get_logger().info("Markers were enabled, but user description does not contain markers. skipping AI prediction")

+ get_logger().info("Markers were enabled, but user description does not contain markers. Skipping AI prediction")

return None

large_pr_handling = get_settings().pr_description.enable_large_pr_handling and "pr_description_only_files_prompts" in get_settings()

@@ -707,7 +707,7 @@ class PRDescription:

pr_body += """"""

except Exception as e:

- get_logger().error(f"Error processing pr files to markdown {self.pr_id}: {str(e)}")

+ get_logger().error(f"Error processing PR files to markdown {self.pr_id}: {str(e)}")

pass

return pr_body, pr_comments

diff --git a/pr_agent/tools/pr_reviewer.py b/pr_agent/tools/pr_reviewer.py

index ff48819f..714ee867 100644

--- a/pr_agent/tools/pr_reviewer.py

+++ b/pr_agent/tools/pr_reviewer.py

@@ -81,6 +81,7 @@ class PRReviewer:

"language": self.main_language,

"diff": "", # empty diff for initial calculation

"num_pr_files": self.git_provider.get_num_of_files(),

+ "num_max_findings": get_settings().pr_reviewer.num_max_findings,

"require_score": get_settings().pr_reviewer.require_score_review,

"require_tests": get_settings().pr_reviewer.require_tests_review,

"require_estimate_effort_to_review": get_settings().pr_reviewer.require_estimate_effort_to_review,

@@ -316,7 +317,9 @@ class PRReviewer:

get_logger().exception(f"Failed to remove previous review comment, error: {e}")

def _can_run_incremental_review(self) -> bool:

- """Checks if we can run incremental review according the various configurations and previous review"""

+ """

+ Checks if we can run incremental review according to the various configurations and previous review.

+ """

# checking if running in auto mode but there are no new commits

if self.is_auto and not self.incremental.first_new_commit_sha:

get_logger().info(f"Incremental review is enabled for {self.pr_url} but there are no new commits")

diff --git a/requirements.txt b/requirements.txt

index 1038af69..5290b749 100644

--- a/requirements.txt

+++ b/requirements.txt

@@ -13,7 +13,7 @@ google-cloud-aiplatform==1.38.0

google-generativeai==0.8.3

google-cloud-storage==2.10.0

Jinja2==3.1.2

-litellm==1.66.3

+litellm==1.69.3

loguru==0.7.2

msrest==0.7.1

openai>=1.55.3

diff --git a/tests/e2e_tests/test_gitea_app.py b/tests/e2e_tests/test_gitea_app.py

new file mode 100644

index 00000000..3a209975

--- /dev/null

+++ b/tests/e2e_tests/test_gitea_app.py

@@ -0,0 +1,185 @@

+import os

+import time

+import requests

+from datetime import datetime

+

+from pr_agent.config_loader import get_settings

+from pr_agent.log import get_logger, setup_logger

+from tests.e2e_tests.e2e_utils import (FILE_PATH,

+ IMPROVE_START_WITH_REGEX_PATTERN,

+ NEW_FILE_CONTENT, NUM_MINUTES,

+ PR_HEADER_START_WITH, REVIEW_START_WITH)

+

+log_level = os.environ.get("LOG_LEVEL", "INFO")

+setup_logger(log_level)

+logger = get_logger()

+

+def test_e2e_run_gitea_app():

+ repo_name = 'pr-agent-tests'

+ owner = 'codiumai'

+ base_branch = "main"

+ new_branch = f"gitea_app_e2e_test-{datetime.now().strftime('%Y-%m-%d-%H-%M-%S')}"

+ get_settings().config.git_provider = "gitea"

+

+ headers = None

+ pr_number = None

+

+ try:

+ gitea_url = get_settings().get("GITEA.URL", None)

+ gitea_token = get_settings().get("GITEA.TOKEN", None)

+

+ if not gitea_url:

+ logger.error("GITEA.URL is not set in the configuration")

+ logger.info("Please set GITEA.URL in .env file or environment variables")

+ assert False, "GITEA.URL is not set in the configuration"

+

+ if not gitea_token:

+ logger.error("GITEA.TOKEN is not set in the configuration")

+ logger.info("Please set GITEA.TOKEN in .env file or environment variables")

+ assert False, "GITEA.TOKEN is not set in the configuration"

+

+ headers = {

+ 'Authorization': f'token {gitea_token}',

+ 'Content-Type': 'application/json',

+ 'Accept': 'application/json'

+ }

+

+ logger.info(f"Creating a new branch {new_branch} from {base_branch}")

+

+ response = requests.get(

+ f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/branches/{base_branch}",

+ headers=headers

+ )

+ response.raise_for_status()

+ base_branch_data = response.json()

+ base_commit_sha = base_branch_data['commit']['id']

+

+ branch_data = {

+ 'ref': f"refs/heads/{new_branch}",

+ 'sha': base_commit_sha

+ }

+ response = requests.post(

+ f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/git/refs",

+ headers=headers,

+ json=branch_data

+ )

+ response.raise_for_status()

+

+ logger.info(f"Updating file {FILE_PATH} in branch {new_branch}")

+

+ import base64

+ file_content_encoded = base64.b64encode(NEW_FILE_CONTENT.encode()).decode()

+

+ try:

+ response = requests.get(

+ f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/contents/{FILE_PATH}?ref={new_branch}",

+ headers=headers

+ )

+ response.raise_for_status()

+ existing_file = response.json()

+ file_sha = existing_file.get('sha')

+

+ file_data = {

+ 'message': 'Update cli_pip.py',

+ 'content': file_content_encoded,

+ 'sha': file_sha,

+ 'branch': new_branch

+ }

+ except Exception:  # file lookup failed (e.g. it does not exist yet): create it instead of updating

+ file_data = {

+ 'message': 'Add cli_pip.py',

+ 'content': file_content_encoded,

+ 'branch': new_branch

+ }

+

+ response = requests.put(

+ f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/contents/{FILE_PATH}",

+ headers=headers,

+ json=file_data

+ )

+ response.raise_for_status()

+

+ logger.info(f"Creating a pull request from {new_branch} to {base_branch}")

+ pr_data = {

+ 'title': f'Test PR from {new_branch}',

+ 'body': 'update cli_pip.py',

+ 'head': new_branch,

+ 'base': base_branch

+ }

+ response = requests.post(

+ f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/pulls",

+ headers=headers,

+ json=pr_data

+ )

+ response.raise_for_status()

+ pr = response.json()

+ pr_number = pr['number']

+

+ for i in range(NUM_MINUTES):

+ logger.info("Waiting for the PR to get all the tool results...")

+ time.sleep(60)

+

+ response = requests.get(

+ f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/issues/{pr_number}/comments",

+ headers=headers

+ )

+ response.raise_for_status()

+ comments = response.json()

+

+ if len(comments) >= 5:

+ valid_review = False

+ for comment in comments:

+ if comment['body'].startswith('## PR Reviewer Guide 🔍'):

+ valid_review = True

+ break

+ if valid_review:

+ break

+ else:

+ logger.error("REVIEW feedback is invalid")

+ raise Exception("REVIEW feedback is invalid")

+ else:

+ logger.info(f"Waiting for the PR to get all the tool results. {i + 1} minute(s) passed")

+ else:

+ assert False, f"After {NUM_MINUTES} minutes, the PR did not get all the tool results"

+

+ logger.info(f"Cleaning up: closing PR and deleting branch {new_branch}")

+

+ close_data = {'state': 'closed'}

+ response = requests.patch(

+ f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/pulls/{pr_number}",

+ headers=headers,

+ json=close_data

+ )

+ response.raise_for_status()

+

+ response = requests.delete(

+ f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/git/refs/heads/{new_branch}",

+ headers=headers

+ )

+ response.raise_for_status()

+

+ logger.info("Succeeded in running e2e test for Gitea app on the PR")

+ except Exception as e:

+ logger.error(f"Failed to run e2e test for Gitea app: {e}")

+ raise

+ finally:

+ try:

+ if headers is None or gitea_url is None:

+ return

+

+ if pr_number is not None:

+ requests.patch(

+ f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/pulls/{pr_number}",

+ headers=headers,

+ json={'state': 'closed'}

+ )

+

+ requests.delete(

+ f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/git/refs/heads/{new_branch}",

+ headers=headers

+ )

+ except Exception as cleanup_error:

+ logger.error(f"Failed to clean up after test: {cleanup_error}")

+

+if __name__ == '__main__':

+ test_e2e_run_gitea_app()

\ No newline at end of file

diff --git a/tests/unittest/test_gitea_provider.py b/tests/unittest/test_gitea_provider.py

new file mode 100644

index 00000000..d88de0e0

--- /dev/null

+++ b/tests/unittest/test_gitea_provider.py

@@ -0,0 +1,126 @@

+from unittest.mock import MagicMock, patch

+

+import pytest

+

+from pr_agent.algo.types import EDIT_TYPE

+from pr_agent.git_providers.gitea_provider import GiteaProvider

+

+

+class TestGiteaProvider:

+ """Unit-tests for GiteaProvider following project style (explicit object construction, minimal patching)."""

+

+ def _provider(self):

+ """Create provider instance with patched settings and avoid real HTTP calls."""

+ with patch('pr_agent.git_providers.gitea_provider.get_settings') as mock_get_settings, \

+ patch('requests.get') as mock_get:

+ settings = MagicMock()

+ settings.get.side_effect = lambda k, d=None: {

+ 'GITEA.URL': 'https://gitea.example.com',

+ 'GITEA.TOKEN': 'test-token'

+ }.get(k, d)

+ mock_get_settings.return_value = settings

+ # Stub the PR fetch triggered during provider initialization

+ pr_resp = MagicMock()

+ pr_resp.json.return_value = {

+ 'title': 'stub',

+ 'body': 'stub',

+ 'head': {'ref': 'main'},

+ 'user': {'id': 1}

+ }

+ pr_resp.raise_for_status = MagicMock()

+ mock_get.return_value = pr_resp

+ return GiteaProvider('https://gitea.example.com/owner/repo/pulls/123')

+

+ # ---------------- URL parsing ----------------

+ def test_parse_pr_url_valid(self):

+ owner, repo, pr_num = GiteaProvider._parse_pr_url('https://gitea.example.com/owner/repo/pulls/123')

+ assert (owner, repo, pr_num) == ('owner', 'repo', '123')

+

+ def test_parse_pr_url_invalid(self):

+ with pytest.raises(ValueError):

+ GiteaProvider._parse_pr_url('https://gitea.example.com/owner/repo')

+

+ # ---------------- simple getters ----------------

+ def test_get_files(self):

+ provider = self._provider()

+ mock_resp = MagicMock()

+ mock_resp.json.return_value = [{'filename': 'a.txt'}, {'filename': 'b.txt'}]

+ mock_resp.raise_for_status = MagicMock()

+ with patch('requests.get', return_value=mock_resp) as mock_get:

+ assert provider.get_files() == ['a.txt', 'b.txt']

+ mock_get.assert_called_once()

+

+ def test_get_diff_files(self):

+ provider = self._provider()

+ mock_resp = MagicMock()

+ mock_resp.json.return_value = [

+ {'filename': 'f1', 'previous_filename': 'old_f1', 'status': 'renamed', 'patch': ''},

+ {'filename': 'f2', 'status': 'added', 'patch': ''},

+ {'filename': 'f3', 'status': 'deleted', 'patch': ''},

+ {'filename': 'f4', 'status': 'modified', 'patch': ''}

+ ]

+ mock_resp.raise_for_status = MagicMock()

+ with patch('requests.get', return_value=mock_resp):

+ res = provider.get_diff_files()

+ assert [f.edit_type for f in res] == [EDIT_TYPE.RENAMED, EDIT_TYPE.ADDED, EDIT_TYPE.DELETED,

+ EDIT_TYPE.MODIFIED]

+

+ # ---------------- publishing methods ----------------

+ def test_publish_description(self):

+ provider = self._provider()

+ mock_resp = MagicMock()

+ mock_resp.raise_for_status = MagicMock()

+ with patch('requests.patch', return_value=mock_resp) as mock_patch:

+ provider.publish_description('t', 'b')

+ mock_patch.assert_called_once()

+

+ def test_publish_comment(self):

+ provider = self._provider()

+ mock_resp = MagicMock()

+ mock_resp.raise_for_status = MagicMock()

+ with patch('requests.post', return_value=mock_resp) as mock_post:

+ provider.publish_comment('c')

+ mock_post.assert_called_once()

+

+ def test_publish_inline_comment(self):

+ provider = self._provider()

+ mock_resp = MagicMock()

+ mock_resp.raise_for_status = MagicMock()

+ with patch('requests.post', return_value=mock_resp) as mock_post:

+ provider.publish_inline_comment('body', 'file', '10')

+ mock_post.assert_called_once()

+

+ # ---------------- labels & reactions ----------------

+ def test_get_pr_labels(self):

+ provider = self._provider()

+ mock_resp = MagicMock()

+ mock_resp.raise_for_status = MagicMock()

+ mock_resp.json.return_value = [{'name': 'l1'}]

+ with patch('requests.get', return_value=mock_resp):

+ assert provider.get_pr_labels() == ['l1']

+

+ def test_add_eyes_reaction(self):

+ provider = self._provider()

+ mock_resp = MagicMock()

+ mock_resp.raise_for_status = MagicMock()

+ mock_resp.json.return_value = {'id': 7}

+ with patch('requests.post', return_value=mock_resp):

+ assert provider.add_eyes_reaction(1) == 7

+

+ # ---------------- commit messages & url helpers ----------------

+ def test_get_commit_messages(self):

+ provider = self._provider()

+ mock_resp = MagicMock()

+ mock_resp.raise_for_status = MagicMock()

+ mock_resp.json.return_value = [

+ {'commit': {'message': 'm1'}}, {'commit': {'message': 'm2'}}]

+ with patch('requests.get', return_value=mock_resp):

+ assert provider.get_commit_messages() == ['m1', 'm2']

+

+ def test_git_url_helpers(self):

+ provider = self._provider()

+ issues_url = 'https://gitea.example.com/owner/repo/pulls/3'

+ assert provider.get_git_repo_url(issues_url) == 'https://gitea.example.com/owner/repo.git'

+ prefix, suffix = provider.get_canonical_url_parts('https://gitea.example.com/owner/repo.git', 'dev')

+ assert prefix == 'https://gitea.example.com/owner/repo/src/branch/dev'

+ assert suffix == ''
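Reviewer note: the URL-parsing tests above pin down the contract of `GiteaProvider._parse_pr_url` (a three-string tuple for `/owner/repo/pulls/<n>`, `ValueError` otherwise), but the implementation is not part of this hunk. The sketch below is one way that contract could be satisfied. The function name `parse_pr_url` and the strict four-segment path check are assumptions for illustration, not the provider's actual code, and a Gitea instance served under a sub-path would need a looser check.

```python
from typing import Tuple
from urllib.parse import urlparse


def parse_pr_url(pr_url: str) -> Tuple[str, str, str]:
    """Hypothetical stand-in for GiteaProvider._parse_pr_url."""
    # Split the URL path into its non-empty segments.
    parts = [p for p in urlparse(pr_url).path.split("/") if p]
    # Assumed shape: <owner>/<repo>/pulls/<number>
    if len(parts) != 4 or parts[2] != "pulls" or not parts[3].isdigit():
        raise ValueError(f"Not a valid Gitea pull request URL: {pr_url}")
    owner, repo, _, pr_number = parts
    # The PR number is returned as a string, matching the test's expectation.
    return owner, repo, pr_number
```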

diff --git a/tests/unittest/test_ignore_repositories.py b/tests/unittest/test_ignore_repositories.py

new file mode 100644

index 00000000..e05447d5

--- /dev/null

+++ b/tests/unittest/test_ignore_repositories.py

@@ -0,0 +1,79 @@

+import pytest

+from pr_agent.servers.github_app import should_process_pr_logic as github_should_process_pr_logic

+from pr_agent.servers.bitbucket_app import should_process_pr_logic as bitbucket_should_process_pr_logic

+from pr_agent.servers.gitlab_webhook import should_process_pr_logic as gitlab_should_process_pr_logic

+from pr_agent.config_loader import get_settings

+

+def make_bitbucket_payload(full_name):

+ return {

+ "data": {

+ "pullrequest": {

+ "title": "Test PR",

+ "source": {"branch": {"name": "feature/test"}},

+ "destination": {

+ "branch": {"name": "main"},

+ "repository": {"full_name": full_name}

+ }

+ },

+ "actor": {"username": "user", "type": "user"}

+ }

+ }

+

+def make_github_body(full_name):

+ return {

+ "pull_request": {},

+ "repository": {"full_name": full_name},

+ "sender": {"login": "user"}

+ }

+

+def make_gitlab_body(full_name):

+ return {

+ "object_attributes": {"title": "Test MR"},

+ "project": {"path_with_namespace": full_name}

+ }

+

+PROVIDERS = [

+ ("github", github_should_process_pr_logic, make_github_body),

+ ("bitbucket", bitbucket_should_process_pr_logic, make_bitbucket_payload),

+ ("gitlab", gitlab_should_process_pr_logic, make_gitlab_body),

+]

+

+class TestIgnoreRepositories:

+ def setup_method(self):

+ get_settings().set("CONFIG.IGNORE_REPOSITORIES", [])

+

+ @pytest.mark.parametrize("provider_name, provider_func, body_func", PROVIDERS)

+ def test_should_ignore_matching_repository(self, provider_name, provider_func, body_func):

+ get_settings().set("CONFIG.IGNORE_REPOSITORIES", ["org/repo-to-ignore"])

+ body = {

+ "pull_request": {},

+ "repository": {"full_name": "org/repo-to-ignore"},

+ "sender": {"login": "user"}

+ }

+ result = provider_func(body_func(body["repository"]["full_name"]))

+ # print(f"DEBUG: Provider={provider_name}, test_should_ignore_matching_repository, result={result}")

+ assert result is False, f"{provider_name}: PR from ignored repository should be ignored (return False)"

+

+ @pytest.mark.parametrize("provider_name, provider_func, body_func", PROVIDERS)

+ def test_should_not_ignore_non_matching_repository(self, provider_name, provider_func, body_func):

+ get_settings().set("CONFIG.IGNORE_REPOSITORIES", ["org/repo-to-ignore"])

+ body = {

+ "pull_request": {},

+ "repository": {"full_name": "org/other-repo"},

+ "sender": {"login": "user"}

+ }

+ result = provider_func(body_func(body["repository"]["full_name"]))

+ # print(f"DEBUG: Provider={provider_name}, test_should_not_ignore_non_matching_repository, result={result}")

+ assert result is True, f"{provider_name}: PR from non-ignored repository should not be ignored (return True)"

+

+ @pytest.mark.parametrize("provider_name, provider_func, body_func", PROVIDERS)

+ def test_should_not_ignore_when_config_empty(self, provider_name, provider_func, body_func):

+ get_settings().set("CONFIG.IGNORE_REPOSITORIES", [])

+ body = {

+ "pull_request": {},

+ "repository": {"full_name": "org/repo-to-ignore"},

+ "sender": {"login": "user"}

+ }

+ result = provider_func(body_func(body["repository"]["full_name"]))

+ # print(f"DEBUG: Provider={provider_name}, test_should_not_ignore_when_config_empty, result={result}")

+ assert result is True, f"{provider_name}: PR should not be ignored if ignore_repositories config is empty"

\ No newline at end of file
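Reviewer note: the three parametrized tests above describe the expected behaviour of `should_process_pr_logic` with respect to `CONFIG.IGNORE_REPOSITORIES`: `False` for a listed repository, `True` otherwise, and `True` when the list is empty. A minimal sketch of that decision follows. The helper name `should_process_repo` and the exact-match comparison are assumptions; the real server code is not shown in this hunk and may match entries differently (for example, as patterns).

```python
from typing import Iterable


def should_process_repo(repo_full_name: str, ignore_repositories: Iterable[str]) -> bool:
    """Hypothetical helper: False when the repository is on the ignore list."""
    # Exact string match is an assumption made for this sketch.
    return repo_full_name not in set(ignore_repositories)
```

With this helper, each provider would only need to extract the repository's full name from its own payload shape (`repository.full_name` for GitHub, `project.path_with_namespace` for GitLab, and so on) before calling it.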

diff --git a/tests/unittest/test_try_fix_yaml.py b/tests/unittest/test_try_fix_yaml.py

index 336c4af5..826d7312 100644

--- a/tests/unittest/test_try_fix_yaml.py

+++ b/tests/unittest/test_try_fix_yaml.py

@@ -32,11 +32,6 @@ age: 35

expected_output = {'name': 'John Smith', 'age': 35}

assert try_fix_yaml(review_text) == expected_output

- # The function removes the last line(s) of the YAML string and successfully parses the YAML string.

- def test_remove_last_line(self):

- review_text = "key: value\nextra invalid line\n"

- expected_output = {"key": "value"}

- assert try_fix_yaml(review_text) == expected_output

# The YAML string is empty.

def test_empty_yaml_fixed(self):