diff --git a/README.md b/README.md

index 0fc53a1d..b6a5f901 100644

--- a/README.md

+++ b/README.md

@@ -179,43 +179,6 @@ ___

-

-

-[//]: # ()

-

-[//]: # ()

-

-[//]: # (

)

-

-[//]: # ( )

-

-[//]: # (

)

-

-[//]: # (

)

-

-[//]: # (

)

-

-[//]: # (

)

-

-[//]: # ( )

-

-[//]: # (

)

-

-[//]: # (

)

-

-[//]: # (

)

-

-[//]: # (

)

-

-[//]: # ( )

-

-[//]: # (

)

-

-[//]: # (

)

-

-[//]: # (

diff --git a/docs/docs/tools/review.md b/docs/docs/tools/review.md

index f3a4f799..1552bc9b 100644

--- a/docs/docs/tools/review.md

+++ b/docs/docs/tools/review.md

@@ -46,47 +46,6 @@ extra_instructions = "..."

- The `pr_commands` lists commands that will be executed automatically when a PR is opened.

- The `[pr_reviewer]` section contains the configurations for the `review` tool you want to edit (if any).

-[//]: # ()

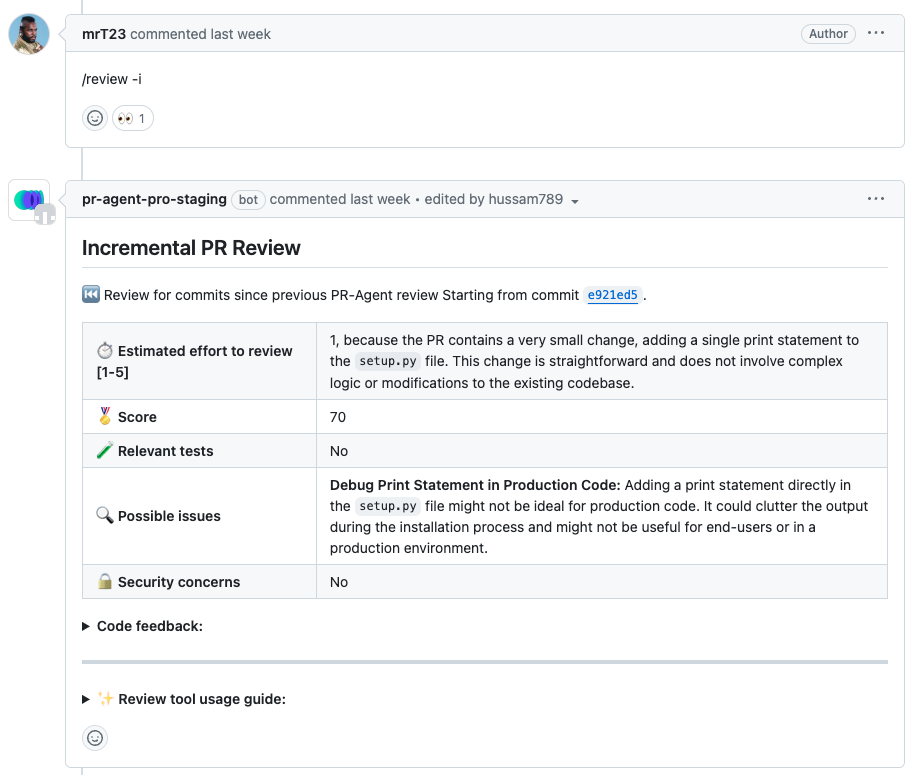

-[//]: # (### Incremental Mode)

-

-[//]: # (Incremental review only considers changes since the last Qodo Merge review. This can be useful when working on the PR in an iterative manner, and you want to focus on the changes since the last review instead of reviewing the entire PR again.)

-

-[//]: # (For invoking the incremental mode, the following command can be used:)

-

-[//]: # (```)

-

-[//]: # (/review -i)

-

-[//]: # (```)

-

-[//]: # (Note that the incremental mode is only available for GitHub.)

-

-[//]: # ()

-[//]: # ({width=512})

-

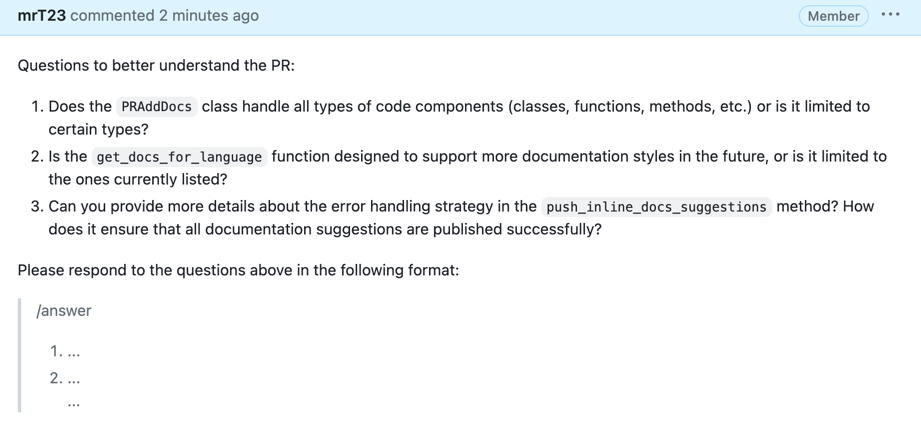

-[//]: # (### PR Reflection)

-

-[//]: # ()

-[//]: # (By invoking:)

-

-[//]: # (```)

-

-[//]: # (/reflect_and_review)

-

-[//]: # (```)

-

-[//]: # (The tool will first ask the author questions about the PR, and will guide the review based on their answers.)

-

-[//]: # ()

-[//]: # ({width=512})

-

-[//]: # ()

-[//]: # ({width=512})

-

-[//]: # ()

-[//]: # ({width=512})

-

-

## Configuration options

diff --git a/pr_agent/agent/pr_agent.py b/pr_agent/agent/pr_agent.py

index 15491ac9..53e05f03 100644

--- a/pr_agent/agent/pr_agent.py

+++ b/pr_agent/agent/pr_agent.py

@@ -13,7 +13,6 @@ from pr_agent.tools.pr_config import PRConfig
 from pr_agent.tools.pr_description import PRDescription
 from pr_agent.tools.pr_generate_labels import PRGenerateLabels
 from pr_agent.tools.pr_help_message import PRHelpMessage
-from pr_agent.tools.pr_information_from_user import PRInformationFromUser
 from pr_agent.tools.pr_line_questions import PR_LineQuestions
 from pr_agent.tools.pr_questions import PRQuestions
 from pr_agent.tools.pr_reviewer import PRReviewer
@@ -25,8 +24,6 @@ command2class = {
     "answer": PRReviewer,
     "review": PRReviewer,
     "review_pr": PRReviewer,
-    "reflect": PRInformationFromUser,
-    "reflect_and_review": PRInformationFromUser,
     "describe": PRDescription,
     "describe_pr": PRDescription,
     "improve": PRCodeSuggestions,

diff --git a/pr_agent/tools/pr_information_from_user.py b/pr_agent/tools/pr_information_from_user.py

deleted file mode 100644

index e5bd2f72..00000000

--- a/pr_agent/tools/pr_information_from_user.py

+++ /dev/null

@@ -1,79 +0,0 @@

-import copy
-from functools import partial
-
-from jinja2 import Environment, StrictUndefined
-
-from pr_agent.algo.ai_handlers.base_ai_handler import BaseAiHandler
-from pr_agent.algo.ai_handlers.litellm_ai_handler import LiteLLMAIHandler
-from pr_agent.algo.pr_processing import get_pr_diff, retry_with_fallback_models
-from pr_agent.algo.token_handler import TokenHandler
-from pr_agent.config_loader import get_settings
-from pr_agent.git_providers import get_git_provider
-from pr_agent.git_providers.git_provider import get_main_pr_language
-from pr_agent.log import get_logger
-
-
-class PRInformationFromUser:
-    def __init__(self, pr_url: str, args: list = None,
-                 ai_handler: partial[BaseAiHandler,] = LiteLLMAIHandler):
-        self.git_provider = get_git_provider()(pr_url)
-        self.main_pr_language = get_main_pr_language(
-            self.git_provider.get_languages(), self.git_provider.get_files()
-        )
-        self.ai_handler = ai_handler()
-        self.ai_handler.main_pr_language = self.main_pr_language
-
-        self.vars = {
-            "title": self.git_provider.pr.title,
-            "branch": self.git_provider.get_pr_branch(),
-            "description": self.git_provider.get_pr_description(),
-            "language": self.main_pr_language,
-            "diff": "",  # empty diff for initial calculation
-            "commit_messages_str": self.git_provider.get_commit_messages(),
-        }
-        self.token_handler = TokenHandler(self.git_provider.pr,
-                                          self.vars,
-                                          get_settings().pr_information_from_user_prompt.system,
-                                          get_settings().pr_information_from_user_prompt.user)
-        self.patches_diff = None
-        self.prediction = None
-
-    async def run(self):
-        get_logger().info('Generating question to the user...')
-        if get_settings().config.publish_output:
-            self.git_provider.publish_comment("Preparing questions...", is_temporary=True)
-        await retry_with_fallback_models(self._prepare_prediction)
-        get_logger().info('Preparing questions...')
-        pr_comment = self._prepare_pr_answer()
-        if get_settings().config.publish_output:
-            get_logger().info('Pushing questions...')
-            self.git_provider.publish_comment(pr_comment)
-            self.git_provider.remove_initial_comment()
-        return ""
-
-    async def _prepare_prediction(self, model):
-        get_logger().info('Getting PR diff...')
-        self.patches_diff = get_pr_diff(self.git_provider, self.token_handler, model)
-        get_logger().info('Getting AI prediction...')
-        self.prediction = await self._get_prediction(model)
-
-    async def _get_prediction(self, model: str):
-        variables = copy.deepcopy(self.vars)
-        variables["diff"] = self.patches_diff  # update diff
-        environment = Environment(undefined=StrictUndefined)
-        system_prompt = environment.from_string(get_settings().pr_information_from_user_prompt.system).render(variables)
-        user_prompt = environment.from_string(get_settings().pr_information_from_user_prompt.user).render(variables)
-        if get_settings().config.verbosity_level >= 2:
-            get_logger().info(f"\nSystem prompt:\n{system_prompt}")
-            get_logger().info(f"\nUser prompt:\n{user_prompt}")
-        response, finish_reason = await self.ai_handler.chat_completion(
-            model=model, temperature=get_settings().config.temperature, system=system_prompt, user=user_prompt)
-        return response
-
-    def _prepare_pr_answer(self) -> str:
-        model_output = self.prediction.strip()
-        if get_settings().config.verbosity_level >= 2:
-            get_logger().info(f"answer_str:\n{model_output}")
-        answer_str = f"{model_output}\n\n Please respond to the questions above in the following format:\n\n" +\
-                     "\n>/answer\n>1) ...\n>2) ...\n>...\n"
-        return answer_str
