mirror of

https://github.com/qodo-ai/pr-agent.git

synced 2025-07-21 04:50:39 +08:00

Merge remote-tracking branch 'origin/main'


33

docs/docs/core-abilities/incremental_update.md

Normal file

@ -0,0 +1,33 @@

|

||||

# Incremental Update 💎

|

||||

|

||||

`Supported Git Platforms: GitHub`

|

||||

|

||||

## Overview

|

||||

The Incremental Update feature helps users focus on feedback for their newest changes, making large PRs more manageable.

|

||||

|

||||

### How it works

|

||||

|

||||

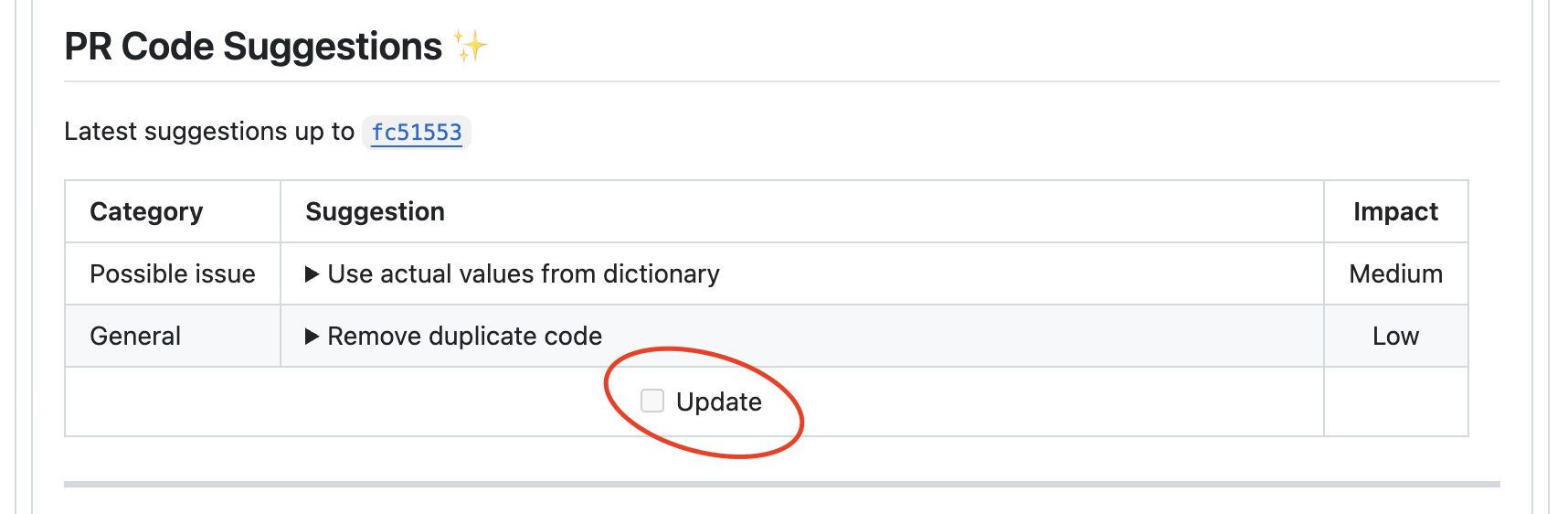

=== "Update Option on Subsequent Commits"

|

||||

{width=512}

|

||||

|

||||

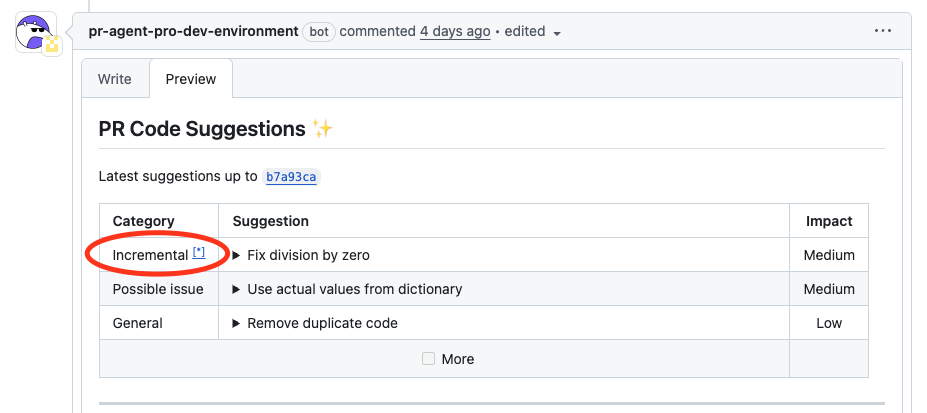

=== "Generation of Incremental Update"

|

||||

{width=512}

|

||||

|

||||

___

|

||||

|

||||

Whenever new commits are pushed following a recent code suggestions report for this PR, an Update button appears (as seen above).

|

||||

|

||||

Once the user clicks on the button:

|

||||

|

||||

- The `improve` tool identifies the new changes (the "delta")

|

||||

- Provides suggestions on these recent changes

|

||||

- Combines these suggestions with the overall PR feedback, prioritizing delta-related comments

|

||||

- Marks delta-related comments with a textual indication followed by an asterisk (*) with a link to this page, so they can easily be identified
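
The steps above can be sketched roughly as follows. This is only an illustration of the "prioritize and mark the delta" idea, not Qodo Merge's implementation: the `Suggestion` type, the `merge_suggestions` helper, and the marker text are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Suggestion:
    """Hypothetical suggestion record; not a Qodo Merge type."""
    file: str
    text: str
    from_delta: bool = False


def merge_suggestions(overall, delta, marker="(new changes)*"):
    """Place delta-related suggestions first and tag them with a marker."""
    marked = [Suggestion(s.file, f"{s.text} {marker}", True) for s in delta]
    delta_keys = {(s.file, s.text) for s in delta}
    # Drop overall suggestions that the delta already covers.
    rest = [s for s in overall if (s.file, s.text) not in delta_keys]
    return marked + rest


overall = [Suggestion("a.py", "Add a timeout"), Suggestion("b.py", "Handle None")]
delta = [Suggestion("b.py", "Handle None")]
merged = merge_suggestions(overall, delta)
for s in merged:
    print(s.file, "-", s.text)
```

In this sketch the delta suggestion for `b.py` is listed first and carries the asterisk marker, while the remaining overall feedback follows unmarked.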


### Benefits for Developers

- Focus on what matters: See feedback on newest code first
- Clearer organization: Comments on recent changes are clearly marked
- Better workflow: Address feedback more systematically, starting with recent changes


@ -8,6 +8,7 @@ Qodo Merge utilizes a variety of core abilities to provide a comprehensive and e

|

||||

- [Dynamic context](https://qodo-merge-docs.qodo.ai/core-abilities/dynamic_context/)

|

||||

- [Fetching ticket context](https://qodo-merge-docs.qodo.ai/core-abilities/fetching_ticket_context/)

|

||||

- [Impact evaluation](https://qodo-merge-docs.qodo.ai/core-abilities/impact_evaluation/)

|

||||

- [Incremental Update](https://qodo-merge-docs.qodo.ai/core-abilities/incremental_update/)

|

||||

- [Interactivity](https://qodo-merge-docs.qodo.ai/core-abilities/interactivity/)

|

||||

- [Local and global metadata](https://qodo-merge-docs.qodo.ai/core-abilities/metadata/)

|

||||

- [RAG context enrichment](https://qodo-merge-docs.qodo.ai/core-abilities/rag_context_enrichment/)

|

||||

|

||||

@ -67,6 +67,7 @@ PR-Agent and Qodo Merge offers extensive pull request functionalities across var

|

||||

| | [Impact Evaluation](https://qodo-merge-docs.qodo.ai/core-abilities/impact_evaluation/) 💎 | ✅ | ✅ | | |

|

||||

| | [Code Validation 💎](https://qodo-merge-docs.qodo.ai/core-abilities/code_validation/) | ✅ | ✅ | ✅ | ✅ |

|

||||

| | [Auto Best Practices 💎](https://qodo-merge-docs.qodo.ai/core-abilities/auto_best_practices/) | ✅ | | | |

|

||||

| | [Incremental Update 💎](https://qodo-merge-docs.qodo.ai/core-abilities/incremental_update/) | ✅ | | | |

|

||||

!!! note "💎 means Qodo Merge only"

|

||||

Throughout the documentation, 💎 marks a feature available only in [Qodo Merge](https://www.codium.ai/pricing/){:target="_blank"}, and not in the open-source version.

|

||||

|

||||

|

||||

@ -193,9 +193,8 @@ For example: `GITHUB.WEBHOOK_SECRET` --> `GITHUB__WEBHOOK_SECRET`

|

||||

3. Push image to ECR

|

||||

|

||||

```shell

|

||||

|

||||

docker tag codiumai/pr-agent:serverless <AWS_ACCOUNT>.dkr.ecr.<AWS_REGION>.amazonaws.com/codiumai/pr-agent:serverless

|

||||

docker push <AWS_ACCOUNT>.dkr.ecr.<AWS_REGION>.amazonaws.com/codiumai/pr-agent:serverless

|

||||

```

|

||||

|

||||

4. Create a lambda function that uses the uploaded image. Set the lambda timeout to be at least 3m.

|

||||

|

||||

@ -26,6 +26,29 @@ You can state a name of a specific component in the PR to get documentation only

|

||||

/add_docs component_name

|

||||

```

|

||||

|

||||

## Manual triggering

|

||||

|

||||

Comment `/add_docs` on a PR to invoke it manually.

|

||||

|

||||

## Automatic triggering

|

||||

|

||||

To automatically run the `add_docs` tool when a pull request is opened, define in a [configuration file](https://qodo-merge-docs.qodo.ai/usage-guide/configuration_options/):

|

||||

|

||||

|

||||

```toml

|

||||

[github_app]

|

||||

pr_commands = [

|

||||

"/add_docs",

|

||||

...

|

||||

]

|

||||

```

|

||||

|

||||

The `pr_commands` list defines commands that run automatically when a PR is opened.

|

||||

Since this is under the [github_app] section, it only applies when using the Qodo Merge GitHub App in GitHub environments.

|

||||

|

||||

!!! note

|

||||

By default, `/add_docs` is not triggered automatically. You must explicitly include it in `pr_commands` to enable this behavior.

|

||||

|

||||

## Configuration options

|

||||

|

||||

- `docs_style`: The exact style of the documentation (for Python docstrings). You can choose between: `google`, `numpy`, `sphinx`, `restructuredtext`, `plain`. Default is `sphinx`.

|

||||

|

||||

@ -70,6 +70,10 @@ extra_instructions = "..."

|

||||

<td><b>enable_help_text</b></td>

|

||||

<td>If set to true, the tool will display a help text in the comment. Default is true.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>num_max_findings</b></td>

|

||||

<td>Number of maximum returned findings. Default is 3.</td>

|

||||

</tr>

|

||||

</table>

|

||||

|

||||

!!! example "Enable\\disable specific sub-sections"

|

||||

@ -112,7 +116,7 @@ extra_instructions = "..."

|

||||

</tr>

|

||||

<tr>

|

||||

<td><b>enable_review_labels_effort</b></td>

|

||||

<td>If set to true, the tool will publish a 'Review effort [1-5]: x' label. Default is true.</td>

|

||||

<td>If set to true, the tool will publish a 'Review effort x/5' label (1–5 scale). Default is true.</td>

|

||||

</tr>

|

||||

</table>

|

||||

|

||||

@ -141,7 +145,7 @@ extra_instructions = "..."

|

||||

The `review` tool can auto-generate two specific types of labels for a PR:

|

||||

|

||||

- a `possible security issue` label that detects if a possible [security issue](https://github.com/Codium-ai/pr-agent/blob/tr/user_description/pr_agent/settings/pr_reviewer_prompts.toml#L136) exists in the PR code (`enable_review_labels_security` flag)

|

||||

- a `Review effort [1-5]: x` label, where x is the estimated effort to review the PR (`enable_review_labels_effort` flag)

|

||||

- a `Review effort x/5` label, where x is the estimated effort to review the PR on a 1–5 scale (`enable_review_labels_effort` flag)

|

||||

|

||||

Both labels are useful, and we recommend enabling them.

|

||||

|

||||

|

||||

@ -50,7 +50,7 @@ glob = ['*.py']

|

||||

And to ignore Python files in all PRs using `regex` pattern, set in a configuration file:

|

||||

|

||||

```

|

||||

[regex]

|

||||

[ignore]

|

||||

regex = ['.*\.py$']

|

||||

```
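
To see which paths a pattern such as `.*\.py$` would exclude, it can help to try it against a few file names. The sketch below is only an illustration: the `is_ignored` helper and the sample paths are hypothetical, and it assumes the pattern is applied with `re.match` against the full file path, which may differ from how the tool applies it internally.

```python
import re

# Patterns as they would appear under `[ignore]` in the configuration file.
ignore_regex = [r".*\.py$"]
compiled = [re.compile(p) for p in ignore_regex]


def is_ignored(path: str) -> bool:
    """Return True if any ignore pattern matches the file path."""
    return any(p.match(path) for p in compiled)


files = ["src/app.py", "README.md", "scripts/run.pyc", "docs/py.md"]
kept = [f for f in files if not is_ignored(f)]
print(kept)
```

Because the pattern ends with `$`, only paths whose final characters are `.py` are ignored; `run.pyc` and `py.md` are kept.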

|

||||

|

||||

|

||||

@ -49,6 +49,7 @@ nav:

|

||||

- Dynamic context: 'core-abilities/dynamic_context.md'

|

||||

- Fetching ticket context: 'core-abilities/fetching_ticket_context.md'

|

||||

- Impact evaluation: 'core-abilities/impact_evaluation.md'

|

||||

- Incremental Update: 'core-abilities/incremental_update.md'

|

||||

- Interactivity: 'core-abilities/interactivity.md'

|

||||

- Local and global metadata: 'core-abilities/metadata.md'

|

||||

- RAG context enrichment: 'core-abilities/rag_context_enrichment.md'

|

||||

|

||||

@ -371,12 +371,12 @@ class LiteLLMAIHandler(BaseAiHandler):

|

||||

get_logger().info(f"\nUser prompt:\n{user}")

|

||||

|

||||

response = await acompletion(**kwargs)

|

||||

except (openai.APIError, openai.APITimeoutError) as e:

|

||||

get_logger().warning(f"Error during LLM inference: {e}")

|

||||

raise

|

||||

except (openai.RateLimitError) as e:

|

||||

get_logger().error(f"Rate limit error during LLM inference: {e}")

|

||||

raise

|

||||

except (openai.APIError, openai.APITimeoutError) as e:

|

||||

get_logger().warning(f"Error during LLM inference: {e}")

|

||||

raise

|

||||

except (Exception) as e:

|

||||

get_logger().warning(f"Unknown error during LLM inference: {e}")

|

||||

raise openai.APIError from e
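
The reordering in this hunk matters because Python tries `except` clauses from top to bottom and uses the first one that matches. Assuming `openai.RateLimitError` is a subclass of `openai.APIError` (as it is in recent versions of the `openai` package), a rate-limit handler placed after the generic handler would never run. The sketch below demonstrates this with stand-in exception classes; `APIError`, `RateLimitError`, and `classify` here are hypothetical local names, not the `openai` classes.

```python
class APIError(Exception):
    """Stand-in for a base API error (hypothetical, not the openai class)."""


class RateLimitError(APIError):
    """Stand-in for a more specific error that subclasses APIError."""


def classify(exc: Exception, specific_first: bool) -> str:
    """Return which handler catches `exc` for a given clause order."""
    if specific_first:
        try:
            raise exc
        except RateLimitError:
            return "rate-limit handler"
        except APIError:
            return "generic handler"
    try:
        raise exc
    except APIError:
        return "generic handler"
    except RateLimitError:  # never reached: APIError above matches first
        return "rate-limit handler"


print(classify(RateLimitError("429"), specific_first=True))
print(classify(RateLimitError("429"), specific_first=False))
```

With the specific clause first, the rate-limit error reaches its own handler; with the generic clause first, the same error is absorbed by the generic handler.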

|

||||

|

||||

@ -11,6 +11,7 @@ from pr_agent.git_providers.git_provider import GitProvider

|

||||

from pr_agent.git_providers.github_provider import GithubProvider

|

||||

from pr_agent.git_providers.gitlab_provider import GitLabProvider

|

||||

from pr_agent.git_providers.local_git_provider import LocalGitProvider

|

||||

from pr_agent.git_providers.gitea_provider import GiteaProvider

|

||||

|

||||

_GIT_PROVIDERS = {

|

||||

'github': GithubProvider,

|

||||

@ -21,6 +22,7 @@ _GIT_PROVIDERS = {

|

||||

'codecommit': CodeCommitProvider,

|

||||

'local': LocalGitProvider,

|

||||

'gerrit': GerritProvider,

|

||||

'gitea': GiteaProvider,

|

||||

}

|

||||

|

||||

|

||||

|

||||

@ -618,7 +618,7 @@ class AzureDevopsProvider(GitProvider):

|

||||

return pr_id

|

||||

except Exception as e:

|

||||

if get_settings().config.verbosity_level >= 2:

|

||||

get_logger().info(f"Failed to get pr id, error: {e}")

|

||||

get_logger().info(f"Failed to get PR id, error: {e}")

|

||||

return ""

|

||||

|

||||

def publish_file_comments(self, file_comments: list) -> bool:

|

||||

|

||||

258

pr_agent/git_providers/gitea_provider.py

Normal file

@ -0,0 +1,258 @@

|

||||

from typing import Optional, Tuple, List, Dict

|

||||

from urllib.parse import urlparse

|

||||

import requests

|

||||

from pr_agent.git_providers.git_provider import GitProvider

|

||||

from pr_agent.config_loader import get_settings

|

||||

from pr_agent.log import get_logger

|

||||

from pr_agent.algo.types import EDIT_TYPE, FilePatchInfo

|

||||

|

||||

|

||||

class GiteaProvider(GitProvider):

|

||||

"""

|

||||

Implements GitProvider for Gitea/Forgejo API v1.

|

||||

"""

|

||||

|

||||

def __init__(self, pr_url: Optional[str] = None, incremental: Optional[bool] = False):

|

||||

self.gitea_url = get_settings().get("GITEA.URL", None)

|

||||

self.gitea_token = get_settings().get("GITEA.TOKEN", None)

|

||||

if not self.gitea_url:

|

||||

raise ValueError("GITEA.URL is not set in the config file")

|

||||

if not self.gitea_token:

|

||||

raise ValueError("GITEA.TOKEN is not set in the config file")

|

||||

self.headers = {

|

||||

'Authorization': f'token {self.gitea_token}',

|

||||

'Content-Type': 'application/json',

|

||||

'Accept': 'application/json'

|

||||

}

|

||||

self.owner = None

|

||||

self.repo = None

|

||||

self.pr_num = None

|

||||

self.pr = None

|

||||

self.pr_url = pr_url

|

||||

self.incremental = incremental

|

||||

if pr_url:

|

||||

self.set_pr(pr_url)

|

||||

|

||||

@staticmethod

|

||||

def _parse_pr_url(pr_url: str) -> Tuple[str, str, str]:

|

||||

"""

|

||||

Parse Gitea PR URL to (owner, repo, pr_number)

|

||||

"""

|

||||

parsed_url = urlparse(pr_url)

|

||||

path_parts = parsed_url.path.strip('/').split('/')

|

||||

if len(path_parts) < 4 or path_parts[2] != 'pulls':

|

||||

raise ValueError(f"Invalid PR URL format: {pr_url}")

|

||||

return path_parts[0], path_parts[1], path_parts[3]

|

||||

|

||||

def set_pr(self, pr_url: str):

|

||||

self.owner, self.repo, self.pr_num = self._parse_pr_url(pr_url)

|

||||

self.pr = self._get_pr()

|

||||

|

||||

def _get_pr(self):

|

||||

url = f"{self.gitea_url}/api/v1/repos/{self.owner}/{self.repo}/pulls/{self.pr_num}"

|

||||

response = requests.get(url, headers=self.headers)

|

||||

response.raise_for_status()

|

||||

return response.json()

|

||||

|

||||

def is_supported(self, capability: str) -> bool:

|

||||

# Gitea/Forgejo supports most capabilities

|

||||

return True

|

||||

|

||||

def get_files(self) -> List[str]:

|

||||

url = f"{self.gitea_url}/api/v1/repos/{self.owner}/{self.repo}/pulls/{self.pr_num}/files"

|

||||

response = requests.get(url, headers=self.headers)

|

||||

response.raise_for_status()

|

||||

return [file['filename'] for file in response.json()]

|

||||

|

||||

def get_diff_files(self) -> List[FilePatchInfo]:

|

||||

url = f"{self.gitea_url}/api/v1/repos/{self.owner}/{self.repo}/pulls/{self.pr_num}/files"

|

||||

response = requests.get(url, headers=self.headers)

|

||||

response.raise_for_status()

|

||||

|

||||

diff_files = []

|

||||

for file in response.json():

|

||||

edit_type = EDIT_TYPE.MODIFIED

|

||||

if file.get('status') == 'added':

|

||||

edit_type = EDIT_TYPE.ADDED

|

||||

elif file.get('status') == 'deleted':

|

||||

edit_type = EDIT_TYPE.DELETED

|

||||

elif file.get('status') == 'renamed':

|

||||

edit_type = EDIT_TYPE.RENAMED

|

||||

|

||||

diff_files.append(

|

||||

FilePatchInfo(

|

||||

file.get('previous_filename', ''),

|

||||

file.get('filename', ''),

|

||||

file.get('patch', ''),

|

||||

file['filename'],

|

||||

edit_type=edit_type,

|

||||

old_filename=file.get('previous_filename')

|

||||

)

|

||||

)

|

||||

return diff_files

|

||||

|

||||

def publish_description(self, pr_title: str, pr_body: str):

|

||||

url = f"{self.gitea_url}/api/v1/repos/{self.owner}/{self.repo}/pulls/{self.pr_num}"

|

||||

data = {'title': pr_title, 'body': pr_body}

|

||||

response = requests.patch(url, headers=self.headers, json=data)

|

||||

response.raise_for_status()

|

||||

|

||||

def publish_comment(self, pr_comment: str, is_temporary: bool = False):

|

||||

url = f"{self.gitea_url}/api/v1/repos/{self.owner}/{self.repo}/issues/{self.pr_num}/comments"

|

||||

data = {'body': pr_comment}

|

||||

response = requests.post(url, headers=self.headers, json=data)

|

||||

response.raise_for_status()

|

||||

|

||||

def publish_inline_comment(self, body: str, relevant_file: str, relevant_line_in_file: str,

|

||||

original_suggestion=None):

|

||||

url = f"{self.gitea_url}/api/v1/repos/{self.owner}/{self.repo}/pulls/{self.pr_num}/reviews"

|

||||

|

||||

data = {

|

||||

'event': 'COMMENT',

|

||||

'body': original_suggestion or '',

|

||||

'commit_id': self.pr.get('head', {}).get('sha', ''),

|

||||

'comments': [{

|

||||

'body': body,

|

||||

'path': relevant_file,

|

||||

'line': int(relevant_line_in_file)

|

||||

}]

|

||||

}

|

||||

response = requests.post(url, headers=self.headers, json=data)

|

||||

response.raise_for_status()

|

||||

|

||||

def publish_inline_comments(self, comments: list[dict]):

|

||||

for comment in comments:

|

||||

try:

|

||||

self.publish_inline_comment(

|

||||

comment['body'],

|

||||

comment['relevant_file'],

|

||||

comment['relevant_line_in_file'],

|

||||

comment.get('original_suggestion')

|

||||

)

|

||||

except Exception as e:

|

||||

get_logger().error(f"Failed to publish inline comment on {comment.get('relevant_file')}: {e}")

|

||||

|

||||

def publish_code_suggestions(self, code_suggestions: list) -> bool:

|

||||

overall_success = True

|

||||

for suggestion in code_suggestions:

|

||||

try:

|

||||

self.publish_inline_comment(

|

||||

suggestion['body'],

|

||||

suggestion['relevant_file'],

|

||||

suggestion['relevant_line_in_file'],

|

||||

suggestion.get('original_suggestion')

|

||||

)

|

||||

except Exception as e:

|

||||

overall_success = False

|

||||

get_logger().error(

|

||||

f"Failed to publish code suggestion on {suggestion.get('relevant_file')}: {e}")

|

||||

return overall_success

|

||||

|

||||

def publish_labels(self, labels):

|

||||

url = f"{self.gitea_url}/api/v1/repos/{self.owner}/{self.repo}/issues/{self.pr_num}/labels"

|

||||

data = {'labels': labels}

|

||||

response = requests.post(url, headers=self.headers, json=data)

|

||||

response.raise_for_status()

|

||||

|

||||

def get_pr_labels(self, update=False):

|

||||

url = f"{self.gitea_url}/api/v1/repos/{self.owner}/{self.repo}/issues/{self.pr_num}/labels"

|

||||

response = requests.get(url, headers=self.headers)

|

||||

response.raise_for_status()

|

||||

return [label['name'] for label in response.json()]

|

||||

|

||||

def get_issue_comments(self):

|

||||

url = f"{self.gitea_url}/api/v1/repos/{self.owner}/{self.repo}/issues/{self.pr_num}/comments"

|

||||

response = requests.get(url, headers=self.headers)

|

||||

response.raise_for_status()

|

||||

return response.json()

|

||||

|

||||

def remove_initial_comment(self):

|

||||

# Implementation depends on how you track the initial comment

|

||||

pass

|

||||

|

||||

def remove_comment(self, comment):

|

||||

url = f"{self.gitea_url}/api/v1/repos/{self.owner}/{self.repo}/issues/comments/{comment['id']}"

|

||||

response = requests.delete(url, headers=self.headers)

|

||||

response.raise_for_status()

|

||||

|

||||

def add_eyes_reaction(self, issue_comment_id: int, disable_eyes: bool = False) -> Optional[int]:

|

||||

if disable_eyes:

|

||||

return None

|

||||

url = f"{self.gitea_url}/api/v1/repos/{self.owner}/{self.repo}/issues/comments/{issue_comment_id}/reactions"

|

||||

data = {'content': 'eyes'}

|

||||

response = requests.post(url, headers=self.headers, json=data)

|

||||

response.raise_for_status()

|

||||

return response.json()['id']

|

||||

|

||||

def remove_reaction(self, issue_comment_id: int, reaction_id: int) -> bool:

|

||||

url = f"{self.gitea_url}/api/v1/repos/{self.owner}/{self.repo}/issues/comments/{issue_comment_id}/reactions/{reaction_id}"

|

||||

response = requests.delete(url, headers=self.headers)

|

||||

return response.status_code == 204

|

||||

|

||||

def get_commit_messages(self):

|

||||

url = f"{self.gitea_url}/api/v1/repos/{self.owner}/{self.repo}/pulls/{self.pr_num}/commits"

|

||||

response = requests.get(url, headers=self.headers)

|

||||

response.raise_for_status()

|

||||

return [commit['commit']['message'] for commit in response.json()]

|

||||

|

||||

def get_pr_branch(self):

|

||||

return self.pr['head']['ref']

|

||||

|

||||

def get_user_id(self):

|

||||

return self.pr['user']['id']

|

||||

|

||||

def get_pr_description_full(self) -> str:

|

||||

return self.pr['body'] or ''

|

||||

|

||||

def get_git_repo_url(self, issues_or_pr_url: str) -> str:

|

||||

try:

|

||||

parsed_url = urlparse(issues_or_pr_url)

|

||||

path_parts = parsed_url.path.strip('/').split('/')

|

||||

if len(path_parts) < 2:

|

||||

raise ValueError(f"Invalid URL format: {issues_or_pr_url}")

|

||||

return f"{parsed_url.scheme}://{parsed_url.netloc}/{path_parts[0]}/{path_parts[1]}.git"

|

||||

except Exception as e:

|

||||

get_logger().exception(f"Failed to get git repo URL from: {issues_or_pr_url}")

|

||||

return ""

|

||||

|

||||

def get_canonical_url_parts(self, repo_git_url: str, desired_branch: str) -> Tuple[str, str]:

|

||||

try:

|

||||

parsed_url = urlparse(repo_git_url)

|

||||

path_parts = parsed_url.path.strip('/').split('/')

|

||||

if len(path_parts) < 2:

|

||||

raise ValueError(f"Invalid git repo URL format: {repo_git_url}")

|

||||

|

||||

repo_name = path_parts[1]

|

||||

if repo_name.endswith('.git'):

|

||||

repo_name = repo_name[:-4]

|

||||

|

||||

prefix = f"{parsed_url.scheme}://{parsed_url.netloc}/{path_parts[0]}/{repo_name}/src/branch/{desired_branch}"

|

||||

suffix = ""

|

||||

return prefix, suffix

|

||||

except Exception as e:

|

||||

get_logger().exception(f"Failed to get canonical URL parts from: {repo_git_url}")

|

||||

return ("", "")

|

||||

|

||||

def get_languages(self) -> Dict[str, float]:

|

||||

"""

|

||||

Get the languages used in the repository and their percentages.

|

||||

Returns a dictionary mapping language names to their percentage of use.

|

||||

"""

|

||||

if not self.owner or not self.repo:

|

||||

return {}

|

||||

url = f"{self.gitea_url}/api/v1/repos/{self.owner}/{self.repo}/languages"

|

||||

response = requests.get(url, headers=self.headers)

|

||||

response.raise_for_status()

|

||||

return response.json()

|

||||

|

||||

def get_repo_settings(self) -> Dict:

|

||||

"""

|

||||

Get repository settings and configuration.

|

||||

Returns a dictionary containing repository settings.

|

||||

"""

|

||||

if not self.owner or not self.repo:

|

||||

return {}

|

||||

url = f"{self.gitea_url}/api/v1/repos/{self.owner}/{self.repo}"

|

||||

response = requests.get(url, headers=self.headers)

|

||||

response.raise_for_status()

|

||||

return response.json()
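
As a quick illustration of the URL handling in `_parse_pr_url` above, the same logic can be exercised in isolation. This is a standalone sketch, not part of the provider: the `parse_pr_url` function name and the example host, owner, and repository are hypothetical.

```python
from urllib.parse import urlparse


def parse_pr_url(pr_url: str) -> tuple[str, str, str]:
    """Split a PR URL of the form <host>/<owner>/<repo>/pulls/<number>."""
    parts = urlparse(pr_url).path.strip("/").split("/")
    if len(parts) < 4 or parts[2] != "pulls":
        raise ValueError(f"Invalid PR URL format: {pr_url}")
    return parts[0], parts[1], parts[3]


owner, repo, number = parse_pr_url("https://gitea.example.com/acme/widgets/pulls/42")
print(owner, repo, number)
```

Note that the PR number comes back as a string, and that the check on the third path segment means an instance served under a URL sub-path would be rejected by this logic.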

|

||||

@ -96,7 +96,7 @@ class GithubProvider(GitProvider):

|

||||

parsed_url = urlparse(given_url)

|

||||

repo_path = (parsed_url.path.split('.git')[0])[1:] # /<owner>/<repo>.git -> <owner>/<repo>

|

||||

if not repo_path:

|

||||

get_logger().error(f"url is neither an issues url nor a pr url nor a valid git url: {given_url}. Returning empty result.")

|

||||

get_logger().error(f"url is neither an issues url nor a PR url nor a valid git url: {given_url}. Returning empty result.")

|

||||

return ""

|

||||

return repo_path

|

||||

except Exception as e:

|

||||

|

||||

@ -81,6 +81,7 @@ require_ticket_analysis_review=true

|

||||

# general options

|

||||

persistent_comment=true

|

||||

extra_instructions = ""

|

||||

num_max_findings = 3

|

||||

final_update_message = true

|

||||

# review labels

|

||||

enable_review_labels_security=true

|

||||

|

||||

@ -98,7 +98,7 @@ class Review(BaseModel):

|

||||

{%- if question_str %}

|

||||

insights_from_user_answers: str = Field(description="shortly summarize the insights you gained from the user's answers to the questions")

|

||||

{%- endif %}

|

||||

key_issues_to_review: List[KeyIssuesComponentLink] = Field("A short and diverse list (0-3 issues) of high-priority bugs, problems or performance concerns introduced in the PR code, which the PR reviewer should further focus on and validate during the review process.")

|

||||

key_issues_to_review: List[KeyIssuesComponentLink] = Field("A short and diverse list (0-{{ num_max_findings }} issues) of high-priority bugs, problems or performance concerns introduced in the PR code, which the PR reviewer should further focus on and validate during the review process.")

|

||||

{%- if require_security_review %}

|

||||

security_concerns: str = Field(description="Does this PR code introduce possible vulnerabilities such as exposure of sensitive information (e.g., API keys, secrets, passwords), or security concerns like SQL injection, XSS, CSRF, and others ? Answer 'No' (without explaining why) if there are no possible issues. If there are security concerns or issues, start your answer with a short header, such as: 'Sensitive information exposure: ...', 'SQL injection: ...' etc. Explain your answer. Be specific and give examples if possible")

|

||||

{%- endif %}

|

||||

|

||||

@ -199,7 +199,7 @@ class PRDescription:

|

||||

|

||||

async def _prepare_prediction(self, model: str) -> None:

|

||||

if get_settings().pr_description.use_description_markers and 'pr_agent:' not in self.user_description:

|

||||

get_logger().info("Markers were enabled, but user description does not contain markers. skipping AI prediction")

|

||||

get_logger().info("Markers were enabled, but user description does not contain markers. Skipping AI prediction")

|

||||

return None

|

||||

|

||||

large_pr_handling = get_settings().pr_description.enable_large_pr_handling and "pr_description_only_files_prompts" in get_settings()

|

||||

@ -707,7 +707,7 @@ class PRDescription:

|

||||

pr_body += """</tr></tbody></table>"""

|

||||

|

||||

except Exception as e:

|

||||

get_logger().error(f"Error processing pr files to markdown {self.pr_id}: {str(e)}")

|

||||

get_logger().error(f"Error processing PR files to markdown {self.pr_id}: {str(e)}")

|

||||

pass

|

||||

return pr_body, pr_comments

|

||||

|

||||

|

||||

@ -81,6 +81,7 @@ class PRReviewer:

|

||||

"language": self.main_language,

|

||||

"diff": "", # empty diff for initial calculation

|

||||

"num_pr_files": self.git_provider.get_num_of_files(),

|

||||

"num_max_findings": get_settings().pr_reviewer.num_max_findings,

|

||||

"require_score": get_settings().pr_reviewer.require_score_review,

|

||||

"require_tests": get_settings().pr_reviewer.require_tests_review,

|

||||

"require_estimate_effort_to_review": get_settings().pr_reviewer.require_estimate_effort_to_review,

|

||||

@ -316,7 +317,9 @@ class PRReviewer:

|

||||

get_logger().exception(f"Failed to remove previous review comment, error: {e}")

|

||||

|

||||

def _can_run_incremental_review(self) -> bool:

|

||||

"""Checks if we can run incremental review according the various configurations and previous review"""

|

||||

"""

|

||||

Checks if we can run incremental review according to the various configurations and previous review.

|

||||

"""

|

||||

# checking if running is auto mode but there are no new commits

|

||||

if self.is_auto and not self.incremental.first_new_commit_sha:

|

||||

get_logger().info(f"Incremental review is enabled for {self.pr_url} but there are no new commits")

|

||||

|

||||

185

tests/e2e_tests/test_gitea_app.py

Normal file

@ -0,0 +1,185 @@

|

||||

import os

|

||||

import time

|

||||

import requests

|

||||

from datetime import datetime

|

||||

|

||||

from pr_agent.config_loader import get_settings

|

||||

from pr_agent.log import get_logger, setup_logger

|

||||

from tests.e2e_tests.e2e_utils import (FILE_PATH,

|

||||

IMPROVE_START_WITH_REGEX_PATTERN,

|

||||

NEW_FILE_CONTENT, NUM_MINUTES,

|

||||

PR_HEADER_START_WITH, REVIEW_START_WITH)

|

||||

|

||||

log_level = os.environ.get("LOG_LEVEL", "INFO")

|

||||

setup_logger(log_level)

|

||||

logger = get_logger()

|

||||

|

||||

def test_e2e_run_gitea_app():

|

||||

repo_name = 'pr-agent-tests'

|

||||

owner = 'codiumai'

|

||||

base_branch = "main"

|

||||

new_branch = f"gitea_app_e2e_test-{datetime.now().strftime('%Y-%m-%d-%H-%M-%S')}"

|

||||

get_settings().config.git_provider = "gitea"

|

||||

|

||||

headers = None

|

||||

pr_number = None

|

||||

|

||||

try:

|

||||

gitea_url = get_settings().get("GITEA.URL", None)

|

||||

gitea_token = get_settings().get("GITEA.TOKEN", None)

|

||||

|

||||

if not gitea_url:

|

||||

logger.error("GITEA.URL is not set in the configuration")

|

||||

logger.info("Please set GITEA.URL in .env file or environment variables")

|

||||

assert False, "GITEA.URL is not set in the configuration"

|

||||

|

||||

if not gitea_token:

|

||||

logger.error("GITEA.TOKEN is not set in the configuration")

|

||||

logger.info("Please set GITEA.TOKEN in .env file or environment variables")

|

||||

assert False, "GITEA.TOKEN is not set in the configuration"

|

||||

|

||||

headers = {

|

||||

'Authorization': f'token {gitea_token}',

|

||||

'Content-Type': 'application/json',

|

||||

'Accept': 'application/json'

|

||||

}

|

||||

|

||||

logger.info(f"Creating a new branch {new_branch} from {base_branch}")

|

||||

|

||||

response = requests.get(

|

||||

f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/branches/{base_branch}",

|

||||

headers=headers

|

||||

)

|

||||

response.raise_for_status()

|

||||

base_branch_data = response.json()

|

||||

base_commit_sha = base_branch_data['commit']['id']

|

||||

|

||||

branch_data = {

|

||||

'ref': f"refs/heads/{new_branch}",

|

||||

'sha': base_commit_sha

|

||||

}

|

||||

response = requests.post(

|

||||

f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/git/refs",

|

||||

headers=headers,

|

||||

json=branch_data

|

||||

)

|

||||

response.raise_for_status()

|

||||

|

||||

logger.info(f"Updating file {FILE_PATH} in branch {new_branch}")

|

||||

|

||||

import base64

|

||||

file_content_encoded = base64.b64encode(NEW_FILE_CONTENT.encode()).decode()

|

||||

|

||||

try:

|

||||

response = requests.get(

|

||||

f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/contents/{FILE_PATH}?ref={new_branch}",

|

||||

headers=headers

|

||||

)

|

||||

response.raise_for_status()

|

||||

existing_file = response.json()

|

||||

file_sha = existing_file.get('sha')

|

||||

|

||||

file_data = {

|

||||

'message': 'Update cli_pip.py',

|

||||

'content': file_content_encoded,

|

||||

'sha': file_sha,

|

||||

'branch': new_branch

|

||||

}

|

||||

except requests.exceptions.RequestException:

|

||||

file_data = {

|

||||

'message': 'Add cli_pip.py',

|

||||

'content': file_content_encoded,

|

||||

'branch': new_branch

|

||||

}

|

||||

|

||||

response = requests.put(

|

||||

f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/contents/{FILE_PATH}",

|

||||

headers=headers,

|

||||

json=file_data

|

||||

)

|

||||

response.raise_for_status()

|

||||

|

||||

logger.info(f"Creating a pull request from {new_branch} to {base_branch}")

|

||||

pr_data = {

|

||||

'title': f'Test PR from {new_branch}',

|

||||

'body': 'update cli_pip.py',

|

||||

'head': new_branch,

|

||||

'base': base_branch

|

||||

}

|

||||

response = requests.post(

|

||||

f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/pulls",

|

||||

headers=headers,

|

||||

json=pr_data

|

||||

)

|

||||

response.raise_for_status()

|

||||

pr = response.json()

|

||||

pr_number = pr['number']

|

||||

|

||||

for i in range(NUM_MINUTES):

|

||||

logger.info(f"Waiting for the PR to get all the tool results...")

|

||||

time.sleep(60)

|

||||

|

||||

response = requests.get(

|

||||

f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/issues/{pr_number}/comments",

|

||||

headers=headers

|

||||

)

|

||||

response.raise_for_status()

|

||||

comments = response.json()

|

||||

|

||||

if len(comments) >= 5:

|

||||

valid_review = False

|

||||

for comment in comments:

|

||||

if comment['body'].startswith('## PR Reviewer Guide 🔍'):

|

||||

valid_review = True

|

||||

break

|

||||

if valid_review:

|

||||

break

|

||||

else:

|

||||

logger.error("REVIEW feedback is invalid")

|

||||

raise Exception("REVIEW feedback is invalid")

|

||||

else:

|

||||

logger.info(f"Waiting for the PR to get all the tool results. {i + 1} minute(s) passed")

|

||||

else:

|

||||

assert False, f"After {NUM_MINUTES} minutes, the PR did not get all the tool results"

|

||||

|

||||

logger.info(f"Cleaning up: closing PR and deleting branch {new_branch}")

|

||||

|

||||

close_data = {'state': 'closed'}

|

||||

response = requests.patch(

|

||||

f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/pulls/{pr_number}",

|

||||

headers=headers,

|

||||

json=close_data

|

||||

)

|

||||

response.raise_for_status()

|

||||

|

||||

response = requests.delete(

|

||||

f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/git/refs/heads/{new_branch}",

|

||||

headers=headers

|

||||

)

|

||||

response.raise_for_status()

|

||||

|

||||

logger.info(f"Succeeded in running e2e test for Gitea app on the PR")

|

||||

except Exception as e:

|

||||

logger.error(f"Failed to run e2e test for Gitea app: {e}")

|

||||

raise

|

||||

    finally:
        # Best-effort cleanup. Avoid `return` here: returning from a `finally`
        # block would silently discard the exception re-raised above.
        try:
            if headers is not None and gitea_url:
                if pr_number is not None:
                    requests.patch(
                        f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/pulls/{pr_number}",
                        headers=headers,
                        json={'state': 'closed'}
                    )

                requests.delete(
                    f"{gitea_url}/api/v1/repos/{owner}/{repo_name}/git/refs/heads/{new_branch}",
                    headers=headers
                )
        except Exception as cleanup_error:
            logger.error(f"Failed to clean up after test: {cleanup_error}")

|

||||

|

||||

if __name__ == '__main__':

|

||||

test_e2e_run_gitea_app()
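
The polling loop in the e2e test relies on Python's `for`/`else`: the `else` branch runs only when the loop ends without hitting `break`. A minimal, self-contained sketch of that pattern follows. The helper name `wait_until` and its parameters are hypothetical and not part of pr-agent, and unlike the test above it checks the condition before sleeping.

```python
import time


def wait_until(check, attempts, delay_seconds=0.0):
    """Call `check` up to `attempts` times; return the attempt number that succeeded.

    Hypothetical helper for illustration only (not part of pr-agent).
    """
    for attempt in range(1, attempts + 1):
        if check():
            break  # success: skips the `else` branch below
        time.sleep(delay_seconds)
    else:
        # Runs only when the loop was exhausted without `break`
        raise TimeoutError(f"condition not met after {attempts} attempts")
    return attempt
```

Raising a dedicated exception in the `else` branch keeps the timeout path distinct from errors raised while checking the condition itself.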

126

tests/unittest/test_gitea_provider.py

Normal file

@ -0,0 +1,126 @@

from unittest.mock import MagicMock, patch

import pytest

from pr_agent.algo.types import EDIT_TYPE
from pr_agent.git_providers.gitea_provider import GiteaProvider


class TestGiteaProvider:
    """Unit-tests for GiteaProvider following project style (explicit object construction, minimal patching)."""

    def _provider(self):
        """Create provider instance with patched settings and avoid real HTTP calls."""
        with patch('pr_agent.git_providers.gitea_provider.get_settings') as mock_get_settings, \
                patch('requests.get') as mock_get:
            settings = MagicMock()
            settings.get.side_effect = lambda k, d=None: {
                'GITEA.URL': 'https://gitea.example.com',
                'GITEA.TOKEN': 'test-token'
            }.get(k, d)
            mock_get_settings.return_value = settings
            # Stub the PR fetch triggered during provider initialization
            pr_resp = MagicMock()
            pr_resp.json.return_value = {
                'title': 'stub',
                'body': 'stub',
                'head': {'ref': 'main'},
                'user': {'id': 1}
            }
            pr_resp.raise_for_status = MagicMock()
            mock_get.return_value = pr_resp
            return GiteaProvider('https://gitea.example.com/owner/repo/pulls/123')

    # ---------------- URL parsing ----------------
    def test_parse_pr_url_valid(self):
        owner, repo, pr_num = GiteaProvider._parse_pr_url('https://gitea.example.com/owner/repo/pulls/123')
        assert (owner, repo, pr_num) == ('owner', 'repo', '123')

    def test_parse_pr_url_invalid(self):
        with pytest.raises(ValueError):
            GiteaProvider._parse_pr_url('https://gitea.example.com/owner/repo')

    # ---------------- simple getters ----------------
    def test_get_files(self):
        provider = self._provider()
        mock_resp = MagicMock()
        mock_resp.json.return_value = [{'filename': 'a.txt'}, {'filename': 'b.txt'}]
        mock_resp.raise_for_status = MagicMock()
        with patch('requests.get', return_value=mock_resp) as mock_get:
            assert provider.get_files() == ['a.txt', 'b.txt']
            mock_get.assert_called_once()

    def test_get_diff_files(self):
        provider = self._provider()
        mock_resp = MagicMock()
        mock_resp.json.return_value = [
            {'filename': 'f1', 'previous_filename': 'old_f1', 'status': 'renamed', 'patch': ''},
            {'filename': 'f2', 'status': 'added', 'patch': ''},
            {'filename': 'f3', 'status': 'deleted', 'patch': ''},
            {'filename': 'f4', 'status': 'modified', 'patch': ''}
        ]
        mock_resp.raise_for_status = MagicMock()
        with patch('requests.get', return_value=mock_resp):
            res = provider.get_diff_files()
            assert [f.edit_type for f in res] == [EDIT_TYPE.RENAMED, EDIT_TYPE.ADDED, EDIT_TYPE.DELETED,
                                                  EDIT_TYPE.MODIFIED]

    # ---------------- publishing methods ----------------
    def test_publish_description(self):
        provider = self._provider()
        mock_resp = MagicMock()
        mock_resp.raise_for_status = MagicMock()
        with patch('requests.patch', return_value=mock_resp) as mock_patch:
            provider.publish_description('t', 'b')
            mock_patch.assert_called_once()

    def test_publish_comment(self):
        provider = self._provider()
        mock_resp = MagicMock()
        mock_resp.raise_for_status = MagicMock()
        with patch('requests.post', return_value=mock_resp) as mock_post:
            provider.publish_comment('c')
            mock_post.assert_called_once()

    def test_publish_inline_comment(self):
        provider = self._provider()
        mock_resp = MagicMock()
        mock_resp.raise_for_status = MagicMock()
        with patch('requests.post', return_value=mock_resp) as mock_post:
            provider.publish_inline_comment('body', 'file', '10')
            mock_post.assert_called_once()

    # ---------------- labels & reactions ----------------
    def test_get_pr_labels(self):
        provider = self._provider()
        mock_resp = MagicMock()
        mock_resp.raise_for_status = MagicMock()
        mock_resp.json.return_value = [{'name': 'l1'}]
        with patch('requests.get', return_value=mock_resp):
            assert provider.get_pr_labels() == ['l1']

    def test_add_eyes_reaction(self):
        provider = self._provider()
        mock_resp = MagicMock()
        mock_resp.raise_for_status = MagicMock()
        mock_resp.json.return_value = {'id': 7}
        with patch('requests.post', return_value=mock_resp):
            assert provider.add_eyes_reaction(1) == 7

    # ---------------- commit messages & url helpers ----------------
    def test_get_commit_messages(self):
        provider = self._provider()
        mock_resp = MagicMock()
        mock_resp.raise_for_status = MagicMock()
        mock_resp.json.return_value = [
            {'commit': {'message': 'm1'}}, {'commit': {'message': 'm2'}}]
        with patch('requests.get', return_value=mock_resp):
            assert provider.get_commit_messages() == ['m1', 'm2']

    def test_git_url_helpers(self):
        provider = self._provider()
        issues_url = 'https://gitea.example.com/owner/repo/pulls/3'
        assert provider.get_git_repo_url(issues_url) == 'https://gitea.example.com/owner/repo.git'
        prefix, suffix = provider.get_canonical_url_parts('https://gitea.example.com/owner/repo.git', 'dev')
        assert prefix == 'https://gitea.example.com/owner/repo/src/branch/dev'
        assert suffix == ''
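
The unit tests above stub HTTP calls by patching a function and handing back a `MagicMock` response. The sketch below shows the same idea with no network access and no third-party dependency. `LabelClient`, `_get`, and `check_label_names` are hypothetical names invented for illustration; they are not the `GiteaProvider` API.

```python
from unittest.mock import MagicMock, patch


class LabelClient:
    """Hypothetical client used only to demonstrate the stubbing pattern."""

    def _get(self, path):
        # A real implementation would perform an HTTP GET here.
        raise RuntimeError("network access is not available in tests")

    def label_names(self):
        resp = self._get("/labels")
        resp.raise_for_status()
        return [label["name"] for label in resp.json()]


def check_label_names():
    client = LabelClient()
    # Stubbed response object: only the attributes the client touches are set.
    stub = MagicMock()
    stub.json.return_value = [{"name": "l1"}]
    with patch.object(LabelClient, "_get", return_value=stub) as mock_get:
        names = client.label_names()
    # The patched attribute is a plain MagicMock, so `self` is not passed along.
    mock_get.assert_called_once_with("/labels")
    return names
```

Asserting on the call arguments, not just the call count, also catches a request sent to the wrong endpoint.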
